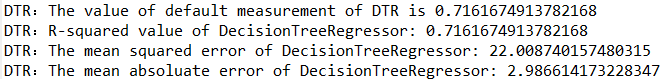

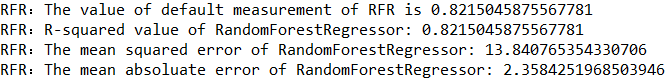

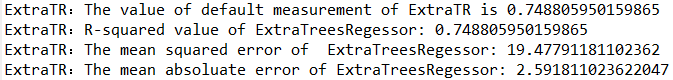

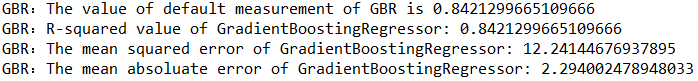

ML: DT, RFR, ExtraTR & GBR: price regression on the Boston house-price dataset (506, 13+1) with four algorithms (DT, RFR, ExtraTR, GBR) and a comparison of their performance

Contents

Output

Boston House Prices dataset

===========================

Notes

------

Data Set Characteristics:

:Number of Instances: 506

:Number of Attributes: 13 numeric/categorical predictive

:Median Value (attribute 14) is usually the target

:Attribute Information (in order):

- CRIM: per capita crime rate by town

- ZN: proportion of residential land zoned for lots over 25,000 sq.ft.

- INDUS: proportion of non-retail business acres per town

- CHAS: Charles River dummy variable (= 1 if tract bounds river; 0 otherwise)

- NOX: nitric oxides concentration (parts per 10 million)

- RM: average number of rooms per dwelling

- AGE: proportion of owner-occupied units built prior to 1940

- DIS: weighted distances to five Boston employment centres

- RAD: index of accessibility to radial highways

- TAX: full-value property-tax rate per $10,000

- PTRATIO: pupil-teacher ratio by town

- B: 1000(Bk - 0.63)^2 where Bk is the proportion of blacks by town

- LSTAT: % lower status of the population

- MEDV: Median value of owner-occupied homes in $1000's

:Missing Attribute Values: None

:Creator: Harrison, D. and Rubinfeld, D.L.

This is a copy of UCI ML housing dataset.

http://archive.ics.uci.edu/ml/datasets/Housing

This dataset was taken from the StatLib library which is maintained at Carnegie Mellon University.

The Boston house-price data of Harrison, D. and Rubinfeld, D.L. 'Hedonic prices and the demand for clean air', J. Environ. Economics & Management, vol.5, 81-102, 1978. Used in Belsley, Kuh & Welsch, 'Regression diagnostics ...', Wiley, 1980. N.B. Various transformations are used in the table on pages 244-261 of the latter.

The Boston house-price data has been used in many machine learning papers that address regression problems.

**References**

- Belsley, Kuh & Welsch, 'Regression diagnostics: Identifying Influential Data and Sources of Collinearity', Wiley, 1980. 244-261.

- Quinlan,R. (1993). Combining Instance-Based and Model-Based Learning. In Proceedings on the Tenth International Conference of Machine Learning, 236-243, University of Massachusetts, Amherst. Morgan Kaufmann.

- many more! (see http://archive.ics.uci.edu/ml/datasets/Housing)

Design approach

Core code

class DecisionTreeRegressor(BaseDecisionTree, RegressorMixin):

"""A decision tree regressor.

Read more in the :ref:`User Guide <tree>`.

Parameters

----------

criterion : string, optional (default="mse")

The function to measure the quality of a split. Supported criteria are "mse" for the mean squared error, which is equal to variance reduction as feature selection criterion and minimizes the L2 loss using the mean of each terminal node, "friedman_mse", which uses mean squared error with Friedman's improvement score for potential splits, and "mae" for the mean absolute error, which minimizes the L1 loss using the median of each terminal node.
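The claim in the `criterion` description, that the mean of a leaf minimizes the L2 loss while the median minimizes the L1 loss, can be checked numerically. The sketch below is only an illustration: the values in `leaf_y` are made up, and the helper names `l2_loss` and `l1_loss` are hypothetical, not part of scikit-learn. Note also that recent scikit-learn releases name these criteria `"squared_error"` and `"absolute_error"` instead of `"mse"` and `"mae"`.

```python
import numpy as np


def l2_loss(y, c):
    """Mean squared error when every sample in y is predicted as the constant c."""
    return float(np.mean((y - c) ** 2))


def l1_loss(y, c):
    """Mean absolute error when every sample in y is predicted as the constant c."""
    return float(np.mean(np.abs(y - c)))


# Hypothetical target values that ended up in one leaf (in $1000's), with one outlier.
leaf_y = np.array([20.0, 21.0, 22.0, 23.0, 50.0])

mean_pred = float(np.mean(leaf_y))      # leaf value used with the "mse" criterion
median_pred = float(np.median(leaf_y))  # leaf value used with the "mae" criterion

# Scan a grid of candidate constants and locate the minimizer of each loss.
grid = np.linspace(15.0, 55.0, 4001)
best_l2 = float(grid[np.argmin([l2_loss(leaf_y, c) for c in grid])])
best_l1 = float(grid[np.argmin([l1_loss(leaf_y, c) for c in grid])])

# The MSE impurity of a node is its variance, hence "variance reduction".
node_impurity = l2_loss(leaf_y, mean_pred)

print("mean:", mean_pred, "L2 minimizer on grid:", best_l2)
print("median:", median_pred, "L1 minimizer on grid:", best_l1)
print("MSE impurity:", node_impurity, "variance:", float(np.var(leaf_y)))
```

The outlier pulls the mean upward but leaves the median alone, which is why the absolute-error criterion is usually considered more robust to extreme prices.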

class RandomForestRegressor(ForestRegressor):

"""A random forest regressor.

A random forest is a meta estimator that fits a number of classifying decision trees on various sub-samples of the dataset and use averaging to improve the predictive accuracy and control over-fitting. The sub-sample size is always the same as the original input sample size but the samples are drawn with replacement if `bootstrap=True` (default).

Read more in the :ref:`User Guide <forest>`.
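The bootstrap-and-average idea described in this docstring can be sketched by hand. This is a simplified illustration and not the library's implementation: it omits the per-split feature subsampling that `RandomForestRegressor` can also apply, and it uses synthetic data from `make_regression` as a stand-in with the same shape as the Boston data (506 samples, 13 features), since that is an assumption that avoids depending on any particular dataset loader. The helper `bagged_predict` is a hypothetical name.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.tree import DecisionTreeRegressor

# Synthetic stand-in with the Boston shape (506, 13); NOT the real house-price data.
X, y = make_regression(n_samples=506, n_features=13, noise=10.0, random_state=0)

rng = np.random.default_rng(0)
n_trees = 25
trees = []

for _ in range(n_trees):
    # Bootstrap: draw as many rows as the original sample, with replacement.
    idx = rng.integers(0, len(X), size=len(X))
    tree = DecisionTreeRegressor(random_state=0)
    tree.fit(X[idx], y[idx])
    trees.append(tree)


def bagged_predict(X_new):
    """Average the predictions of all bootstrap-trained trees."""
    return np.mean([t.predict(X_new) for t in trees], axis=0)


preds = bagged_predict(X[:5])
print(preds)
```

Because each tree sees a different resample, their individual errors partly cancel when averaged, which is the over-fitting control the docstring refers to.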

class ExtraTreesRegressor(ForestRegressor):

"""An extra-trees regressor.

This class implements a meta estimator that fits a number of randomized decision trees (a.k.a. extra-trees) on various sub-samples of the dataset and use averaging to improve the predictive accuracy and control over-fitting.

Read more in the :ref:`User Guide <forest>`.

class GradientBoostingRegressor(BaseGradientBoosting, RegressorMixin):

"""Gradient Boosting for regression.

GB builds an additive model in a forward stage-wise fashion; it allows for the optimization of arbitrary differentiable loss functions. In each stage a regression tree is fit on the negative gradient of the given loss function.

Read more in the :ref:`User Guide <gradient_boosting>`.
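For the squared-error loss, the negative gradient mentioned in this docstring is simply the residual `y - F(x)`, so each stage fits a small tree to the current residuals. The following is a minimal hand-rolled sketch of that loop, not scikit-learn's implementation. The data is a synthetic stand-in shaped like the Boston set, and `learning_rate`, `n_stages`, `max_depth=3`, and the helper `boosted_predict` are illustrative choices made for this example.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.tree import DecisionTreeRegressor

# Synthetic stand-in with the Boston shape (506, 13); NOT the real house-price data.
X, y = make_regression(n_samples=506, n_features=13, noise=10.0, random_state=0)

learning_rate = 0.1  # shrinkage applied to every stage (illustrative value)
n_stages = 50        # number of boosting stages (illustrative value)

# Stage 0: the best constant model under squared loss is the mean of y.
init_pred = float(np.mean(y))
current = np.full(len(y), init_pred)

stages = []
train_mse = [float(np.mean((y - current) ** 2))]

for _ in range(n_stages):
    # Negative gradient of 0.5 * (y - F)^2 with respect to F is the residual.
    residual = y - current
    tree = DecisionTreeRegressor(max_depth=3, random_state=0)
    tree.fit(X, residual)
    # Forward stage-wise update: add a shrunken copy of the new tree.
    current = current + learning_rate * tree.predict(X)
    stages.append(tree)
    train_mse.append(float(np.mean((y - current) ** 2)))


def boosted_predict(X_new):
    """Sum the initial constant and all shrunken stage predictions."""
    out = np.full(len(X_new), init_pred)
    for stage in stages:
        out = out + learning_rate * stage.predict(X_new)
    return out


print("training MSE before boosting:", train_mse[0])
print("training MSE after boosting: ", train_mse[-1])
```

The training error only tells us the additive model is being built as described; judging the model still requires a held-out test split.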

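This article applies four machine learning algorithms (decision tree, random forest, extremely randomized trees, and gradient boosting) to regression on the Boston house-price dataset, introduces the principle and use of each algorithm, and compares their predictive performance.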
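A comparison of the four regressors can be sketched as follows. This is an assumption-laden outline rather than the original experiment: `load_boston` is no longer available in recent scikit-learn releases, so the code uses a synthetic `make_regression` stand-in with the same shape (506, 13), and the 75/25 split, the `random_state` values, and the short model labels are choices made for this example. Scores on the synthetic data say nothing about the ranking on the real house-price data.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import (
    ExtraTreesRegressor,
    GradientBoostingRegressor,
    RandomForestRegressor,
)
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Synthetic stand-in with the Boston shape (506, 13); NOT the real house-price data.
X, y = make_regression(n_samples=506, n_features=13, noise=10.0, random_state=33)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=33
)

# The four models named in the title, all with library defaults.
models = {
    "DT": DecisionTreeRegressor(random_state=33),
    "RFR": RandomForestRegressor(random_state=33),
    "ExtraTR": ExtraTreesRegressor(random_state=33),
    "GBR": GradientBoostingRegressor(random_state=33),
}

results = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    y_pred = model.predict(X_test)
    results[name] = {
        "r2": r2_score(y_test, y_pred),
        "mse": mean_squared_error(y_test, y_pred),
        "mae": mean_absolute_error(y_test, y_pred),
    }

for name, scores in results.items():
    print(
        f"{name:8s} R2={scores['r2']:.3f} "
        f"MSE={scores['mse']:.2f} MAE={scores['mae']:.2f}"
    )
```

To run this on the real data, replace the `make_regression` call with whatever loader provides the 506 x 13 feature matrix and the MEDV target in your environment.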
