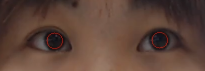

先上传效果图

main.py


```python
"""
Demonstration of the GazeTracking library.
Check the README.md for complete documentation.
"""
import collections
import os

import cv2
import dlib
import matplotlib.pyplot as plt
import pandas as pd
from imutils import resize

import fixationmodel
import saccademodel
from GazeTracking_master.gaze_tracking.FaceAligner import FaceAligner
from GazeTracking_master.gaze_tracking.gaze_tracking import GazeTracking
# import calibration_


def gaze_():
    # calibration_matrix = calibration_.main()
    # def gaze_(path, path_csv):
    gaze = GazeTracking()
    webcam = cv2.VideoCapture(0)

    # Load dlib's face detector and 68-point landmark model once, outside the loop
    _face_detector = dlib.get_frontal_face_detector()
    _predictor = dlib.shape_predictor("C:/Users/zhangjing/Anaconda3/envs/Django_1/GazeTracking_master/gaze_tracking/trained_models/shape_predictor_68_face_landmarks.dat")
    Fa = FaceAligner(_face_detector, _predictor, (0.35, 0.35), desiredFaceWidth=720, desiredFaceHeight=1280)

    frame_cnt = 0
    Frame = []
    Gaze = []
    Left_gaze = []
    Right_gaze = []
    calibration_points = []
    X_gaze = []

    while True:
        # We get a new frame from the webcam
        ret, frame = webcam.read()
        if not ret:
            print('no frame')
            break

        # frame = cv2.rotate(frame, cv2.ROTATE_90_CLOCKWISE)  # rotate by 90 degrees
        # frame = cv2.resize(src=frame, dsize=(720, 1280))    # downscale the frame
        cv2.imshow('frame', frame)

        # Detect the face and align it before gaze analysis
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        faces = _face_detector(frame)
        if len(faces) == 0:
            continue  # no face in this frame; faces[0] would raise IndexError
        aligned_face = Fa.align(frame, gray, faces[0])
        frame = aligned_face

        # We send this frame to GazeTracking to analyze it.
        # In this project annotated_frame() returns the frame plus left/right values.
        gaze.refresh(frame)
        frame, left, right = gaze.annotated_frame()
        Left_gaze.append(left)
        Right_gaze.append(right)

        text = ""
        if gaze.is_blinking():
            text = "Blinking"
        elif gaze.is_right():
            text = "Looking right"
        elif gaze.is_left():
            text = "Looking left"
        elif gaze.is_center():
            text = "Looking center"
        cv2.putText(frame, text, (20, 60), cv2.FONT_HERSHEY_DUPLEX, 1.4, (147, 58, 31), 2)

        left_pupil = gaze.pupil_left_coords()
        right_pupil = gaze.pupil_right_coords()
        cv2.putText(frame, "Left pupil: " + str(left_pupil), (20, 130), cv2.FONT_HERSHEY_DUPLEX, 0.9, (147, 58, 31), 1)
        cv2.putText(frame, "Right pupil: " + str(right_pupil), (20, 165), cv2.FONT_HERSHEY_DUPLEX, 0.9, (147, 58, 31), 1)

        # Skip frames in which either pupil was not located
        if left_pupil is None or right_pupil is None:
            continue
        left_pupil = list(left_pupil)
        right_pupil = list(right_pupil)
        Gaze.append(left_pupil)

        # Disabled calibration step (needs `import numpy as np` if re-enabled)
        # point = [left_pupil[0], left_pupil[1], left_pupil[0]*left_pupil[1], 1]
        # calibration_point = np.dot(calibration_matrix[0][0], point)
        # print(calibration_point)
        # calibration_point_1 = np.transpose(calibration_point)
        # print(calibration_point_1)
        # calibration_point_2 = np.asarray(calibration_point_1)
        # print(calibration_point_2)
        # calibration_point = list(calibration_point)
        # calibration_points.append([np.float(calibration_point[0]), abs(np.float(calibration_point[1]))])

        Frame.append(frame_cnt)
        # cv2.moveWindow("trans:" + frame, 1000, 100)
        cv2.imshow('Dome', frame)
        # cv2.imwrite("E:/ffmpeg-latest-win64-static/eye_frame_2/face/"+str(frame_cnt)
```
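The `FaceAligner` class imported from `GazeTracking_master.gaze_tracking.FaceAligner` is not shown in the post. Its arguments (`(0.35, 0.35)`, `desiredFaceWidth`, `desiredFaceHeight`) suggest the eye-based alignment used by imutils' `FaceAligner`. Under that assumption, here is a minimal sketch of the geometry only; it is not the author's class.

The function names `alignment_matrix` and `apply` are hypothetical. The two eye centers are assumed to be given already, for example as the means of dlib 68-point landmarks 36–41 and 42–47. The sketch builds one affine matrix that performs three steps:

- rotates the face so the eyes are level,
- scales it to a fixed eye distance,
- moves the eyes to fixed positions in the output image.

```python
import math


def alignment_matrix(left_eye, right_eye, desired_left_eye=(0.35, 0.35),
                     face_width=256, face_height=256):
    """Hypothetical helper: 2x3 affine matrix (nested lists) that maps the
    image-left eye center to (dx*W, dy*H) and the image-right eye center to
    ((1-dx)*W, dy*H), where (dx, dy) = desired_left_eye.

    left_eye / right_eye are (x, y) eye centers in image coordinates;
    left_eye is the one with the smaller x.
    """
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    # Tilt of the line through both eyes, in degrees
    angle = math.degrees(math.atan2(dy, dx))

    # Scale so the eye distance matches the desired distance in the output
    desired_dist = (1.0 - 2 * desired_left_eye[0]) * face_width
    scale = desired_dist / math.hypot(dx, dy)

    # Rotation + scale about the eye midpoint (same layout as cv2.getRotationMatrix2D)
    cx = (left_eye[0] + right_eye[0]) / 2
    cy = (left_eye[1] + right_eye[1]) / 2
    a = scale * math.cos(math.radians(angle))
    b = scale * math.sin(math.radians(angle))
    m = [[a, b, (1 - a) * cx - b * cy],
         [-b, a, b * cx + (1 - a) * cy]]

    # Translate the eye midpoint to its desired place in the output image
    m[0][2] += face_width * 0.5 - cx
    m[1][2] += face_height * desired_left_eye[1] - cy
    return m


def apply(m, point):
    """Apply a 2x3 affine matrix to a single (x, y) point."""
    x, y = point
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])
```

With OpenCV and NumPy available, the same matrix can warp a whole frame: `cv2.warpAffine(frame, np.array(m), (face_width, face_height))`. Here `dsize` is given as (width, height).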

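The on-screen label in `main.py` comes from an `if/elif` chain: `is_blinking()` first, then `is_right()`, `is_left()` and `is_center()`. The sketch below shows how such a decision can be made from two numbers:

- a horizontal ratio: pupil x divided by eye-frame width, averaged over both eyes;
- a blink ratio: eye width divided by eye height, which grows as the eye closes.

Both helper names are hypothetical. The default thresholds (0.35, 0.65, 3.8) are assumptions modeled on the upstream GazeTracking project. The exact normalization and thresholds in your copy of `gaze_tracking.py` may differ.

```python
def horizontal_ratio(pupil_xs, eye_widths):
    """Hypothetical helper: mean over both eyes of pupil x / eye-frame width.
    Roughly 0.0 at one edge of the eye, 0.5 in the middle, 1.0 at the other edge."""
    ratios = [x / w for x, w in zip(pupil_xs, eye_widths)]
    return sum(ratios) / len(ratios)


def gaze_label(ratio, blink_ratio, right_max=0.35, left_min=0.65, blink_min=3.8):
    """Same priority as the if/elif chain in main.py: blinking, right, left, center.
    Thresholds are assumed defaults, not values confirmed by the post."""
    if blink_ratio > blink_min:
        return "Blinking"
    if ratio <= right_max:
        return "Looking right"
    if ratio >= left_min:
        return "Looking left"
    return "Looking center"
```

Checking blinking first matters: while the eye is closed, the pupil position is unreliable, so no direction is reported for that frame.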

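`main.py` imports `saccademodel` and `fixationmodel`, but their usage falls in the part of the script not shown here, so their APIs are not reproduced. As a separate illustration of turning the per-frame `Gaze` samples into fixations, here is a minimal sketch of the standard dispersion-threshold (I-DT) algorithm. It is a swapped-in technique, not those packages' method.

The function name `idt_fixations` is hypothetical. The threshold values in the test are arbitrary placeholders in pupil-pixel units and frames.

```python
def idt_fixations(points, max_dispersion, min_len):
    """Dispersion-threshold (I-DT) fixation detection.

    points: sequence of (x, y) samples, one per frame (e.g. the Gaze list).
    max_dispersion: largest allowed (max_x - min_x) + (max_y - min_y) in a fixation.
    min_len: minimum number of consecutive samples that form a fixation.
    Returns a list of (start_index, end_index, centroid_x, centroid_y).
    """
    def dispersion(window):
        xs = [p[0] for p in window]
        ys = [p[1] for p in window]
        return (max(xs) - min(xs)) + (max(ys) - min(ys))

    fixations = []
    i, n = 0, len(points)
    while i + min_len <= n:
        end = i + min_len  # exclusive end of the current window
        if dispersion(points[i:end]) <= max_dispersion:
            # Grow the window while the samples stay tightly clustered
            while end < n and dispersion(points[i:end + 1]) <= max_dispersion:
                end += 1
            window = points[i:end]
            cx = sum(p[0] for p in window) / len(window)
            cy = sum(p[1] for p in window) / len(window)
            fixations.append((i, end - 1, cx, cy))
            i = end  # continue after the fixation
        else:
            i += 1  # slide the window by one sample (saccade or noise)
    return fixations
```

The thresholds depend on frame rate and image scale. At the 720×1280 aligned-face size used in `main.py`, `max_dispersion` would need to be tuned on recorded data.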