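The previous workflow ran on Rundeck, mainly because most of the processing was done with scripting languages.

The new setup uses Oozie. For the basics, see my earlier article: oozie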

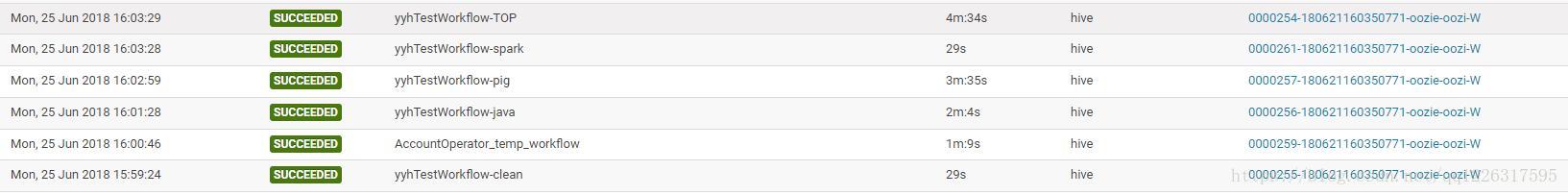

First, a look at the result:

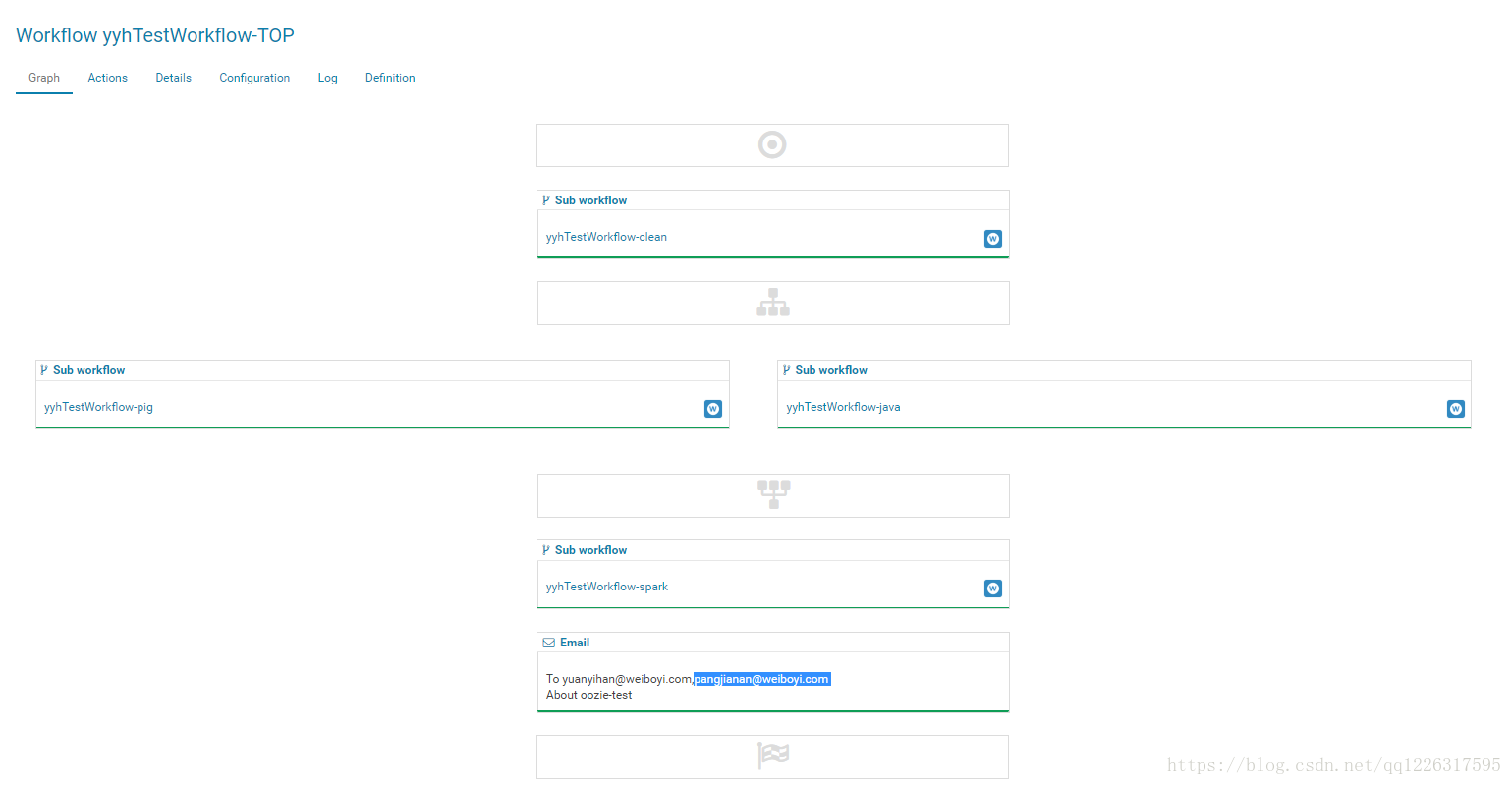

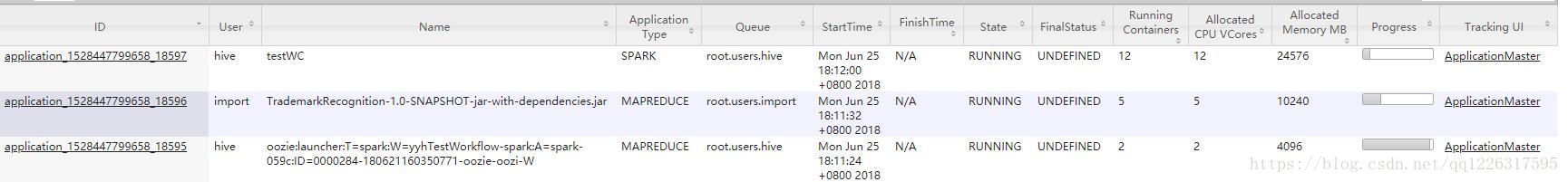

An example illustrates how Oozie is used. First, the test graph:

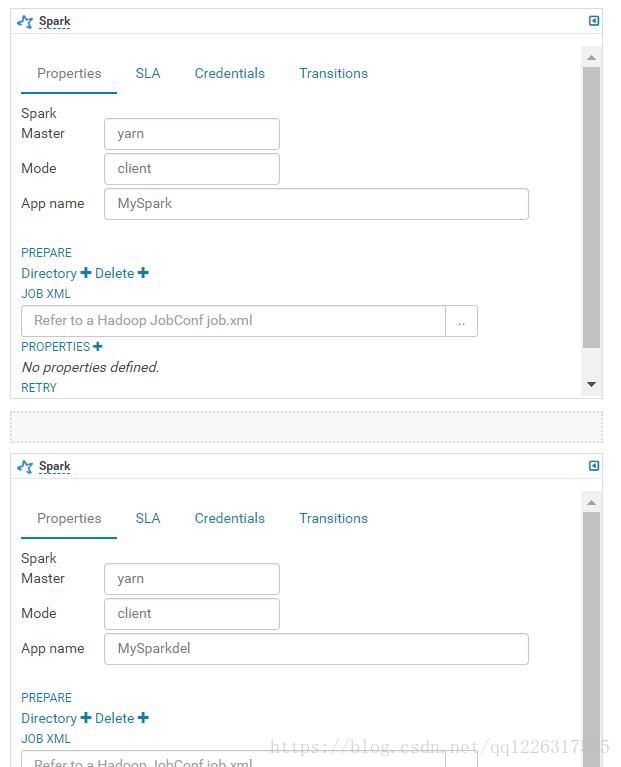

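1 What is special about running a Spark cluster on Oozie

There are two pitfalls here: the cluster parameters, and the DEL (signature deletion) issue.

The parameter issue first.

In the figure above, jobs 1 and 2 ran successfully and job 3 failed.

Look at the cluster parameters. All three programs use the same ones, similar to: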

spark2-submit --master yarn --num-executors 48 --driver-memory 1g --executor-memory 1g --class yyh.WC --executor-cores 3 testsparkwc.jar

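All three specify yarn as the master and client as the deploy mode. Jobs 1 and 2 still need to be discussed separately: both are submitted to yarn with exactly the same parameters, but their code is not the same. Here is the difference.

The Spark test code is as follows: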

    package yyh

    import org.apache.spark.rdd.RDD
    import org.apache.spark.{SparkConf, SparkContext}

    object WC {
      def main(args: Array[String]): Unit = {
        // Use the single argument as the input path, otherwise fall back to a default HDFS file
        val textpath =
          if (args.length == 1) args(0)
          else "hdfs://192.168.1.110:8020/user/yuanyihan/config/log4j.properties"

        val conf = new SparkConf().setAppName("testWC")
        val sparkContext = new SparkContext(conf)

        val lines: RDD[String] = sparkContext.textFile(textpath)
        val words: RDD[String] = lines.flatMap(line => line.split(" "))
        // Prints the RDD description, not its contents
        println(words)

        // Map each word to a (word, 1) pair, then sum the counts per word
        val pair: RDD[(String, Int)] = words.map(word => (word, 1))
        val result: RDD[(String, Int)] = pair.reduceByKey((u: Int, v: Int) => v + u)

        // Sort by count, descending, and print on the driver
        val end: RDD[(String, Int)] = result.sortBy(x => x._2, false)
        val iterator = end.toLocalIterator
        while (iterator.hasNext) {
          println(iterator.next())
        }
        println("TEST:success!!!")
      }
    }

This is also the code of the second (yarn) job. The first job differs only in the line val conf = new SparkConf().setAppName("testWC").setMaster("local"), which selects local mode. A master set on SparkConf in code takes precedence over the --master flag of spark2-submit, so job 1 runs locally even though it is submitted with --master yarn.

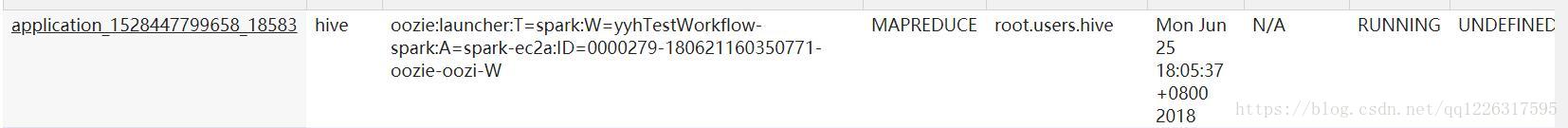

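So for the first (local) job, the YARN UI on port 8088 shows only one application, the one allocated by Oozie.

For the second job, 8088 shows two applications [the first and the third in the list; I have no idea how the second one ended up there].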

Now the DEL issue.

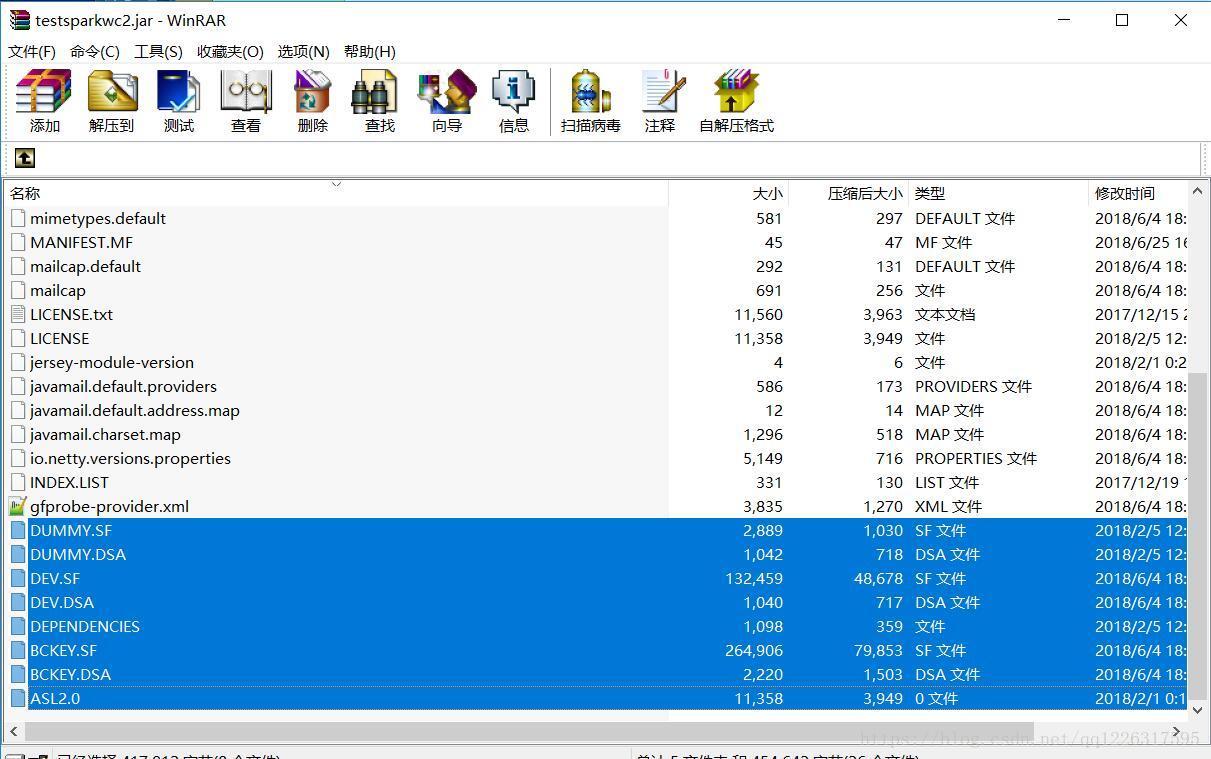

The DEL issue is really a problem that shows up when the Spark program is packaged into a jar:

    Log Type: stderr
    Log Upload Time: Mon Jun 25 19:28:46 +0800 2018
    Log Length: 1908
    Error: A JNI error has occurred, please check your installation and try again
    Exception in thread "main" java.lang.SecurityException: Invalid signature file digest for Manifest main attributes
        at sun.security.util.SignatureFileVerifier.processImpl(SignatureFileVerifier.java:314)
        at sun.security.util.SignatureFileVerifier.process(SignatureFileVerifier.java:268)
        at java.util.jar.JarVerifier.processEntry(JarVerifier.java:316)
        at java.util.jar.JarVerifier.update(JarVerifier.java:228)
        at java.util.jar.JarFile.initializeVerifier(JarFile.java:383)
        at java.util.jar.JarFile.getInputStream(JarFile.java:450)
        at sun.misc.JarIndex.getJarIndex(JarIndex.java:137)
        at sun.misc.URLClassPath$JarLoader$1.run(URLClassPath.java:839)
        at sun.misc.URLClassPath$JarLoader$1.run(URLClassPath.java:831)
        at java.security.AccessController.doPrivileged(Native Method)
        at sun.misc.URLClassPath$JarLoader.ensureOpen(URLClassPath.java:830)
        at sun.misc.URLClassPath$JarLoader.<init>(URLClassPath.java:803)
        at sun.misc.URLClassPath$3.run(URLClassPath.java:530)
        at sun.misc.URLClassPath$3.run(URLClassPath.java:520)
        at java.security.AccessController.doPrivileged(Native Method)
        at sun.misc.URLClassPath.getLoader(URLClassPath.java:519)
        at sun.misc.URLClassPath.getLoader(URLClassPath.java:492)
        at sun.misc.URLClassPath.getNextLoader(URLClassPath.java:457)
        at sun.misc.URLClassPath.getResource(URLClassPath.java:211)
        at java.net.URLClassLoader$1.run(URLClassLoader.java:365)
        at java.net.URLClassLoader$1.run(URLClassLoader.java:362)
        at java.security.AccessController.doPrivileged(Native Method)
        at java.net.URLClassLoader.findClass(URLClassLoader.java:361)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
        at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:331)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
        at sun.launcher.LauncherHelper.checkAndLoadMain(LauncherHelper.java:495)

The message means that the jar's signature is invalid. See the CSDN article: "Some problems encountered when packaging with Maven".

Deleting the signature files from the jar fixes it.
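As a rough illustration of what "deleting the signatures" amounts to, the sketch below copies a jar while skipping the signature entries directly under META-INF/ (*.SF, *.DSA, *.RSA, *.EC). The class name JarSignatureStripper and its methods are hypothetical helpers written for this post, not part of Spark, Oozie, or Maven. It assumes the source jar has no duplicate entry names. A build-time exclusion of the same files, for example a filter in the Maven shade plugin, is the more common fix.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Locale;
import java.util.zip.ZipEntry;
import java.util.zip.ZipInputStream;
import java.util.zip.ZipOutputStream;

// Hypothetical helper: not part of any library mentioned in this post.
class JarSignatureStripper {

    /** True for signature entries directly under META-INF/ (*.SF, *.DSA, *.RSA, *.EC). */
    static boolean isSignatureEntry(String name) {
        String upper = name.toUpperCase(Locale.ROOT);
        String prefix = "META-INF/";
        if (!upper.startsWith(prefix) || upper.indexOf('/', prefix.length()) >= 0) {
            return false;
        }
        return upper.endsWith(".SF") || upper.endsWith(".DSA")
                || upper.endsWith(".RSA") || upper.endsWith(".EC");
    }

    /** Copies source to target without signature entries; returns how many were skipped. */
    static int stripSignatures(Path source, Path target) throws IOException {
        int removed = 0;
        try (ZipInputStream in = new ZipInputStream(Files.newInputStream(source));
             ZipOutputStream out = new ZipOutputStream(Files.newOutputStream(target))) {
            byte[] buffer = new byte[8192];
            ZipEntry entry;
            while ((entry = in.getNextEntry()) != null) {
                if (isSignatureEntry(entry.getName())) {
                    removed++;
                    continue; // drop the signature file
                }
                // Assumption: entry names are unique, otherwise putNextEntry throws
                out.putNextEntry(new ZipEntry(entry.getName()));
                int n;
                while ((n = in.read(buffer)) != -1) {
                    out.write(buffer, 0, n);
                }
                out.closeEntry();
            }
        }
        return removed;
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 2) {
            System.err.println("usage: JarSignatureStripper <in.jar> <out.jar>");
            return;
        }
        int removed = stripSignatures(Paths.get(args[0]), Paths.get(args[1]));
        System.out.println("Removed " + removed + " signature file(s)");
    }
}
```

Without the signature files the JVM treats the jar as unsigned, so the digest check that raised the SecurityException is no longer performed.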

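2 Adding spark2 to the Oozie platform

The process is painful enough that I describe it in detail in a separate article: "Open-source workflow system: OOZIE, installing spark2".

3 Spark now runs successfully, but there are still pitfalls

On some runs Spark reports that a class or a method cannot be found. Our guess is that the jars are not consistent across the cluster. This will be resolved later.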
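One way to test the "inconsistent jars" guess is to print which jar a class was actually loaded from on each node and compare the results. The sketch below uses only the standard library. The class name ClassOrigin and its locate methods are hypothetical names chosen for this illustration, and the placeholder strings it returns are arbitrary.

```java
import java.net.URL;
import java.security.CodeSource;

// Hypothetical diagnostic helper: reports where a class was loaded from.
class ClassOrigin {

    /** Returns the jar or directory a loaded class came from, or a placeholder for JDK classes. */
    static String locate(Class<?> type) {
        CodeSource source = type.getProtectionDomain().getCodeSource();
        URL location = (source == null) ? null : source.getLocation();
        return (location == null) ? "<bootstrap or unknown>" : location.toString();
    }

    /** Same as above, by class name; the class is loaded but not initialized. */
    static String locate(String className) {
        try {
            return locate(Class.forName(className, false, ClassOrigin.class.getClassLoader()));
        } catch (ClassNotFoundException | LinkageError e) {
            return "<not found: " + className + ">";
        }
    }

    public static void main(String[] args) {
        // Pass fully qualified class names, e.g. org.apache.spark.SparkContext
        for (String name : args) {
            System.out.println(name + " -> " + locate(name));
        }
    }
}
```

Running it with the same classpath as the failing job on two different nodes and getting different jar paths or versions for the same class would support the guess. Identical output would point elsewhere.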

4 Calling a remote system from Java

The following package is used. Its official site is http://www.ganymed.ethz.ch/ssh2/

Here the artifact from mvnrepository.com is used directly:

    <!-- https://mvnrepository.com/artifact/ch.ethz.ganymed/ganymed-ssh2 -->
    <dependency>
        <groupId>ch.ethz.ganymed</groupId>
        <artifactId>ganymed-ssh2</artifactId>
        <version>build210</version>
    </dependency>

For the example, StdoutAndStderr.java from the examples in the official download is sufficient:

    import java.io.BufferedReader;
    import java.io.IOException;
    import java.io.InputStream;
    import java.io.InputStreamReader;

    import ch.ethz.ssh2.Connection;
    import ch.ethz.ssh2.Session;
    import ch.ethz.ssh2.StreamGobbler;

    public class StdoutAndStderr
    {
        public static void main(String[] args)
        {
            String hostname = "127.0.0.1";
            String username = "joe";
            String password = "joespass";

            try
            {
                /* Create a connection instance */
                Connection conn = new Connection(hostname);

                /* Now connect */
                conn.connect();

                /* Authenticate */
                boolean isAuthenticated = conn.authenticateWithPassword(username, password);
                if (!isAuthenticated)
                    throw new IOException("Authentication failed.");

                /* Create a session and run a command that writes to both streams */
                Session sess = conn.openSession();
                sess.execCommand("echo \"Text on STDOUT\"; echo \"Text on STDERR\" >&2");

                /* StreamGobbler buffers the remote output so neither stream blocks the other */
                InputStream stdout = new StreamGobbler(sess.getStdout());
                InputStream stderr = new StreamGobbler(sess.getStderr());
                BufferedReader stdoutReader = new BufferedReader(new InputStreamReader(stdout));
                BufferedReader stderrReader = new BufferedReader(new InputStreamReader(stderr));

                System.out.println("Here is the output from stdout:");
                while (true)
                {
                    String line = stdoutReader.readLine();
                    if (line == null)
                        break;
                    System.out.println(line);
                }

                System.out.println("Here is the output from stderr:");
                while (true)
                {
                    String line = stderrReader.readLine();
                    if (line == null)
                        break;
                    System.out.println(line);
                }

                /* Close this session */
                sess.close();

                /* Close the connection */
                conn.close();
            }
            catch (IOException e)
            {
                e.printStackTrace(System.err);
                System.exit(2);
            }
        }
    }

Then place test.sh on the machine and change the call to sess.execCommand("/path/to/test.sh");

With the Pig action added, the result is as follows.

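5 Assorted pitfalls

5.1 Pig and Spark run under clearly separate user permissions, so always put files in a location that is both readable and writable.

5.2 Oozie runs as the hive user, so Pig, Java, and similar actions also run as hive. This is again a permissions issue.

5.3 The intermittent Spark failures are provisionally attributed to version mismatches. Fixing this needs help from ops, so testing is paused for now.

5.4 ssh: ops finds it troublesome to maintain, which is why I built the Java remote-call middleware. I am hoping for a better approach.

5.5 The parameters differ considerably from what is commonly found online; see https://blog.youkuaiyun.com/pan_haufei/article/details/75503517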
