MNIST Digit Recognition

1. mnist_inference.py

Defines the network's parameters (the weights W) and the forward-propagation process.

import tensorflow as tf

# Network structure parameters
INPUT_NODE = 784     # input layer size: 28 x 28 pixels per image
OUTPUT_NODE = 10     # output layer size: one node per digit class 0-9
LAYER1_NODE = 500    # hidden layer size


def get_weight_variable(shape, regularizer):
    """Create a weight variable W with the given shape.

    If a regularizer (L1 or L2) is supplied, the regularization loss
    of the weights is added to the 'losses' collection.
    """
    weights = tf.get_variable(
        "weights", shape,
        initializer=tf.truncated_normal_initializer(stddev=0.1))
    if regularizer is not None:
        # Collect this variable's regularization loss in 'losses'
        tf.add_to_collection('losses', regularizer(weights))
    return weights


def inference(input_tensor, regularizer):
    """Forward propagation: takes the input x and a regularizer, returns the logits."""
    with tf.variable_scope('layer1'):  # hidden layer
        # Creates W and adds its regularization loss to the 'losses' collection
        weights = get_weight_variable([INPUT_NODE, LAYER1_NODE], regularizer)
        biases = tf.get_variable(
            "biases", [LAYER1_NODE], initializer=tf.constant_initializer(0.0))
        layer1 = tf.nn.relu(tf.matmul(input_tensor, weights) + biases)

    with tf.variable_scope('layer2'):  # output layer
        weights = get_weight_variable([LAYER1_NODE, OUTPUT_NODE], regularizer)
        biases = tf.get_variable(
            "biases", [OUTPUT_NODE], initializer=tf.constant_initializer(0.0))
        layer2 = tf.matmul(layer1, weights) + biases

    return layer2
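To make the regularization term concrete, here is a minimal pure-Python sketch of the value an L2 regularizer contributes for one weight matrix. It assumes the penalty has the form scale * sum(w^2) / 2, which is how `tf.contrib.layers.l2_regularizer` is defined in TensorFlow 1.x. The helper name `l2_penalty` and the sample weights are illustrative only and do not appear in the original code.

```python
def l2_penalty(weights, scale):
    """Return scale * sum(w ** 2) / 2 for a weight matrix given as nested lists."""
    total = sum(w * w for row in weights for w in row)
    return scale * total / 2.0


# Hypothetical 2 x 2 weight matrix, used only for illustration
sample_weights = [[0.5, -1.0], [2.0, 0.0]]
penalty = l2_penalty(sample_weights, scale=0.0001)
print(penalty)
```

In the listing above, one such term is added to the 'losses' collection for each layer's weights; the biases are not regularized.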

2. mnist_train.py

Defines the training process of the network.

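import os

import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data

import mnist_inference

# Training hyperparameters
BATCH_SIZE = 100                # number of examples in one training batch
REGULARIZATION_RATE = 0.0001    # coefficient of the regularization loss
MOVING_AVERAGE_DECAY = 0.99     # moving-average decay; also read by mnist_eval.py
# Directory where checkpoints are written; also read by mnist_eval.py
MODEL_SAVE_PATH = "C:/Users/asus/Desktop/TensorFlow/model/"


def train(mnist):
    # Placeholders for the input images x and the one-hot labels y_
    x = tf.placeholder(tf.float32, [None, mnist_inference.INPUT_NODE], name='x-input')
    y_ = tf.placeholder(tf.float32, [None, mnist_inference.OUTPUT_NODE], name='y-input')

    # L2 regularizer with coefficient 0.0001
    regularizerl2 = tf.contrib.layers.l2_regularizer(REGULARIZATION_RATE)

    # y = relu(x W1 + b1) W2 + b2
    # Run the forward pass defined in mnist_inference; it returns the logits y
    # and adds the regularization losses to the 'losses' collection
    y = mnist_inference.inference(x, regularizerl2)

    # Counts the training steps; not trainable itself
    global_step = tf.Variable(0, trainable=False)

    # Maintain an exponential moving average of every trainable variable
    variables_averages = tf.train.ExponentialMovingAverage(MOVING_AVERAGE_DECAY, global_step)
    variables_averages_op = variables_averages.apply(tf.trainable_variables())

    # Cross entropy measures how far the predicted distribution is from the expected one
    cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(
        logits=y, labels=tf.argmax(y_, 1))
    cross_entropy_mean = tf.reduce_mean(cross_entropy)

    # Total loss = mean cross entropy + regularization losses
    loss = cross_entropy_mean + tf.add_n(tf.get_collection('losses'))

    # Learning rate: starts at 0.8 and is multiplied by 0.99 once per epoch
    l_m_B = mnist.train.num_examples / BATCH_SIZE  # batches per epoch
    learning_rate = tf.train.exponential_decay(0.8, global_step, l_m_B, 0.99)

    # Backpropagation step; minimize() also increments global_step
    train_step = tf.train.GradientDescentOptimizer(learning_rate).minimize(
        loss, global_step=global_step)

    # Each training step must update both the parameters and their moving averages
    with tf.control_dependencies([train_step, variables_averages_op]):
        train_op = tf.no_op(name='train')

    # Saver used to persist the model
    saver = tf.train.Saver()

    with tf.Session() as sess:
        # Initialize all variables
        tf.global_variables_initializer().run()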

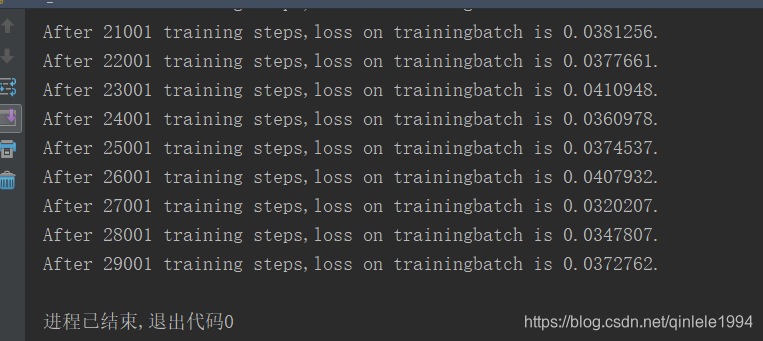

        for i in range(30000):
            xs, ys = mnist.train.next_batch(BATCH_SIZE)
            _, loss_value, step = sess.run(
                [train_op, loss, global_step], feed_dict={x: xs, y_: ys})
            if i % 1000 == 0:
                print("After %d training step(s), loss on training "
                      "batch is %g." % (step, loss_value))

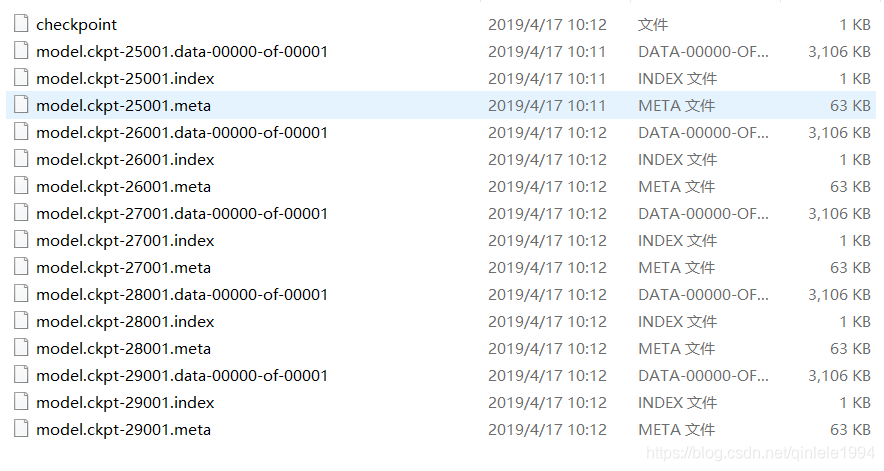

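                # Still inside the `if` block: save the current model every 1000 steps;
                # global_step is appended to the checkpoint file name
                saver.save(sess, os.path.join("C:/Users/asus/Desktop/TensorFlow/model/", "model.ckpt"), global_step=global_step)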

def main(argv=None):
    mnist = input_data.read_data_sets("C:/Users/asus/Desktop/TensorFlow/mnist_data", one_hot=True)
    train(mnist)


if __name__ == '__main__':
    tf.app.run()
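The training script wraps its variables in `tf.train.ExponentialMovingAverage(0.99, global_step)`. As a rough pure-Python sketch of the update rule that class applies when a step counter is supplied (the effective decay is capped at (1 + step) / (10 + step), so the average tracks the variable quickly early in training), consider the following. The function name `ema_update` and the sample numbers are assumptions made for illustration.

```python
def ema_update(shadow, value, decay, num_updates=None):
    """Return the new shadow value after one moving-average update.

    When num_updates is given, the effective decay is
    min(decay, (1 + num_updates) / (10 + num_updates)).
    """
    if num_updates is not None:
        decay = min(decay, (1.0 + num_updates) / (10.0 + num_updates))
    return decay * shadow + (1.0 - decay) * value


# Early in training the effective decay is small, so the shadow moves fast
print(ema_update(shadow=0.0, value=10.0, decay=0.99, num_updates=0))
# Late in training the configured decay of 0.99 applies
print(ema_update(shadow=0.0, value=10.0, decay=0.99, num_updates=10000))
```

The shadow values are what the evaluation script later restores in place of the raw variables.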

As a result, every 1000 training steps the script prints the current loss on the training batch and saves the current model.
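The learning-rate schedule in the training script can be checked by hand. The sketch below reproduces the formula behind `tf.train.exponential_decay`, base_rate * decay_rate ^ (global_step / decay_steps), in pure Python. The value 550 for the number of batches per epoch assumes the default split of 55,000 training examples with a batch size of 100; adjust it if your data differs.

```python
def exponential_decay(base_rate, global_step, decay_steps, decay_rate, staircase=False):
    """Return the decayed learning rate at a given training step."""
    if staircase:
        # Decay in discrete jumps, once every decay_steps steps
        exponent = global_step // decay_steps
    else:
        # Decay smoothly at every step (the default behaviour)
        exponent = global_step / decay_steps
    return base_rate * decay_rate ** exponent


DECAY_STEPS = 550  # assumed: 55000 training examples / batch size 100

for step in (0, 550, 5500, 30000):
    print(step, exponential_decay(0.8, step, DECAY_STEPS, 0.99))
```

With these settings the rate is multiplied by 0.99 once per pass over the training data, so it shrinks slowly across the 30,000 training steps.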

3. mnist_eval.py

Evaluation code.

import time

import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data

# Reuse the forward pass and constants from mnist_inference.py and mnist_train.py
import mnist_inference
import mnist_train

# Load the newest model every 10 seconds and measure its accuracy on the validation data
EVAL_INTERVAL_SECS = 10


def evaluate(mnist):
    # Build a new graph and make it the default graph
    with tf.Graph().as_default() as g:
        # Define the input and the expected output
        x = tf.placeholder(tf.float32, [None, mnist_inference.INPUT_NODE], name='x-input')
        y_ = tf.placeholder(tf.float32, [None, mnist_inference.OUTPUT_NODE], name='y-input')

        # Feed x and y_ from the validation set
        validate_feed = {x: mnist.validation.images, y_: mnist.validation.labels}

        # Reuse the forward pass; the regularization loss is irrelevant at evaluation time
        y = mnist_inference.inference(x, None)

        # Accuracy: fraction of examples whose predicted class matches the label
        correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
        accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

        # Moving averages
        variable_averages = tf.train.ExponentialMovingAverage(mnist_train.MOVING_AVERAGE_DECAY)
        # Map each variable's shadow (moving-average) value onto the variable itself
        variables_to_restore = variable_averages.variables_to_restore()
        # Loading by variable name then restores the moving averages directly
        saver = tf.train.Saver(variables_to_restore)

        while True:
            with tf.Session() as sess:
                # get_checkpoint_state() finds the newest model file in the directory
                ckpt = tf.train.get_checkpoint_state(mnist_train.MODEL_SAVE_PATH)
                # ckpt.model_checkpoint_path is the location of the newest model
                if ckpt and ckpt.model_checkpoint_path:
                    # Load the model into the session
                    saver.restore(sess, ckpt.model_checkpoint_path)
                    # Recover the training step at save time from the file name
                    global_step = ckpt.model_checkpoint_path.split('/')[-1].split('-')[-1]
                    accuracy_score = sess.run(accuracy, feed_dict=validate_feed)
                    print("After %s training step(s), validation "
                          "accuracy = %g" % (global_step, accuracy_score))
                else:
                    print('No checkpoint file found')
                    return
            time.sleep(EVAL_INTERVAL_SECS)


def main(argv=None):
    mnist = input_data.read_data_sets("C:/Users/asus/Desktop/TensorFlow/mnist_data", one_hot=True)
    evaluate(mnist)


if __name__ == '__main__':
    tf.app.run()
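Two small steps of the evaluation script are easy to try without TensorFlow: recovering the step count from a checkpoint file name, and computing accuracy by comparing argmax positions. The sketch below is a pure-Python illustration. The helper names, the checkpoint path, and the sample logits are hypothetical and not taken from a real run.

```python
def step_from_checkpoint_path(path):
    """Extract the trailing step count from a name such as 'model.ckpt-1001'."""
    return path.split('/')[-1].split('-')[-1]


def argmax(values):
    """Index of the largest entry (the first one on ties)."""
    return max(range(len(values)), key=lambda i: values[i])


def accuracy(logits, one_hot_labels):
    """Fraction of rows whose predicted class equals the labelled class."""
    correct = [argmax(p) == argmax(t) for p, t in zip(logits, one_hot_labels)]
    return sum(correct) / len(correct)


# Hypothetical checkpoint path and a tiny batch of three 2-class examples
print(step_from_checkpoint_path("some/dir/model.ckpt-1001"))
print(accuracy([[0.1, 0.9], [0.8, 0.2], [0.3, 0.7]],
               [[0, 1], [1, 0], [1, 0]]))
```

Note that the step is returned as a string, which is why the evaluation script formats it with `%s` rather than `%d`.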

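This article walks through a TensorFlow-based handwritten digit recognition system for MNIST: the network's parameter settings, the forward-propagation process, the training procedure, and the evaluation code. By defining the network structure, training the model, and evaluating its accuracy on held-out validation data, it recognizes the handwritten digits of the MNIST dataset.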
