Building the template VM

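- OS: CentOS-7-x86_64-Minimal-1611. Install jdk, rsync, and lrzsz, then create the remaining VMs as linked clones.

- All operations are performed as the root user.

- The four VMs have the hostnames s101, s102, s103, and s104.

- s101 serves as the master node.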

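Installing Hadoop-2.7.5 on s101

1. Download hadoop-2.7.5.tar.gz

2. Extract the archive into /soft and symlink it as /soft/hadoop (the HADOOP_HOME used below)

$>tar -xzvf hadoop-2.7.5.tar.gz -C /soft

$>ln -s /soft/hadoop-2.7.5 /soft/hadoop

3. Configure the environment variables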

[/etc/profile]

export HADOOP_HOME=/soft/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

4. Source the profile so that the changes take effect

$>source /etc/profile

5. Verify that Hadoop is installed correctly

$>hadoop version
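If `hadoop version` reports "command not found", the usual culprit is that the profile was not sourced or the PATH entries are wrong. The sketch below is one way to check this; `path_has` is a hypothetical helper name, not something Hadoop ships.

```shell
# Hypothetical helper: succeed if directory $1 is one of the entries in $PATH.
path_has() {
  case ":$PATH:" in
    *":$1:"*) return 0 ;;
    *)        return 1 ;;
  esac
}
```

For example, `path_has "$HADOOP_HOME/bin" || echo "bin is not on PATH"` prints a message only when the entry is missing.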

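Setting up a fully distributed Hadoop cluster

**1. Set up passwordless SSH login from s101 to s102 ~ s104 (run from /root)**

1.1) Generate a public/private key pair on s101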

$>ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

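1.2) Enable passwordless login to s101 itself by appending the public key to authorized_keys

$>cd ~/.ssh

$>cat id_rsa.pub >> authorized_keys

1.3) Set the permissions of authorized_keys to 600 (sshd ignores the file if group or others can write to it)

$>cd ~/.ssh

$>chmod 600 authorized_keys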

1.4) Verify SSH to the local machine

$>ssh localhost

yes (typed at the host key confirmation prompt)

$>exit

1.5) Use ssh-copy-id to append the current user's public key to authorized_keys of the given user on each remote host.

$>ssh-copy-id root@192.168.231.102

$>ssh-copy-id root@192.168.231.103

$>ssh-copy-id root@192.168.231.104
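Before moving on, it can help to confirm that key-based login really works on every worker. The following is a minimal sketch, assuming the keys were copied for root as above; `check_keys` is a hypothetical helper name. `BatchMode=yes` makes ssh fail instead of prompting for a password, so a failure means the key is not installed or the host is unreachable.

```shell
# Hypothetical helper: report which hosts accept key-based login for root.
# Arguments are hostnames or IP addresses.
check_keys() {
  for host in "$@"; do
    # "true" is run on the remote side; only the exit status matters.
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "root@$host" true 2>/dev/null; then
      echo "$host ok"
    else
      echo "$host FAILED"
    fi
  done
}
```

At this point the hostnames are not yet resolvable, so it would be called with the addresses: `check_keys 192.168.231.102 192.168.231.103 192.168.231.104`.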

1.6) Edit /etc/hosts on every machine so that the remote hosts can be reached by hostname

a) Edit /etc/hosts on s101

127.0.0.1 localhost

192.168.231.101 s101

192.168.231.102 s102

192.168.231.103 s103

192.168.231.104 s104

b) Copy the file to all other hosts

$>scp /etc/hosts root@s102:/etc/

$>scp /etc/hosts root@s103:/etc/

$>scp /etc/hosts root@s104:/etc/
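When the cluster grows, typing the hosts entries by hand gets error-prone. As a sketch, the entries can be generated from the address prefix and the range of last octets, assuming the naming scheme of this guide (hostname `s` plus the last octet). `gen_hosts` is a hypothetical helper name.

```shell
# Hypothetical helper: print /etc/hosts entries for s<first>..s<last>.
# $1 = first three octets, $2 = first last-octet, $3 = final last-octet.
gen_hosts() {
  prefix=$1
  i=$2
  last=$3
  echo "127.0.0.1 localhost"
  while [ "$i" -le "$last" ]; do
    echo "$prefix.$i s$i"
    i=$((i + 1))
  done
}
```

`gen_hosts 192.168.231 101 104` prints the same five entries listed in step a); redirect the output to a scratch file and review it before replacing /etc/hosts.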

**2. Copy /soft/hadoop-2.7.5 to all nodes with scp**

$>scp -r /soft/hadoop-2.7.5 root@s102:/soft/

$>scp -r /soft/hadoop-2.7.5 root@s103:/soft/

$>scp -r /soft/hadoop-2.7.5 root@s104:/soft/

**3. Log in to s102 ~ s104 and create the symlink**

#s102

$>ln -s /soft/hadoop-2.7.5 /soft/hadoop

#s103

$>ln -s /soft/hadoop-2.7.5 /soft/hadoop

#s104

$>ln -s /soft/hadoop-2.7.5 /soft/hadoop

**4. Distribute /etc/profile from s101 to all hosts**

$>scp /etc/profile root@s102:/etc/

$>scp /etc/profile root@s103:/etc/

$>scp /etc/profile root@s104:/etc/
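The same three-line scp pattern recurs in several steps of this guide, so it may be worth wrapping in a loop. The sketch below assumes the worker hostnames used here; `distribute` and the `DRY_RUN` switch are conventions of this sketch, not Hadoop or scp features.

```shell
# Worker hostnames from this guide; adjust to your own cluster.
WORKERS="s102 s103 s104"

# Hypothetical helper: copy file or directory $1 into directory $2
# on every worker as root. With DRY_RUN=1 the commands are only printed.
distribute() {
  src=$1
  dest=$2
  for host in $WORKERS; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "scp -r $src root@$host:$dest"
    else
      scp -r "$src" "root@$host:$dest"
    fi
  done
}
```

With this in place, `distribute /etc/profile /etc/` replaces the three commands of this step. Running it once with `DRY_RUN=1` set shows what would be copied.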

**5. Edit the Hadoop configuration files [hadoop-2.7.5]**

[hadoop-2.7.5/etc/hadoop/core-site.xml]

<?xml version="1.0"?>

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://s101/</value>

</property>

</configuration>

[hadoop-2.7.5/etc/hadoop/hdfs-site.xml]

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

</configuration>

[hadoop-2.7.5/etc/hadoop/mapred-site.xml]

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

[hadoop-2.7.5/etc/hadoop/yarn-site.xml]

<?xml version="1.0"?>

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>s101</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

[hadoop-2.7.5/etc/hadoop/hadoop-env.sh]

Set JAVA_HOME:

export JAVA_HOME=/soft/jdk

...
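A typo in a property name fails silently, because Hadoop simply falls back to the default value. Once Hadoop is on the PATH, `hdfs getconf -confKey fs.defaultFS` shows the value Hadoop actually resolved. For checking a file before Hadoop is involved, here is a rough sketch; `get_prop` is a hypothetical helper and it assumes each `<name>` appears before its `<value>`, as in the files above. It is not a real XML parser.

```shell
# Hypothetical helper: print the value of property $2 in Hadoop config file $1.
# Remembers the most recent <name> and prints the following <value> on a match.
get_prop() {
  awk -v want="$2" '
    /<name>/  { n = $0; gsub(/.*<name>|<\/name>.*/, "", n) }
    /<value>/ { v = $0; gsub(/.*<value>|<\/value>.*/, "", v); if (n == want) print v }
  ' "$1"
}
```

For example, `get_prop /soft/hadoop/etc/hadoop/core-site.xml fs.defaultFS` should echo the value configured in core-site.xml, and prints nothing if the property is missing or misspelled.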

**6. Distribute the hadoop-2.7.5/etc directory to all nodes**

$>cd /soft/hadoop

$>scp -r etc root@s102:/soft/hadoop

$>scp -r etc root@s103:/soft/hadoop

$>scp -r etc root@s104:/soft/hadoop

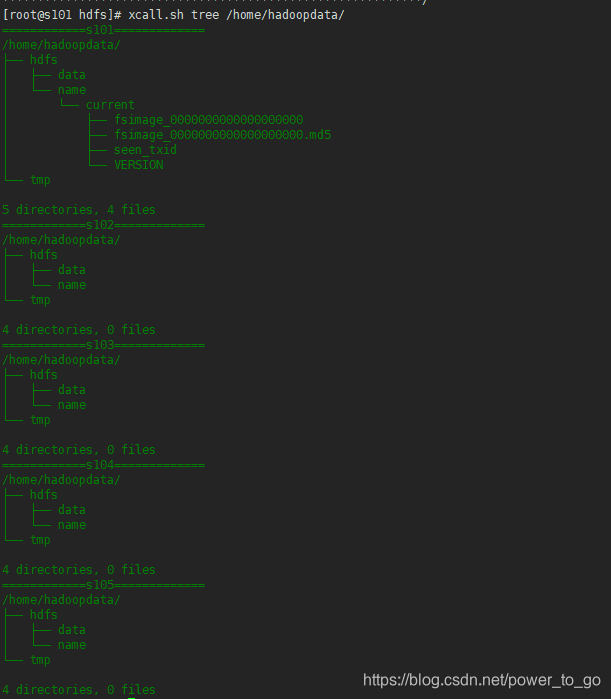

**7. Format HDFS on s101**

$>hadoop namenode -format

**8. Configure the /soft/hadoop/etc/hadoop/slaves file and distribute it**

s102

s103

s104

**9. Start the Hadoop cluster**

[s101]

start-all.sh is deprecated; use the two scripts below instead

start-dfs.sh

start-yarn.sh

**10. Check the running processes**

jps
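With the configuration in this guide, s101 should be running NameNode, SecondaryNameNode, and ResourceManager, while each of s102 ~ s104 should be running DataNode and NodeManager. The sketch below compares `jps` output against such a list; `missing_daemons` is a hypothetical helper name.

```shell
# Hypothetical helper: read `jps` output on stdin and print every expected
# daemon name (given as arguments) that does not appear in it.
# Prints nothing when all expected daemons are present.
missing_daemons() {
  running=$(awk '{print $2}')
  for name in "$@"; do
    # -x matches whole lines, so NameNode is not satisfied by SecondaryNameNode.
    printf '%s\n' "$running" | grep -qx "$name" || echo "$name"
  done
}
```

On s101 this would be run as `jps | missing_daemons NameNode SecondaryNameNode ResourceManager`, and on a worker as `jps | missing_daemons DataNode NodeManager`.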

**11. Turn off the Linux firewall**

[s101 ~ s104]

systemctl stop firewalld.service

systemctl disable firewalld.service

**12. Access HDFS from a browser**

http://s101:50070/

**13. Create an HDFS directory and upload a file**

hdfs dfs -mkdir -p /user/centos/data

hdfs dfs -put 1.txt /user/centos/data

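Problems

Accessing a web service inside the VM from the host machine

DataNode fails to start after running hadoop namenode -format several times: how to fix it

put upload fails: turn off the firewall on all DataNodes

[First run of hadoop namenode -format]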
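On the repeated-format problem: each format gives the NameNode a new clusterID, while the DataNodes keep the old one in their VERSION file and then refuse to register. The sketch below compares the two IDs. It assumes the default storage location, since this guide does not set hadoop.tmp.dir: /tmp/hadoop-root/dfs/name/current/VERSION on s101 and /tmp/hadoop-root/dfs/data/current/VERSION on each worker. `cluster_id` and `same_cluster` are hypothetical helper names.

```shell
# Hypothetical helper: print the clusterID recorded in a VERSION file.
cluster_id() {
  sed -n 's/^clusterID=//p' "$1"
}

# Hypothetical helper: succeed only if both VERSION files exist and
# carry the same non-empty clusterID.
same_cluster() {
  a=$(cluster_id "$1")
  b=$(cluster_id "$2")
  [ -n "$a" ] && [ "$a" = "$b" ]
}
```

If the IDs differ on a freshly formatted cluster, a common fix is to stop the cluster, remove the DataNode data directory on each worker, and start again. This discards any blocks stored there, so it is only appropriate while the cluster holds no data you need.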
