
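My suggestion is to start directly from a simple, relevant project. Here I use the PyTorch U-Net segmentation code (https://github.com/milesial/Pytorch-UNet) as the example.

I will first walk through the project's code in full, then share some experience on customizing network modules and tuning hyperparameters.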

Overall project flow

Here is the rough flow of the project, first in pseudocode form.

    # Define the network
    net = UNet(n_channels=3, n_classes=args.classes, bilinear=args.bilinear)

    # Load the dataset
    dataset = BasicDataset(dir_img, dir_mask)
    n_val = int(len(dataset) * val_percent)
    n_train = len(dataset) - n_val
    train_set, val_set = random_split(dataset, [n_train, n_val], generator=torch.Generator().manual_seed(0))
    loader_args = dict(batch_size=batch_size, num_workers=4, pin_memory=True)
    train_loader = DataLoader(train_set, shuffle=True, **loader_args)
    val_loader = DataLoader(val_set, shuffle=False, drop_last=True, **loader_args)

    # Optimizer, scheduler, and loss settings
    optimizer = optim.RMSprop(net.parameters(), lr=learning_rate, weight_decay=1e-8, momentum=0.9)
    scheduler = optim.lr_scheduler.ReduceLROnPlateau(optimizer, 'max', patience=2)  # goal: maximize Dice score
    grad_scaler = torch.cuda.amp.GradScaler(enabled=amp)
    criterion = nn.CrossEntropyLoss()

    # Training
    for epoch in range(1, epochs+1):
        net.train()
        epoch_loss = 0
        with tqdm(total=n_train, desc=f'Epoch {epoch}/{epochs}', unit='img') as pbar:
            for batch in train_loader:
                optimizer.zero_grad()
                images = batch['image']
                true_masks = batch['mask']
                images = images.to(device=device, dtype=torch.float32)
                true_masks = true_masks.to(device=device, dtype=torch.long)

                masks_pred = net(images)
                loss = criterion(masks_pred, true_masks) \
                       + dice_loss(F.softmax(masks_pred, dim=1).float(),
                                   F.one_hot(true_masks, net.n_classes).permute(0, 3, 1, 2).float(),
                                   multiclass=True)
                loss.backward()   # compute gradients first
                optimizer.step()  # then update the parameters

        # Validation
        val_score = evaluate(net, val_loader, device)

定义网络

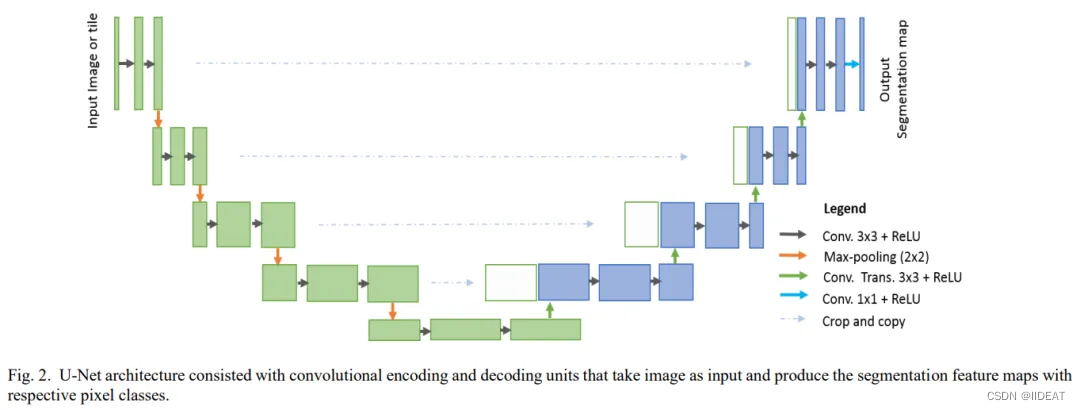

这里先回顾一下U-Net网络的基本结构,如下图所示

本质上就是先进行多次下采样,再进行多次上采样,途中使用双卷积提取特征,上采样时结合下采样时同尺寸的特征。

本项目中网络代码在/unet目录下,/unet/unet_model.py给出了模型的定义

    class UNet(nn.Module):
        def __init__(self, n_channels, n_classes, bilinear=False):
            super(UNet, self).__init__()
            self.n_channels = n_channels
            self.n_classes = n_classes
            self.bilinear = bilinear

            self.inc = DoubleConv(n_channels, 64)
            self.down1 = Down(64, 128)
            self.down2 = Down(128, 256)
            self.down3 = Down(256, 512)
            factor = 2 if bilinear else 1
            self.down4 = Down(512, 1024 // factor)
            self.up1 = Up(1024, 512 // factor, bilinear)
            self.up2 = Up(512, 256 // factor, bilinear)
            self.up3 = Up(256, 128 // factor, bilinear)
            self.up4 = Up(128, 64, bilinear)
            self.outc = OutConv(64, n_classes)

        def forward(self, x):
            x1 = self.inc(x)
            x2 = self.down1(x1)
            x3 = self.down2(x2)
            x4 = self.down3(x3)
            x5 = self.down4(x4)
            x = self.up1(x5, x4)
            x = self.up2(x, x3)
            x = self.up3(x, x2)
            x = self.up4(x, x1)
            logits = self.outc(x)
            return logits

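As you can see, the author defines the network through a UNet class with two methods: __init__ and forward.

__init__ declares the parameters and modules the network needs. When the network is built with the line below,

    net = UNet(n_channels=3, n_classes=args.classes, bilinear=args.bilinear)

__init__ is invoked first and stores the passed-in arguments, such as n_channels, as instance attributes on self, so that the other methods of the class can access them.

    self.n_channels = n_channels
    self.n_classes = n_classes
    self.bilinear = bilinear

The forward method defines the network's forward pass: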

    def forward(self, x):
        x1 = self.inc(x)
        x2 = self.down1(x1)
        x3 = self.down2(x2)
        x4 = self.down3(x3)
        x5 = self.down4(x4)
        x = self.up1(x5, x4)
        x = self.up2(x, x3)
        x = self.up3(x, x2)
        x = self.up4(x, x1)
        logits = self.outc(x)
        return logits

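Here inc, down, up, and outc denote the input convolution, downsampling, upsampling, and output convolution respectively. They are instantiated in __init__, and the corresponding classes are defined in /unet/unet_parts.py; I won't go into them for now.

With __init__ and forward in place, the network can be called:

    net = UNet(n_channels=3, n_classes=args.classes, bilinear=args.bilinear)
    masks_pred = net(images)

You might wonder why the network can be called without invoking forward explicitly. The reason is that the class is declared as class UNet(nn.Module), and nn.Module implements __call__, which in turn calls forward. So in practice, feeding a tensor to the instantiated network is enough to get the inference result.

(For the underlying mechanism, see https://blog.youkuaiyun.com/qq_23981335/article/details/103683737. In practice it is enough to know that passing a tensor to net triggers the forward function you defined.)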
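The dispatch from a call on the instance to forward can be illustrated with a stripped-down stand-in for nn.Module. This is only a sketch: TinyModule and Doubler are hypothetical names, and the real nn.Module.__call__ does more work (for example, it runs registered hooks around forward).

```python
class TinyModule:
    """Hypothetical, heavily simplified stand-in for torch.nn.Module."""

    def __call__(self, *args, **kwargs):
        # Calling the instance forwards the arguments to forward().
        return self.forward(*args, **kwargs)

    def forward(self, *args, **kwargs):
        raise NotImplementedError


class Doubler(TinyModule):
    """Toy 'network' that doubles every input value."""

    def forward(self, x):
        return [2 * v for v in x]


net = Doubler()
out = net([1, 2, 3])  # dispatches to net.forward([1, 2, 3])
```

Because of this dispatch, user code should call net(x) rather than net.forward(x), so that whatever the base class does around forward still runs.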

Loading the dataset

The main dataset-loading flow in the project is as follows:

    dataset = BasicDataset(dir_img, dir_mask)
    n_val = int(len(dataset) * val_percent)
    n_train = len(dataset) - n_val
    train_set, val_set = random_split(dataset, [n_train, n_val], generator=torch.Generator().manual_seed(0))
    loader_args = dict(batch_size=batch_size, num_workers=4, pin_memory=True)
    train_loader = DataLoader(train_set, shuffle=True, **loader_args)
    val_loader = DataLoader(val_set, shuffle=False, drop_last=True, **loader_args)

It mainly comes down to the BasicDataset class and the DataLoader call. The former is defined by the author; the latter is built into PyTorch.

For the BasicDataset class, we only need to focus on the __getitem__ method, which the DataLoader calls automatically to fetch each sample.

    def __getitem__(self, idx):
        name = self.ids[idx]
        mask_file = list(self.masks_dir.glob(name + self.mask_suffix + '.*'))
        img_file = list(self.images_dir.glob(name + '.*'))

        mask = self.load(mask_file[0])
        img = self.load(img_file[0])

        img = self.preprocess(img, self.scale, is_mask=False)
        mask = self.preprocess(mask, self.scale, is_mask=True)

        return {
            'image': torch.as_tensor(img.copy()).float().contiguous(),
            'mask': torch.as_tensor(mask.copy()).long().contiguous()
        }

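Here self.ids must be defined in __init__; it is a list of the file names used for training/validation. The DataLoader calls __getitem__ repeatedly. Each call takes one file name from self.ids, then reads, preprocesses, and converts the data to tensors before handing it back to the DataLoader.

I won't cover reading and preprocessing in detail, since different data call for different handling. My suggestion is to convert everything to numpy.array to make later processing easier. Remember that the return value is a dictionary, so later code must fetch each item by its key.

Now let's look back at the whole dataset-loading flow:

    dataset = BasicDataset(dir_img, dir_mask)
    n_val = int(len(dataset) * val_percent)
    n_train = len(dataset) - n_val
    train_set, val_set = random_split(dataset, [n_train, n_val], generator=torch.Generator().manual_seed(0))
    loader_args = dict(batch_size=batch_size, num_workers=4, pin_memory=True)
    train_loader = DataLoader(train_set, shuffle=True, **loader_args)
    val_loader = DataLoader(val_set, shuffle=False, drop_last=True, **loader_args)

BasicDataset gives us the dataset object. Passing it to PyTorch's built-in DataLoader makes the loader call the dataset's __getitem__ to load samples and group them into batches of batch_size, so the data can then be iterated batch by batch through the DataLoader.

Two parameters deserve attention. The first is shuffle=True/False, which controls whether the dataset is reshuffled at every epoch. The second is drop_last=True/False, which controls whether the leftover samples are discarded when the dataset size is not divisible by the batch size. With False, the remaining samples form a smaller batch of their own.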
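The effect of drop_last on the number of batches per epoch comes down to simple arithmetic. The helper below is a hypothetical illustration (num_batches is not part of the project or of PyTorch); it mirrors the batch count a loader over a fixed-size dataset would report.

```python
import math


def num_batches(n_samples, batch_size, drop_last):
    """Batches yielded per epoch for a dataset of n_samples items."""
    if drop_last:
        # The incomplete final batch is discarded.
        return n_samples // batch_size
    # The remainder forms one extra, smaller batch.
    return math.ceil(n_samples / batch_size)


with_drop = num_batches(10, 4, drop_last=True)      # batches of 4, 4
without_drop = num_batches(10, 4, drop_last=False)  # batches of 4, 4, 2
```

When the dataset size is a multiple of the batch size, both settings give the same count.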

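Optimization settings

    optimizer = optim.RMSprop(net.parameters(), lr=learning_rate, weight_decay=1e-8, momentum=0.9)
    scheduler = optim.lr_scheduler.ReduceLROnPlateau(optimizer, 'max', patience=2)  # goal: maximize Dice score
    grad_scaler = torch.cuda.amp.GradScaler(enabled=amp)
    criterion = nn.CrossEntropyLoss()

These lines define the optimizer, the learning-rate scheduler, and the loss function. (grad_scaler is for automatic mixed precision, which can speed up training and reduce GPU memory consumption; see https://zhuanlan.zhihu.com/p/165152789 for usage. The effect is quite noticeable.)

The optimizer and learning-rate scheduling are covered in the tuning section; here I focus on the loss function.

This project uses criterion = nn.CrossEntropyLoss(), i.e. cross-entropy.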

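Note, however, that when calling criterion(pred, true) with nn.CrossEntropyLoss:

pred should have shape $[B, Class, H, W]$ (B = batch size, Class = number of classes, H = height, W = width) and be the raw network output, without any softmax or sigmoid activation;

true should have shape $[B, H, W]$, where the value at each pixel is that pixel's class index (an integer).

Take this project as an example. The input is an RGB image with 3 channels, i.e. $[B, 3, H, W]$, and the output is a two-class result, so Class = 2. In the network output pred (ignoring the batch dimension), each pixel holds one score per class. For instance, the values at pixel $(0, 0)$ might be $[0.1, 0.7]$, where 0.1 and 0.7 are the scores computed for class 0 and class 1. In true, the value at pixel $(0, 0)$ should be 0 or 1, indicating whether the pixel belongs to class 0 or class 1.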

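When using a loss function written by someone else, always check the format and order of its inputs and outputs; otherwise debugging becomes painful.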
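A quick way to confirm the expected formats is to feed random tensors of the intended shapes through the loss. The sketch below assumes the 2D segmentation case, with logits of shape [B, Class, H, W] and integer targets of shape [B, H, W]; the sizes are arbitrary. It also reproduces the loss by hand to show that nn.CrossEntropyLoss applies log-softmax internally, which is why the network output must not be activated beforehand.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
B, n_classes, H, W = 2, 2, 4, 4  # arbitrary sizes for illustration

logits = torch.randn(B, n_classes, H, W)         # raw network output, no softmax
target = torch.randint(0, n_classes, (B, H, W))  # integer class index per pixel

criterion = nn.CrossEntropyLoss()
loss = criterion(logits, target)                 # scalar, averaged over all pixels

# Manual version: log-softmax over the class dimension, then pick the
# log-probability of the true class at every pixel and average.
log_probs = F.log_softmax(logits, dim=1)
manual = -log_probs.gather(1, target.unsqueeze(1)).mean()
```

If the shapes or dtypes are wrong (for example a float target, or a target with an extra class dimension), the call fails or silently computes something else, so a check like this is cheap insurance.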

Training

The training pseudocode is as follows:

    for epoch in range(1, epochs+1):
        net.train()
        epoch_loss = 0
        with tqdm(total=n_train, desc=f'Epoch {epoch}/{epochs}', unit='img') as pbar:
            for batch in train_loader:
                optimizer.zero_grad()
                images = batch['image']
                true_masks = batch['mask']
                images = images.to(device=device, dtype=torch.float32)
                true_masks = true_masks.to(device=device, dtype=torch.long)

                masks_pred = net(images)
                loss = criterion(masks_pred, true_masks) \
                       + dice_loss(F.softmax(masks_pred, dim=1).float(),
                                   F.one_hot(true_masks, net.n_classes).permute(0, 3, 1, 2).float(),
                                   multiclass=True)
                loss.backward()
                optimizer.step()

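There isn't much to say about training; with the material above it should all be readable. Just remember three calls: optimizer.zero_grad() to clear the gradients from the previous iteration, loss.backward() to backpropagate the loss, and optimizer.step() to update the parameters. Look up the underlying principles on your own.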
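Why the gradients must be cleared can be seen on a single parameter. The sketch below is a toy example, not the project's training loop: the scalar w, the loss 3 * w, and the learning rate 0.1 are arbitrary choices for illustration.

```python
import torch

w = torch.tensor([1.0], requires_grad=True)
optimizer = torch.optim.SGD([w], lr=0.1)

# Two backward passes without clearing: gradients accumulate in w.grad.
(3 * w).sum().backward()
(3 * w).sum().backward()
accumulated = w.grad.clone()  # 3 + 3

# One full iteration in the order the training loop uses.
optimizer.zero_grad()         # drop the stale gradients
loss = (3 * w).sum()
loss.backward()               # d(loss)/dw = 3
optimizer.step()              # w <- w - lr * grad = 1.0 - 0.1 * 3
```

Without the zero_grad() call, the update would have used the accumulated gradient from the earlier passes instead of the gradient of the current batch.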

Validation

The validation code is in evaluate.py:

    def evaluate(net, dataloader, device):
        net.eval()
        num_val_batches = len(dataloader)
        dice_score = 0

        # Iterate over the validation set
        for batch in tqdm(dataloader, total=num_val_batches, desc='Validation round', unit='batch', leave=False):
            image, mask_true = batch['image'], batch['mask']
            image = image.to(device=device, dtype=torch.float32)
            mask_true = mask_true.to(device=device, dtype=torch.long)
            mask_true = F.one_hot(mask_true, net.n_classes).permute(0, 3, 1, 2).float()

            with torch.no_grad():
                mask_pred = net(image)

                if net.n_classes == 1:
                    # Single class: threshold the sigmoid output at 0.5
                    mask_pred = (F.sigmoid(mask_pred) > 0.5).float()
                    dice_score += dice_coeff(mask_pred, mask_true, reduce_batch_first=False)
                else:
                    # Several classes: argmax over the class dimension, then back to one-hot
                    mask_pred = F.one_hot(mask_pred.argmax(dim=1), net.n_classes).permute(0, 3, 1, 2).float()
                    # Dice is computed over every class except the background (index 0)
                    dice_score += multiclass_dice_coeff(mask_pred[:, 1:, ...], mask_true[:, 1:, ...], reduce_batch_first=False)

        net.train()
        return dice_score / num_val_batches

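There are two points to note about validation.

First, switch the network to evaluation mode with net.eval() before validating, and switch back to training mode with net.train() afterwards.

Second, mind the format of mask_pred and mask_true. This project validates by computing the Dice score, for which mask_pred should have shape $[B, Class, H, W]$. The model output must be activated (sigmoid or softmax, after which the values can be read as class probabilities) and converted to hard labels, i.e. integers. In the code, the sigmoid output is thresholded at 0.5 to set each pixel to 0 or 1. In the softmax case, the index of the largest class value is taken as the pixel value: for example, in [0.1, 0.7] the largest value is 0.7 at index 1, so the pixel is set to 1.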

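As I said before, everyone writes loss functions and metrics differently, so when using someone else's code always check the shape and format of the inputs and outputs.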
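For reference, the Dice score on hard labels is 2|A ∩ B| / (|A| + |B|). The function below is a minimal sketch on flat Python lists of 0/1 values, not the project's dice_coeff; the name dice_coefficient and the smoothing term eps are assumptions for illustration.

```python
def dice_coefficient(pred, true, eps=1e-6):
    """Dice = 2|A ∩ B| / (|A| + |B|) for two flat binary masks (lists of 0/1)."""
    intersection = sum(p * t for p, t in zip(pred, true))
    # eps (an assumed smoothing term) avoids division by zero on empty masks.
    return (2 * intersection + eps) / (sum(pred) + sum(true) + eps)


pred = [1, 1, 0, 0]  # two pixels predicted as foreground
true = [1, 0, 0, 0]  # one pixel is actually foreground
score = dice_coefficient(pred, true)  # roughly 2 * 1 / (2 + 1)
```

Because the formula counts overlapping pixels, it only makes sense on hard 0/1 labels (or probabilities, for the loss variant), which is why the model output has to be thresholded or argmax-ed first.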

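An important note on classes

For classification networks (segmentation can be seen as per-pixel classification), I think the number of classes falls into the following cases:

- Single class, corresponding to n_classes=1 in this project

- Binary classification, corresponding to n_classes=2

- Multi-class classification, corresponding to n_classes >= 3

- Multi-label, i.e. some pixels can belong to several classes at once. This project probably does not support it as is; you would need to modify the loss function and other parts yourself.

I'll focus on the first two. Single class and binary classification are essentially equivalent, but their implementations differ in many ways. Multi-class is just the binary case generalized to more dimensions.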

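Single class means making one binary decision about the class. Suppose we want to segment cars from an image: we only care whether a pixel is a car pixel. For each pixel the network outputs a single value, which the sigmoid function converts into a probability. By default, a probability < 0.5 means the pixel is not a car pixel, and otherwise it is.

If we treat it as a binary classification problem instead, we are really defining two classes, non-car and car. For each pixel the network outputs a 2-dimensional vector, which softmax converts into probabilities of the form $[0.3, 0.7]$: 0.3 is the probability that the pixel is non-car, and 0.7 the probability that it is car. We usually take the class with the highest probability as the prediction. Since softmax preserves the ordering of its inputs, the final result is often obtained by taking the index of the maximum along the class dimension directly, without applying softmax.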
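Both shortcuts can be checked numerically: the argmax of the raw scores equals the argmax of the softmax probabilities, and thresholding a sigmoid at 0.5 is the same as thresholding the raw score at 0. The helpers below are plain-Python sketches written for this illustration, not library functions.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def argmax(values):
    return max(range(len(values)), key=lambda i: values[i])


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


logits = [0.1, 0.7]      # raw two-class scores for one pixel
probs = softmax(logits)  # probabilities that sum to 1

same_class = argmax(logits) == argmax(probs)
```

This is why inference code can skip the activation when only the hard label is needed, while the loss and any probability-based metric still require it.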

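In implementation, besides the difference between sigmoid and softmax activation, mask_true also takes different forms. In the single-class case it usually has shape $[B, H, W]$ with a 0/1 value per pixel. In the binary (or multi-class) case it is converted to one-hot form with shape $[B, Class, H, W]$, where each pixel along the class dimension looks like $[0, 1]$ or $[1, 0]$, indicating class 1 or class 0.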
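The conversion between integer labels and one-hot form can be sketched on a tiny mask. The example assumes integer labels of shape [B, H, W] and uses the same F.one_hot plus permute pattern that appears in the project's training and validation code; the 2x2 mask values are made up.

```python
import torch
import torch.nn.functional as F

# Integer labels, shape [B=1, H=2, W=2]
mask = torch.tensor([[[0, 1],
                      [1, 1]]])

one_hot = F.one_hot(mask, num_classes=2)       # [B, H, W, Class]
one_hot = one_hot.permute(0, 3, 1, 2).float()  # [B, Class, H, W]

# argmax over the class dimension recovers the integer labels.
recovered = one_hot.argmax(dim=1)              # [B, H, W]
```

F.one_hot appends the class dimension last, so the permute is needed to move it next to the batch dimension, where the convolutional output keeps its classes.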

Experience with custom networks

Module walkthrough for this project

Take the double-convolution module defined in /unet/unet_parts.py as an example:

    class DoubleConv(nn.Module):
        """(convolution => [BN] => ReLU) * 2"""

        def __init__(self, in_channels, out_channels, mid_channels=None):
            super().__init__()
            if not mid_channels:
                mid_channels = out_channels
            self.double_conv = nn.Sequential(
                nn.Conv2d(in_channels, mid_channels, kernel_size=3, padding=1, bias=False),
                nn.BatchNorm2d(mid_channels),
                nn.ReLU(inplace=True),
                nn.Conv2d(mid_channels, out_channels, kernel_size=3, padding=1, bias=False),
                nn.BatchNorm2d(out_channels),
                nn.ReLU(inplace=True)
            )

        def forward(self, x):
            return self.double_conv(x)

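As you can see, defining a module is really just wrapping a few operations together.

First, we need to know that the double convolution here is simply the sequence convolution + BN + ReLU repeated twice.

To implement it, we only need a few basic operations from torch.nn. This code uses nn.Sequential to chain the basic operations into a sequence, and forward simply passes the input through that sequence to perform the double convolution.

Of course, you can also apply the operations one by one in forward. Note, however, that in PyTorch every layer with learnable parameters, such as a convolution, must be instantiated separately; you cannot share one instance just because two convolutions have the same size (parameter-free operations such as ReLU can be reused freely). For this code, the rewrite looks like this: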

    class DoubleConv(nn.Module):
        """(convolution => [BN] => ReLU) * 2"""

        def __init__(self, in_channels, out_channels, mid_channels=None):
            super().__init__()
            if not mid_channels:
                mid_channels = out_channels
            # Layers with parameters get one instance each
            self.conv1 = nn.Conv2d(in_channels, mid_channels, kernel_size=3, padding=1, bias=False)
            self.bn1 = nn.BatchNorm2d(mid_channels)
            self.conv2 = nn.Conv2d(mid_channels, out_channels, kernel_size=3, padding=1, bias=False)
            self.bn2 = nn.BatchNorm2d(out_channels)
            # ReLU has no parameters, so one instance is reused
            self.relu = nn.ReLU(inplace=True)

        def forward(self, x):
            x = self.conv1(x)
            x = self.bn1(x)
            x = self.relu(x)
            x = self.conv2(x)
            x = self.bn2(x)
            x = self.relu(x)
            return x
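The point about not sharing parameterized layers can be checked by counting parameters. This is a minimal sketch; SharedConv, SeparateConv, and n_params are hypothetical names introduced only for this comparison.

```python
import torch
import torch.nn as nn


class SharedConv(nn.Module):
    """Hypothetical: one conv object applied twice, so both steps share weights."""

    def __init__(self, channels):
        super().__init__()
        self.conv = nn.Conv2d(channels, channels, kernel_size=3, padding=1, bias=False)

    def forward(self, x):
        return self.conv(self.conv(x))


class SeparateConv(nn.Module):
    """Hypothetical: two conv objects, each with its own weights."""

    def __init__(self, channels):
        super().__init__()
        self.conv1 = nn.Conv2d(channels, channels, kernel_size=3, padding=1, bias=False)
        self.conv2 = nn.Conv2d(channels, channels, kernel_size=3, padding=1, bias=False)

    def forward(self, x):
        return self.conv2(self.conv1(x))


def n_params(module):
    """Total number of learnable values in a module."""
    return sum(p.numel() for p in module.parameters())


shared, separate = SharedConv(4), SeparateConv(4)
x = torch.randn(1, 4, 8, 8)
```

Both modules run and return the same output shape, but the shared version holds only one 4x4x3x3 kernel while the separate version holds two. Reusing an instance therefore silently ties the weights, which is rarely what a two-layer block intends.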

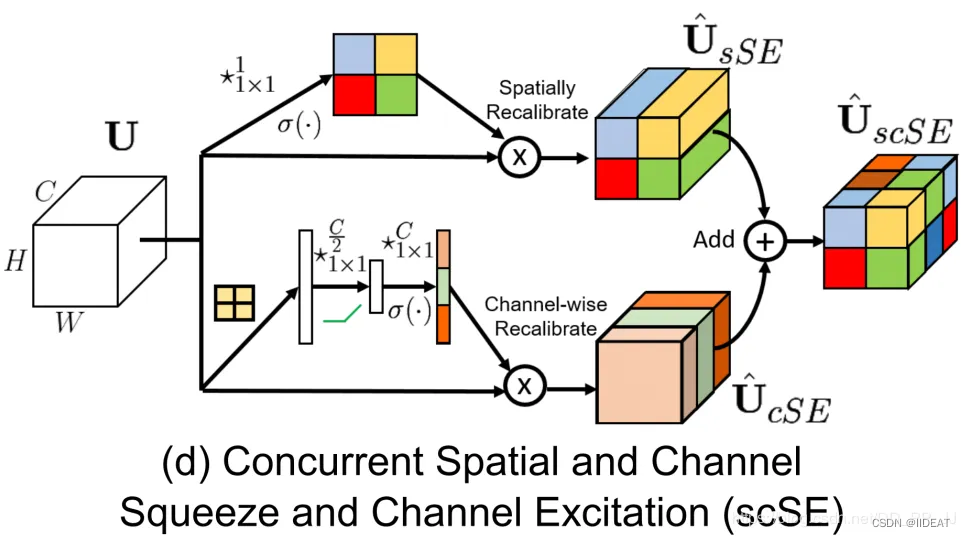

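Example: reproducing the scSE module

scSE paper: Concurrent Spatial and Channel 'Squeeze & Excitation' in Fully Convolutional Networks

The scSE module is a commonly used attention mechanism in computer vision. To reproduce it, we first need to understand exactly how it works.

For an input of shape $C \times H \times W$, two branches separately compute spatial attention (sSE) and channel attention (cSE). Each is applied to the input, and the two results are added to form the final output.

Spatial attention uses a $1 \times 1$ convolution to reduce the number of channels to 1, yielding a spatial map of shape $1 \times H \times W$. A sigmoid then maps it to the range 0 to 1 to serve as weights, and multiplying the input by these weights gives the spatially attended result. Following this principle, all we need is a $1 \times 1$ convolution and a sigmoid, both of which PyTorch provides, so the module can be reproduced as follows: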

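    import torch.nn as nn

    class sSE(nn.Module):
        def __init__(self, in_channels):
            super().__init__()
            # 1x1 convolution squeezes C channels into a single spatial map
            self.Conv1x1 = nn.Conv2d(in_channels, 1, kernel_size=1, bias=False)
            self.norm = nn.Sigmoid()

        def forward(self, U):
            q = self.Conv1x1(U)  # [B, C, H, W] -> [B, 1, H, W]
            q = self.norm(q)     # weights in (0, 1)
            return U * q         # broadcast over the channel dimension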

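Channel attention works on the same principle. In the paper, pooling reduces the input to shape $C \times 1 \times 1$, two fully connected layers process this information, a sigmoid produces per-channel weights, and these weights are multiplied with the original input. There are two implementation options here. The first follows the paper: flatten the spatial dimensions, average them, and compute the attention with fully connected layers:

    import torch.nn as nn
    import torch.nn.functional as F

    class cSE(nn.Module):
        def __init__(self, in_channels):
            super(cSE, self).__init__()
            self.linear1 = nn.Linear(in_channels, in_channels // 2)
            self.linear2 = nn.Linear(in_channels // 2, in_channels)
            self.norm = nn.Sigmoid()

        def forward(self, U):
            # Flatten H and W, then average: [B, C, H, W] -> [B, C]
            q = U.view(*(U.shape[:-2]), -1).mean(-1)
            q = F.relu(self.linear1(q), inplace=True)
            q = self.linear2(q)
            # Restore the spatial dimensions: [B, C] -> [B, C, 1, 1]
            q = q.unsqueeze(-1).unsqueeze(-1)
            q = self.norm(q)
            return U * q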

Alternatively, the fully connected layers can be replaced with pooling and $1 \times 1$ convolutions:

    class cSE(nn.Module):
        def __init__(self, in_channels):
            super().__init__()
            self.avgpool = nn.AdaptiveAvgPool2d(1)
            self.Conv_Squeeze = nn.Conv2d(in_channels, in_channels // 2, kernel_size=1, bias=False)
            self.Conv_Excitation = nn.Conv2d(in_channels // 2, in_channels, kernel_size=1, bias=False)
            self.norm = nn.Sigmoid()

        def forward(self, U):
            z = self.avgpool(U)          # [B, C, H, W] -> [B, C, 1, 1]
            z = self.Conv_Squeeze(z)     # [B, C, 1, 1] -> [B, C // 2, 1, 1]
            z = self.Conv_Excitation(z)  # [B, C // 2, 1, 1] -> [B, C, 1, 1]
            z = self.norm(z)
            return U * z.expand_as(U)
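The two branches can then be combined as the description above suggests, by applying each to the input and adding the results. The block below is a sketch under that assumption, with both branches written compactly as nn.Sequential; the class name SCSE and the test tensor sizes are illustrative choices.

```python
import torch
import torch.nn as nn


class SCSE(nn.Module):
    """Hypothetical combined block: spatial and channel branches, summed."""

    def __init__(self, in_channels):
        super().__init__()
        # Spatial branch: 1x1 conv down to one channel, then sigmoid.
        self.sse = nn.Sequential(
            nn.Conv2d(in_channels, 1, kernel_size=1, bias=False),
            nn.Sigmoid(),
        )
        # Channel branch: global pooling, two 1x1 convs, then sigmoid.
        self.cse = nn.Sequential(
            nn.AdaptiveAvgPool2d(1),
            nn.Conv2d(in_channels, in_channels // 2, kernel_size=1, bias=False),
            nn.Conv2d(in_channels // 2, in_channels, kernel_size=1, bias=False),
            nn.Sigmoid(),
        )

    def forward(self, U):
        # Each branch reweights the input; the two results are added.
        return U * self.sse(U) + U * self.cse(U)


x = torch.randn(2, 8, 16, 16)
y = SCSE(8)(x)  # same shape as the input
```

Since the output shape matches the input shape, such a block can be dropped in after any convolutional stage without changing the surrounding layers.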

Example: a dual-branch U-Net

Let's use the project's UNet code again and build a U-Net with two upsampling branches.

First, the original UNet code:

    class UNet(nn.Module):
        def __init__(self, n_channels, n_classes, bilinear=False):
            super(UNet, self).__init__()
            self.n_channels = n_channels
            self.n_classes = n_classes
            self.bilinear = bilinear

            self.inc = DoubleConv(n_channels, 64)
            self.down1 = Down(64, 128)
            self.down2 = Down(128, 256)
            self.down3 = Down(256, 512)
            factor = 2 if bilinear else 1
            self.down4 = Down(512, 1024 // factor)
            self.up1 = Up(1024, 512 // factor, bilinear)
            self.up2 = Up(512, 256 // factor, bilinear)
            self.up3 = Up(256, 128 // factor, bilinear)
            self.up4 = Up(128, 64, bilinear)
            self.outc = OutConv(64, n_classes)

        def forward(self, x):
            x1 = self.inc(x)
            x2 = self.down1(x1)
            x3 = self.down2(x2)
            x4 = self.down3(x3)
            x5 = self.down4(x4)
            x = self.up1(x5, x4)
            x = self.up2(x, x3)
            x = self.up3(x, x2)
            x = self.up4(x, x1)
            logits = self.outc(x)
            return logits

Adding a second upsampling branch simply means passing x5 through another set of Up modules. Here is the code first:

    class UNet(nn.Module):
        def __init__(self, n_channels, n_classes, bilinear=False):
            super(UNet, self).__init__()
            self.n_channels = n_channels
            self.n_classes = n_classes
            self.bilinear = bilinear

            self.inc = DoubleConv(n_channels, 64)
            self.down1 = Down(64, 128)
            self.down2 = Down(128, 256)
            self.down3 = Down(256, 512)
            factor = 2 if bilinear else 1
            self.down4 = Down(512, 1024 // factor)

            # First upsampling branch
            self.up1 = Up(1024, 512 // factor, bilinear)
            self.up2 = Up(512, 256 // factor, bilinear)
            self.up3 = Up(256, 128 // factor, bilinear)
            self.up4 = Up(128, 64, bilinear)

            # Second upsampling branch
            self.up11 = Up(1024, 512 // factor, bilinear)
            self.up22 = Up(512, 256 // factor, bilinear)
            self.up33 = Up(256, 128 // factor, bilinear)
            self.up44 = Up(128, 64, bilinear)

            self.outc1 = OutConv(64, n_classes)
            self.outc2 = OutConv(64, n_classes)

        def forward(self, x):
            x1 = self.inc(x)
            x2 = self.down1(x1)
            x3 = self.down2(x2)
            x4 = self.down3(x3)
            x5 = self.down4(x4)
            x55 = x5.clone()

            x = self.up1(x5, x4)
            x = self.up2(x, x3)
            x = self.up3(x, x2)
            x = self.up4(x, x1)
            logits1 = self.outc1(x)

            xx = self.up11(x55, x4)
            xx = self.up22(xx, x3)
            xx = self.up33(xx, x2)
            xx = self.up44(xx, x1)
            logits2 = self.outc2(xx)

            return [logits1, logits2]

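As you can see, there are three main changes.

First, __init__ defines four new Up modules and one additional OutConv module for the new upsampling branch.

Second, x5 is cloned into x55 as the input of the new branch. clone allocates new memory for x55, so modifying x55 in place does not change x5, which keeps the two branches independent. clone is also differentiable, so during backpropagation the gradients from both branches flow back and accumulate at x5 before continuing through the rest of the network.

Third, the new branch computes xx and logits2, and the model returns logits1 and logits2 together. In the subsequent loss computation, you can compute the loss for each output separately, add them, and call backward on the sum.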
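Summing the two losses and calling backward once can be sketched on a toy model. TwoHeadNet below is a hypothetical stand-in with one shared layer and two output heads, not the dual-branch U-Net itself, and using plain cross-entropy for both heads is an assumption made for illustration.

```python
import torch
import torch.nn as nn


class TwoHeadNet(nn.Module):
    """Hypothetical toy model: one shared layer feeding two output heads."""

    def __init__(self, n_classes=2):
        super().__init__()
        self.shared = nn.Conv2d(3, 4, kernel_size=3, padding=1)
        self.head1 = nn.Conv2d(4, n_classes, kernel_size=1)
        self.head2 = nn.Conv2d(4, n_classes, kernel_size=1)

    def forward(self, x):
        feat = self.shared(x)
        return [self.head1(feat), self.head2(feat)]


torch.manual_seed(0)
net = TwoHeadNet()
criterion = nn.CrossEntropyLoss()

images = torch.randn(2, 3, 8, 8)
true_masks = torch.randint(0, 2, (2, 8, 8))

logits1, logits2 = net(images)
# One loss per output, added together before a single backward pass.
loss = criterion(logits1, true_masks) + criterion(logits2, true_masks)
loss.backward()  # gradients from both heads accumulate in the shared layer
```

The two terms could also be weighted differently if one output matters more; equal weights are simply the most direct reading of "add them".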

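Tuning experience

I recommend recording the training and validation curves with TensorBoard or a similar tool, which makes later analysis easier.

Also, I only give general advice for keeping training healthy. Fine-tuning hyperparameters depends on your own dataset and needs hands-on practice. If you are chasing benchmark scores, try more things; techniques such as learning-rate warm-up and learning-rate scheduling are all worth looking into.

Choosing the optimizer and learning rate

As for the optimizer, unless you are chasing SOTA, I suggest training with Adam first. Adam is not very sensitive to the initial learning rate (I usually use 3e-4) and rarely causes trouble. You can then continue fine-tuning with SGD, or train with SGD from the start. For SGD I usually start at a learning rate of 0.1; if the loss fluctuates a lot, the learning rate is probably too large, so I divide it by 10 and try again.

I don't really recommend trying the various Adam and SGD variants; at least in my own experiments the gains were negligible.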

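Vanishing/exploding gradients

In theory, many structures in today's models already guard against vanishing or exploding gradients, so I suggest first checking the format and size of the inputs and outputs and the loss computation.

In addition, TensorBoard can record the gradients of every layer; save them and analyze which layer and which step went wrong.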
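A lightweight way to collect per-layer gradients is to read them from named_parameters() right after backward(). The sketch below uses a toy model and a made-up loss; grad_norms is a hypothetical helper, and the resulting values could then be written to TensorBoard or any other logger.

```python
import torch
import torch.nn as nn


def grad_norms(model):
    """Return {parameter name: L2 norm of its gradient} after backward()."""
    return {
        name: p.grad.norm().item()
        for name, p in model.named_parameters()
        if p.grad is not None
    }


torch.manual_seed(0)
model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 1))

loss = model(torch.randn(16, 4)).pow(2).mean()  # arbitrary toy loss
loss.backward()

norms = grad_norms(model)  # one entry per weight and bias tensor
```

Norms that are consistently near zero in the early layers point to vanishing gradients, while very large or non-finite values point to exploding gradients.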

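Loss fluctuates heavily

There are many possible causes. I still suggest first checking the format and size of the inputs and outputs and the loss computation. Also check whether the loss you record is the mean over all batches in an epoch or the value of a single batch.

Then see whether lowering the learning rate changes anything, and whether increasing the batch size does.

Too little data or too low a model capacity can also be the cause.

When you hit problems like this, it is best to build your own methodology. Start from first principles. For heavy loss fluctuation, first establish how your loss is computed, whether that computation is appropriate, and whether the curve is plotted per batch or on some other basis. Then analyze the possible causes: heavy loss fluctuation is ultimately an optimization problem, so either the direction is right but the step is too large, or the direction itself is wrong. Then analyze the causes of those causes, simplifying the code bit by bit to isolate the problem.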
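How much a curve appears to fluctuate depends heavily on what is plotted. The sketch below contrasts raw per-batch values with an epoch mean and a trailing moving average; the loss values are made up and the helper names are illustrative.

```python
def epoch_mean(batch_losses):
    """Mean loss over all batches of one epoch."""
    return sum(batch_losses) / len(batch_losses)


def moving_average(values, window):
    """Smooth a per-batch curve with a trailing window."""
    smoothed = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1): i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


batch_losses = [0.9, 0.3, 0.8, 0.2, 0.7, 0.1]  # made-up per-batch values

mean_loss = epoch_mean(batch_losses)           # one point per epoch
smoothed = moving_average(batch_losses, window=2)
```

A per-batch curve that swings widely can still correspond to a steadily decreasing epoch mean, so it is worth settling which of the two is being logged before adjusting the learning rate or batch size.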

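A Practical Guide to Deep Learning Projects

This article covers the full workflow of a deep learning project, from network definition to training and validation, including the U-Net architecture, data loading, optimizer settings, loss functions, and tuning experience. It highlights the key points in customizing networks, computing the loss, and training, and offers practical tuning advice.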
