#!/usr/bin/python

# -*- coding:utf8 -*-

import torch

import matplotlib.pyplot as plt

def relu(z):
    # Element-wise max(z, 0)
    return torch.maximum(z, torch.as_tensor(0.))

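# Leaky ReLU: ReLU with a small non-zero slope for negative inputs
def leaky_relu(z, negative_slope=0.1):
    # Cast the boolean masks to float32 so they select the positive and
    # non-positive parts of z. (torch.can_cast only returns a Python bool
    # and does not convert the mask.)
    a1 = (z > 0).to(torch.float32) * z
    a2 = (z <= 0).to(torch.float32) * (negative_slope * z)
    return a1 + a2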

z=torch.linspace(-10,10,10000)

plt.figure()

plt.plot(z.tolist(),relu(z).tolist(),color='#e4007f',label='ReLU Function')

plt.plot(z.tolist(),leaky_relu(z).tolist(),color='#f19ec2',linestyle='--',label='Leaky_ReLU')

ax=plt.gca()

ax.spines['top'].set_color('none')

ax.spines['right'].set_color('none')

ax.spines['left'].set_position(('data',0))

ax.spines['bottom'].set_position(('data',0))

plt.legend(loc='upper left',fontsize='large')

plt.savefig('fw-relu-leakyrelu.pdf')

plt.show()

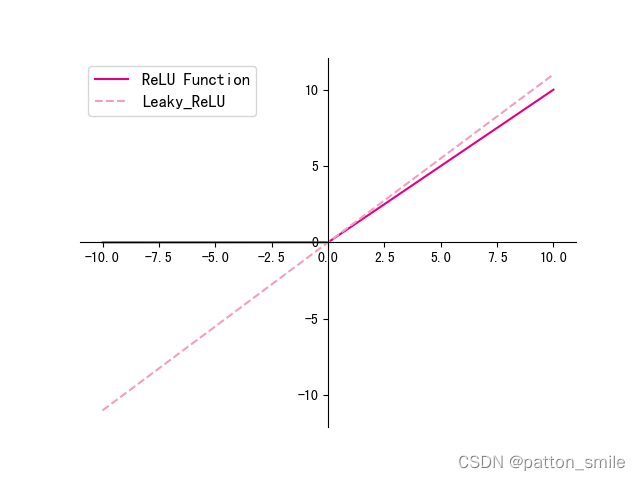

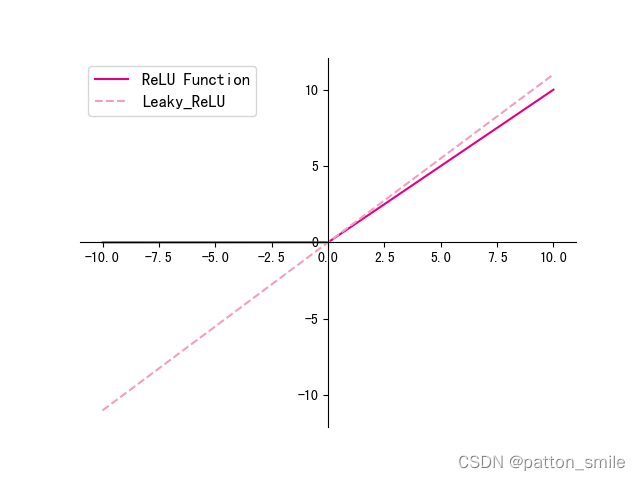

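This code example shows how to implement the ReLU and LeakyReLU activation functions in Python with the torch library and plot the two curves side by side with matplotlib. ReLU outputs zero over the negative region, whereas LeakyReLU keeps a small slope there (0.1 by default in this code), which mitigates ReLU's "dying neuron" problem.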
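To make the "dying neuron" point concrete, here is a minimal sketch in plain Python (no torch, so it runs on its own) that compares the derivatives of the two functions. The helper names `relu_grad` and `leaky_relu_grad` are illustrative and not part of the original script, and the derivative at exactly 0 is taken to be the negative-side value by convention.

```python
def relu_grad(z):
    """Derivative of ReLU at z (0 for z <= 0, by convention at z == 0)."""
    return 1.0 if z > 0 else 0.0


def leaky_relu_grad(z, negative_slope=0.1):
    """Derivative of LeakyReLU at z (negative_slope for z <= 0)."""
    return 1.0 if z > 0 else negative_slope


inputs = [-2.0, -0.5, 0.5, 2.0]
relu_grads = [relu_grad(z) for z in inputs]
leaky_grads = [leaky_relu_grad(z) for z in inputs]

print(relu_grads)   # [0.0, 0.0, 1.0, 1.0]
print(leaky_grads)  # [0.1, 0.1, 1.0, 1.0]
```

For negative inputs the ReLU derivative is exactly zero, so a unit whose pre-activation stays negative receives no gradient signal, while LeakyReLU still passes a gradient scaled by `negative_slope`.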
