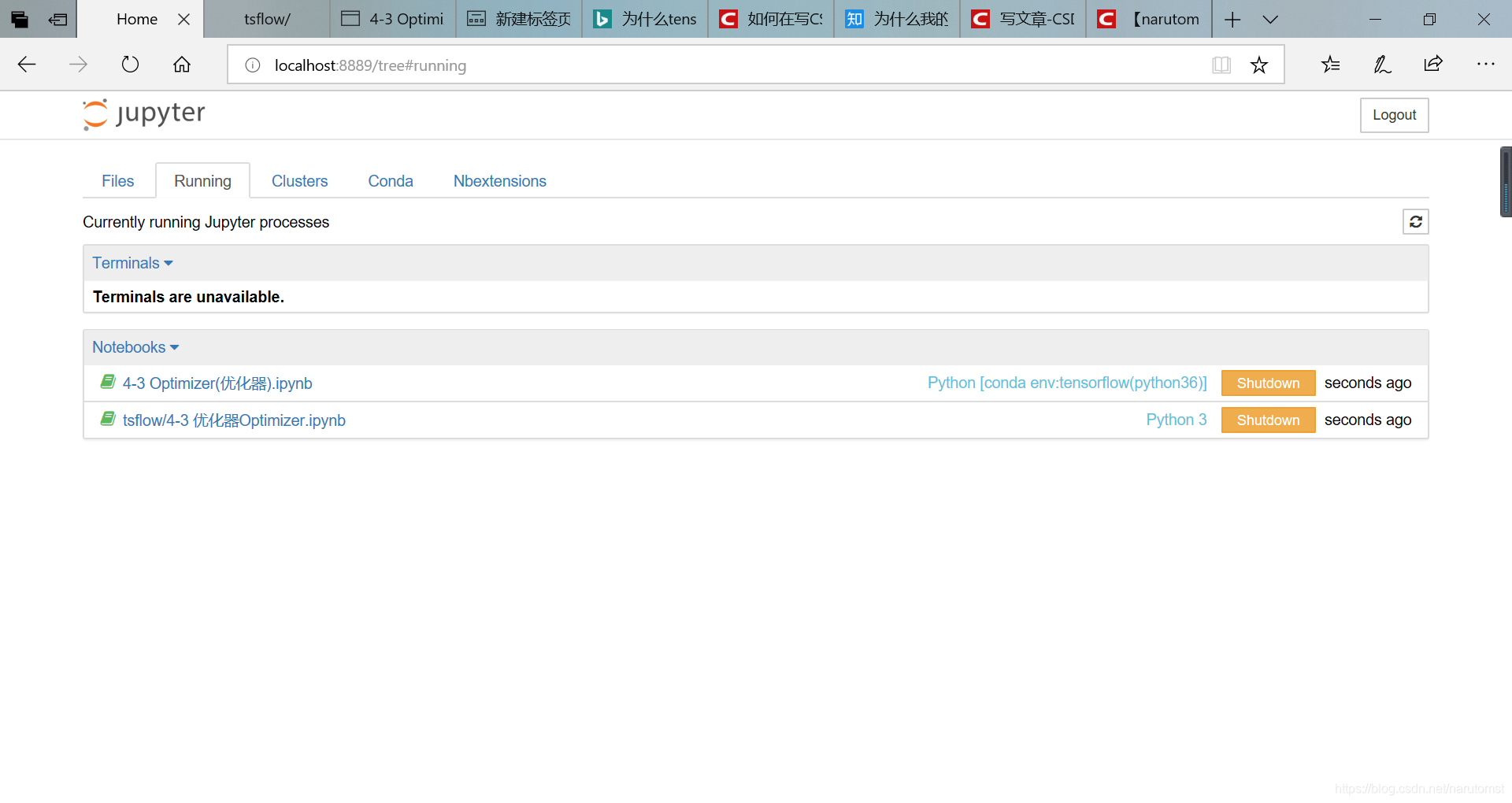

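1. When GPU resources are tight, run only one *.ipynb notebook at a time. "Shutdown" every other .ipynb file that is still in the Running state; this makes an InternalError much less likely.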

2. Sometimes the CPU finishes sooner and the GPU shows no speed advantage. For example, the code below ran in under 2 min with tensorflow but took more than 3 min with tensorflow-gpu.

    # Training loop only: x, y, init, train_step, accuracy, mnist, batch_size
    # and n_batch must already be defined.
    with tf.Session() as sess:
        sess.run(init)
        for epoch in range(201):
            for batch in range(n_batch):
                batch_xs, batch_ys = mnist.train.next_batch(batch_size)
                sess.run(train_step, feed_dict={x: batch_xs, y: batch_ys})
            # Evaluate on the test set once per epoch
            acc = sess.run(accuracy, feed_dict={x: mnist.test.images, y: mnist.test.labels})
            print("Iter " + str(epoch) + ", Testing Accuracy " + str(acc))
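The run times quoted in this post are wall-clock durations. A minimal sketch of one way to measure them reproducibly is shown here; the `stopwatch` helper and the `timings` dictionary are hypothetical names introduced for illustration, not part of the original code, and the summation is only a stand-in workload.

```python
import time
from contextlib import contextmanager


@contextmanager
def stopwatch(label, results):
    """Store the wall-clock duration of the enclosed block in results[label]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        results[label] = time.perf_counter() - start


timings = {}
with stopwatch("demo", timings):
    # Stand-in workload (assumption); put the `with tf.Session() ...` loop here instead.
    total = sum(i * i for i in range(100_000))

print("demo took {:.3f} s".format(timings["demo"]))
```

Running the same notebook once under tensorflow and once under tensorflow-gpu, each wrapped this way, gives two numbers that can be compared directly.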

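3. For heavier neural network computation the GPU is faster. The code below defines a multi-layer neural network: it ran for more than 4 min with tensorflow but for less than 1 min with tensorflow-gpu.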

    # Written against the TensorFlow 1.x API (tf.placeholder, tf.Session)
    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data

    # Load the data
    mnist = input_data.read_data_sets("MNIST_data", one_hot=True)

    # Size of each batch
    batch_size = 100
    # Total number of batches
    n_batch = mnist.train.num_examples // batch_size

    # Placeholders: inputs, labels, and the dropout keep probability
    x = tf.placeholder(tf.float32, [None, 784])
    y = tf.placeholder(tf.float32, [None, 10])
    keep_prob = tf.placeholder(tf.float32)

    # A less trivial network: the network in 4-1 was 784-10, while this
    # section's network is 784-800-400-100-10, adding three hidden layers
    W1 = tf.Variable(tf.truncated_normal([784, 800], stddev=0.1))
    b1 = tf.Variable(tf.zeros([800]) + 0.1)
    L1 = tf.nn.tanh(tf.matmul(x, W1) + b1)
    L1_drop = tf.nn.dropout(L1, keep_prob)

    W2 = tf.Variable(tf.truncated_normal([800, 400], stddev=0.1))
    b2 = tf.Variable(tf.zeros([400]) + 0.1)
    L2 = tf.nn.tanh(tf.matmul(L1_drop, W2) + b2)
    L2_drop = tf.nn.dropout(L2, keep_prob)

    W3 = tf.Variable(tf.truncated_normal([400, 100], stddev=0.1))
    b3 = tf.Variable(tf.zeros([100]) + 0.1)
    L3 = tf.nn.tanh(tf.matmul(L2_drop, W3) + b3)
    L3_drop = tf.nn.dropout(L3, keep_prob)

    W4 = tf.Variable(tf.truncated_normal([100, 10], stddev=0.1))
    b4 = tf.Variable(tf.zeros([10]) + 0.1)
    # Keep the raw logits separate: softmax_cross_entropy_with_logits_v2
    # applies softmax itself, so it must not be fed softmax output
    logits = tf.matmul(L3_drop, W4) + b4
    prediction = tf.nn.softmax(logits)

    # Earlier model, kept commented out:
    #W = tf.Variable(tf.zeros([784,10]))
    #b = tf.Variable(tf.zeros([10]))
    #prediction = tf.nn.softmax(tf.matmul(x,W1) + b1)

    # Cost function: cross-entropy in place of the earlier quadratic cost
    # (mean squared error)
    #loss = tf.reduce_mean(tf.square(y-prediction))
    loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits_v2(labels=y, logits=logits))

    # Train with gradient descent
    train_step = tf.train.GradientDescentOptimizer(0.205).minimize(loss)

    # Initialize the variables
    init = tf.global_variables_initializer()

    # Results go into a boolean vector; argmax() returns the index of the
    # largest element along the given axis
    correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(prediction, 1))

    # Compute the accuracy
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

    with tf.Session() as sess:
        sess.run(init)  # run the initializer
        for epoch in range(21):
            for batch in range(n_batch):
                batch_xs, batch_ys = mnist.train.next_batch(batch_size)
                sess.run(train_step, feed_dict={x: batch_xs, y: batch_ys, keep_prob: 0.7})
            # Dropout is disabled (keep_prob 1.0) when measuring accuracy
            test_acc = sess.run(accuracy, feed_dict={x: mnist.test.images, y: mnist.test.labels, keep_prob: 1.0})
            train_acc = sess.run(accuracy, feed_dict={x: mnist.train.images, y: mnist.train.labels, keep_prob: 1.0})
            print("Iter " + str(epoch) + ", Testing Accuracy " + str(test_acc) + ", Training Accuracy " + str(train_acc))
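To put the two network shapes mentioned above (784-10 and 784-800-400-100-10) side by side, the sketch below counts their trainable parameters. The helper name `dense_param_count` is hypothetical and introduced only for this illustration. A likely explanation for the timings, though not one tested in this post, is that the larger network does enough arithmetic per step for the GPU to outweigh its transfer and launch overhead.

```python
def dense_param_count(layer_sizes):
    """Weights plus biases of a fully connected network with these layer widths."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


single_layer = dense_param_count([784, 10])
multi_layer = dense_param_count([784, 800, 400, 100, 10])

print(single_layer)                       # 7850
print(multi_layer)                        # 989510
print(round(multi_layer / single_layer))  # 126
```

The multi-layer network has roughly 126 times as many parameters, so each training step involves far larger matrix multiplications.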

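This post compares GPU and CPU performance on different tasks. The GPU shows a clear advantage for heavier neural network computation, while the CPU can be faster in some scenarios. The multi-layer network example illustrates the GPU speed-up.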
