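Background:

The previous article presented the full syllabus for the audio system development course, along with a detailed introduction to the series.

https://mp.weixin.qq.com/s/pQr-HrW0EUoi5QHTMOGEBQ

Today we formally start the audio series with the basics: the core attributes of the audio system.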

## FORMAT and CHANNEL

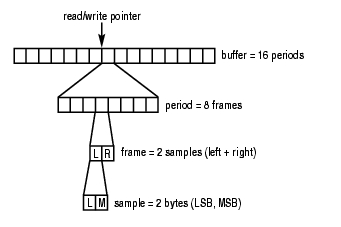

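An audio PCM buffer is organized in the following units:

period: one period holds multiple frames.

frame: one frame holds one or more samples captured at the same instant. When several ADCs/DACs capture or convert samples simultaneously, the samples processed at that instant make up one frame. Typically, a device with N channels has N samples per frame.

sample: each value obtained by sampling is one sample. It may span several bytes, be big-endian or little-endian, floating-point or integer, signed or unsigned.

Channel: the number of channels, e.g. MONO (a single channel) or STEREO (left and right channels).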

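Frame size:

frame_size = sample_size * channels

sample_size: the number of bytes per sample for the given format, e.g. 2 bytes for PCM_16_BIT

channels: the channel count, e.g. 2 for left and right channels

At a sample rate of 44100 Hz, the amount of data per second is:

bytes per second = 44100 x (PCM_16_BIT x channels) = 44100 x (2 x 2) = 44100 x 4 = 176400 bytes ≈ 172 KB (taking 1 KB = 1024 bytes)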
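The arithmetic above can be sketched in a few lines of C. This is only an illustrative sketch: the function names `frame_size_bytes` and `bytes_per_second` are made up for this example and are not part of any Android header.

```c
#include <stddef.h>

/* Bytes occupied by one frame: bytes per sample times channel count.
 * (Hypothetical helper, named for this example only.) */
size_t frame_size_bytes(size_t bytes_per_sample, unsigned channels) {
    return bytes_per_sample * channels;
}

/* Bytes of PCM data produced per second at the given sample rate. */
size_t bytes_per_second(unsigned sample_rate_hz,
                        size_t bytes_per_sample,
                        unsigned channels) {
    return (size_t)sample_rate_hz * frame_size_bytes(bytes_per_sample, channels);
}
```

For 16-bit stereo at 44100 Hz, `bytes_per_second(44100, 2, 2)` gives the 176400 bytes computed above.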

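In the audio framework the most commonly used format is AUDIO_FORMAT_PCM. PCM itself comes in several sample widths; the most common are AUDIO_FORMAT_PCM_16_BIT and AUDIO_FORMAT_PCM_32_BIT, i.e. 16-bit and 32-bit audio.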

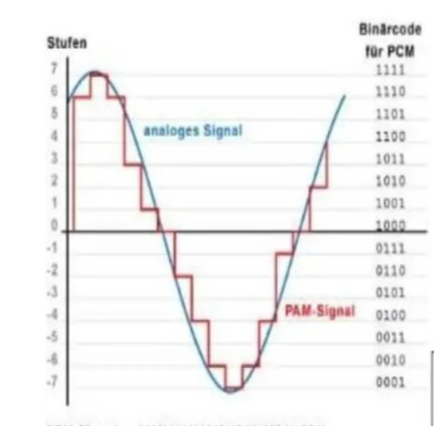

Converting an analog signal into a PCM digital signal

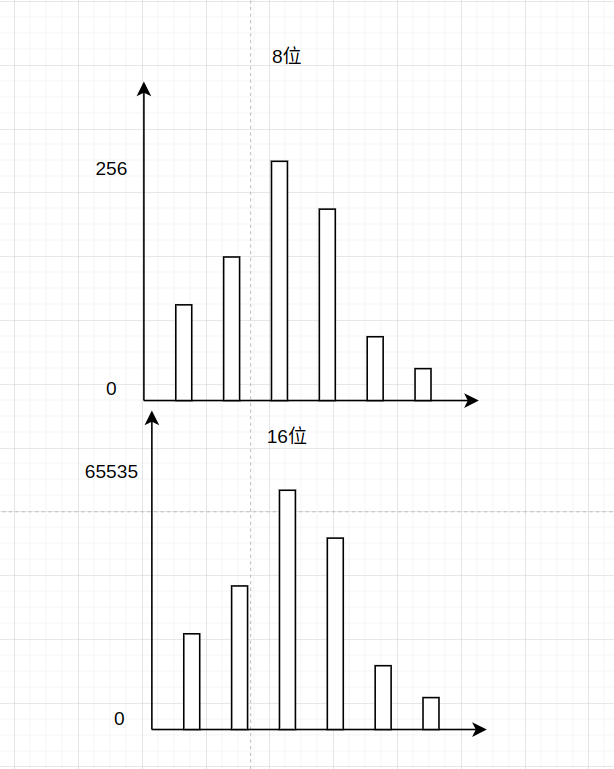

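Comparing 8-bit and 16-bit

The sampling precision is completely different: 16 bits give 65536 quantization levels, which is clearly finer than the 256 levels of 8 bits.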
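The number of distinct values a sample can take follows directly from its bit width (2 to the power of the bit count). A minimal sketch, assuming bit widths below 64; `quantization_levels` is a name invented for this example:

```c
#include <stdint.h>

/* Number of distinct values representable with `bits` bits.
 * Assumes bits < 64 so the shift is well defined. */
uint64_t quantization_levels(unsigned bits) {
    return (uint64_t)1 << bits;
}
```

So `quantization_levels(8)` is 256 and `quantization_levels(16)` is 65536.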

typedef enum {

AUDIO_FORMAT_INVALID = 0xFFFFFFFFu,

AUDIO_FORMAT_DEFAULT = 0,

AUDIO_FORMAT_PCM = 0x00000000u,

AUDIO_FORMAT_MP3 = 0x01000000u,

AUDIO_FORMAT_AMR_NB = 0x02000000u,

AUDIO_FORMAT_AMR_WB = 0x03000000u,

AUDIO_FORMAT_AAC = 0x04000000u,

AUDIO_FORMAT_HE_AAC_V1 = 0x05000000u,

AUDIO_FORMAT_HE_AAC_V2 = 0x06000000u,

AUDIO_FORMAT_VORBIS = 0x07000000u,

AUDIO_FORMAT_OPUS = 0x08000000u,

AUDIO_FORMAT_AC3 = 0x09000000u,

AUDIO_FORMAT_E_AC3 = 0x0A000000u,

AUDIO_FORMAT_DTS = 0x0B000000u,

AUDIO_FORMAT_DTS_HD = 0x0C000000u,

AUDIO_FORMAT_IEC61937 = 0x0D000000u,

AUDIO_FORMAT_DOLBY_TRUEHD = 0x0E000000u,

AUDIO_FORMAT_EVRC = 0x10000000u,

AUDIO_FORMAT_EVRCB = 0x11000000u,

AUDIO_FORMAT_EVRCWB = 0x12000000u,

AUDIO_FORMAT_EVRCNW = 0x13000000u,

AUDIO_FORMAT_AAC_ADIF = 0x14000000u,

AUDIO_FORMAT_WMA = 0x15000000u,

AUDIO_FORMAT_WMA_PRO = 0x16000000u,

AUDIO_FORMAT_AMR_WB_PLUS = 0x17000000u,

AUDIO_FORMAT_MP2 = 0x18000000u,

AUDIO_FORMAT_QCELP = 0x19000000u,

AUDIO_FORMAT_DSD = 0x1A000000u,

AUDIO_FORMAT_FLAC = 0x1B000000u,

AUDIO_FORMAT_ALAC = 0x1C000000u,

AUDIO_FORMAT_APE = 0x1D000000u,

AUDIO_FORMAT_AAC_ADTS = 0x1E000000u,

AUDIO_FORMAT_SBC = 0x1F000000u,

AUDIO_FORMAT_APTX = 0x20000000u,

AUDIO_FORMAT_APTX_HD = 0x21000000u,

AUDIO_FORMAT_AC4 = 0x22000000u,

AUDIO_FORMAT_LDAC = 0x23000000u,

AUDIO_FORMAT_MAT = 0x24000000u,

AUDIO_FORMAT_AAC_LATM = 0x25000000u,

AUDIO_FORMAT_CELT = 0x26000000u,

AUDIO_FORMAT_APTX_ADAPTIVE = 0x27000000u,

AUDIO_FORMAT_LHDC = 0x28000000u,

AUDIO_FORMAT_LHDC_LL = 0x29000000u,

AUDIO_FORMAT_APTX_TWSP = 0x2A000000u,

AUDIO_FORMAT_MAIN_MASK = 0xFF000000u,

AUDIO_FORMAT_SUB_MASK = 0x00FFFFFFu,

/* Subformats */

AUDIO_FORMAT_PCM_SUB_16_BIT = 0x1u,

AUDIO_FORMAT_PCM_SUB_8_BIT = 0x2u,

AUDIO_FORMAT_PCM_SUB_32_BIT = 0x3u,

AUDIO_FORMAT_PCM_SUB_8_24_BIT = 0x4u,

AUDIO_FORMAT_PCM_SUB_FLOAT = 0x5u,

AUDIO_FORMAT_PCM_SUB_24_BIT_PACKED = 0x6u,

AUDIO_FORMAT_MP3_SUB_NONE = 0x0u,

AUDIO_FORMAT_AMR_SUB_NONE = 0x0u,

AUDIO_FORMAT_AAC_SUB_MAIN = 0x1u,

AUDIO_FORMAT_AAC_SUB_LC = 0x2u,

AUDIO_FORMAT_AAC_SUB_SSR = 0x4u,

AUDIO_FORMAT_AAC_SUB_LTP = 0x8u,

AUDIO_FORMAT_AAC_SUB_HE_V1 = 0x10u,

AUDIO_FORMAT_AAC_SUB_SCALABLE = 0x20u,

AUDIO_FORMAT_AAC_SUB_ERLC = 0x40u,

AUDIO_FORMAT_AAC_SUB_LD = 0x80u,

AUDIO_FORMAT_AAC_SUB_HE_V2 = 0x100u,

AUDIO_FORMAT_AAC_SUB_ELD = 0x200u,

AUDIO_FORMAT_AAC_SUB_XHE = 0x300u,

AUDIO_FORMAT_VORBIS_SUB_NONE = 0x0u,

AUDIO_FORMAT_E_AC3_SUB_JOC = 0x1u,

AUDIO_FORMAT_MAT_SUB_1_0 = 0x1u,

AUDIO_FORMAT_MAT_SUB_2_0 = 0x2u,

AUDIO_FORMAT_MAT_SUB_2_1 = 0x3u,

/* Aliases */

AUDIO_FORMAT_PCM_16_BIT = 0x1u, // (PCM | PCM_SUB_16_BIT)

AUDIO_FORMAT_PCM_8_BIT = 0x2u, // (PCM | PCM_SUB_8_BIT)

AUDIO_FORMAT_PCM_32_BIT = 0x3u, // (PCM | PCM_SUB_32_BIT)

AUDIO_FORMAT_PCM_8_24_BIT = 0x4u, // (PCM | PCM_SUB_8_24_BIT)

AUDIO_FORMAT_PCM_FLOAT = 0x5u, // (PCM | PCM_SUB_FLOAT)

AUDIO_FORMAT_PCM_24_BIT_PACKED = 0x6u, // (PCM | PCM_SUB_24_BIT_PACKED)

AUDIO_FORMAT_AAC_MAIN = 0x4000001u, // (AAC | AAC_SUB_MAIN)

AUDIO_FORMAT_AAC_LC = 0x4000002u, // (AAC | AAC_SUB_LC)

AUDIO_FORMAT_AAC_SSR = 0x4000004u, // (AAC | AAC_SUB_SSR)

AUDIO_FORMAT_AAC_LTP = 0x4000008u, // (AAC | AAC_SUB_LTP)

AUDIO_FORMAT_AAC_HE_V1 = 0x4000010u, // (AAC | AAC_SUB_HE_V1)

AUDIO_FORMAT_AAC_SCALABLE = 0x4000020u, // (AAC | AAC_SUB_SCALABLE)

AUDIO_FORMAT_AAC_ERLC = 0x4000040u, // (AAC | AAC_SUB_ERLC)

AUDIO_FORMAT_AAC_LD = 0x4000080u, // (AAC | AAC_SUB_LD)

AUDIO_FORMAT_AAC_HE_V2 = 0x4000100u, // (AAC | AAC_SUB_HE_V2)

AUDIO_FORMAT_AAC_ELD = 0x4000200u, // (AAC | AAC_SUB_ELD)

AUDIO_FORMAT_AAC_XHE = 0x4000300u, // (AAC | AAC_SUB_XHE)

AUDIO_FORMAT_AAC_ADTS_MAIN = 0x1e000001u, // (AAC_ADTS | AAC_SUB_MAIN)

AUDIO_FORMAT_AAC_ADTS_LC = 0x1e000002u, // (AAC_ADTS | AAC_SUB_LC)

AUDIO_FORMAT_AAC_ADTS_SSR = 0x1e000004u, // (AAC_ADTS | AAC_SUB_SSR)

AUDIO_FORMAT_AAC_ADTS_LTP = 0x1e000008u, // (AAC_ADTS | AAC_SUB_LTP)

AUDIO_FORMAT_AAC_ADTS_HE_V1 = 0x1e000010u, // (AAC_ADTS | AAC_SUB_HE_V1)

AUDIO_FORMAT_AAC_ADTS_SCALABLE = 0x1e000020u, // (AAC_ADTS | AAC_SUB_SCALABLE)

AUDIO_FORMAT_AAC_ADTS_ERLC = 0x1e000040u, // (AAC_ADTS | AAC_SUB_ERLC)

AUDIO_FORMAT_AAC_ADTS_LD = 0x1e000080u, // (AAC_ADTS | AAC_SUB_LD)

AUDIO_FORMAT_AAC_ADTS_HE_V2 = 0x1e000100u, // (AAC_ADTS | AAC_SUB_HE_V2)

AUDIO_FORMAT_AAC_ADTS_ELD = 0x1e000200u, // (AAC_ADTS | AAC_SUB_ELD)

AUDIO_FORMAT_AAC_ADTS_XHE = 0x1e000300u, // (AAC_ADTS | AAC_SUB_XHE)

AUDIO_FORMAT_AAC_LATM_LC = 0x25000002u, // (AAC_LATM | AAC_SUB_LC)

AUDIO_FORMAT_AAC_LATM_HE_V1 = 0x25000010u, // (AAC_LATM | AAC_SUB_HE_V1)

AUDIO_FORMAT_AAC_LATM_HE_V2 = 0x25000100u, // (AAC_LATM | AAC_SUB_HE_V2)

AUDIO_FORMAT_E_AC3_JOC = 0xA000001u, // (E_AC3 | E_AC3_SUB_JOC)

AUDIO_FORMAT_MAT_1_0 = 0x24000001u, // (MAT | MAT_SUB_1_0)

AUDIO_FORMAT_MAT_2_0 = 0x24000002u, // (MAT | MAT_SUB_2_0)

AUDIO_FORMAT_MAT_2_1 = 0x24000003u, // (MAT | MAT_SUB_2_1)

} audio_format_t;
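Each format value in the enum above is made of a main format in the top 8 bits and a sub-format in the low 24 bits. The sketch below shows how the two masks split a value and how the PCM sub-format determines the sample size. The constants are copied from the listing; the `demo_` names are hypothetical and are not the helpers that Android's own headers provide.

```c
#include <stddef.h>
#include <stdint.h>

/* Masks and the PCM main format, copied from the enum above. */
#define DEMO_FORMAT_MAIN_MASK 0xFF000000u
#define DEMO_FORMAT_SUB_MASK  0x00FFFFFFu
#define DEMO_FORMAT_PCM       0x00000000u

/* Top 8 bits: the codec family (PCM, MP3, AAC, ...). */
uint32_t demo_format_main(uint32_t format) {
    return format & DEMO_FORMAT_MAIN_MASK;
}

/* Low 24 bits: the variant within that family. */
uint32_t demo_format_sub(uint32_t format) {
    return format & DEMO_FORMAT_SUB_MASK;
}

/* Bytes per sample for linear PCM formats; 0 for anything else. */
size_t demo_pcm_bytes_per_sample(uint32_t format) {
    if (demo_format_main(format) != DEMO_FORMAT_PCM) {
        return 0; /* compressed formats have no fixed sample size */
    }
    switch (demo_format_sub(format)) {
    case 0x1u: return 2; /* PCM_SUB_16_BIT */
    case 0x2u: return 1; /* PCM_SUB_8_BIT */
    case 0x3u: return 4; /* PCM_SUB_32_BIT */
    case 0x4u: return 4; /* PCM_SUB_8_24_BIT: 24-bit value in a 32-bit word */
    case 0x5u: return 4; /* PCM_SUB_FLOAT: 32-bit float */
    case 0x6u: return 3; /* PCM_SUB_24_BIT_PACKED */
    default:   return 0; /* unknown or default sub-format */
    }
}
```

For example, AUDIO_FORMAT_AAC_LC (0x4000002) splits into the main format 0x04000000 (AAC) and the sub-format 0x2 (AAC_SUB_LC).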

enum {

FCC_2 = 2,

FCC_8 = 8,

};

enum {

AUDIO_CHANNEL_REPRESENTATION_POSITION = 0x0u,

AUDIO_CHANNEL_REPRESENTATION_INDEX = 0x2u,

AUDIO_CHANNEL_NONE = 0x0u,

AUDIO_CHANNEL_INVALID = 0xC0000000u,

AUDIO_CHANNEL_OUT_FRONT_LEFT = 0x1u,

AUDIO_CHANNEL_OUT_FRONT_RIGHT = 0x2u,

AUDIO_CHANNEL_OUT_FRONT_CENTER = 0x4u,

AUDIO_CHANNEL_OUT_LOW_FREQUENCY = 0x8u,

AUDIO_CHANNEL_OUT_BACK_LEFT = 0x10u,

AUDIO_CHANNEL_OUT_BACK_RIGHT = 0x20u,

AUDIO_CHANNEL_OUT_FRONT_LEFT_OF_CENTER = 0x40u,

AUDIO_CHANNEL_OUT_FRONT_RIGHT_OF_CENTER = 0x80u,

AUDIO_CHANNEL_OUT_BACK_CENTER = 0x100u,

AUDIO_CHANNEL_OUT_SIDE_LEFT = 0x200u,

AUDIO_CHANNEL_OUT_SIDE_RIGHT = 0x400u,

AUDIO_CHANNEL_OUT_TOP_CENTER = 0x800u,

AUDIO_CHANNEL_OUT_TOP_FRONT_LEFT = 0x1000u,

AUDIO_CHANNEL_OUT_TOP_FRONT_CENTER = 0x2000u,

AUDIO_CHANNEL_OUT_TOP_FRONT_RIGHT = 0x4000u,

AUDIO_CHANNEL_OUT_TOP_BACK_LEFT = 0x8000u,

AUDIO_CHANNEL_OUT_TOP_BACK_CENTER = 0x10000u,

AUDIO_CHANNEL_OUT_TOP_BACK_RIGHT = 0x20000u,

AUDIO_CHANNEL_OUT_TOP_SIDE_LEFT = 0x40000u,

AUDIO_CHANNEL_OUT_TOP_SIDE_RIGHT = 0x80000u,

AUDIO_CHANNEL_OUT_HAPTIC_A = 0x20000000u,

AUDIO_CHANNEL_OUT_HAPTIC_B = 0x10000000u,

AUDIO_CHANNEL_OUT_MONO = 0x1u, // OUT_FRONT_LEFT

AUDIO_CHANNEL_OUT_STEREO = 0x3u, // OUT_FRONT_LEFT | OUT_FRONT_RIGHT

AUDIO_CHANNEL_OUT_2POINT1 = 0xBu, // OUT_FRONT_LEFT | OUT_FRONT_RIGHT | OUT_LOW_FREQUENCY

AUDIO_CHANNEL_OUT_2POINT0POINT2 = 0xC0003u, // OUT_FRONT_LEFT | OUT_FRONT_RIGHT | OUT_TOP_SIDE_LEFT | OUT_TOP_SIDE_RIGHT

AUDIO_CHANNEL_OUT_2POINT1POINT2 = 0xC000Bu, // OUT_FRONT_LEFT | OUT_FRONT_RIGHT | OUT_TOP_SIDE_LEFT | OUT_TOP_SIDE_RIGHT | OUT_LOW_FREQUENCY

AUDIO_CHANNEL_OUT_3POINT0POINT2 = 0xC0007u, // OUT_FRONT_LEFT | OUT_FRONT_RIGHT | OUT_FRONT_CENTER | OUT_TOP_SIDE_LEFT | OUT_TOP_SIDE_RIGHT

AUDIO_CHANNEL_OUT_3POINT1POINT2 = 0xC000Fu, // OUT_FRONT_LEFT | OUT_FRONT_RIGHT | OUT_FRONT_CENTER | OUT_TOP_SIDE_LEFT | OUT_TOP_SIDE_RIGHT | OUT_LOW_FREQUENCY

AUDIO_CHANNEL_OUT_QUAD = 0x33u, // OUT_FRONT_LEFT | OUT_FRONT_RIGHT | OUT_BACK_LEFT | OUT_BACK_RIGHT

AUDIO_CHANNEL_OUT_QUAD_BACK = 0x33u, // OUT_QUAD

AUDIO_CHANNEL_OUT_QUAD_SIDE = 0x603u, // OUT_FRONT_LEFT | OUT_FRONT_RIGHT | OUT_SIDE_LEFT | OUT_SIDE_RIGHT

AUDIO_CHANNEL_OUT_SURROUND = 0x107u, // OUT_FRONT_LEFT | OUT_FRONT_RIGHT | OUT_FRONT_CENTER | OUT_BACK_CENTER

AUDIO_CHANNEL_OUT_PENTA = 0x37u, // OUT_QUAD | OUT_FRONT_CENTER

AUDIO_CHANNEL_OUT_5POINT1 = 0x3Fu, // OUT_FRONT_LEFT | OUT_FRONT_RIGHT | OUT_FRONT_CENTER | OUT_LOW_FREQUENCY | OUT_BACK_LEFT | OUT_BACK_RIGHT

AUDIO_CHANNEL_OUT_5POINT1_BACK = 0x3Fu, // OUT_5POINT1

AUDIO_CHANNEL_OUT_5POINT1_SIDE = 0x60Fu, // OUT_FRONT_LEFT | OUT_FRONT_RIGHT | OUT_FRONT_CENTER | OUT_LOW_FREQUENCY | OUT_SIDE_LEFT | OUT_SIDE_RIGHT

AUDIO_CHANNEL_OUT_5POINT1POINT2 = 0xC003Fu, // OUT_5POINT1 | OUT_TOP_SIDE_LEFT | OUT_TOP_SIDE_RIGHT

AUDIO_CHANNEL_OUT_5POINT1POINT4 = 0x2D03Fu, // OUT_5POINT1 | OUT_TOP_FRONT_LEFT | OUT_TOP_FRONT_RIGHT | OUT_TOP_BACK_LEFT | OUT_TOP_BACK_RIGHT

AUDIO_CHANNEL_OUT_6POINT1 = 0x13Fu, // OUT_FRONT_LEFT | OUT_FRONT_RIGHT | OUT_FRONT_CENTER | OUT_LOW_FREQUENCY | OUT_BACK_LEFT | OUT_BACK_RIGHT | OUT_BACK_CENTER

AUDIO_CHANNEL_OUT_7POINT1 = 0x63Fu, // OUT_FRONT_LEFT | OUT_FRONT_RIGHT | OUT_FRONT_CENTER | OUT_LOW_FREQUENCY | OUT_BACK_LEFT | OUT_BACK_RIGHT | OUT_SIDE_LEFT | OUT_SIDE_RIGHT

AUDIO_CHANNEL_OUT_7POINT1POINT2 = 0xC063Fu, // OUT_7POINT1 | OUT_TOP_SIDE_LEFT | OUT_TOP_SIDE_RIGHT

AUDIO_CHANNEL_OUT_7POINT1POINT4 = 0x2D63Fu, // OUT_7POINT1 | OUT_TOP_FRONT_LEFT | OUT_TOP_FRONT_RIGHT | OUT_TOP_BACK_LEFT | OUT_TOP_BACK_RIGHT

AUDIO_CHANNEL_OUT_MONO_HAPTIC_A = 0x20000001u,// OUT_FRONT_LEFT | OUT_HAPTIC_A

AUDIO_CHANNEL_OUT_STEREO_HAPTIC_A = 0x20000003u,// OUT_FRONT_LEFT | OUT_FRONT_RIGHT | OUT_HAPTIC_A

AUDIO_CHANNEL_OUT_HAPTIC_AB = 0x30000000u,// OUT_HAPTIC_A | OUT_HAPTIC_B

AUDIO_CHANNEL_OUT_MONO_HAPTIC_AB = 0x30000001u,// OUT_FRONT_LEFT | OUT_HAPTIC_A | OUT_HAPTIC_B

AUDIO_CHANNEL_OUT_STEREO_HAPTIC_AB = 0x30000003u,// OUT_FRONT_LEFT | OUT_FRONT_RIGHT | OUT_HAPTIC_A | OUT_HAPTIC_B

AUDIO_CHANNEL_IN_LEFT = 0x4u,

AUDIO_CHANNEL_IN_RIGHT = 0x8u,

AUDIO_CHANNEL_IN_FRONT = 0x10u,

AUDIO_CHANNEL_IN_BACK = 0x20u,

AUDIO_CHANNEL_IN_LEFT_PROCESSED = 0x40u,

AUDIO_CHANNEL_IN_RIGHT_PROCESSED = 0x80u,

AUDIO_CHANNEL_IN_FRONT_PROCESSED = 0x100u,

AUDIO_CHANNEL_IN_BACK_PROCESSED = 0x200u,

AUDIO_CHANNEL_IN_PRESSURE = 0x400u,

AUDIO_CHANNEL_IN_X_AXIS = 0x800u,

AUDIO_CHANNEL_IN_Y_AXIS = 0x1000u,

AUDIO_CHANNEL_IN_Z_AXIS = 0x2000u,

AUDIO_CHANNEL_IN_BACK_LEFT = 0x10000u,

AUDIO_CHANNEL_IN_BACK_RIGHT = 0x20000u,

AUDIO_CHANNEL_IN_CENTER = 0x40000u,

AUDIO_CHANNEL_IN_LOW_FREQUENCY = 0x100000u,

AUDIO_CHANNEL_IN_TOP_LEFT = 0x200000u,

AUDIO_CHANNEL_IN_TOP_RIGHT = 0x400000u,

AUDIO_CHANNEL_IN_VOICE_UPLINK = 0x4000u,

AUDIO_CHANNEL_IN_VOICE_DNLINK = 0x8000u,

AUDIO_CHANNEL_IN_MONO = 0x10u, // IN_FRONT

AUDIO_CHANNEL_IN_STEREO = 0xCu, // IN_LEFT | IN_RIGHT

AUDIO_CHANNEL_IN_FRONT_BACK = 0x30u, // IN_FRONT | IN_BACK

AUDIO_CHANNEL_IN_6 = 0xFCu, // IN_LEFT | IN_RIGHT | IN_FRONT | IN_BACK | IN_LEFT_PROCESSED | IN_RIGHT_PROCESSED

AUDIO_CHANNEL_IN_2POINT0POINT2 = 0x60000Cu, // IN_LEFT | IN_RIGHT | IN_TOP_LEFT | IN_TOP_RIGHT

AUDIO_CHANNEL_IN_2POINT1POINT2 = 0x70000Cu, // IN_LEFT | IN_RIGHT | IN_TOP_LEFT | IN_TOP_RIGHT | IN_LOW_FREQUENCY

AUDIO_CHANNEL_IN_3POINT0POINT2 = 0x64000Cu, // IN_LEFT | IN_CENTER | IN_RIGHT | IN_TOP_LEFT | IN_TOP_RIGHT

AUDIO_CHANNEL_IN_3POINT1POINT2 = 0x74000Cu, // IN_LEFT | IN_CENTER | IN_RIGHT | IN_TOP_LEFT | IN_TOP_RIGHT | IN_LOW_FREQUENCY

AUDIO_CHANNEL_IN_5POINT1 = 0x17000Cu, // IN_LEFT | IN_CENTER | IN_RIGHT | IN_BACK_LEFT | IN_BACK_RIGHT | IN_LOW_FREQUENCY

AUDIO_CHANNEL_IN_VOICE_UPLINK_MONO = 0x4010u, // IN_VOICE_UPLINK | IN_MONO

AUDIO_CHANNEL_IN_VOICE_DNLINK_MONO = 0x8010u, // IN_VOICE_DNLINK | IN_MONO

AUDIO_CHANNEL_IN_VOICE_CALL_MONO = 0xC010u, // IN_VOICE_UPLINK_MONO | IN_VOICE_DNLINK_MONO

AUDIO_CHANNEL_COUNT_MAX = 30u,

AUDIO_CHANNEL_INDEX_HDR = 0x80000000u, // REPRESENTATION_INDEX << COUNT_MAX

AUDIO_CHANNEL_INDEX_MASK_1 = 0x80000001u, // INDEX_HDR | (1 << 1) - 1

AUDIO_CHANNEL_INDEX_MASK_2 = 0x80000003u, // INDEX_HDR | (1 << 2) - 1

AUDIO_CHANNEL_INDEX_MASK_3 = 0x80000007u, // INDEX_HDR | (1 << 3) - 1

AUDIO_CHANNEL_INDEX_MASK_4 = 0x8000000Fu, // INDEX_HDR | (1 << 4) - 1

AUDIO_CHANNEL_INDEX_MASK_5 = 0x8000001Fu, // INDEX_HDR | (1 << 5) - 1

AUDIO_CHANNEL_INDEX_MASK_6 = 0x8000003Fu, // INDEX_HDR | (1 << 6) - 1

AUDIO_CHANNEL_INDEX_MASK_7 = 0x8000007Fu, // INDEX_HDR | (1 << 7) - 1

AUDIO_CHANNEL_INDEX_MASK_8 = 0x800000FFu, // INDEX_HDR | (1 << 8) - 1

AUDIO_CHANNEL_INDEX_MASK_9 = 0x800001FFu, // INDEX_HDR | (1 << 9) - 1

AUDIO_CHANNEL_INDEX_MASK_10 = 0x800003FFu, // INDEX_HDR | (1 << 10) - 1

AUDIO_CHANNEL_INDEX_MASK_11 = 0x800007FFu, // INDEX_HDR | (1 << 11) - 1

AUDIO_CHANNEL_INDEX_MASK_12 = 0x80000FFFu, // INDEX_HDR | (1 << 12) - 1

AUDIO_CHANNEL_INDEX_MASK_13 = 0x80001FFFu, // INDEX_HDR | (1 << 13) - 1

AUDIO_CHANNEL_INDEX_MASK_14 = 0x80003FFFu, // INDEX_HDR | (1 << 14) - 1

AUDIO_CHANNEL_INDEX_MASK_15 = 0x80007FFFu, // INDEX_HDR | (1 << 15) - 1

AUDIO_CHANNEL_INDEX_MASK_16 = 0x8000FFFFu, // INDEX_HDR | (1 << 16) - 1

AUDIO_CHANNEL_INDEX_MASK_17 = 0x8001FFFFu, // INDEX_HDR | (1 << 17) - 1

AUDIO_CHANNEL_INDEX_MASK_18 = 0x8003FFFFu, // INDEX_HDR | (1 << 18) - 1

AUDIO_CHANNEL_INDEX_MASK_19 = 0x8007FFFFu, // INDEX_HDR | (1 << 19) - 1

AUDIO_CHANNEL_INDEX_MASK_20 = 0x800FFFFFu, // INDEX_HDR | (1 << 20) - 1

AUDIO_CHANNEL_INDEX_MASK_21 = 0x801FFFFFu, // INDEX_HDR | (1 << 21) - 1

AUDIO_CHANNEL_INDEX_MASK_22 = 0x803FFFFFu, // INDEX_HDR | (1 << 22) - 1

AUDIO_CHANNEL_INDEX_MASK_23 = 0x807FFFFFu, // INDEX_HDR | (1 << 23) - 1

AUDIO_CHANNEL_INDEX_MASK_24 = 0x80FFFFFFu, // INDEX_HDR | (1 << 24) - 1

};
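A channel mask in the enum above carries its representation in the top two bits (shifted by AUDIO_CHANNEL_COUNT_MAX = 30) and one bit per channel below that. Counting the set bits in the low 30 bits therefore gives the channel count for both position masks and index masks. A rough sketch under that reading of the listing; the `demo_` names are hypothetical:

```c
#include <stdint.h>

/* Values copied from the enum above. */
#define DEMO_CHANNEL_COUNT_MAX        30u
#define DEMO_REPRESENTATION_POSITION  0x0u
#define DEMO_REPRESENTATION_INDEX     0x2u

/* Portable population count: clears the lowest set bit each round. */
unsigned demo_popcount32(uint32_t v) {
    unsigned n = 0;
    while (v != 0) {
        v &= v - 1;
        n++;
    }
    return n;
}

/* Number of channels described by a mask, or 0 for an unknown
 * representation such as AUDIO_CHANNEL_INVALID (0xC0000000).
 * Note that haptic channel bits are counted like any other channel. */
unsigned demo_channel_count(uint32_t mask) {
    uint32_t representation = mask >> DEMO_CHANNEL_COUNT_MAX;
    uint32_t bits = mask & ((1u << DEMO_CHANNEL_COUNT_MAX) - 1u);
    if (representation == DEMO_REPRESENTATION_POSITION ||
        representation == DEMO_REPRESENTATION_INDEX) {
        return demo_popcount32(bits);
    }
    return 0;
}
```

With this, AUDIO_CHANNEL_OUT_STEREO (0x3) yields 2 channels, AUDIO_CHANNEL_OUT_7POINT1 (0x63F) yields 8, and AUDIO_CHANNEL_INDEX_MASK_3 (0x80000007) yields 3.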

AudioAttributes:

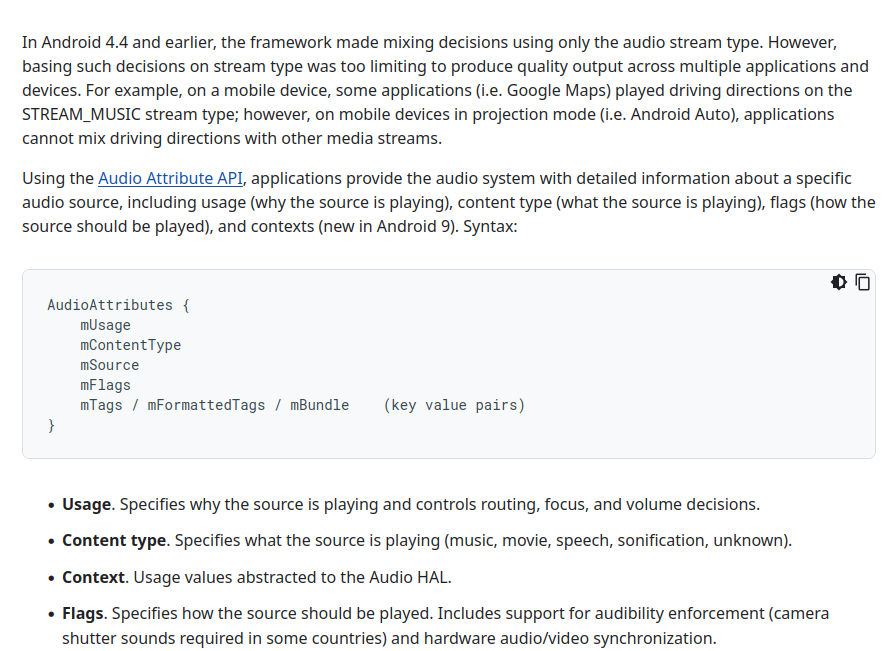

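Below is an AI-generated explanation, which is fairly clear:

In Android 4.4 and earlier, the audio system design had the following key problems, which eventually led to the introduction of AudioAttributes:

The core problem: the one-size-fits-all limitation of stream types

Crude mixing decisions

The system made mixing and volume decisions solely from fixed stream types such as STREAM_MUSIC and STREAM_NOTIFICATION. For example, Google Maps navigation prompts and a music player were both classified as STREAM_MUSIC, with these consequences:

No way to distinguish priority: navigation prompts and music were treated identically during mixing, so music could not be lowered automatically to let the prompts through.

Coupled volume control: adjusting the volume affected every STREAM_MUSIC stream at once; navigation and music could not be adjusted separately.

Severe conflicts in multi-device scenarios

When the phone enters projection mode (e.g. Android Auto), the problem gets worse:

Confused audio routing: the in-car system cannot tell navigation prompts from music within STREAM_MUSIC, so both share the same logical channel.

Fragmented user experience: users cannot adjust the navigation volume separately on the car display and have to put up with prompts and music drowning each other out.

The solution: AudioAttributes

To move beyond the limits of stream types, Android 5.0 (API 21) introduced AudioAttributes, which addresses the problems above through the following mechanisms:

1. Precisely describing the purpose and content of audio

usage: states why the audio is being played (e.g. USAGE_ASSISTANCE_NAVIGATION_GUIDANCE for navigation prompts).

contentType: states what kind of content the audio is (e.g. CONTENT_TYPE_SPEECH for speech).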

// Recommended configuration for a navigation app

AudioAttributes attributes = new AudioAttributes.Builder()

.setUsage(AudioAttributes.USAGE_ASSISTANCE_NAVIGATION_GUIDANCE) // state the usage explicitly

.setContentType(AudioAttributes.CONTENT_TYPE_SPEECH) // the content is speech

.build();

mediaPlayer.setAudioAttributes(attributes);

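2. System-level audio policy improvements

Independent volume control: in-car systems such as Android Auto can use the usage attribute to map navigation prompts (USAGE_ASSISTANCE_NAVIGATION_GUIDANCE) and music (USAGE_MEDIA) to different hardware volume groups, which the user adjusts independently.

Smarter mixing: the system recognizes navigation prompts as high-priority speech and automatically lowers the background music volume (ducking) instead of stopping music playback.

3. Backward compatibility and developer migration

Stream type mapping: legacy apps that do not use AudioAttributes (e.g. apps that only set STREAM_MUSIC) are automatically mapped to USAGE_MEDIA, which preserves basic compatibility.

Incentive to adopt: a clear API design encourages developers to use AudioAttributes and unlock multi-device scenarios.

Case comparison: what changes in Android Auto projection mode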

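| Scenario | Legacy stream types (Android 4.4) | AudioAttributes (Android 5.0+) |
|---|---|---|
| Audio classification | Navigation and music are both tagged STREAM_MUSIC | Navigation uses USAGE_ASSISTANCE_NAVIGATION_GUIDANCE, music uses USAGE_MEDIA |
| Volume control | Adjusted together, no isolation | The car display shows separate sliders, so navigation and music volume are adjusted independently |
| Mixing behavior | Navigation prompts may interrupt music entirely | Music is briefly lowered (ducking) and restored when the prompt ends |
| Developer control | Limited, dependent on the system's default policy | Audio behavior can be defined in detail through attributes (e.g. latency, sound effects) |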

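Summary

The introduction of AudioAttributes changed the model of Android audio management:

From simple classification to scenario-driven: audio is described by semantic attributes for its purpose and content rather than by rigid stream types.

Enabling the multi-device ecosystem: in-car systems, wearables, and similar scenarios can adapt flexibly, which resolves the long-standing audio conflicts of the legacy approach.

Developer friendly: a clear API design and compatibility mapping lower the migration cost while offering finer control over audio.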

USAGE

States the scenario in which the audio is used (e.g. USAGE_ASSISTANCE_NAVIGATION_GUIDANCE for navigation prompts).

public final static int USAGE_UNKNOWN = 0;

/**

* Usage value to use when the usage is media, such as music, or movie

* soundtracks.

*/

public final static int USAGE_MEDIA = 1;

/**

* Usage value to use when the usage is voice communications, such as telephony

* or VoIP.

*/

public final static int USAGE_VOICE_COMMUNICATION = 2;

/**

* Usage value to use when the usage is in-call signalling, such as with

* a "busy" beep, or DTMF tones.

*/

public final static int USAGE_VOICE_COMMUNICATION_SIGNALLING = 3;

/**

* Usage value to use when the usage is an alarm (e.g. wake-up alarm).

*/

public final static int USAGE_ALARM = 4;

/**

* Usage value to use when the usage is notification. See other

* notification usages for more specialized uses.

*/

public final static int USAGE_NOTIFICATION = 5;

/**

* Usage value to use when the usage is telephony ringtone.

*/

public final static int USAGE_NOTIFICATION_RINGTONE = 6;

/**

* Usage value to use when the usage is a request to enter/end a

* communication, such as a VoIP communication or video-conference.

*/

public final static int USAGE_NOTIFICATION_COMMUNICATION_REQUEST = 7;

/**

* Usage value to use when the usage is notification for an "instant"

* communication such as a chat, or SMS.

*/

public final static int USAGE_NOTIFICATION_COMMUNICATION_INSTANT = 8;

/**

* Usage value to use when the usage is notification for a

* non-immediate type of communication such as e-mail.

*/

public final static int USAGE_NOTIFICATION_COMMUNICATION_DELAYED = 9;

/**

* Usage value to use when the usage is to attract the user's attention,

* such as a reminder or low battery warning.

*/

public final static int USAGE_NOTIFICATION_EVENT = 10;

/**

* Usage value to use when the usage is for accessibility, such as with

* a screen reader.

*/

public final static int USAGE_ASSISTANCE_ACCESSIBILITY = 11;

/**

* Usage value to use when the usage is driving or navigation directions.

*/

public final static int USAGE_ASSISTANCE_NAVIGATION_GUIDANCE = 12;

/**

* Usage value to use when the usage is sonification, such as with user

* interface sounds.

*/

public final static int USAGE_ASSISTANCE_SONIFICATION = 13;

/**

* Usage value to use when the usage is for game audio.

*/

public final static int USAGE_GAME = 14;

/**

* @hide

* Usage value to use when feeding audio to the platform and replacing "traditional" audio

* source, such as audio capture devices.

*/

public final static int USAGE_VIRTUAL_SOURCE = 15;

/**

* Usage value to use for audio responses to user queries, audio instructions or help

* utterances.

*/

public final static int USAGE_ASSISTANT = 16;

/**

* @hide

* Usage value to use for assistant voice interaction with remote caller on Cell and VoIP calls.

*/

@SystemApi

@RequiresPermission(allOf = {

android.Manifest.permission.MODIFY_PHONE_STATE,

android.Manifest.permission.MODIFY_AUDIO_ROUTING

})

public static final int USAGE_CALL_ASSISTANT = 17;

private static int usageForStreamType(int streamType) {

switch(streamType) {

case AudioSystem.STREAM_VOICE_CALL:

return USAGE_VOICE_COMMUNICATION;

case AudioSystem.STREAM_SYSTEM_ENFORCED:

case AudioSystem.STREAM_SYSTEM:

return USAGE_ASSISTANCE_SONIFICATION;

case AudioSystem.STREAM_RING:

return USAGE_NOTIFICATION_RINGTONE;

case AudioSystem.STREAM_MUSIC:

return USAGE_MEDIA;

case AudioSystem.STREAM_ALARM:

return USAGE_ALARM;

case AudioSystem.STREAM_NOTIFICATION:

return USAGE_NOTIFICATION;

case AudioSystem.STREAM_BLUETOOTH_SCO:

return USAGE_VOICE_COMMUNICATION;

case AudioSystem.STREAM_DTMF:

return USAGE_VOICE_COMMUNICATION_SIGNALLING;

case AudioSystem.STREAM_ACCESSIBILITY:

return USAGE_ASSISTANCE_ACCESSIBILITY;

case AudioSystem.STREAM_TTS:

default:

return USAGE_UNKNOWN;

}

}

ContentType

Defines the type of the audio content (e.g. CONTENT_TYPE_SPEECH for speech).

/**

* Content type value to use when the content type is unknown, or other than the ones defined.

*/

public final static int CONTENT_TYPE_UNKNOWN = 0;

/**

* Content type value to use when the content type is speech.

*/

public final static int CONTENT_TYPE_SPEECH = 1;

/**

* Content type value to use when the content type is music.

*/

public final static int CONTENT_TYPE_MUSIC = 2;

/**

* Content type value to use when the content type is a soundtrack, typically accompanying

* a movie or TV program.

*/

public final static int CONTENT_TYPE_MOVIE = 3;

/**

* Content type value to use when the content type is a sound used to accompany a user

* action, such as a beep or sound effect expressing a key click, or event, such as the

* type of a sound for a bonus being received in a game. These sounds are mostly synthesized

* or short Foley sounds.

*/

public final static int CONTENT_TYPE_SONIFICATION = 4;

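AudioAttributes describes an audio use case. For example, music playback can be expressed as CONTENT_TYPE_MUSIC plus USAGE_MEDIA, and a phone call as CONTENT_TYPE_SPEECH plus USAGE_VOICE_COMMUNICATION. On the Java side it is defined in frameworks/base/media/java/android/media/AudioAttributes.java. On the C/C++ side it is the audio_attributes_t struct in the system/media/audio/include/system/audio.h header. In the audio_policy_engine_product_strategies.xml configuration file it corresponds to a node.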

StreamType:

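StreamType is the variable early Android versions used to represent the audio use case. It defines the audio stream type, which distinguishes audio paths by purpose (e.g. music, calls, notifications) and lets their volume be controlled independently.

For example, creating an AudioTrack requires this parameter: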

AudioTrack audioTrack = new AudioTrack(

AudioManager.STREAM_MUSIC,

sampleRate,

channelConfig,

audioFormat,

bufferSize,

AudioTrack.MODE_STREAM

);

AudioManager.STREAM_MUSIC is the StreamType. On the Java side it is defined in AudioManager.java.

On the C/C++ side it is the audio_stream_type_t enum in the system/media/audio/include/system/audio-hal-enums.h header.

The types are as follows:

/* These values must be kept in sync with system/media/audio/include/system/audio-hal-enums.h */

/*

* If these are modified, please also update Settings.System.VOLUME_SETTINGS

* and attrs.xml and AudioManager.java.

*/

/** @hide Used to identify the default audio stream volume */

@TestApi

public static final int STREAM_DEFAULT = -1;

/** @hide Used to identify the volume of audio streams for phone calls */

public static final int STREAM_VOICE_CALL = 0;

/** @hide Used to identify the volume of audio streams for system sounds */

public static final int STREAM_SYSTEM = 1;

/** @hide Used to identify the volume of audio streams for the phone ring and message alerts */

public static final int STREAM_RING = 2;

/** @hide Used to identify the volume of audio streams for music playback */

public static final int STREAM_MUSIC = 3;

/** @hide Used to identify the volume of audio streams for alarms */

public static final int STREAM_ALARM = 4;

/** @hide Used to identify the volume of audio streams for notifications */

public static final int STREAM_NOTIFICATION = 5;

/** @hide

* Used to identify the volume of audio streams for phone calls when connected on bluetooth */

public static final int STREAM_BLUETOOTH_SCO = 6;

/** @hide Used to identify the volume of audio streams for enforced system sounds in certain

* countries (e.g camera in Japan) */

@UnsupportedAppUsage(maxTargetSdk = Build.VERSION_CODES.R, trackingBug = 170729553)

public static final int STREAM_SYSTEM_ENFORCED = 7;

/** @hide Used to identify the volume of audio streams for DTMF tones */

public static final int STREAM_DTMF = 8;

/** @hide Used to identify the volume of audio streams exclusively transmitted through the

* speaker (TTS) of the device */

public static final int STREAM_TTS = 9;

/** @hide Used to identify the volume of audio streams for accessibility prompts */

public static final int STREAM_ACCESSIBILITY = 10;

/** @hide Used to identify the volume of audio streams for virtual assistant */

public static final int STREAM_ASSISTANT = 11;

StreamType is deprecated but still has to be supported, so each stream type is converted to one of the ContentType values above:

switch (streamType) {

case AudioSystem.STREAM_VOICE_CALL:

mContentType = CONTENT_TYPE_SPEECH;

break;

case AudioSystem.STREAM_SYSTEM_ENFORCED:

mFlags |= FLAG_AUDIBILITY_ENFORCED;

// intended fall through, attributes in common with STREAM_SYSTEM

case AudioSystem.STREAM_SYSTEM:

mContentType = CONTENT_TYPE_SONIFICATION;

break;

case AudioSystem.STREAM_RING:

mContentType = CONTENT_TYPE_SONIFICATION;

break;

case AudioSystem.STREAM_ALARM:

mContentType = CONTENT_TYPE_SONIFICATION;

break;

case AudioSystem.STREAM_NOTIFICATION:

mContentType = CONTENT_TYPE_SONIFICATION;

break;

case AudioSystem.STREAM_BLUETOOTH_SCO:

mContentType = CONTENT_TYPE_SPEECH;

mFlags |= FLAG_SCO;

break;

case AudioSystem.STREAM_DTMF:

mContentType = CONTENT_TYPE_SONIFICATION;

break;

case AudioSystem.STREAM_TTS:

mContentType = CONTENT_TYPE_SONIFICATION;

mFlags |= FLAG_BEACON;

break;

case AudioSystem.STREAM_ACCESSIBILITY:

mContentType = CONTENT_TYPE_SPEECH;

break;

case AudioSystem.STREAM_ASSISTANT:

mContentType = CONTENT_TYPE_SPEECH;

break;

case AudioSystem.STREAM_MUSIC:

// leaving as CONTENT_TYPE_UNKNOWN

break;

default:

Log.e(TAG, "Invalid stream type " + streamType + " for AudioAttributes");

}

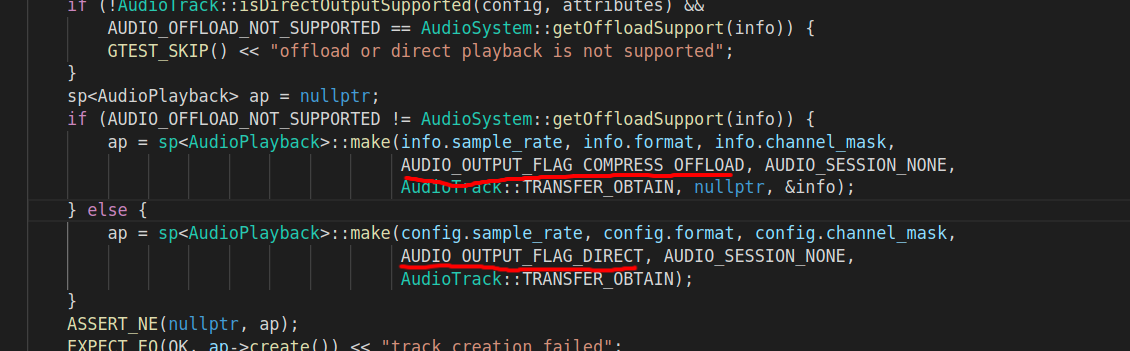

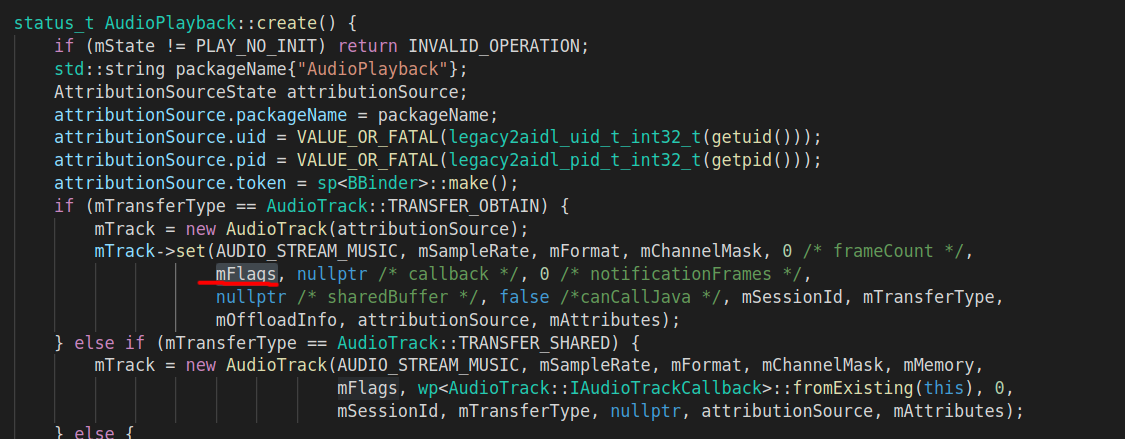

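AUDIO_OUTPUT_FLAG

AUDIO_OUTPUT_FLAG values are the flag bits the Android audio system uses to describe the characteristics of an audio stream. They directly affect how audio data is processed, how it is routed to devices, and how performance is tuned, and they reflect the stream's priority, latency requirements, and hardware compatibility.

For a direct usage example, see:

frameworks/av/media/libaudioclient/tests/audiotrack_tests.cpp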

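Common flags and their uses

| Flag | Meaning | Typical scenarios |
|---|---|---|
| AUDIO_OUTPUT_FLAG_FAST | Low-latency mode; prefers a low-latency hardware path (e.g. a low_latency device) | Game sound effects, key clicks, real-time voice calls, and other latency-sensitive cases |
| AUDIO_OUTPUT_FLAG_PRIMARY | Primary audio stream, used for basic sounds such as system ringtones and notifications | System-level audio (e.g. the incoming-call ringtone) |
| AUDIO_OUTPUT_FLAG_DEEP_BUFFER | Large-buffer mode, suited to high-quality audio that is not latency sensitive | Music playback, video soundtracks |
| AUDIO_OUTPUT_FLAG_DIRECT | Direct mode; bypasses software mixing and outputs straight to the hardware (e.g. a USB sound card) | Professional audio devices, Hi-Fi playback |
| AUDIO_OUTPUT_FLAG_COMPRESS_OFFLOAD | Hardware decoding mode; data is sent to the hardware decoder without software decoding | Efficient playback of compressed formats (e.g. MP3, AAC) |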

typedef enum {

AUDIO_OUTPUT_FLAG_NONE = 0x0,

AUDIO_OUTPUT_FLAG_DIRECT = 0x1,

AUDIO_OUTPUT_FLAG_PRIMARY = 0x2,

AUDIO_OUTPUT_FLAG_FAST = 0x4,

AUDIO_OUTPUT_FLAG_DEEP_BUFFER = 0x8,

AUDIO_OUTPUT_FLAG_COMPRESS_OFFLOAD = 0x10,

AUDIO_OUTPUT_FLAG_NON_BLOCKING = 0x20,

AUDIO_OUTPUT_FLAG_HW_AV_SYNC = 0x40,

AUDIO_OUTPUT_FLAG_TTS = 0x80,

AUDIO_OUTPUT_FLAG_RAW = 0x100,

AUDIO_OUTPUT_FLAG_SYNC = 0x200,

AUDIO_OUTPUT_FLAG_IEC958_NONAUDIO = 0x400,

AUDIO_OUTPUT_FLAG_DIRECT_PCM = 0x2000,

AUDIO_OUTPUT_FLAG_MMAP_NOIRQ = 0x4000,

AUDIO_OUTPUT_FLAG_VOIP_RX = 0x8000,

AUDIO_OUTPUT_FLAG_INCALL_MUSIC = 0x10000,

} audio_output_flags_t;

typedef enum {

AUDIO_INPUT_FLAG_NONE = 0x0,

AUDIO_INPUT_FLAG_FAST = 0x1,

AUDIO_INPUT_FLAG_HW_HOTWORD = 0x2,

AUDIO_INPUT_FLAG_RAW = 0x4,

AUDIO_INPUT_FLAG_SYNC = 0x8,

AUDIO_INPUT_FLAG_MMAP_NOIRQ = 0x10,

AUDIO_INPUT_FLAG_VOIP_TX = 0x20,

AUDIO_INPUT_FLAG_HW_AV_SYNC = 0x40,

AUDIO_INPUT_FLAG_DIRECT = 0x80,

} audio_input_flags_t;

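AUDIO_OUTPUT_FLAG_DIRECT

The stream is output directly to the audio device without software mixing; typically used for HDMI output.

AUDIO_OUTPUT_FLAG_PRIMARY

The stream must go to the primary output device; typically used for ringtone-type sounds.

AUDIO_OUTPUT_FLAG_FAST

The stream must reach the audio device quickly; typically used for key clicks, game background sound, and other latency-critical cases.

AUDIO_OUTPUT_FLAG_DEEP_BUFFER

The stream can tolerate a larger latency; typically used for music, video playback, and other cases where latency matters little.

AUDIO_OUTPUT_FLAG_COMPRESS_OFFLOAD

The stream has not been decoded in software and must be sent to the hardware decoder, which performs the decoding.

Different playback scenarios call for different output flags. Key clicks and game background sound, for instance, have strict output latency requirements, so AUDIO_OUTPUT_FLAG_FAST should be set. For concrete usage, see ToneGenerator, SoundPool, and OpenSL ES.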
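Because each output flag occupies its own bit, flags are combined with bitwise OR and tested with bitwise AND. The sketch below illustrates this with values copied from the audio_output_flags_t listing. The selection helper is purely illustrative: `demo_pick_flag` and its single latency parameter are assumptions made for this example, not how the audio policy actually chooses an output.

```c
#include <stdbool.h>
#include <stdint.h>

/* A subset of audio_output_flags_t, values copied from the listing above. */
enum {
    DEMO_OUTPUT_FLAG_NONE             = 0x0,
    DEMO_OUTPUT_FLAG_DIRECT           = 0x1,
    DEMO_OUTPUT_FLAG_PRIMARY          = 0x2,
    DEMO_OUTPUT_FLAG_FAST             = 0x4,
    DEMO_OUTPUT_FLAG_DEEP_BUFFER      = 0x8,
    DEMO_OUTPUT_FLAG_COMPRESS_OFFLOAD = 0x10
};

/* True when every bit of `flag` is set in `flags`. */
bool demo_has_flag(uint32_t flags, uint32_t flag) {
    return (flags & flag) == flag;
}

/* Hypothetical chooser: latency-sensitive playback asks for FAST,
 * everything else accepts DEEP_BUFFER. Real policy is more involved. */
uint32_t demo_pick_flag(bool latency_sensitive) {
    return latency_sensitive ? DEMO_OUTPUT_FLAG_FAST
                             : DEMO_OUTPUT_FLAG_DEEP_BUFFER;
}
```

A stream opened with `DEMO_OUTPUT_FLAG_DIRECT | DEMO_OUTPUT_FLAG_COMPRESS_OFFLOAD` (0x11) would report both flags as set and FAST as clear.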

STRATEGY

Types

enum legacy_strategy {

STRATEGY_NONE = -1,

STRATEGY_MEDIA,

STRATEGY_PHONE,

STRATEGY_SONIFICATION,

STRATEGY_SONIFICATION_RESPECTFUL,

STRATEGY_DTMF,

STRATEGY_ENFORCED_AUDIBLE,

STRATEGY_TRANSMITTED_THROUGH_SPEAKER,

STRATEGY_ACCESSIBILITY,

STRATEGY_REROUTING,

STRATEGY_CALL_ASSISTANT,

};

In older versions, a STRATEGY can be derived directly from an audio_stream_type_t:

routing_strategy Engine::getStrategyForStream(audio_stream_type_t stream)

{

// stream to strategy mapping

switch (stream) {

case AUDIO_STREAM_VOICE_CALL:

case AUDIO_STREAM_BLUETOOTH_SCO:

return STRATEGY_PHONE;

case AUDIO_STREAM_RING:

case AUDIO_STREAM_ALARM:

return STRATEGY_SONIFICATION;

case AUDIO_STREAM_NOTIFICATION:

return STRATEGY_SONIFICATION_RESPECTFUL;

case AUDIO_STREAM_DTMF:

return STRATEGY_DTMF;

default:

ALOGE("unknown stream type %d", stream);

case AUDIO_STREAM_SYSTEM:

// NOTE: SYSTEM stream uses MEDIA strategy because muting music and switching outputs

// while key clicks are played produces a poor result

case AUDIO_STREAM_MUSIC:

return STRATEGY_MEDIA;

case AUDIO_STREAM_ENFORCED_AUDIBLE:

return STRATEGY_ENFORCED_AUDIBLE;

case AUDIO_STREAM_TTS:

return STRATEGY_TRANSMITTED_THROUGH_SPEAKER;

case AUDIO_STREAM_ACCESSIBILITY:

return STRATEGY_ACCESSIBILITY;

case AUDIO_STREAM_REROUTING:

return STRATEGY_REROUTING;

}

}

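An app can use shared memory in two ways:

When creating an AudioTrack, consider how much data will be played. A small amount of data is usually filled in one go, whereas a large amount, or data that must be written continuously, needs a different approach. The two cases are created with different MODE values.

b.1 MODE_STATIC:

The app creates the shared memory and fills it with data in one go.

b.2 MODE_STREAM:

The app obtains an empty buffer with obtainBuffer, fills it with data, and then releases it with releaseBuffer.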

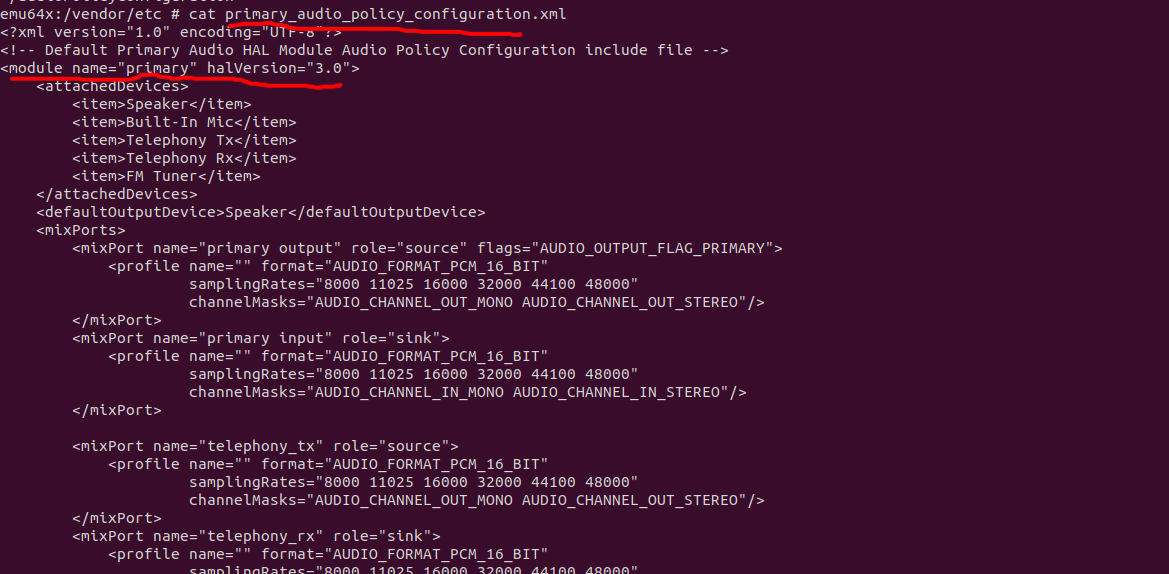

module

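In the Android audio system, a module is an audio hardware interface, also called an HwModule.

Modules are defined in the audio_policy_configuration.xml file and mainly include the following types: primary, a2dp, usb, and r_submix. Each module is described as an HwModule and stored in mHwModules.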

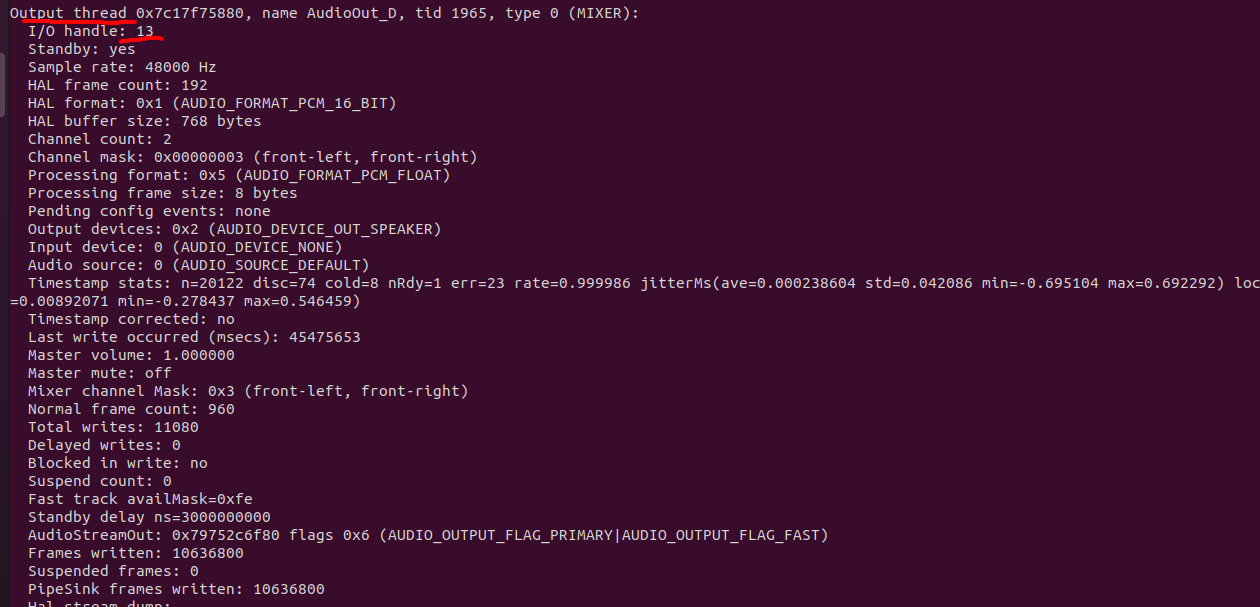

Output

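The output concept

An output is an important concept in the Android audio architecture: it represents an output path for audio data. Each output can be routed to different devices, such as the speaker, a Bluetooth device, or an HDMI port.

(1) One output corresponds to one or more device nodes, e.g. /dev/snd/pcmC0D0p. To keep things simple, a device node is operated by only one thread.

(2) One output is a combination of several devices (for example, a sound card with a speaker (dev1) and headphones (dev2)). These devices are different ports on the same hardware and must support the same parameters, such as sample rate and channels.

(3) One output corresponds to one thread, usually an AudioFlinger playback thread.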

Device

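During playback, sound may come out of the phone speaker, the headphones, and so on. The piece of hardware that actually produces the sound is called the device. The two most common ones, the phone speaker and the wired headset, are defined as follows:

AUDIO_DEVICE_OUT_SPEAKER = 0x2u,

AUDIO_DEVICE_OUT_WIRED_HEADSET = 0x4u,

Besides playback devices there are also capture devices, such as the microphone:

AUDIO_DEVICE_IN_BUILTIN_MIC

The full list is summarized below: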

enum {

AUDIO_DEVICE_NONE = 0x0u,

AUDIO_DEVICE_BIT_IN = 0x80000000u,

AUDIO_DEVICE_BIT_DEFAULT = 0x40000000u,

AUDIO_DEVICE_OUT_EARPIECE = 0x1u,

AUDIO_DEVICE_OUT_SPEAKER = 0x2u,

AUDIO_DEVICE_OUT_WIRED_HEADSET = 0x4u,

AUDIO_DEVICE_OUT_WIRED_HEADPHONE = 0x8u,

AUDIO_DEVICE_OUT_BLUETOOTH_SCO = 0x10u,

AUDIO_DEVICE_OUT_BLUETOOTH_SCO_HEADSET = 0x20u,

AUDIO_DEVICE_OUT_BLUETOOTH_SCO_CARKIT = 0x40u,

AUDIO_DEVICE_OUT_BLUETOOTH_A2DP = 0x80u,

AUDIO_DEVICE_OUT_BLUETOOTH_A2DP_HEADPHONES = 0x100u,

AUDIO_DEVICE_OUT_BLUETOOTH_A2DP_SPEAKER = 0x200u,

AUDIO_DEVICE_OUT_AUX_DIGITAL = 0x400u,

AUDIO_DEVICE_OUT_HDMI = 0x400u, // OUT_AUX_DIGITAL

AUDIO_DEVICE_OUT_ANLG_DOCK_HEADSET = 0x800u,

AUDIO_DEVICE_OUT_DGTL_DOCK_HEADSET = 0x1000u,

AUDIO_DEVICE_OUT_USB_ACCESSORY = 0x2000u,

AUDIO_DEVICE_OUT_USB_DEVICE = 0x4000u,

AUDIO_DEVICE_OUT_REMOTE_SUBMIX = 0x8000u,

AUDIO_DEVICE_OUT_TELEPHONY_TX = 0x10000u,

AUDIO_DEVICE_OUT_LINE = 0x20000u,

AUDIO_DEVICE_OUT_HDMI_ARC = 0x40000u,

AUDIO_DEVICE_OUT_SPDIF = 0x80000u,

AUDIO_DEVICE_OUT_FM = 0x100000u,

AUDIO_DEVICE_OUT_AUX_LINE = 0x200000u,

AUDIO_DEVICE_OUT_SPEAKER_SAFE = 0x400000u,

AUDIO_DEVICE_OUT_IP = 0x800000u,

AUDIO_DEVICE_OUT_BUS = 0x1000000u,

AUDIO_DEVICE_OUT_PROXY = 0x2000000u,

AUDIO_DEVICE_OUT_USB_HEADSET = 0x4000000u,

AUDIO_DEVICE_OUT_HEARING_AID = 0x8000000u,

AUDIO_DEVICE_OUT_ECHO_CANCELLER = 0x10000000u,

AUDIO_DEVICE_OUT_DEFAULT = 0x40000000u, // BIT_DEFAULT

AUDIO_DEVICE_IN_COMMUNICATION = 0x80000001u, // BIT_IN | 0x1

AUDIO_DEVICE_IN_AMBIENT = 0x80000002u, // BIT_IN | 0x2

AUDIO_DEVICE_IN_BUILTIN_MIC = 0x80000004u, // BIT_IN | 0x4

AUDIO_DEVICE_IN_BLUETOOTH_SCO_HEADSET = 0x80000008u, // BIT_IN | 0x8

AUDIO_DEVICE_IN_WIRED_HEADSET = 0x80000010u, // BIT_IN | 0x10

AUDIO_DEVICE_IN_AUX_DIGITAL = 0x80000020u, // BIT_IN | 0x20

AUDIO_DEVICE_IN_HDMI = 0x80000020u, // IN_AUX_DIGITAL

AUDIO_DEVICE_IN_VOICE_CALL = 0x80000040u, // BIT_IN | 0x40

AUDIO_DEVICE_IN_TELEPHONY_RX = 0x80000040u, // IN_VOICE_CALL

AUDIO_DEVICE_IN_BACK_MIC = 0x80000080u, // BIT_IN | 0x80

AUDIO_DEVICE_IN_REMOTE_SUBMIX = 0x80000100u, // BIT_IN | 0x100

AUDIO_DEVICE_IN_ANLG_DOCK_HEADSET = 0x80000200u, // BIT_IN | 0x200

AUDIO_DEVICE_IN_DGTL_DOCK_HEADSET = 0x80000400u, // BIT_IN | 0x400

AUDIO_DEVICE_IN_USB_ACCESSORY = 0x80000800u, // BIT_IN | 0x800

AUDIO_DEVICE_IN_USB_DEVICE = 0x80001000u, // BIT_IN | 0x1000

AUDIO_DEVICE_IN_FM_TUNER = 0x80002000u, // BIT_IN | 0x2000

AUDIO_DEVICE_IN_TV_TUNER = 0x80004000u, // BIT_IN | 0x4000

AUDIO_DEVICE_IN_LINE = 0x80008000u, // BIT_IN | 0x8000

AUDIO_DEVICE_IN_SPDIF = 0x80010000u, // BIT_IN | 0x10000

AUDIO_DEVICE_IN_BLUETOOTH_A2DP = 0x80020000u, // BIT_IN | 0x20000

AUDIO_DEVICE_IN_LOOPBACK = 0x80040000u, // BIT_IN | 0x40000

AUDIO_DEVICE_IN_IP = 0x80080000u, // BIT_IN | 0x80000

AUDIO_DEVICE_IN_BUS = 0x80100000u, // BIT_IN | 0x100000

AUDIO_DEVICE_IN_PROXY = 0x81000000u, // BIT_IN | 0x1000000

AUDIO_DEVICE_IN_USB_HEADSET = 0x82000000u, // BIT_IN | 0x2000000

AUDIO_DEVICE_IN_BLUETOOTH_BLE = 0x84000000u, // BIT_IN | 0x4000000

AUDIO_DEVICE_IN_HDMI_ARC = 0x88000000u, // BIT_IN | 0x8000000

AUDIO_DEVICE_IN_ECHO_REFERENCE = 0x90000000u, // BIT_IN | 0x10000000

AUDIO_DEVICE_IN_DEFAULT = 0xC0000000u, // BIT_IN | BIT_DEFAULT

};
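In the listing above, every input device has AUDIO_DEVICE_BIT_IN (0x80000000) set, while output devices leave it clear. Testing that single bit is therefore enough to tell the direction of a device value. A minimal sketch with hypothetical `demo_` helper names, using constants copied from the enum:

```c
#include <stdbool.h>
#include <stdint.h>

/* Values copied from the enum above. */
#define DEMO_DEVICE_NONE   0x0u
#define DEMO_DEVICE_BIT_IN 0x80000000u

/* True for capture devices such as AUDIO_DEVICE_IN_BUILTIN_MIC. */
bool demo_device_is_input(uint32_t device) {
    return (device & DEMO_DEVICE_BIT_IN) != 0;
}

/* True for playback devices; AUDIO_DEVICE_NONE counts as neither. */
bool demo_device_is_output(uint32_t device) {
    return device != DEMO_DEVICE_NONE && !demo_device_is_input(device);
}
```

For example, AUDIO_DEVICE_IN_BUILTIN_MIC (0x80000004) is classified as an input and AUDIO_DEVICE_OUT_SPEAKER (0x2) as an output.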

profile

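A profile is a configuration that describes an output and the devices it can support. The support is only logical, and the hardware need not be present: a headphone output, for example, supports headphones only while they are plugged in, whereas the profile always lists them as supported.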

policy

How a stream ends up selecting a device, how streams affect one another (for example, a high-priority sound muting the others), and so on are collectively called policy.

Documentation reference:

https://source.android.google.cn/docs/core/audio/attributes

Reference for some of the attributes:

prebuilts/vndk/v30/arm/include/system/media/audio/include/system/audio-base.h
