[Repository]: https://github.com/huggingface/lerobot

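[Further reading]

LeRobot, Hugging Face's open-source robotics library: source-code analysis of the top-level scripts and the dataset module (including deployment on the simple SO-ARM100 robot arm)

LeRobot DP: how LeRobot wraps and interprets the Diffusion Policy action policy (including the diffusion_policy wrapper and implementation in the DexCap library)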

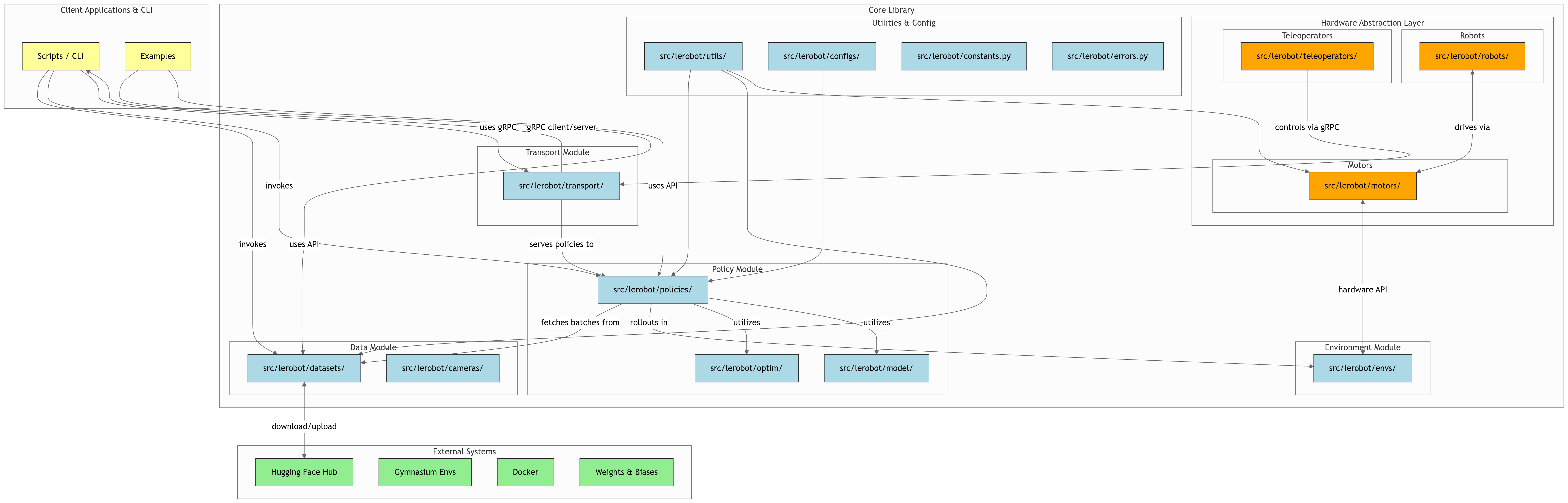

[Code structure] (a recommended tool for analyzing repository structure: gitdiagram)

[Miscellaneous notes]

- A docstring in src/lerobot/model/kinematics.py shows that the project uses the placo library for forward and inverse kinematics:

      """
      Initialize placo-based kinematics solver.

      Args:
          urdf_path: Path to the robot URDF file
          target_frame_name: Name of the end-effector frame in the URDF
          joint_names: List of joint names to use for the kinematics solver
      """
      try:
          import placo
      except ImportError as e:
          raise ImportError(
              "placo is required for RobotKinematics. "
              "Please install the optional dependencies of `kinematics` in the package."
          ) from e

      self.robot = placo.RobotWrapper(urdf_path)
      self.solver = placo.KinematicsSolver(self.robot)
      self.solver.mask_fbase(True)  # Fix the base

      self.target_frame_name = target_frame_name

      # Set joint names
      self.joint_names = list(self.robot.joint_names()) if joint_names is None else joint_names

  Further reading on placo:
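The guarded `import placo` in the excerpt above is a common way to handle an optional dependency: the import happens only when the feature is used, and a missing package produces a message that says what to install. A minimal, generic sketch of that pattern follows. The helper name `require_optional` and its `extra` argument are hypothetical and are not part of LeRobot or placo.

```python
import importlib
from types import ModuleType


def require_optional(module_name: str, extra: str) -> ModuleType:
    """Import an optional dependency, or raise an ImportError naming the extra to install.

    Hypothetical helper for illustration only; not a LeRobot or placo API.
    """
    try:
        return importlib.import_module(module_name)
    except ImportError as e:
        # Chain the original error so the real cause stays visible in the traceback.
        raise ImportError(
            f"{module_name} is required for this feature. "
            f"Please install the optional `{extra}` dependencies."
        ) from e


# A module that is installed is simply returned.
json_module = require_optional("json", extra="none-needed")

# A missing module produces the friendlier message.
try:
    require_optional("surely_not_an_installed_module_xyz", extra="kinematics")
except ImportError as err:
    message = str(err)
    print(message)
```

Deferring the import this way keeps the base package importable for users who never touch the kinematics code.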

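- src/lerobot/cameras folder: drivers for the supported cameras. As of 2025-09-06 the supported ones are:

- src/lerobot/motors folder: motor drivers, currently Dynamixel and Feetech.

- src/lerobot/robots folder: the supported robot projects. As of 2025-09-06 these are bi_so100_follower, hope_jr, koch_follower, lekiwi, reachy2, so100_follower, so101_follower, stretch3, and viperx.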

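- src/lerobot/scripts folder: command-line tools that drive everything else. It contains data-management scripts for pushing a local dataset to the Hugging Face Hub (push_dataset_to_hub.py has since moved to the datasets folder) and for visualizing datasets; model scripts for uploading a pretrained model to the Hub (apparently removed in favor of the hf command line), training (train.py), and evaluation (eval.py); and robot-control scripts for operating a real robot, configuring motors, and so on.

- rl folder: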

  The rl directory contains the main scripts and modules for running reinforcement learning (RL) workflows in LeRobot, especially for real-robot and simulation-based training. Key components include:

  - actor.py: Implements the actor process in distributed RL (HIL-SERL), which interacts with the robot/environment, collects experience, and sends transitions to the learner.
  - learner.py: Implements the learner process, which receives transitions from actors, updates the policy, and periodically sends updated parameters back.
  - learner_service.py: Defines the gRPC service for communication between actor(s) and learner.
  - gym_manipulator.py: Provides a gym-compatible environment for robot manipulation, supporting teleoperation, recording, replay, and RL training.
  - eval_policy.py: Script for evaluating a trained policy in the environment.
  - crop_dataset_roi.py: Utility for cropping and resizing dataset images based on regions of interest.

  These scripts enable distributed RL training, human-in-the-loop workflows, dataset collection, and evaluation for robot learning tasks.

- server folder:

  The server directory contains scripts and modules for running the server-side components of LeRobot's distributed control and reinforcement learning infrastructure. Its main responsibilities are to manage communication between robots and policies, handle remote policy inference, and coordinate data exchange for RL workflows. Key components include:

  - policy_server.py: Implements the gRPC server that receives observations from robot clients, runs policy inference, and sends actions back. It manages policy loading, observation queues, and supports multiple policy types.
  - robot_client.py: Implements the robot-side client that connects to the policy server, sends observations, receives actions, and manages the robot control loop. It supports threading for concurrent action/observation handling.
  - configs.py: Contains configuration dataclasses for both the policy server and robot client, including network, device, and policy settings.
  - constants.py: Defines constants such as supported policies, robots, and default server parameters.
  - helpers.py: Provides utility functions and dataclasses for logging, observation/action formatting, FPS tracking, and feature validation.

  This directory is essential for running LeRobot in distributed or remote-control setups, enabling real robots or simulators to interact with policies over the network.
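To make the observation-in, action-out loop between a robot client and a policy server more concrete, here is a toy in-process sketch. It is not LeRobot's implementation: two `queue.Queue` objects stand in for the gRPC channel, and `toy_policy`, `policy_server`, and `robot_client` are hypothetical names chosen for illustration.

```python
import queue
import threading


def toy_policy(observation: float) -> float:
    """Hypothetical stand-in for policy inference: push the state toward zero."""
    return -0.5 * observation


def policy_server(obs_q: queue.Queue, act_q: queue.Queue) -> None:
    """Receive observations, run the policy, and send actions back until told to stop."""
    while True:
        obs = obs_q.get()
        if obs is None:  # sentinel: the client has finished
            break
        act_q.put(toy_policy(obs))


def robot_client(obs_q: queue.Queue, act_q: queue.Queue, start_state: float, steps: int) -> list:
    """Send the current state as the observation, apply the returned action, repeat."""
    state = start_state
    history = [state]
    for _ in range(steps):
        obs_q.put(state)
        action = act_q.get(timeout=5)
        state = state + action  # toy "robot dynamics"
        history.append(state)
    obs_q.put(None)  # tell the server to shut down
    return history


obs_q, act_q = queue.Queue(), queue.Queue()
server = threading.Thread(target=policy_server, args=(obs_q, act_q), daemon=True)
server.start()
states = robot_client(obs_q, act_q, start_state=8.0, steps=3)
server.join(timeout=5)
print(states)
```

In a real deployment the two sides run on different machines and the queues are replaced by network calls, but the control flow stays the same: the robot side never runs the policy itself.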


- examples folder

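- 2_evaluate_pretrained_policy.py: evaluates an already trained policy (lerobot/diffusion_pusht). Under the current lerobot version (0.3.4) this script does not yet run on an RTX 5070 Ti. pyproject.toml in 0.3.4 pins torch>=2.2.1,<2.8.0, while the torch build that supports 50-series cards was taken here to be at least 2.8.0 (built against CUDA 12.8). The result is a dead end: keep torch inside the pinned range and get `NVIDIA GeForce RTX 5070 with CUDA capability sm_120 is not compatible with the current PyTorch installation`, or upgrade to torch 2.8.0 and hit other errors caused by the version mismatch. (PyTorch 2.7 wheels built for CUDA 12.8 reportedly already include sm_120 support, so a cu128 build of 2.7.x inside the pinned range may be worth trying.)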

- 3_train_policy.py: trains a chosen policy on a prepared dataset (lerobot/pusht). The example uses Diffusion Policy (train Diffusion Policy on the PushT environment). Under lerobot 0.3.4 this script hits the same RTX 5070 Ti problem as 2_evaluate_pretrained_policy.py: the pinned torch>=2.2.1,<2.8.0 reports sm_120 as incompatible, and upgrading to torch 2.8.0 triggers other version-mismatch errors.
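Before changing torch versions, it can help to check whether the installed PyTorch build actually lists the GPU's architecture. The sketch below uses `torch.cuda.get_device_capability` and `torch.cuda.get_arch_list`; the helpers `arch_tag` and `is_arch_supported` are hypothetical names, and the exact-match test is a simplification (real compatibility also depends on PTX and same-major binary compatibility).

```python
def arch_tag(capability) -> str:
    """Turn a (major, minor) compute capability such as (12, 0) into 'sm_120'."""
    major, minor = capability
    return f"sm_{major}{minor}"


def is_arch_supported(capability, arch_list) -> bool:
    """Simplified check: is the GPU's sm_XY tag among the architectures the build targets?"""
    return arch_tag(capability) in arch_list


try:
    import torch
except ImportError:  # keep the sketch usable on machines without PyTorch
    torch = None

if torch is not None and torch.cuda.is_available():
    capability = torch.cuda.get_device_capability(0)
    print("torch:", torch.__version__, "built for CUDA:", torch.version.cuda)
    print("GPU architecture:", arch_tag(capability))
    print("listed in this build:", is_arch_supported(capability, torch.cuda.get_arch_list()))
else:
    print("PyTorch with a visible CUDA device is not available here.")
```

If the GPU's tag is missing from the list, the "not compatible with the current PyTorch installation" warning is expected, and a different wheel (not necessarily a different major version) is needed.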

-

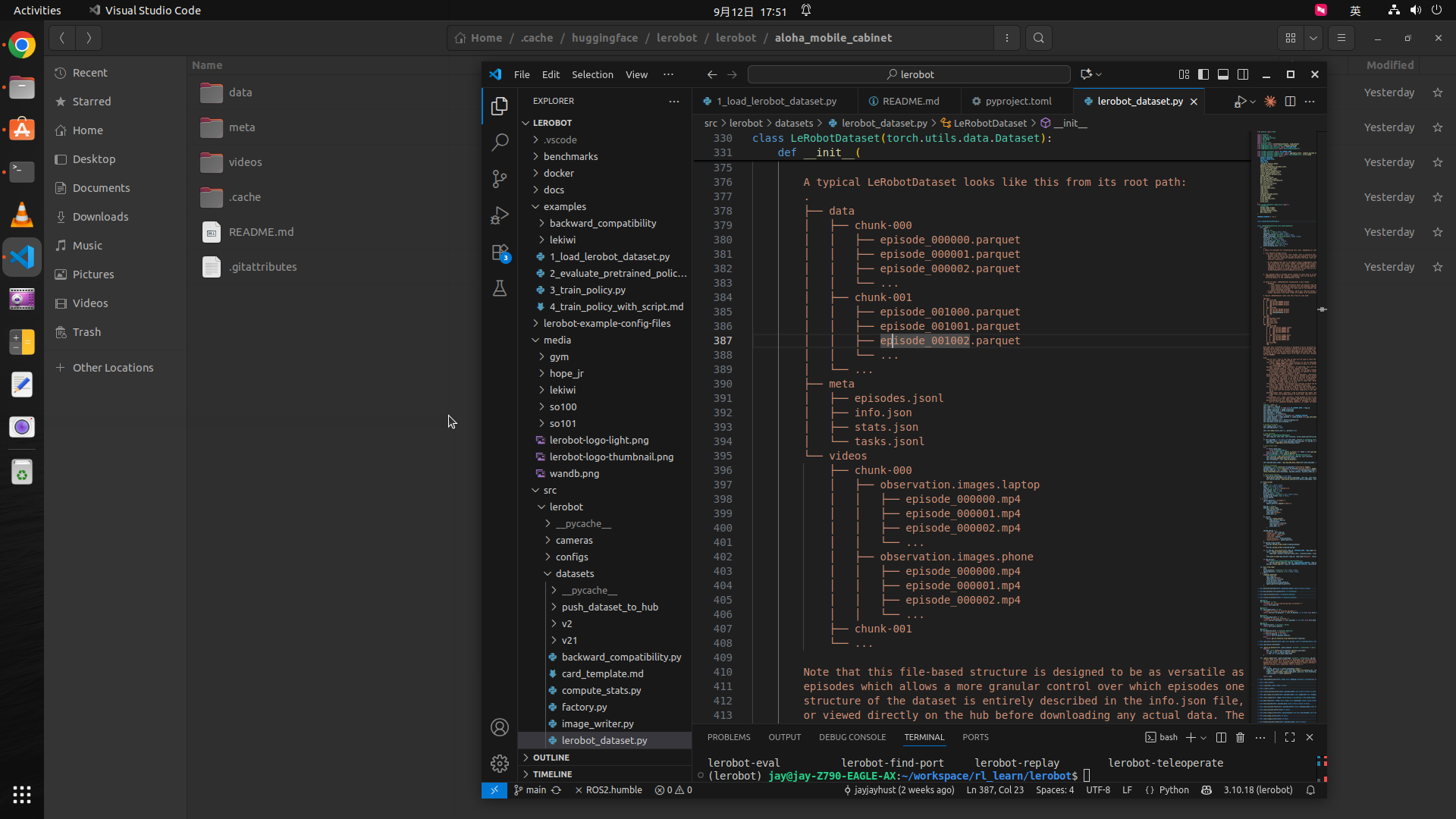

1_load_lerobot_dataset.py:lerobot数据集的线上仓库查看、下载、加载等。

- Running this file queries and downloads files from Hugging Face, so you may need to enable a proxy first:

      export HTTP_PROXY=http://127.0.0.1:7890
      export HTTPS_PROXY=http://127.0.0.1:7890

  Corresponding online dataset: lerobot/aloha_mobile_cabinet

      Number of samples/frames: 127500
      Number of episodes: 85
      Frames per second: 50

  https://huggingface.co/spaces/lerobot/visualize_dataset?path=%2Flerobot%2Faloha_mobile_cabinet%2Fepisode_0
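The three numbers reported for lerobot/aloha_mobile_cabinet are enough to estimate how long the recordings are. The short calculation below uses only those values; it gives averages, since individual episodes may differ in length.

```python
# Values printed for lerobot/aloha_mobile_cabinet
total_frames = 127500
total_episodes = 85
fps = 50

# Average episode length in frames and in seconds
frames_per_episode = total_frames / total_episodes
seconds_per_episode = frames_per_episode / fps

# Total recorded time across the whole dataset, in minutes
total_minutes = total_frames / fps / 60

print(frames_per_episode, seconds_per_episode, total_minutes)
```

So an average episode is 1500 frames, or 30 seconds at 50 fps, and the whole dataset holds 42.5 minutes of demonstrations.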

  If you see the error `No valid stream found in input file. Is -1 of the desired media type`, install ffmpeg:

      conda install -c conda-forge ffmpeg=6.1.1 -y
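A quick way to confirm that the active environment can see an ffmpeg executable after installation is sketched below. The helper `ffmpeg_version_line` is a hypothetical name, and the check only covers the command-line binary on PATH; the video decoding backend may look for ffmpeg's shared libraries instead, so treat a positive result as a hint rather than proof.

```python
import shutil
import subprocess
from typing import Optional


def ffmpeg_version_line() -> Optional[str]:
    """Return the first line of `ffmpeg -version`, or None if ffmpeg is not on PATH."""
    exe = shutil.which("ffmpeg")
    if exe is None:
        return None
    result = subprocess.run([exe, "-version"], capture_output=True, text=True, check=False)
    lines = result.stdout.splitlines()
    return lines[0] if lines else None


version = ffmpeg_version_line()
print(version or "ffmpeg not found on PATH")
```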
