本文基于下述链接修改

鱼眼图像的全景矫正_鱼眼图像校正-优快云博客

相对于woodscape柱面图的转换,优势是盲区范围更小,转换过程不需要鱼眼相机的畸变参数,只需要相机内参中的主点和相机的外参角度即可

woodscape柱面转换

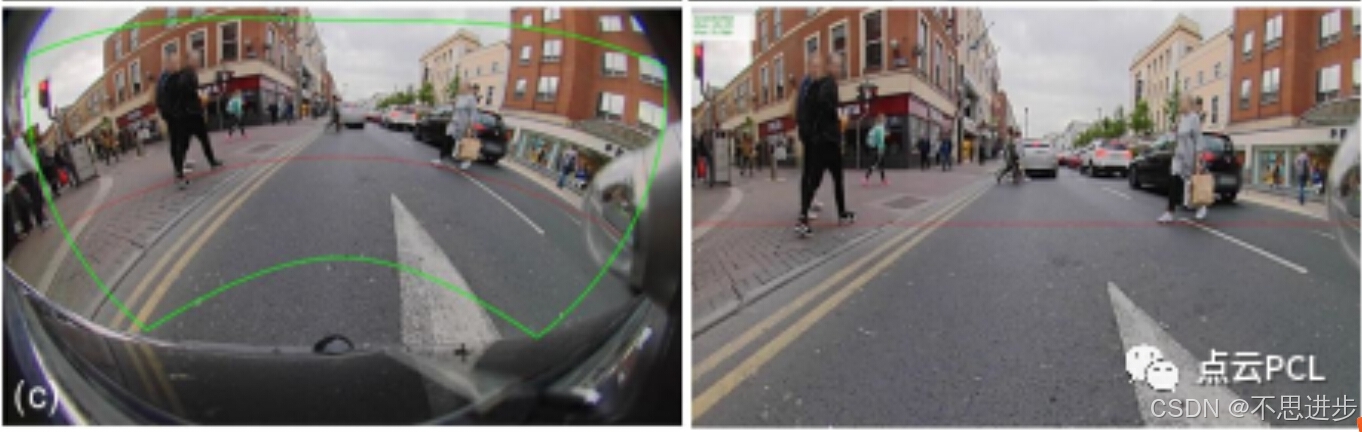

上述链接的转换效果如下:

1、左侧为鱼眼原图,右侧为转换出的柱面图,右侧柱面图的边框(绿色点)映射回左侧原图绿色点(原图中绿线围起来区域代表转换的范围)。

2、左侧原图中的红色点为在鱼眼原图中手动选的点,右图中红色的点为左图中通过下面推导的公式映射过去的点

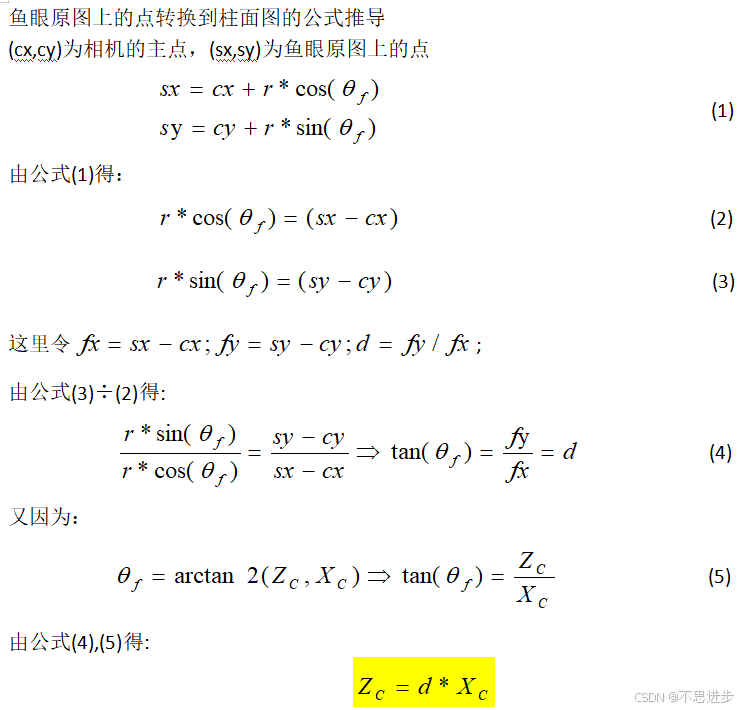

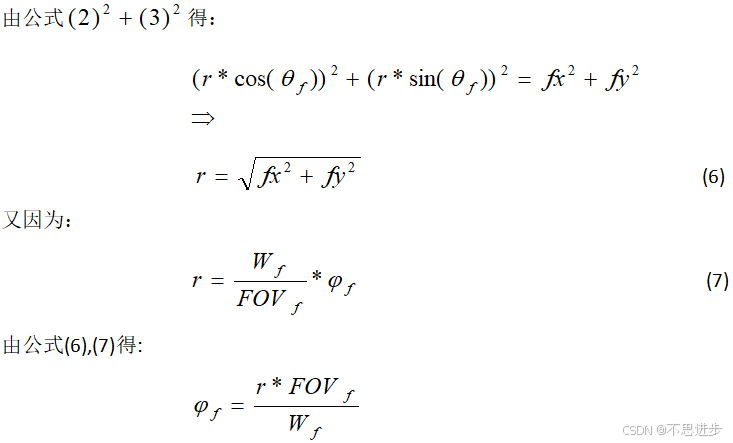

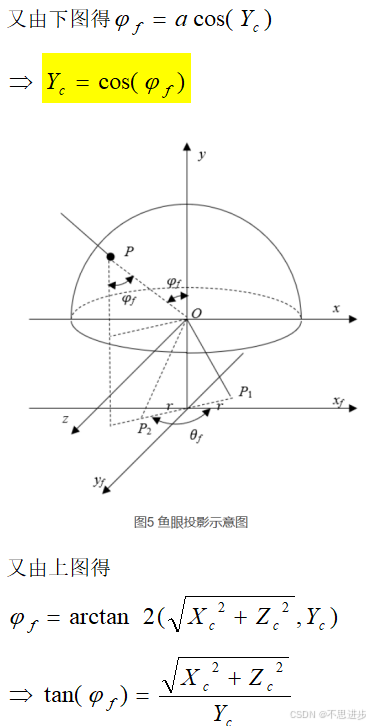

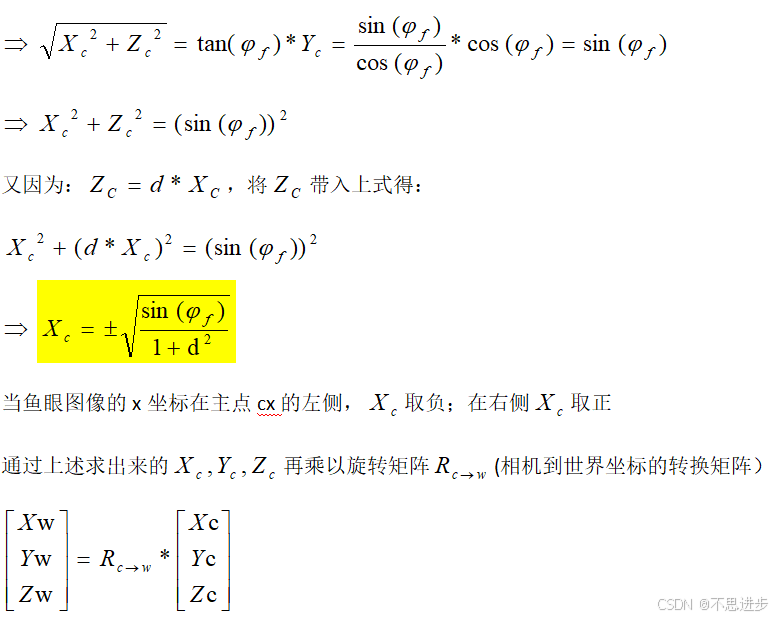

上述文章给出了柱面图中的点到鱼眼原图点的转换关系,本文根据上述文章给出鱼眼原图上的点转到柱面图的公式推导:

下面为全部代码包括 鱼眼转柱面和柱面转鱼眼的过程,柱面图的宽高可自己设定。柱面图的宽是按照光轴分别向左右做经度90度展开。ratioy控制图像上沿纬度的展开范围

#include <iostream>

#include <opencv2/opencv.hpp>

typedef struct _FisheyeExtrinsicParam {

float rx;

float ry;

float rz;

}FisheyeExtrinsicParam;

typedef struct _FisheyeIntrinsicParam {

float cx;

float cy;

}FisheyeIntrinsicParam;

void initCylindricalTable(double* theta, double* phi, int sphereW, int sphereH);

int swInitCameraRMatrix(FisheyeExtrinsicParam* fisheyeExtriParam, double* pRotateMatrix);

void initCylindricalToFisheyeMap(cv::Point2f* sphereMap, double* theta, double* phi,

double FOV, double R, double* rmatw2c, FisheyeIntrinsicParam* pIntriParam);

void equidistantCylindricalToFisheyePoint(cv::Point pIn, cv::Point2f* pOut, double* theta, double* phi,

double FOV, double R, double* rmatw2c, FisheyeIntrinsicParam* pIntriParam);

void fisheyeToCylindricalPoint(cv::Point src, cv::Point* dst, double FOV, double R, double* rmatw2c, FisheyeIntrinsicParam* pIntriParam);

cv::Mat cylindricalToFisheyeImage(const cv::Mat& src, cv::Point2f* sphereMap);

void drawConversionRange(const cv::Mat& cylinderImg, const cv::Mat& src, cv::Point2f* sphereMap);

const int imageW = 1920; //鱼眼原图宽

const int imageH = 1536; //鱼眼原图高

const int sphereW = 640 ; //柱面图的宽(可自己设定)

const int sphereH = 448; //柱面图的高

const float ratioy = 0.4;

float R = imageW / 2.0f;

double FOV = 180 * CV_PI / 180.0f; // FOV of the fisheye, eg: 180 degrees

double theta[sphereW];

double phi[sphereH];

cv::Point2f gSPHERE_MAP[sphereW * sphereH]; //存储柱面图的点对应的原图点索引

int main()

{

cv::Mat dst;

cv::Point p[10] = { {106,905},{1749,768},{322,492},{1021,244},{1044,956},

{445,1134},{1586,1010},{1004,598},{843,210},{202,524}};

cv::Point pd[10];

cv::Size dstSize(imageW/3, imageH /3);

FisheyeExtrinsicParam extri = { 0 };

FisheyeIntrinsicParam intri = { 0 };

double rotateMatW2C[9] = { 0 };

extri.rx = -38.156; //外参角度

extri.ry = -1.236;

extri.rz = -0.341;

intri.cx = 958.520996;//内参 主点

intri.cy = 769.257629;

// Read the input fisheye image

cv::Mat fisheyeImage = cv::imread("right.jpg");

if (fisheyeImage.empty()) {

std::cout << "Error: Could not read the input image." << std::endl;

return -1;

}

//------------------初始化只调用一次-生成映射表gSPHERE_MAP---------------------start

swInitCameraRMatrix(&extri, rotateMatW2C);

initCylindricalTable(theta, phi, sphereW, sphereH);

initCylindricalToFisheyeMap(gSPHERE_MAP, theta, phi,

FOV, R, rotateMatW2C, &intri);

//------------------初始化只调用一次-生成映射表gSPHERE_MAP---------------------end

//生成柱面图只用柱面到原图的映射表即可

cv::Mat sphericalImage = cylindricalToFisheyeImage(fisheyeImage, gSPHERE_MAP);

drawConversionRange(sphericalImage,fisheyeImage, gSPHERE_MAP);

for (int k = 0; k < 10; k++)//验证原图上的点转到柱面图是否正确

{

cv::circle(fisheyeImage, p[k], 8, cvScalar(0, 0, 255), -1);

fisheyeToCylindricalPoint(p[k], &pd[k],FOV, R, rotateMatW2C, &intri);

cv::circle(sphericalImage, pd[k], 3, cvScalar(0, 0, 255), -1);

}

cv::resize(fisheyeImage, dst, dstSize, 0, 0, cv::INTER_NEAREST);

cv::imshow("Image", dst);

cv::imshow("Spherical Image", sphericalImage);

cv::waitKey(0);

return 0;

}

void initCylindricalTable(double* theta, double* phi, int sphereW,int sphereH)

{

double halfPi = CV_PI * 0.5;

//by default, the horizontal FOV is[-pi, pi], the verticle FOV is[-pi / 2, pi / 2]

double interval = (1 - (-ratioy)) * 1.0 / sphereH;

for (int i = 0; i < sphereH; ++i)

{

phi[i] = (-ratioy + i * interval) * halfPi;//latitude of the equirectangular

}

interval = (1 - (-1)) * 1.0 / sphereW;

for (int i = 0; i < sphereW; ++i)

{

theta[i] = (-1 + i * interval) * halfPi;//longitude of the equirectangular

}

}

//矩阵相乘

/*

参数: a 矩阵 m行,n列

b 矩阵 n行,k列

结果矩阵 c 矩阵 m行,k列

*/

void swMultiMatrix(double* a, double* b, int m, int n, int k, double* c)

{

int i, j, l, u;

for (i = 0; i <= m - 1; i++)

for (j = 0; j <= k - 1; j++)

{

u = i * k + j;

c[u] = 0;

for (l = 0; l <= n - 1; l++)

c[u] = c[u] + a[i * n + l] * b[l * k + j];

}

return;

}

// R[w-c]: world -> camera

int swInitCameraRMatrix(FisheyeExtrinsicParam* fisheyeExtriParam, double* pRotateMatrix)

{

float rx = (float)(fisheyeExtriParam->rx * CV_PI / 180.0f);

float ry = (float)(fisheyeExtriParam->ry * CV_PI / 180.0f);

float rz = (float)(fisheyeExtriParam->rz * CV_PI / 180.0f);

double R_temp[9];

double R_X[9] = {

1, 0, 0,

0, cos(rx), -sin(rx),

0, sin(rx), cos(rx)

};

double R_Y[9] = {

cos(ry), 0, sin(ry),

0, 1, 0,

-sin(ry), 0, cos(ry)

};

double R_Z[9] = {

cos(rz), -sin(rz), 0,

sin(rz), cos(rz), 0,

0, 0, 1

};

swMultiMatrix(R_Z, R_Y, 3, 3, 3, R_temp);

swMultiMatrix(R_temp, R_X, 3, 3, 3, pRotateMatrix);

return 1;

}

void equidistantCylindricalToFisheyePoint(cv::Point pIn,cv::Point2f* pOut, double *theta,double *phi,

double FOV,double R,double* rmatw2c, FisheyeIntrinsicParam* pIntriParam)

{

int x = pIn.x;

int y = pIn.y;

double Xw = cos(phi[y]) * sin(theta[x]);

double Yw = cos(phi[y]) * cos(theta[x]);

double Zw = sin(phi[y]);

double Xc = (rmatw2c[0] * Xw) + (rmatw2c[1] * Yw) + (rmatw2c[2] * Zw);

double Yc = (rmatw2c[3] * Xw) + (rmatw2c[4] * Yw) + (rmatw2c[5] * Zw);

double Zc = (rmatw2c[6] * Xw) + (rmatw2c[7] * Yw) + (rmatw2c[8] * Zw);

// Calculate fisheye angle and radius

// double phi_f2 = atan2(sqrt(Xc * Xc + Zc * Zc), (Yc /*+ 1e-12*/));

double phi_f = acos(Yc);//phi_f2 等价于phi_f

double r_f = phi_f * 2 * R / FOV;

double theta_f = atan2(Zc, Xc);

if (abs(Xc) < 1e-12){

theta_f = atan2(Zc, (Xc + 1e-12));

}else{

theta_f = atan2(Zc, Xc);

}

// Pixel in fisheye space

float fishX = pIntriParam->cx + r_f * cos(theta_f);

float fishY = pIntriParam->cy + r_f * sin(theta_f);

if (fishX < 0.0)

{

fishX = 0;

}

if (fishX > static_cast<float>(imageW) - 1.0)

{

fishX = imageW - 1.0;

}

if (fishY < 0.0)

{

fishY = 0;

}

if (fishY > static_cast<float>(imageH) - 1.0)

{

fishY = static_cast<float>(imageH) - 1.0;

}

pOut->x = fishX;

pOut->y = fishY;

}

void fisheyeToCylindricalPoint(cv::Point src, cv::Point* dst, double FOV, double R, double* rmatw2c, FisheyeIntrinsicParam* pIntriParam)

{

float dx = 0.0f;

float fx = src.x - pIntriParam->cx;

float fy = src.y - pIntriParam->cy;

if (abs(fx) < 1e-12)

{

dx = fy / (fx + 1e-12);

}

else

{

dx = fy / fx;

}

double r_f = sqrt(fx * fx + fy * fy);

if (fx < 0)

{

r_f = -sqrt(fx * fx + fy * fy);

}

double phi_f = (r_f * FOV) / (2 * R);

double Yc = cos(phi_f);

double Xc = sin(phi_f) * sqrt(1 / (1 + dx * dx));

double Zc = Xc * dx;

double Xw = (rmatw2c[0] * Xc) + (rmatw2c[3] * Yc) + (rmatw2c[6] * Zc);//rmatw2c的转置 camera->world

double Yw = (rmatw2c[1] * Xc) + (rmatw2c[4] * Yc) + (rmatw2c[7] * Zc);

double Zw = (rmatw2c[2] * Xc) + (rmatw2c[5] * Yc) + (rmatw2c[8] * Zc);

double interval = (1 - (-ratioy)) * 1.0 / sphereH;

dst->y = (int)(((asin(Zw) * 2 / CV_PI) + ratioy) / interval + 0.5);

double interval2 = (1 - (-1)) * 1.0 / sphereW;

dst->x = (int)((atan2(Xw, Yw)*2 / CV_PI + 1) / interval2 + 0.5);

}

void initCylindricalToFisheyeMap(cv::Point2f* sphereMap,double* theta, double* phi,

double FOV, double R, double* rmatw2c, FisheyeIntrinsicParam* pIntriParam)

{

for (int y = 0; y < sphereH; ++y)

{

for (int x = 0; x < sphereW; ++x)

{

equidistantCylindricalToFisheyePoint(cv::Point(x, y), &sphereMap[y * sphereW + x], theta, phi, FOV, R, rmatw2c, pIntriParam);

}

}

}

cv::Mat cylindricalToFisheyeImage(const cv::Mat& src,cv::Point2f* sphereMap)

{

cv::Point2f fishPoint;

cv::Mat dest(sphereH, sphereW, CV_8UC3);

for (int y = 0; y < sphereH; ++y)

{

for (int x = 0; x < sphereW; ++x)

{

fishPoint = sphereMap[y * sphereW + x];

cv::Vec3b color = src.at<cv::Vec3b>(static_cast<int>(fishPoint.y + 0.5), static_cast<int>(fishPoint.x + 0.5));

dest.at<cv::Vec3b>(y, x) = color;

}

}

return dest;

}

void drawConversionRange(const cv::Mat& cylinderImg, const cv::Mat& src, cv::Point2f* sphereMap)

{

cv::Point2f fishPoint;

cv::Point drawPoint;

#if 1

for (int x = 0; x < cylinderImg.cols; ++x)

{

drawPoint.x = x;

drawPoint.y = 1;

cv::circle(cylinderImg, drawPoint, 1, cvScalar(0, 255, 0), -1);

drawPoint.x = x;

drawPoint.y = cylinderImg.rows-2;

cv::circle(cylinderImg, drawPoint, 1, cvScalar(0, 255, 0), -1);

}

for (int y = 0; y < cylinderImg.rows; ++y)

{

drawPoint.x = 1;

drawPoint.y = y;

cv::circle(cylinderImg, drawPoint, 1, cvScalar(0, 255, 0), -1);

drawPoint.x = cylinderImg.cols - 2;

drawPoint.y = y;

cv::circle(cylinderImg, drawPoint, 1, cvScalar(0, 255, 0), -1);

}

#endif

for (int x = 0; x < sphereW; ++x)

{

fishPoint = sphereMap[1*sphereW + x];

drawPoint.x = static_cast<int>(fishPoint.x + 0.5);

drawPoint.y = static_cast<int>(fishPoint.y + 0.5);

cv::circle(src, drawPoint, 3, cvScalar(0, 255, 0), -1);

fishPoint = sphereMap[(sphereH-1) * sphereW + x];

drawPoint.x = static_cast<int>(fishPoint.x + 0.5);

drawPoint.y = static_cast<int>(fishPoint.y + 0.5);

cv::circle(src, drawPoint, 3, cvScalar(0, 255, 0), -1);

}

for (int y = 0; y < sphereH; ++y)

{

fishPoint = sphereMap[y * sphereW+1];

drawPoint.x = static_cast<int>(fishPoint.x + 0.5);

drawPoint.y = static_cast<int>(fishPoint.y + 0.5);

cv::circle(src, drawPoint, 3, cvScalar(0, 255, 0), -1);

fishPoint = sphereMap[y * sphereW + sphereW-1];

drawPoint.x = static_cast<int>(fishPoint.x + 0.5);

drawPoint.y = static_cast<int>(fishPoint.y + 0.5);

cv::circle(src, drawPoint, 3, cvScalar(0, 255, 0), -1);

}

}

有需要原图的可以私信我

1万+

1万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?