Probability and Stochastic Processes Notes (2): Discrete Random Variables (1)

2019-11-25

*These notes are based on the first half of Chapter 2 of Introduction to Probability by Dimitri P. Bertsekas and John N. Tsitsiklis.

2.1 Basic Concepts of Discrete Random Variables

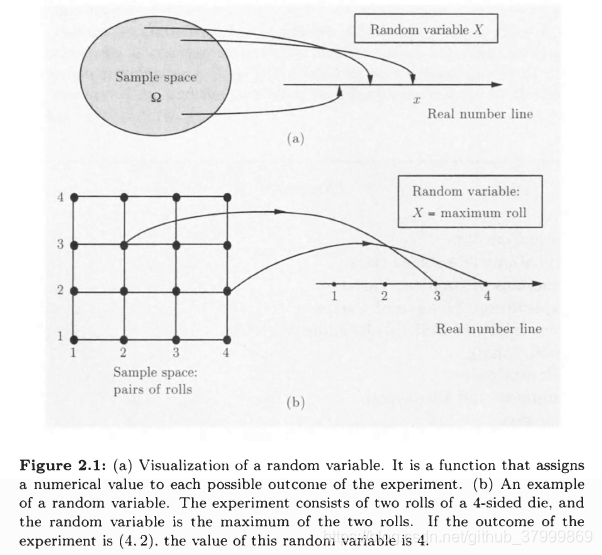

Given an experiment and the corresponding set of possible outcomes (the sample space), a random variable associates a particular number with each outcome; see Fig. 2.1. We refer to this number as the numerical value or simply the value of the random variable. Mathematically, a random variable is a real-valued function of the experimental outcome.

Main Concepts Related to Random Variables:

Starting with a probabilistic model of an experiment:

- A random variable is a real-valued function of the outcome of the experiment.

- A function of a random variable defines another random variable.

- We can associate with each random variable certain “averages” of interest, such as the mean and the variance.

- A random variable can be conditioned on an event or on another random variable.

- There is a notion of independence of a random variable from an event or from another random variable.

A random variable is called discrete if its range (the set of values that it can take) is either finite or countably infinite. A random variable that can take an uncountably infinite number of values is not discrete.

Concepts Related to Discrete Random Variables

Starting with a probabilistic model of an experiment:

- A discrete random variable is a real-valued function of the outcome of the experiment that can take a finite or countably infinite number of values.

- A discrete random variable has an associated probability mass function (PMF), which gives the probability of each numerical value that the random variable can take.

- A function of a discrete random variable defines another discrete random variable, whose PMF can be obtained from the PMF of the original random variable.
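The last point can be made concrete: if $Y = g(X)$, then $p_Y(y) = \sum_{\{x \mid g(x) = y\}} p_X(x)$, i.e. every value $x$ that $g$ maps to $y$ contributes its mass to $p_Y(y)$. The sketch below assumes a PMF is stored as a Python dict mapping each value to its probability; the helper name `pmf_of_function` and the example ($X$ uniform on the integers $-4, \ldots, 4$, $Y = |X|$) are illustrative choices, not code from the book.

```python
from collections import defaultdict
from fractions import Fraction

def pmf_of_function(pmf_x, g):
    """Hypothetical helper: PMF of Y = g(X), given p_X as a dict {x: p_X(x)}."""
    pmf_y = defaultdict(Fraction)  # Fraction() == 0, so missing keys start at 0
    for x, p in pmf_x.items():
        # every x with g(x) = y adds its probability mass to p_Y(y)
        pmf_y[g(x)] += p
    return dict(pmf_y)

# Illustrative example: X uniform on the integers -4, ..., 4, and Y = |X|
pmf_x = {x: Fraction(1, 9) for x in range(-4, 5)}
pmf_y = pmf_of_function(pmf_x, abs)
print(pmf_y)
```

Here $p_Y(0) = 1/9$, while $p_Y(y) = 2/9$ for $y = 1, 2, 3, 4$, because both $x = y$ and $x = -y$ map to the same value of $Y$. Exact `Fraction` arithmetic is used so the masses can be compared without rounding error.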

2.2 Probability Mass Functions

The most important way to characterize a random variable is through the probabilities of the values that it can take. For a discrete random variable $X$, these are captured by the probability mass function (PMF for short) of $X$, denoted $p_X$. In particular, if $x$ is any possible value of $X$, the probability mass of $x$, denoted $p_X(x)$, is the probability of the event $\{X = x\}$ consisting of all outcomes that give rise to a value of $X$ equal to $x$:

$$p_X(x) = P(\{X = x\})$$

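We will use upper case characters ($X$) to denote random variables, and lower case characters ($x$) to denote real numbers such as the numerical values of a random variable. Note that

$$\sum_x p_X(x) = 1,$$

since, as $x$ ranges over all possible values of $X$, the events $\{X = x\}$ are disjoint and form a partition of the sample space; the equality then follows from the additivity and normalization axioms.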

Calculation of the PMF of a Random Variable $X$

For each possible value $x$ of $X$:

- collect all the possible outcomes that give rise to the event $\{X = x\}$

- add their probabilities to obtain $p_X(x)$

By a similar argument, for any set $S$ of possible values of $X$, we have

$$P(X \in S) = \sum_{x \in S} p_X(x).$$
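For a finite sample space, the two-step recipe (collect the outcomes in $\{X = x\}$, add their probabilities) and the formula for $P(X \in S)$ can be sketched in a few lines. The setup is an assumption chosen for illustration: two independent rolls of a fair four-sided die, giving 16 equally likely ordered outcomes, with $X$ the maximum of the two rolls. The function names `pmf_from_outcomes` and `prob_in_set` are hypothetical.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product

def pmf_from_outcomes(outcomes, prob, X):
    """Hypothetical helper: build p_X by grouping outcomes by the value X(outcome)."""
    pmf = defaultdict(Fraction)
    for omega in outcomes:
        # omega lies in the event {X = X(omega)}; add its probability to that mass
        pmf[X(omega)] += prob(omega)
    return dict(pmf)

def prob_in_set(pmf, S):
    """Hypothetical helper: P(X in S) as the sum of p_X(x) over x in S."""
    return sum((pmf.get(x, Fraction(0)) for x in S), Fraction(0))

# Assumed sample space: ordered pairs of rolls of a fair 4-sided die, each with probability 1/16
outcomes = list(product(range(1, 5), repeat=2))
pmf = pmf_from_outcomes(outcomes, lambda omega: Fraction(1, 16), max)

print(sorted(pmf.items()))
print(sum(pmf.values()))         # normalization check → 1
print(prob_in_set(pmf, {3, 4}))  # P(X >= 3) → 3/4
```

For this example the masses come out as $p_X(k) = (2k - 1)/16$ for $k = 1, 2, 3, 4$, since exactly $2k - 1$ of the 16 pairs have maximum $k$.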

【The Bernoulli Random Variable】 Consider the toss of a coin, which comes up a head with probability $p$, and a tail with probability $1 - p$. The Bernoulli random variable takes the two values 1 and 0, depending on whether the outcome is a head or a tail:

$$X = \begin{cases} 1 & \text{if a head}, \\ 0 & \text{if a tail}. \end{cases}$$

Its PMF is

$$p_X(k) = \begin{cases} p & \text{if } k = 1, \\ 1 - p & \text{if } k = 0. \end{cases}$$

For all its simplicity, the Bernoulli random variable is very important. In practice, it is used to model generic probabilistic situations with just two outcomes.
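As a small sketch (not from the book), the Bernoulli PMF can be written directly from its two cases, and a Bernoulli($p$) value can be simulated by comparing a uniform random number on $[0, 1)$ with $p$. The value $p = 0.3$, the seed, and the number of simulated tosses are arbitrary assumptions.

```python
import random

def bernoulli_pmf(k, p):
    """p_X(k) for a Bernoulli(p) variable: p if k == 1, 1 - p if k == 0, else 0."""
    if k == 1:
        return p
    if k == 0:
        return 1 - p
    return 0.0

def bernoulli_sample(p, rng=random):
    """One simulated toss: 1 (head) with probability p, otherwise 0 (tail)."""
    # rng.random() is uniform on [0, 1), so P(rng.random() < p) = p
    return 1 if rng.random() < p else 0

p = 0.3                    # assumed head probability
rng = random.Random(0)     # fixed seed so the run is reproducible
n = 100_000                # assumed number of simulated tosses
freq = sum(bernoulli_sample(p, rng) for _ in range(n)) / n
print(bernoulli_pmf(1, p), bernoulli_pmf(0, p), freq)
```

The empirical frequency of 1s should be close to $p_X(1) = p$; the simulation only illustrates the PMF and is not needed to compute it.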

【The Binomial Random Variable】 A coin is tossed $n$ times. At each toss, the coin comes up a head with probability $p$, and a tail with probability $1 - p$, independent of prior tosses. Let $X$ be the number of heads in the $n$-toss sequence. We refer to $X$ as a binomial random variable with parameters $n$ and $p$. The PMF of $X$ consists of the binomial probabilities computed earlier in the book:

$$p_X(k) = P(X = k) = \binom{n}{k} p^k (1 - p)^{n-k}, \qquad k = 0, 1, \ldots, n.$$
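The binomial formula can be evaluated directly; a minimal sketch, assuming Python 3.8+ for `math.comb`, with $n = 10$ and $p = 0.5$ chosen only for illustration (`binomial_pmf` is a hypothetical helper name):

```python
from math import comb

def binomial_pmf(k, n, p):
    """Hypothetical helper: p_X(k) = C(n, k) p^k (1 - p)^(n - k) for k = 0..n, else 0."""
    if not 0 <= k <= n:
        return 0.0
    return comb(n, k) * p**k * (1 - p) ** (n - k)

n, p = 10, 0.5   # assumed parameters for illustration
pmf = [binomial_pmf(k, n, p) for k in range(n + 1)]
print(pmf)
print(sum(pmf))  # the binomial probabilities sum to 1 (up to floating-point rounding)
```

Setting $n = 1$ recovers the Bernoulli PMF: `binomial_pmf(1, 1, p)` equals $p$ and `binomial_pmf(0, 1, p)` equals $1 - p$.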

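This article introduces the concept of a discrete random variable, including the probability mass function (PMF), and discusses expectation, mean, and variance. The Bernoulli, binomial, geometric, and Poisson random variables serve as examples of discrete random variables.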
