一、安装 java8:省略.................

二、下载安装 hadoop-3.3.0.tar.gz

Index of /dist/hadoop/common/hadoop-3.0.0

解压 到 /hadoop-3.3.0

2.1、添加下面的内容到 ./etc/hadoop/hadoop-env.sh

## java Home环境变量

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

## NameNode用户

export HDFS_NAMENODE_USER=root

## DataNode 用户

export HDFS_DATANODE_USER=root

## 副本NameNode用户

export HDFS_SECONDARYNAMENODE_USER=root

##YARN 资源管理用户

export YARN_RESOURCEMANAGER_USE=root2.2、修改配置文件 core-site.xml,hdfs-site.xml,mapred-site.xml,yarn-site.xml

参考:Apache Hadoop 3.3.2 – Hadoop: Setting up a Single Node Cluster.

先创建一下配置文件需要的路径

/usr/local/hadoop/tmp/dfs/name

/usr/local/hadoop/tmp/dfs/data

core-site.xml:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

<!-- ####注释 : HDFS的URI,文件系统://namenode标识:端口号 -->

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/george/soft/hadoop/tmp</value>

<!-- ###注释: namenode上本地的hadoop临时文件夹 -->

</property>

</configuration>hdfs-site.xml:

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

<description>副本个数,配置默认是3,应小于datanode机器数量</description>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/local/hadoop/tmp/dfs/name</value>

<description>设置存放NameNode的文件路径</description>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/local/hadoop/tmp/dfs/data</value>

<description>设置存放DataNode的文件路径</description>

</property>

</configuration>mapred-site.xml:

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>yarn-site.xml:

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>需要配置root 免密登录否则回出现下面的异常信息:

$ ssh localhost

$ ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

$ chmod 0600 ~/.ssh/authorized_keysStarting namenodes on [namenode]

ERROR: Attempting to operate on hdfs namenode as root

ERROR: but there is no HDFS_NAMENODE_USER defined. Aborting operation.

Starting datanodes

ERROR: Attempting to operate on hdfs datanode as root

ERROR: but there is no HDFS_DATANODE_USER defined. Aborting operation.

Starting secondary namenodes [datanode1]

ERROR: Attempting to operate on hdfs secondarynamenode as root

ERROR: but there is no HDFS_SECONDARYNAMENODE_USER defined. Aborting operation.

Starting resourcemanager

ERROR: Attempting to operate on yarn resourcemanager as root

ERROR: but there is no YARN_RESOURCEMANAGER_USER defined. Aborting operation.

Starting nodemanagers

ERROR: Attempting to operate on yarn nodemanager as root

ERROR: but there is no YARN_NODEMANAGER_USER defined. Aborting operation.

2.3、namenode格式化,启动前需要格式化

sudo /hadoop-3.3.0/bin/hdfs namenode -format

2.4、/hadoop-3.3.0/etc/hadoop/yarn-env.sh

YARN_RESOURCEMANAGER_USER=root

YARN_NODEMANAGER_USER=root2.5、最后启动,启动成功

george@ubuntu:/george/soft/hadoop-3.3.0/sbin$ sudo ./start-all.sh

[sudo] password for george:

Sorry, try again.

[sudo] password for george:

Starting namenodes on [localhost]

Starting datanodes

Starting secondary namenodes [ubuntu]

Starting resourcemanager

Starting nodemanagers

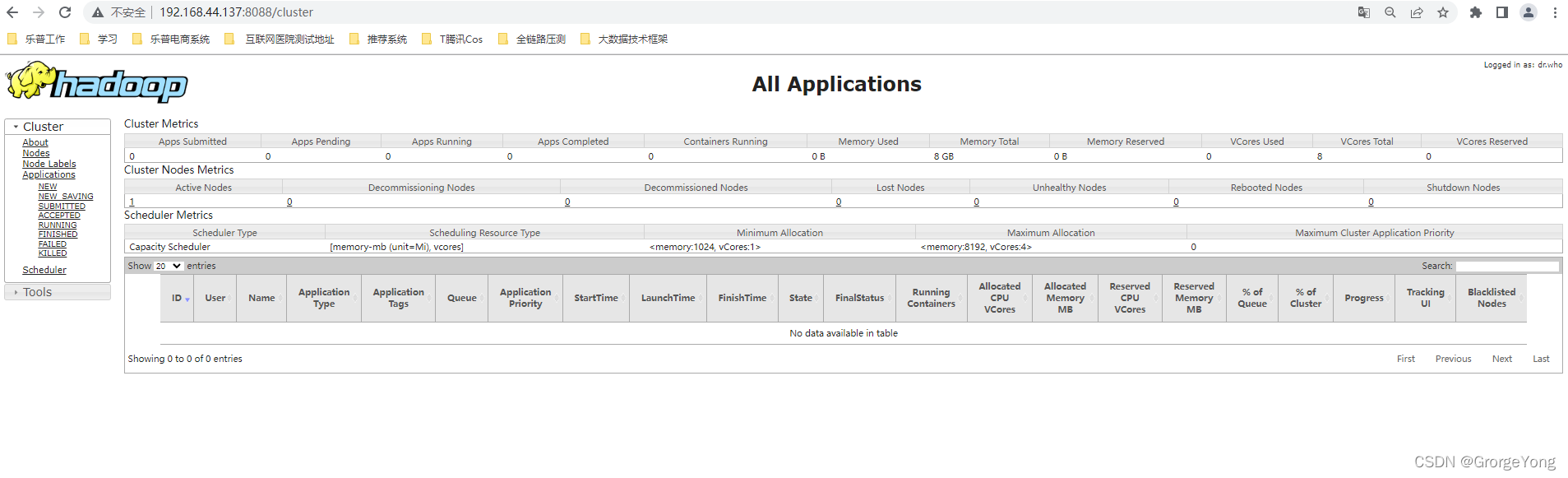

george@ubuntu:/george/soft/hadoop-3.3.0/sbin$ 三、访问 http://yourip:8088/cluster

成功显示

参考:

配置Hadoop3.3.0单节点集群并解决启动问题

配置Hadoop3.3.0单节点集群并解决启动问题

本文档详细介绍了如何在Ubuntu系统中安装Hadoop 3.3.0,包括设置环境变量、修改配置文件、格式化NameNode和启动所有服务。在启动过程中遇到因用户权限导致的错误,并提供了解决方案,如配置root用户免密登录,最终成功启动Hadoop集群并能通过8088端口访问YARN集群状态。

本文档详细介绍了如何在Ubuntu系统中安装Hadoop 3.3.0,包括设置环境变量、修改配置文件、格式化NameNode和启动所有服务。在启动过程中遇到因用户权限导致的错误,并提供了解决方案,如配置root用户免密登录,最终成功启动Hadoop集群并能通过8088端口访问YARN集群状态。

1527

1527

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?