import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

%matplotlib

%matplotlib inline

%config InlineBackend.figure_format = 'retina'

Using matplotlib backend: MacOSX

简单线性回归的实现

只有一个样本特征, 及只有一个变量: y = kx + b

最优化损失函数:

∑ i = 1 m ( y ( i ) − a x ( i ) − b ) 2 \sum_{i=1}^{m}\left(y^{(i)}-a x^{(i)}-b\right)^{2} i=1∑m(y(i)−ax(i)−b)2

使用最小二乘法问题: 最小化误差的平方

{ a = ∑ i = 1 m ( x ( i ) − x ˉ ) ( y ( i ) − y ˉ ) ∑ i = 1 m ( x ( i ) − x ˉ ) 2 b ^ = y ˉ − a x ˉ \left\{\begin{array}{l} a=\frac{\sum_{i=1}^{m}\left(x^{(i)}-\bar{x}\right)\left(y^{(i)}-\bar{y}\right)}{\sum_{i=1}^{m}\left(x^{(i)}-\bar{x}\right)^{2}} \\ \hat{b}=\bar{y}-a \bar{x} \end{array}\right. ⎩⎨⎧a=∑i=1m(x(i)−xˉ)2∑i=1m(x(i)−xˉ)(y(i)−yˉ)b^=yˉ−axˉ

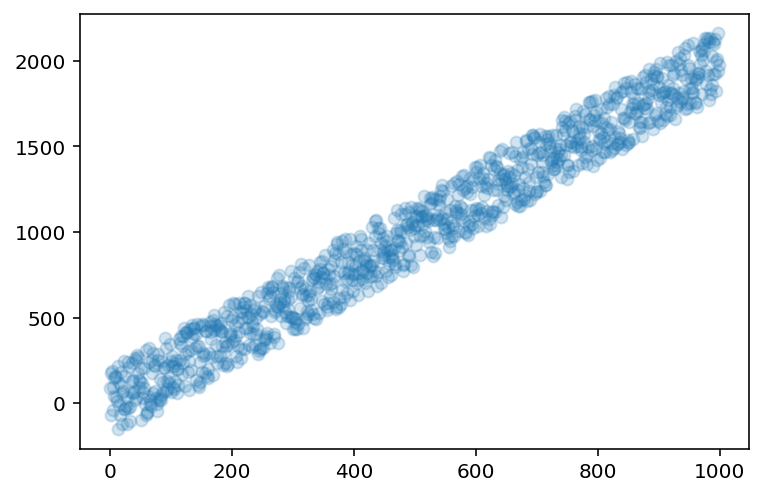

SIZE = 1000

UNDULATE = 200

x = np.arange(SIZE)

y = x * 2 + np.random.randint(-UNDULATE, UNDULATE, size=SIZE)

plt.scatter(x, y, alpha=0.2)

plt.show()

线性回归实现

class SimpleLinearRegression():

""" 简单线性会回归 """

def __init__(self):

self.a = 0

self.b = 0

def fit(self, x, y):

x_avg = x.mean()

y_avg = y.mean()

molecule = 0

for x_item, y_item in zip(x, y):

molecule += (x_item-x_avg) * (y_item-y_avg)

denominator = 0

for item in x:

denominator += pow((item-x_avg), 2)

self.a = molecule / denominator

self.b = y_avg - self.a * x_avg

def predict(self, x):

return x * self.a + self.b

obj = SimpleLinearRegression()

%time obj.fit(x, y)

y_predict = obj.predict(x)

print('a=', obj.a)

print('b=', obj.b)

plt.scatter(x, y, alpha=0.2)

plt.plot(x, y_predict, color='r')

plt.show()

CPU times: user 11.9 ms, sys: 2.54 ms, total: 14.5 ms

Wall time: 14.5 ms

a= 1.988647556647557

b= 6.512545454545261

使用向量计算优化

使用向量计算,避免走循环计算。可以大幅度提高效率。

class SimpleLinearRegression2():

""" 简单线性会回归(向量实现) """

def __init__(self):

self.a = 0

self.b = 0

def fit(self, x, y):

x_avg = x.mean()

y_avg = y.mean()

molecule = np.dot(x-x_avg, y-y_avg)

denominator = np.dot(x-x_avg, x-x_avg)

self.a = molecule / denominator

self.b = y_avg - self.a * x_avg

def predict(self, x):

return x * self.a + self.b

obj = SimpleLinearRegression2()

%time obj.fit(x, y)

y_predict = obj.predict(x)

print('a=', obj.a)

print('b=', obj.b)

plt.scatter(x, y, alpha=0.2)

plt.plot(x, y_predict, color='r')

plt.show()

CPU times: user 180 µs, sys: 20 µs, total: 200 µs

Wall time: 225 µs

a= 1.988647556647557

b= 6.512545454545261

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-6nLUE1iH-1584840575526)(output_9_1.png)]](https://i-blog.csdnimg.cn/blog_migrate/27860cb682eb94a5e90154d7753f4315.png)

模型评价

MSE

1 m ∑ i = 1 m ( y t e s t ( i ) − y ^ t e s t ( i ) ) 2 \frac{1}{m} \sum_{i=1}^{m}\left(y_{t e s t}^{(i)}-\hat{y}_{t e s t}^{(i)}\right)^{2} m1i=1∑m(ytest(i)−y^test(i))2

np.dot(y_predict - y, y_predict - y) / len(y)

12879.24321590063

RMSE

1 m ∑ i = 1 m ( y tare ( 10 ) − y ˙ irst ( i ) ) 2 = M S E tert \sqrt{\frac{1}{m} \sum_{i=1}^{m}\left(y_{\text {tare }}^{(10)}-\dot{y}_{\text {irst }}^{(i)}\right)^{2}}=\sqrt{M S E_{\text {tert }}} m1i=1∑m(ytare (10)−y˙irst (i))2=MSEtert

import math

math.sqrt(np.dot(y_predict - y, y_predict - y) / len(y))

113.48675348207222

MAE

1 m ∑ i = 1 m ∣ y t e s t ( i ) − y ^ t e s t ( i ) ∣ \frac{1}{m} \sum_{i=1}^{m}\left|y_{t e s t}^{(i)}-\hat{y}_{t e s t}^{(i)}\right| m1i=1∑m∣∣∣ytest(i)−y^test(i)∣∣∣

np.sum(np.absolute(y-y_predict)) / len(y)

97.3878859088179

R Square

1 − M S E ( y ^ , y ) Var ( y ) 1-\frac{M S E(\hat{y}, y)}{\operatorname{Var}(y)} 1−Var(y)MSE(y^,y)

1 - np.dot(y_predict - y, y_predict - y)/len(y)/np.var(y)

0.9623896540119193

多元线性回归

使目标函数尽可能小:

∑

i

=

1

m

(

y

(

i

)

−

y

^

(

i

)

)

2

\sum_{i=1}^{m}\left(y^{(i)}-\hat{y}^{(i)}\right)^{2}

i=1∑m(y(i)−y^(i))2

{ X 0 ( i ) = 1 y ^ ( i ) = θ 0 X 0 ( i ) + θ 1 X 1 ( i ) + θ 2 X 2 ( i ) + … + θ n X n ( i ) \left\{\begin{array}{l} X_{0}^{(i)}=1 \\ \hat{y}^{(i)}=\theta_{0} X_{0}^{(i)}+\theta_{1} X_{1}^{(i)}+\theta_{2} X_{2}^{(i)}+\ldots+\theta_{n} X_{n}^{(i)} \end{array}\right. {X0(i)=1y^(i)=θ0X0(i)+θ1X1(i)+θ2X2(i)+…+θnXn(i)

=>

{ θ = ( θ 0 , θ 1 , θ 2 , … , θ n ) T X ^ ( i ) = ( X 0 ( i ) , X 1 ( i ) , X 2 ( i ) , … , X n ( i ) ) y ^ ( i ) = X ( i ) ⋅ θ \left\{\begin{array}{l} \theta=\left(\theta_{0}, \theta_{1}, \theta_{2}, \ldots, \theta_{n}\right)^{T} \\ \hat{X}^{(i)}=\left(X_{0}^{(i)}, X_{1}^{(i)}, X_{2}^{(i)}, \ldots, X_{n}^{(i)}\right) \\ \hat{y}^{(i)}=X^{(i)} \cdot \theta \end{array}\right. ⎩⎪⎨⎪⎧θ=(θ0,θ1,θ2,…,θn)TX^(i)=(X0(i),X1(i),X2(i),…,Xn(i))y^(i)=X(i)⋅θ

=>

X b = ( 1 X 1 ( 1 ) X 2 ( 1 ) … X n ( 1 ) 1 X 1 ( 2 ) X 2 ( 2 ) … X n ( 2 ) … … 1 X 1 ( m ) X 2 ( m ) … X n ( m ) ) θ = ( θ 0 θ 1 θ 2 … θ n ) X_{b}=\left(\begin{array}{ccccc} 1 & X_{1}^{(1)} & X_{2}^{(1)} & \ldots & X_{n}^{(1)} \\ 1 & X_{1}^{(2)} & X_{2}^{(2)} & \ldots & X_{n}^{(2)} \\ \ldots & & & & \ldots \\ 1 & X_{1}^{(m)} & X_{2}^{(m)} & \ldots & X_{n}^{(m)} \end{array}\right) \quad \theta=\left(\begin{array}{c} \theta_{0} \\ \theta_{1} \\ \theta_{2} \\ \ldots \\ \theta_{n} \end{array}\right) Xb=⎝⎜⎜⎜⎛11…1X1(1)X1(2)X1(m)X2(1)X2(2)X2(m)………Xn(1)Xn(2)…Xn(m)⎠⎟⎟⎟⎞θ=⎝⎜⎜⎜⎜⎛θ0θ1θ2…θn⎠⎟⎟⎟⎟⎞

y ^ = X b ⋅ θ \hat{y}=X_{b} \cdot \theta y^=Xb⋅θ

=> 最终推导出(多元线性回归的正规方程解(Normal Euqation))

θ = ( X b T X b ) − 1 X b T y y ^ = X b ⋅ θ \theta=\left(X_{b}^{T} X_{b}\right)^{-1} X_{b}^{T} y \\ \hat{y}=X_{b} \cdot \theta θ=(XbTXb)−1XbTyy^=Xb⋅θ

- 优点: 不需要对数据做归一化处理

- 缺点: 时间复杂度高 O(n^3) 优化到O(n^2.4)

class CustomLinearRegression():

"""线性回归 """

def __init__(self):

self._theta = None

# 截距

self.intercept = None

# 参数系数

self.coef = None

def fit(self, x_train, y_train):

# 矩阵第一列填充1

X_b = np.hstack([np.ones((len(train_x_df),1)), train_x_df])

# np.linalg.inv求逆矩阵

self._theta = np.linalg.inv(np.dot(X_b.T,X_b)).dot(X_b.T).dot(y_train)

self.intercept = self._theta[0]

self.coef = self._theta[1:]

return self

def predict(self, x_predict):

X_b = np.hstack([np.ones((len(train_x_df),1)), train_x_df])

return np.dot(X_b, self._theta)

def score(self, y, y_predict):

""" """

return 1 - np.dot(y_predict - y, y_predict - y)/len(y)/np.var(y)

# 使用波士顿房价数据测试

from sklearn import datasets

from sklearn.linear_model import LinearRegression

# 加载数据

boston = datasets.load_boston()

train_x_df = boston['data']

train_y_df = boston['target']

estimator = CustomLinearRegression()

estimator.fit(train_x_df, train_y_df)

predit_y_df = estimator.predict(train_x_df)

print('---- CustomLinerRegression ----')

print('intercept: ', estimator.intercept)

print('coef: ', estimator.coef)

print('score:', estimator.score(train_y_df, predit_y_df))

estimator = LinearRegression()

estimator.fit(train_x_df, train_y_df)

predit_y_df = estimator.predict(train_x_df)

print('\n---- SkleanLinerRegression ----')

print('intercept: ', estimator.intercept_)

print('coef: ', estimator.coef_)

print('score:', estimator.score(train_x_df, train_y_df))

---- CustomLinerRegression ----

intercept: 36.45948838506836

coef: [-1.08011358e-01 4.64204584e-02 2.05586264e-02 2.68673382e+00

-1.77666112e+01 3.80986521e+00 6.92224640e-04 -1.47556685e+00

3.06049479e-01 -1.23345939e-02 -9.52747232e-01 9.31168327e-03

-5.24758378e-01]

score: 0.7406426641094095

---- SkleanLinerRegression ----

intercept: 36.459488385090125

coef: [-1.08011358e-01 4.64204584e-02 2.05586264e-02 2.68673382e+00

-1.77666112e+01 3.80986521e+00 6.92224640e-04 -1.47556685e+00

3.06049479e-01 -1.23345939e-02 -9.52747232e-01 9.31168327e-03

-5.24758378e-01]

score: 0.7406426641094095

自定义实现的线性回归算法与sklean关键几个参数值一样。同时线性回归学习到的参数值对数据具有较强的解释性.

较强的解释性

线性回归学习到的参数值对数据具有较强的解释性.

print(boston.DESCR)

.. _boston_dataset:

Boston house prices dataset

---------------------------

**Data Set Characteristics:**

:Number of Instances: 506

:Number of Attributes: 13 numeric/categorical predictive. Median Value (attribute 14) is usually the target.

:Attribute Information (in order):

- CRIM per capita crime rate by town

- ZN proportion of residential land zoned for lots over 25,000 sq.ft.

- INDUS proportion of non-retail business acres per town

- CHAS Charles River dummy variable (= 1 if tract bounds river; 0 otherwise)

- NOX nitric oxides concentration (parts per 10 million)

- RM average number of rooms per dwelling

- AGE proportion of owner-occupied units built prior to 1940

- DIS weighted distances to five Boston employment centres

- RAD index of accessibility to radial highways

- TAX full-value property-tax rate per $10,000

- PTRATIO pupil-teacher ratio by town

- B 1000(Bk - 0.63)^2 where Bk is the proportion of blacks by town

- LSTAT % lower status of the population

- MEDV Median value of owner-occupied homes in $1000's

:Missing Attribute Values: None

:Creator: Harrison, D. and Rubinfeld, D.L.

This is a copy of UCI ML housing dataset.

https://archive.ics.uci.edu/ml/machine-learning-databases/housing/

This dataset was taken from the StatLib library which is maintained at Carnegie Mellon University.

The Boston house-price data of Harrison, D. and Rubinfeld, D.L. 'Hedonic

prices and the demand for clean air', J. Environ. Economics & Management,

vol.5, 81-102, 1978. Used in Belsley, Kuh & Welsch, 'Regression diagnostics

...', Wiley, 1980. N.B. Various transformations are used in the table on

pages 244-261 of the latter.

The Boston house-price data has been used in many machine learning papers that address regression

problems.

.. topic:: References

- Belsley, Kuh & Welsch, 'Regression diagnostics: Identifying Influential Data and Sources of Collinearity', Wiley, 1980. 244-261.

- Quinlan,R. (1993). Combining Instance-Based and Model-Based Learning. In Proceedings on the Tenth International Conference of Machine Learning, 236-243, University of Massachusetts, Amherst. Morgan Kaufmann.

df = pd.DataFrame({

'feature': boston['feature_names'][np.argsort(estimator.coef_)],

'value':estimator.coef_[np.argsort(estimator.coef_)]

})

df

| feature | value | |

|---|---|---|

| 0 | NOX | -17.766611 |

| 1 | DIS | -1.475567 |

| 2 | PTRATIO | -0.952747 |

| 3 | LSTAT | -0.524758 |

| 4 | CRIM | -0.108011 |

| 5 | TAX | -0.012335 |

| 6 | AGE | 0.000692 |

| 7 | B | 0.009312 |

| 8 | INDUS | 0.020559 |

| 9 | ZN | 0.046420 |

| 10 | RAD | 0.306049 |

| 11 | CHAS | 2.686734 |

| 12 | RM | 3.809865 |

根据学习到的参数可以发现:

- 正相关最大值为RM(房间个数),即房间数越大,房价越贵,且正相关性最强。

- 负相关最大值NOX(一氧化氮浓度),即NOX越大,房价越便宜,且负相关性最强。

这篇博客介绍了线性回归的基本概念和实现方法,包括简单线性回归、使用向量计算优化、模型评价指标如MSE、RMSE、MAE和R Square,以及多元线性回归的正规方程解。通过自定义实现与sklearn比较,强调了线性回归模型对于数据的解释性强,能揭示变量间的相关性。

这篇博客介绍了线性回归的基本概念和实现方法,包括简单线性回归、使用向量计算优化、模型评价指标如MSE、RMSE、MAE和R Square,以及多元线性回归的正规方程解。通过自定义实现与sklearn比较,强调了线性回归模型对于数据的解释性强,能揭示变量间的相关性。

1064

1064

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?