Continuous Testing and DevOps - Building an Efficient Test Pipeline

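In modern software development, DevOps has become a key practice for improving both delivery speed and quality. Continuous testing is a core part of DevOps: it builds testing into every step of the software delivery pipeline.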

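Continuous Testing Fundamentals

What Continuous Testing Is and Why It Matters

Continuous testing is a software testing approach that makes automated tests an integral part of the software delivery pipeline. Every change then produces immediate feedback on the business risk it carries.

Core Principles of Continuous Testing

Shift-Left and Shift-Right Testing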

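| Aspect | Shift-Left Testing | Shift-Right Testing |
|---|---|---|
| When | Early in development (before and during coding) | In production and after release |
| Focus | Code quality, functional correctness | Runtime stability, performance, user experience |
| Main techniques | Unit tests, integration tests, static analysis | Monitoring, logging, canary (gray) releases, chaos testing |
| Goal | Prevent defects and reduce the cost of late fixes | Detect problems quickly and keep production stable |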
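On the shift-right side, a canary (gray) release is usually gated by an automated comparison. The canary's live metrics are checked against the current version's before more traffic is shifted to it. The sketch below assumes that request counts, error counts and p95 latency have already been aggregated from monitoring over the same time window. `ReleaseMetrics`, `canary_verdict` and every threshold are hypothetical illustrations, not values taken from any particular tool.

```python
from dataclasses import dataclass


@dataclass
class ReleaseMetrics:
    """Aggregated metrics for one deployment group over the same time window."""
    requests: int
    errors: int
    p95_latency_ms: float

    @property
    def error_rate(self) -> float:
        # Avoid division by zero when a group has not received traffic yet
        return self.errors / self.requests if self.requests else 0.0


def canary_verdict(baseline: ReleaseMetrics, canary: ReleaseMetrics,
                   max_error_ratio: float = 1.5,
                   max_latency_ratio: float = 1.2,
                   min_requests: int = 500) -> str:
    """Return 'wait', 'promote' or 'rollback' (all thresholds are illustrative)."""
    if canary.requests < min_requests:
        return "wait"  # not enough traffic for a meaningful comparison
    # A small absolute floor keeps a zero-error baseline from blocking every release
    error_budget = max(baseline.error_rate * max_error_ratio, 0.001)
    if canary.error_rate > error_budget:
        return "rollback"
    if canary.p95_latency_ms > baseline.p95_latency_ms * max_latency_ratio:
        return "rollback"
    return "promote"
```

Here is how the defaults play out. A canary with 2 errors in 1,000 requests, against a baseline of 20 in 10,000, stays within the 1.5x error budget and is promoted. A canary with 10 errors in 1,000 requests is rolled back. The gate compares ratios rather than absolute numbers, so it still works for services with very different traffic levels.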

Applying the Test Pyramid to Continuous Testing

Integrating Tests into the CI/CD Pipeline

Jenkins Pipeline Test Integration

```groovy
// Jenkinsfile - a complete test pipeline

/**
 * Pipeline type and agent
 * pipeline: declarative pipeline
 * agent any: run on any available Jenkins agent
 */
pipeline {
    agent any

    /**
     * Environment variables
     * DOCKER_REGISTRY: Docker image registry address
     * APP_NAME: application name
     * TEST_DATABASE_URL: test database URL, managed through Jenkins credentials
     */
    environment {
        DOCKER_REGISTRY = 'your-registry.com'
        APP_NAME = 'user-service'
        TEST_DATABASE_URL = credentials('test-db-url')
    }

    /**
     * Pipeline stages
     */
    stages {
        // Stage 1: check out the code
        stage('Checkout') {
            steps {
                // Check out the code from version control
                checkout scm
                script {
                    // Short Git commit hash, used as the image tag
                    env.GIT_COMMIT_SHORT = sh(
                        script: "git rev-parse --short HEAD",
                        returnStdout: true
                    ).trim()
                }
            }
        }

        // Stage 2: build the Docker image
        stage('Build') {
            steps {
                // Build the application image from the Dockerfile
                sh 'docker build -t ${APP_NAME}:${GIT_COMMIT_SHORT} .'
            }
        }

        // Stage 3: unit tests
        stage('Unit Tests') {
            steps {
                // Run the unit tests inside the freshly built image. The workspace is mounted
                // at /app and used as the working directory (-w), so the reports are written
                // back into the Jenkins workspace.
                sh '''
                    docker run --rm \
                        -v $(pwd):/app -w /app \
                        ${APP_NAME}:${GIT_COMMIT_SHORT} \
                        python -m pytest tests/unit/ \
                            --junitxml=reports/unit-tests.xml \
                            --cov=src \
                            --cov-report=xml:reports/coverage.xml
                '''
            }
            post {
                always {
                    // Publish the unit test report
                    junit 'reports/unit-tests.xml'
                    // Publish the code coverage report
                    publishCoverage adapters: [
                        coberturaAdapter('reports/coverage.xml')
                    ], sourceFileResolver: sourceFiles('STORE_LAST_BUILD')
                }
            }
        }

        // Stage 4: integration tests
        stage('Integration Tests') {
            steps {
                script {
                    // Start the test environment (database, message queue and other dependencies)
                    sh '''
                        docker-compose -f docker-compose.test.yml up -d
                        sleep 30  # crude fixed wait for the services to start
                    '''
                    try {
                        // Run the integration tests; assumes docker-compose.test.yml
                        // declares a network explicitly named "test-network"
                        sh '''
                            docker run --rm \
                                --network test-network \
                                -e DATABASE_URL=${TEST_DATABASE_URL} \
                                -v $(pwd):/app -w /app \
                                ${APP_NAME}:${GIT_COMMIT_SHORT} \
                                python -m pytest tests/integration/ \
                                    --junitxml=reports/integration-tests.xml
                        '''
                    } finally {
                        // Always tear down the test environment
                        sh 'docker-compose -f docker-compose.test.yml down'
                    }
                }
            }
            post {
                always {
                    // Publish the integration test report
                    junit 'reports/integration-tests.xml'
                }
            }
        }

        // Stage 5: security tests
        stage('Security Tests') {
            steps {
                // Container image scan: Trivy checks the image for known vulnerabilities
                sh '''
                    docker run --rm \
                        -v /var/run/docker.sock:/var/run/docker.sock \
                        -v $(pwd):/tmp \
                        aquasec/trivy:latest \
                        image --format json \
                        --output /tmp/security-report.json \
                        ${APP_NAME}:${GIT_COMMIT_SHORT}
                '''
                // Static code security scan: Semgrep looks for security issues in the source
                // (the Semgrep image expects the semgrep command to be given explicitly)
                sh '''
                    docker run --rm \
                        -v $(pwd):/app \
                        returntocorp/semgrep:latest \
                        semgrep scan \
                        --config=auto \
                        --json \
                        --output=/app/semgrep-report.json \
                        /app/src
                '''
            }
            post {
                always {
                    // Archive the security reports
                    archiveArtifacts artifacts: '*-report.json', fingerprint: true
                }
            }
        }

        // Stage 6: performance tests (main and develop branches only)
        stage('Performance Tests') {
            when {
                anyOf {
                    branch 'main'
                    branch 'develop'
                }
            }
            steps {
                script {
                    // Start the application in a temporary container for the load test
                    sh '''
                        docker run -d \
                            --name perf-test-app \
                            -p 8080:8080 \
                            ${APP_NAME}:${GIT_COMMIT_SHORT}
                        sleep 10  # wait for the application to start
                    '''
                    try {
                        // Run the JMeter load test in non-GUI mode (-n)
                        sh '''
                            docker run --rm \
                                --network host \
                                -v $(pwd)/performance:/tests \
                                justb4/jmeter:latest \
                                -n -t /tests/load-test.jmx \
                                -l /tests/results.jtl \
                                -j /tests/jmeter.log
                        '''
                    } finally {
                        // Remove the test container even if the load test failed
                        sh 'docker rm -f perf-test-app'
                    }
                }
            }
            post {
                always {
                    // Publish the performance report
                    perfReport sourceDataFiles: 'performance/results.jtl'
                }
            }
        }

        // Stage 7: deploy to staging (develop branch only)
        stage('Deploy to Staging') {
            when {
                branch 'develop'
            }
            steps {
                // Tag and push the image. The deployment uses the immutable commit tag:
                // re-applying an unchanged ":staging" image string would not change the
                // pod spec, so `kubectl set image` would not trigger a rollout.
                sh '''
                    docker tag ${APP_NAME}:${GIT_COMMIT_SHORT} ${DOCKER_REGISTRY}/${APP_NAME}:${GIT_COMMIT_SHORT}
                    docker tag ${APP_NAME}:${GIT_COMMIT_SHORT} ${DOCKER_REGISTRY}/${APP_NAME}:staging
                    docker push ${DOCKER_REGISTRY}/${APP_NAME}:${GIT_COMMIT_SHORT}
                    docker push ${DOCKER_REGISTRY}/${APP_NAME}:staging
                '''
                // Update the Kubernetes deployment and wait for the rollout to finish
                sh '''
                    kubectl set image deployment/${APP_NAME} \
                        ${APP_NAME}=${DOCKER_REGISTRY}/${APP_NAME}:${GIT_COMMIT_SHORT} \
                        -n staging
                    kubectl rollout status deployment/${APP_NAME} -n staging
                '''
            }
        }

        // Stage 8: smoke tests against staging (after the staging deployment)
        stage('Smoke Tests') {
            when {
                branch 'develop'
            }
            steps {
                // Smoke-test the staging environment; --base-url comes from the
                // pytest-base-url plugin, which the image is assumed to include
                sh '''
                    docker run --rm \
                        -v $(pwd):/app -w /app \
                        ${APP_NAME}:${GIT_COMMIT_SHORT} \
                        python -m pytest tests/smoke/ \
                            --base-url=https://staging.example.com \
                            --junitxml=reports/smoke-tests.xml
                '''
            }
            post {
                always {
                    // Publish the smoke test report
                    junit 'reports/smoke-tests.xml'
                }
            }
        }

        // Stage 9: deploy to production (main branch only, requires manual approval)
        stage('Deploy to Production') {
            when {
                branch 'main'
            }
            steps {
                // Production deployment requires manual approval
                input message: 'Deploy to production?', ok: 'Deploy'
                // Tag and push the production image (immutable commit tag plus "latest")
                sh '''
                    docker tag ${APP_NAME}:${GIT_COMMIT_SHORT} ${DOCKER_REGISTRY}/${APP_NAME}:${GIT_COMMIT_SHORT}
                    docker tag ${APP_NAME}:${GIT_COMMIT_SHORT} ${DOCKER_REGISTRY}/${APP_NAME}:latest
                    docker push ${DOCKER_REGISTRY}/${APP_NAME}:${GIT_COMMIT_SHORT}
                    docker push ${DOCKER_REGISTRY}/${APP_NAME}:latest
                '''
                // Rolling update of the Kubernetes deployment (this is not a blue-green switch)
                sh '''
                    kubectl set image deployment/${APP_NAME} \
                        ${APP_NAME}=${DOCKER_REGISTRY}/${APP_NAME}:${GIT_COMMIT_SHORT} \
                        -n production
                    kubectl rollout status deployment/${APP_NAME} -n production
                '''
            }
        }
    }

    /**
     * Post-build actions
     */
    post {
        failure {
            // Email the developers of the changes in this build and whoever started it
            // (CHANGE_AUTHOR_EMAIL is only set for pull/merge request builds)
            emailext (
                subject: "Pipeline Failed: ${env.JOB_NAME} - ${env.BUILD_NUMBER}",
                body: "Pipeline failed. Check console output at ${env.BUILD_URL}",
                recipientProviders: [developers(), requestor()]
            )
        }
        success {
            // Send a Slack notification when the pipeline succeeds
            slackSend (
                channel: '#deployments',
                color: 'good',
                message: "✅ ${env.JOB_NAME} - ${env.BUILD_NUMBER} succeeded"
            )
        }
        cleanup {
            // Clean the workspace after all other post conditions have run
            cleanWs()
        }
    }
}
```
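The fixed `sleep 30` and `sleep 10` calls in the Jenkinsfile cause problems either way. On a fast agent they waste time, and on a slow agent the tests can start before the dependencies are ready. Polling for readiness avoids both. The sketch below only waits until a TCP port accepts connections. `wait_for_port` is a hypothetical helper, and an open port does not prove that the service behind it has finished initializing. An application-level health check is stricter.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll until host:port accepts a TCP connection or the timeout expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # A completed TCP handshake means something is listening on the port
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Connection refused or timed out: wait and try again
            time.sleep(interval)
    return False
```

As an example, a small script could call `wait_for_port("localhost", 5432, timeout=60)` and exit non-zero when it returns `False`. That script could replace `sleep 30` in the integration stage, so the stage fails quickly and clearly when a dependency never comes up.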

GitLab CI/CD Test Configuration

```yaml
# .gitlab-ci.yml
stages:
  - build
  - test
  - security
  - deploy-staging
  - test-staging
  - deploy-production

variables:
  DOCKER_DRIVER: overlay2
  DOCKER_TLS_CERTDIR: "/certs"
  POSTGRES_DB: testdb
  POSTGRES_USER: postgres
  POSTGRES_PASSWORD: postgres

# Build stage
build:
  stage: build
  image: docker:20.10.16
  services:
    - docker:20.10.16-dind
  before_script:
    # --password-stdin keeps the password out of the command line
    - echo "$CI_REGISTRY_PASSWORD" | docker login -u "$CI_REGISTRY_USER" --password-stdin "$CI_REGISTRY"
  script:
    - docker build -t $CI_REGISTRY_IMAGE:$CI_COMMIT_SHA .
    - docker push $CI_REGISTRY_IMAGE:$CI_COMMIT_SHA
  only:
    - main
    - develop
    - merge_requests

# Unit tests
unit-test:
  stage: test
  image: python:3.9
  services:
    - postgres:13
    - redis:6
  variables:
    DATABASE_URL: postgresql://postgres:postgres@postgres:5432/testdb
    REDIS_URL: redis://redis:6379
  before_script:
    - pip install -r requirements.txt
    - pip install -r requirements-test.txt
  script:
    # The terminal report prints the TOTAL line parsed by the `coverage` regex;
    # the XML report feeds GitLab's coverage visualization
    - pytest tests/unit/
      --cov=src
      --cov-report=term
      --cov-report=xml
      --junitxml=reports/unit-tests.xml
  artifacts:
    reports:
      junit: reports/unit-tests.xml
      coverage_report:
        coverage_format: cobertura
        path: coverage.xml
    paths:
      - coverage.xml
    expire_in: 1 week
  coverage: '/TOTAL.+ ([0-9]{1,3}%)/'

# Integration tests
# The job runs directly in the image built above, so the postgres and redis services
# are reachable by their hostnames and the report lands in the job's working directory.
# (By default, a `docker run` inside a docker:dind job cannot reach the job's services,
# and its report files stay inside that container.) Assumes the image contains pytest.
integration-test:
  stage: test
  image: $CI_REGISTRY_IMAGE:$CI_COMMIT_SHA
  services:
    - postgres:13
    - redis:6
  variables:
    DATABASE_URL: postgresql://postgres:postgres@postgres:5432/testdb
    REDIS_URL: redis://redis:6379
  script:
    - pytest tests/integration/ --junitxml=reports/integration-tests.xml
  artifacts:
    reports:
      junit: reports/integration-tests.xml
    expire_in: 1 week

# Code quality checks
code-quality:
  stage: test
  image: python:3.9
  before_script:
    - pip install flake8 mypy bandit
  script:
    - flake8 src tests
    - mypy src
    - bandit -r src -f json -o bandit-report.json
  artifacts:
    # Bandit's JSON is not in GitLab's Code Quality (Code Climate) format, so it is kept
    # as a plain artifact; `when: always` keeps it even though bandit exits non-zero on findings
    paths:
      - bandit-report.json
    when: always
    expire_in: 1 week
  allow_failure: true

# Security scanning
container-security:
  stage: security
  image: docker:20.10.16
  services:
    - docker:20.10.16-dind
  variables:
    # Registry credentials Trivy uses to pull the image under test
    TRIVY_USERNAME: $CI_REGISTRY_USER
    TRIVY_PASSWORD: $CI_REGISTRY_PASSWORD
  before_script:
    - apk add --no-cache curl
    - curl -sfL https://raw.githubusercontent.com/aquasecurity/trivy/main/contrib/install.sh | sh -s -- -b /usr/local/bin
  script:
    # Assumes the gitlab.tpl template is available at contrib/gitlab.tpl
    # relative to the job's working directory
    - trivy image --format template --template "@contrib/gitlab.tpl"
      --output gl-container-scanning-report.json
      $CI_REGISTRY_IMAGE:$CI_COMMIT_SHA
  artifacts:
    reports:
      container_scanning: gl-container-scanning-report.json
    expire_in: 1 week
  allow_failure: true

# Deploy to staging
deploy-staging:
  stage: deploy-staging
  image:
    name: alpine/helm:latest
    entrypoint: [""]  # reset the image's helm entrypoint so the runner can run the job script
  before_script:
    - apk add --no-cache curl
    - curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"
    - chmod +x kubectl && mv kubectl /usr/local/bin/
  script:
    - helm upgrade --install $CI_PROJECT_NAME-staging ./helm-chart
      --set image.repository=$CI_REGISTRY_IMAGE
      --set image.tag=$CI_COMMIT_SHA
      --set environment=staging
      --namespace staging
      --create-namespace
  environment:
    name: staging
    url: https://staging.example.com
  only:
    - develop

# Tests against staging
staging-tests:
  stage: test-staging
  image: python:3.9
  dependencies:
    - deploy-staging
  before_script:
    # pytest-base-url provides the --base-url option
    - pip install requests pytest pytest-base-url
  script:
    - pytest tests/smoke/
      --base-url=https://staging.example.com
      --junitxml=reports/staging-tests.xml
  artifacts:
    reports:
      junit: reports/staging-tests.xml
    expire_in: 1 week
  only:
    - develop

# Production deployment
deploy-production:
  stage: deploy-production
  image:
    name: alpine/helm:latest
    entrypoint: [""]
  before_script:
    - apk add --no-cache curl
    - curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"
    - chmod +x kubectl && mv kubectl /usr/local/bin/
  script:
    - helm upgrade --install $CI_PROJECT_NAME ./helm-chart
      --set image.repository=$CI_REGISTRY_IMAGE
      --set image.tag=$CI_COMMIT_SHA
      --set environment=production
      --namespace production
      --create-namespace
  environment:
    name: production
    url: https://example.com
  when: manual
  only:
    - main
```
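GitLab extracts the `unit-test` job's coverage figure by applying the `coverage:` regex to the job log. The pattern therefore only matches when pytest-cov prints its terminal report, which contains a `TOTAL` line. An XML-only report produces no match. The snippet below replays the same pattern with Python's `re` module against a sample log, which is a quick way to check a pattern before committing it. The sample log is illustrative and is not real output from this project.

```python
import re
from typing import Optional

# Same pattern as the `coverage:` keyword of the unit-test job
COVERAGE_RE = re.compile(r"TOTAL.+ ([0-9]{1,3}%)")


def extract_coverage(job_log: str) -> Optional[str]:
    """Return the first coverage percentage the pattern finds in a job log, or None."""
    match = COVERAGE_RE.search(job_log)
    return match.group(1) if match else None


# Illustrative pytest-cov terminal report
SAMPLE_LOG = """\
---------- coverage: platform linux, python 3.9.18 ----------
Name              Stmts   Miss  Cover
-------------------------------------
src/app.py           40      4    90%
src/models.py        80      8    90%
-------------------------------------
TOTAL               120     12    90%
"""
```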

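Test Environment Management Strategies

Environment as Code (Infrastructure as Code, IaC)

The problem with traditional operations

Traditionally, environments were configured by hand. Manual setup is error-prone and hard to reproduce, so inconsistencies between development and production environments were common.

The core idea of IaC

Environment configuration is described as code (scripts, configuration files, declarative languages and so on). Creating, changing and destroying an environment all happen by running that code.

Advantages:

- Automation: less manual work and higher efficiency.
- Consistency: identical configuration across environments, which avoids "environment drift".
- Versioning: configuration code lives in version control, so changes can be rolled back and audited.
- Scalability: large environments can be built and managed quickly.
- Testability: configuration code can itself be tested automatically, which improves reliability.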
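The consistency benefit can be checked mechanically. Compare the configuration declared in version control with what is actually running, and report every difference as drift. This minimal sketch assumes both sides have already been loaded into nested dictionaries. How the live values are collected depends entirely on your tooling, and `detect_drift` is a hypothetical helper.

```python
from typing import Any, Dict, Tuple


def detect_drift(declared: Dict[str, Any], actual: Dict[str, Any],
                 prefix: str = "") -> Dict[str, Tuple[Any, Any]]:
    """Return {dotted.key: (declared, actual)} for every setting that differs.

    A key missing on one side appears with None on that side, so in this
    sketch an explicit None value and a missing key are treated the same.
    """
    drift: Dict[str, Tuple[Any, Any]] = {}
    for key in sorted(set(declared) | set(actual)):
        path = f"{prefix}{key}"
        want, have = declared.get(key), actual.get(key)
        if isinstance(want, dict) and isinstance(have, dict):
            # Recurse into nested sections such as {"postgres": {"version": "13"}}
            drift.update(detect_drift(want, have, prefix=f"{path}."))
        elif want != have:
            drift[path] = (want, have)
    return drift
```

In a pipeline, a non-empty result would typically fail a scheduled drift-check job or trigger re-applying the declared configuration.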

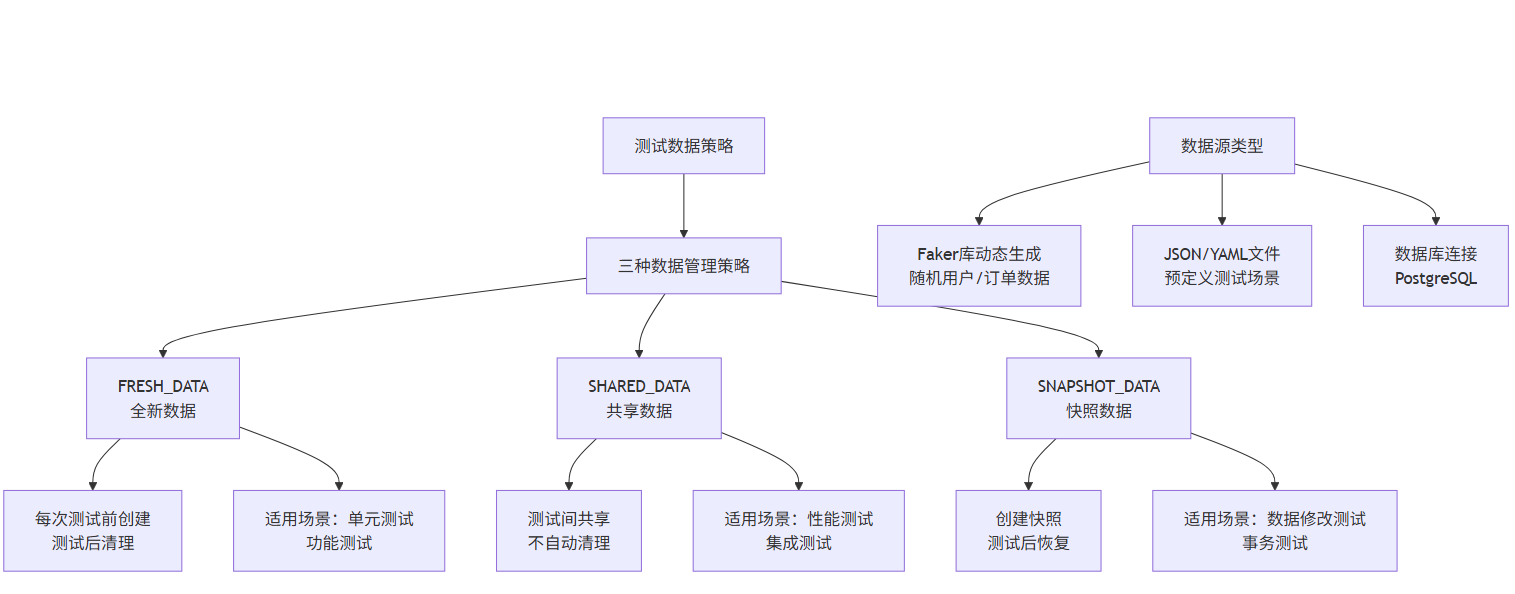

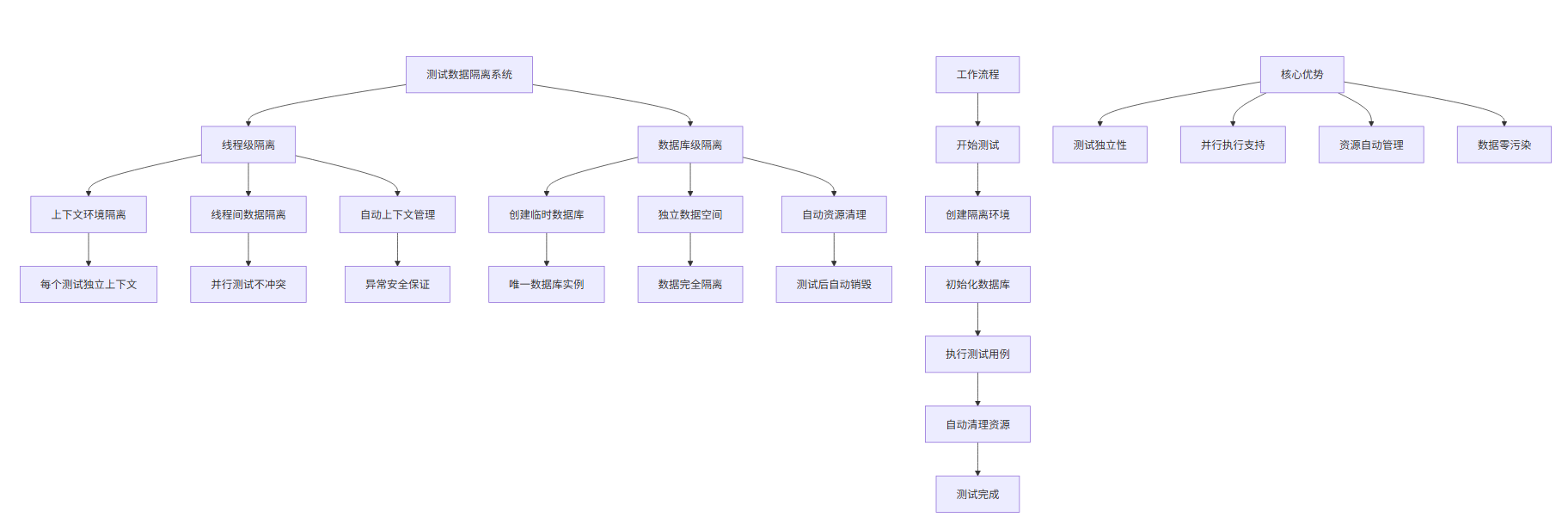

Test Data Management Best Practices

Test Data Strategies

Test Data Isolation Strategies
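A common isolation technique for database-backed tests is to run each test inside a transaction that is always rolled back, so no test ever sees another test's writes. For data that cannot be rolled back, such as records created in other services, unique per-test identifiers are the usual fallback. This minimal sketch uses the standard-library `sqlite3` module, and the helper names are hypothetical. Other databases need their own driver and sometimes extra care, because DDL is not transactional everywhere.

```python
import sqlite3
import uuid
from contextlib import contextmanager


@contextmanager
def isolated_transaction(conn: sqlite3.Connection):
    """Run test code inside a transaction that is always rolled back.

    Open the connection with isolation_level=None so that this helper,
    not the sqlite3 module, issues BEGIN and ROLLBACK.
    """
    conn.execute("BEGIN")
    try:
        yield conn
    finally:
        # Discard everything the test wrote, whether it passed or raised
        conn.execute("ROLLBACK")


def unique_name(prefix: str = "test") -> str:
    """Practically unique identifier for data that cannot be rolled back."""
    return f"{prefix}_{uuid.uuid4().hex[:8]}"
```

In a pytest suite, the same pattern usually lives in a function-scoped fixture. Each test then gets a clean view of the shared schema without paying the cost of recreating it.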

Test Failure Analysis and Maintenance

Intelligent Test Failure Analysis

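An intelligent failure classification system recognizes six failure categories:

| Failure category | Typical symptoms |
|---|---|
| Environment issues | Service connection failures, ports already in use, timeouts |
| Data issues | Missing data, constraint violations, malformed data |
| Code changes | Syntax errors, attribute errors, import problems |
| Infrastructure | Out of memory, insufficient disk space, network problems |
| External dependencies | API rate limiting, third-party service errors |
| Intermittent failures | Flaky (unstable) test cases |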

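Root cause analysis engine:

Multi-dimensional analysis: 1) pattern matching: regular expressions applied to error messages; 2) historical analysis: failure frequency and patterns over the past 7 days; 3) inference: deducing the likely root cause from the failure's characteristics.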
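The first two dimensions can be combined in a few lines. Regular expressions map the error message to one of the categories in the table above. The test's pass/fail history over the last seven days then separates intermittent failures, which have no fixed signature, from consistent ones. This is a minimal sketch, and three parts of it are hypothetical: the patterns, the `(test_name, run_time, passed)` history format and the function names. A real system would need a much larger, project-specific pattern list.

```python
import re
from datetime import datetime, timedelta
from typing import Dict, Iterable, Optional, Tuple

# Illustrative signatures for five categories; the first match wins.
# Intermittent failures have no fixed signature and are inferred from history.
CATEGORY_PATTERNS = [
    ("environment", re.compile(r"connection refused|address already in use|timed? ?out", re.I)),
    ("data", re.compile(r"integrityerror|violates .* constraint|foreign key", re.I)),
    ("code_change", re.compile(r"syntaxerror|attributeerror|importerror|modulenotfounderror", re.I)),
    ("infrastructure", re.compile(r"memoryerror|out of memory|no space left on device", re.I)),
    ("external_dependency", re.compile(r"\b429\b|too many requests|rate limit", re.I)),
]


def classify_failure(message: str) -> Optional[str]:
    """Pattern matching: map an error message to a category, or None."""
    for category, pattern in CATEGORY_PATTERNS:
        if pattern.search(message):
            return category
    return None


def analyse_failure(test: str, message: str,
                    history: Iterable[Tuple[str, datetime, bool]],
                    now: Optional[datetime] = None, window_days: int = 7) -> Dict:
    """Combine pattern matching with the test's outcomes inside the time window."""
    now = now or datetime.now()
    since = now - timedelta(days=window_days)
    recent = [passed for name, ts, passed in history if name == test and ts >= since]
    failures = recent.count(False)
    category = classify_failure(message)
    if category is None and 0 < failures < len(recent):
        # No known signature, but the test both passed and failed recently
        category = "intermittent"
    return {"category": category or "unknown",
            "recent_failures": failures, "recent_runs": len(recent)}
```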

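Automated Maintenance

Proactive maintenance: 1) automatic disabling: identify and disable tests that fail frequently; 2) health monitoring: continuously track test stability; 3) action log: record every maintenance action.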
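For automatic disabling, the raw failure rate is a poor signal. A test that fails on every run after a bad commit is broken, not flaky, and should stay visible. Counting how often consecutive outcomes flip between pass and fail separates the two cases. This is a minimal sketch. The 30% threshold, the 10-run minimum and the `QuarantineManager` class are hypothetical. Actually skipping quarantined tests, for example through a pytest marker or a deselect list, is left to the surrounding tooling.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


def flip_rate(outcomes: List[bool]) -> float:
    """Share of consecutive run pairs whose outcome changed (pass <-> fail)."""
    if len(outcomes) < 2:
        return 0.0
    flips = sum(1 for prev, cur in zip(outcomes, outcomes[1:]) if prev != cur)
    return flips / (len(outcomes) - 1)


@dataclass
class QuarantineManager:
    """Quarantine tests that flip too often and log every action taken."""
    threshold: float = 0.3   # hypothetical cut-off; tune per project
    min_runs: int = 10       # tests with less history are not judged
    quarantined: Set[str] = field(default_factory=set)
    action_log: List[str] = field(default_factory=list)

    def review(self, history: Dict[str, List[bool]]) -> List[str]:
        """Check each test's recent outcomes (oldest first); return newly quarantined tests."""
        newly_quarantined = []
        for test, outcomes in sorted(history.items()):
            if test in self.quarantined or len(outcomes) < self.min_runs:
                continue
            rate = flip_rate(outcomes)
            if rate >= self.threshold:
                self.quarantined.add(test)
                self.action_log.append(f"quarantined {test} (flip rate {rate:.0%})")
                newly_quarantined.append(test)
        return newly_quarantined
```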

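Improvement suggestions: 1) test refactoring: refactoring recommendations for tests with high failure rates; 2) environment optimization: plans for making environments more stable; 3) data management: improvements to the test data strategy.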

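Consolidated Reports

Analysis report: 1) distribution of failures by category; 2) root causes ranked by frequency; 3) tests ranked by number of failures; 4) list of potentially problematic tests.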
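Once failures are classified, producing the report is mostly counting. This minimal sketch uses `collections.Counter` and assumes each failure record is a dict with the hypothetical keys `test`, `category` and `root_cause`. Flagging any test that failed more than once as a potential problem is an illustrative rule, not a standard.

```python
from collections import Counter
from typing import Any, Dict, List


def failure_report(failures: List[Dict[str, str]], top_n: int = 3) -> Dict[str, Any]:
    """Summarise classified failures into the four report sections."""
    total = len(failures)
    by_category = Counter(f["category"] for f in failures)
    by_cause = Counter(f["root_cause"] for f in failures)
    by_test = Counter(f["test"] for f in failures)
    return {
        # 1) share of failures per category, in percent
        "category_distribution": {c: round(n / total * 100, 1) for c, n in by_category.items()},
        # 2) most frequent root causes
        "top_root_causes": by_cause.most_common(top_n),
        # 3) tests with the most failures
        "most_failing_tests": by_test.most_common(top_n),
        # 4) potential problem tests: anything that failed more than once
        "problem_tests": sorted(t for t, n in by_test.items() if n > 1),
    }
```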

Automating Test Maintenance

```python
# test_maintenance_automation.py
import json
import os
import subprocess
import sys
from datetime import datetime, timedelta
from typing import Dict, List, Optional, Set

import git  # GitPython


class TestMaintenanceAutomation:
    """Automated test maintenance"""

    __test__ = False  # the name starts with "Test"; keep pytest from collecting this class

    def __init__(self, repo_path: str):
        self.repo_path = repo_path
        self.repo = git.Repo(repo_path)

    def auto_update_test_dependencies(self) -> Dict:
        """Upgrade outdated packages and record which upgrades fix known vulnerabilities"""
        results = {
            "updated_packages": [],
            "failed_updates": [],
            "security_updates": []
        }
        try:
            # List outdated packages in the current environment
            result = subprocess.run(
                [sys.executable, "-m", "pip", "list", "--outdated", "--format=json"],
                capture_output=True, text=True, check=True
            )
            outdated_packages = json.loads(result.stdout)
            # Run the vulnerability scan once instead of once per package
            vulnerable = self._find_vulnerable_packages()

            for package in outdated_packages:
                package_name = package["name"]
                current_version = package["version"]
                latest_version = package["latest_version"]
                # Does this upgrade affect a package with a known vulnerability?
                is_security_update = package_name.lower() in vulnerable
                try:
                    # Upgrade the package
                    subprocess.run(
                        [sys.executable, "-m", "pip", "install", "--upgrade", package_name],
                        check=True, capture_output=True
                    )
                    results["updated_packages"].append({
                        "name": package_name,
                        "from_version": current_version,
                        "to_version": latest_version,
                        "security_update": is_security_update
                    })
                    if is_security_update:
                        results["security_updates"].append(package_name)
                except subprocess.CalledProcessError as e:
                    results["failed_updates"].append({
                        "name": package_name,
                        "error": str(e)
                    })

            # Update requirements.txt
            self._update_requirements_file()
        except Exception as e:
            results["error"] = str(e)
        return results

    def _find_vulnerable_packages(self) -> Set[str]:
        """Lowercase names of installed packages with known vulnerabilities (via safety)"""
        try:
            result = subprocess.run(
                ["safety", "check", "--json"],
                capture_output=True, text=True
            )
            data = json.loads(result.stdout)
        except (OSError, ValueError):
            # safety is not installed or did not print JSON
            return set()
        # Accept either a dict with a "vulnerabilities" list or a top-level list
        entries = data.get("vulnerabilities", []) if isinstance(data, dict) else data
        return {v.get("package_name", "").lower() for v in entries if isinstance(v, dict)}

    def _update_requirements_file(self):
        """Rewrite requirements.txt with the currently installed versions"""
        # Redirect stdout to the file; a ">" list element is not interpreted as a redirect
        requirements = os.path.join(self.repo_path, "requirements.txt")
        with open(requirements, "w") as f:
            subprocess.run([sys.executable, "-m", "pip", "freeze"], stdout=f, check=True)

    def cleanup_obsolete_tests(self) -> List[str]:
        """List obsolete tests: no matching source file and unchanged for 90 days"""
        obsolete_tests = []
        for test_file in self._find_test_files():
            # Only tests without a corresponding source file are candidates...
            if self._find_corresponding_source(test_file) is None:
                # ...and only if they have not been modified for a long time
                last_modified = self._get_last_modified_date(test_file)
                if last_modified < datetime.now() - timedelta(days=90):
                    obsolete_tests.append(test_file)
        return obsolete_tests

    def _find_test_files(self) -> List[str]:
        """Find all test_*.py files under tests/"""
        test_files = []
        for root, _dirs, files in os.walk(os.path.join(self.repo_path, "tests")):
            for file in files:
                if file.startswith("test_") and file.endswith(".py"):
                    test_files.append(os.path.join(root, file))
        return test_files

    def _find_corresponding_source(self, test_file: str) -> Optional[str]:
        """Map test_<name>.py to src/<name>.py, returning None if that file does not exist"""
        # A deliberately simple mapping; real projects may need more elaborate logic
        test_name = os.path.basename(test_file)
        if test_name.startswith("test_"):
            source_name = test_name[len("test_"):]  # strip the "test_" prefix
            source_path = os.path.join(self.repo_path, "src", source_name)
            return source_path if os.path.exists(source_path) else None
        return None

    def _get_last_modified_date(self, file_path: str) -> datetime:
        """Date of the file's last modification"""
        try:
            # Prefer the date of the last commit that touched the file
            commits = list(self.repo.iter_commits(paths=file_path, max_count=1))
            if commits:
                return datetime.fromtimestamp(commits[0].committed_date)
        except Exception:
            pass
        # Fall back to the file system timestamp if Git history is unavailable
        return datetime.fromtimestamp(os.path.getmtime(file_path))

    def optimize_test_execution_order(self) -> Dict:
        """Suggest an execution order: fast tests first, plus balanced parallel groups"""
        test_metrics = self._analyze_test_metrics()
        # Bucket by duration; within each bucket the fastest tests come first
        fast_tests = [t for t in test_metrics if t["duration"] < 1.0]
        medium_tests = [t for t in test_metrics if 1.0 <= t["duration"] < 10.0]
        slow_tests = [t for t in test_metrics if t["duration"] >= 10.0]
        return {
            "fast_tests": sorted(fast_tests, key=lambda x: x["duration"]),
            "medium_tests": sorted(medium_tests, key=lambda x: x["duration"]),
            "slow_tests": sorted(slow_tests, key=lambda x: x["duration"]),
            "parallel_groups": self._create_parallel_groups(test_metrics)
        }

    def _analyze_test_metrics(self) -> List[Dict]:
        """Collect per-test metrics"""
        # In practice these come from test reports or historical data; this is sample data
        return [
            {"name": "test_user_login", "duration": 0.5, "success_rate": 0.95},
            {"name": "test_order_creation", "duration": 2.3, "success_rate": 0.98},
            {"name": "test_payment_processing", "duration": 15.2, "success_rate": 0.92},
        ]

    def _create_parallel_groups(self, test_metrics: List[Dict], num_groups: int = 3) -> List[List[str]]:
        """Split tests into parallel groups with roughly equal total duration"""
        groups = [[] for _ in range(num_groups)]
        group_times = [0.0] * num_groups
        # Greedy balancing: longest tests first, each into the currently lightest group
        for test in sorted(test_metrics, key=lambda x: x["duration"], reverse=True):
            idx = group_times.index(min(group_times))
            groups[idx].append(test["name"])
            group_times[idx] += test["duration"]
        return groups

    def generate_test_health_report(self) -> Dict:
        """Generate a test health report"""
        report = {
            "timestamp": datetime.now().isoformat(),
            "test_coverage": self._calculate_test_coverage(),
            "test_performance": self._analyze_test_performance(),
            "test_stability": self._analyze_test_stability(),
            "maintenance_recommendations": []
        }
        # Derive maintenance recommendations
        if report["test_coverage"]["percentage"] < 80:
            report["maintenance_recommendations"].append(
                "Test coverage is below 80%; consider adding test cases"
            )
        if report["test_performance"]["avg_duration"] > 300:
            report["maintenance_recommendations"].append(
                "Average test duration exceeds 5 minutes; consider optimizing test performance"
            )
        return report

    def _calculate_test_coverage(self) -> Dict:
        """Read coverage totals from coverage.py's JSON report"""
        try:
            # `coverage report` has no JSON format; `coverage json` writes the JSON report
            json_path = os.path.join(self.repo_path, "coverage.json")
            subprocess.run(
                ["coverage", "json", "-o", json_path],
                capture_output=True, text=True, check=True, cwd=self.repo_path
            )
            with open(json_path) as f:
                totals = json.load(f).get("totals", {})
            return {
                "percentage": totals.get("percent_covered", 0),
                "lines_covered": totals.get("covered_lines", 0),
                "lines_total": totals.get("num_statements", 0)
            }
        except Exception:
            return {"percentage": 0, "lines_covered": 0, "lines_total": 0}

    def _analyze_test_performance(self) -> Dict:
        """Analyze test durations"""
        test_metrics = self._analyze_test_metrics()
        if not test_metrics:
            return {"avg_duration": 0, "total_duration": 0, "slowest_tests": []}
        durations = [t["duration"] for t in test_metrics]
        return {
            "avg_duration": sum(durations) / len(durations),
            "total_duration": sum(durations),
            "slowest_tests": sorted(test_metrics, key=lambda x: x["duration"], reverse=True)[:5]
        }

    def _analyze_test_stability(self) -> Dict:
        """Analyze test stability"""
        test_metrics = self._analyze_test_metrics()
        if not test_metrics:
            return {"avg_success_rate": 0, "unstable_tests": []}
        success_rates = [t["success_rate"] for t in test_metrics]
        unstable_tests = [t for t in test_metrics if t["success_rate"] < 0.95]
        return {
            "avg_success_rate": sum(success_rates) / len(success_rates),
            "unstable_tests": unstable_tests
        }


# Usage example
def run_test_maintenance():
    """Run all maintenance tasks for one repository"""
    maintenance = TestMaintenanceAutomation("/path/to/repo")

    # Update dependencies
    update_results = maintenance.auto_update_test_dependencies()
    print("Dependency updates:", update_results)

    # Find obsolete tests
    obsolete_tests = maintenance.cleanup_obsolete_tests()
    print("Obsolete tests:", obsolete_tests)

    # Optimize the execution order
    optimized_order = maintenance.optimize_test_execution_order()
    print("Optimized test order:", optimized_order)

    # Generate the health report
    health_report = maintenance.generate_test_health_report()
    print("Test health report:", health_report)
```

Hands-On: Building a Complete DevOps Test Pipeline

End-to-End Pipeline Implementation

# devops_pipeline.py

import os

import yaml

import subprocess

import time

from typing import Dict, List, Optional, Any

from dataclasses import dataclass

from enum import Enum

class PipelineStage(Enum):

"""流水线阶段"""

BUILD = "build"

UNIT_TEST = "unit_test"

INTEGRATION_TEST = "integration_test"

SECURITY_SCAN = "security_scan"

PERFORMANCE_TEST = "performance_test"

DEPLOY_STAGING = "deploy_staging"

SMOKE_TEST = "smoke_test"

DEPLOY_PRODUCTION = "deploy_production"

class PipelineStatus(Enum):

"""流水线状态"""

PENDING = "pending"

RUNNING = "running"

SUCCESS = "success"

FAILED = "failed"

SKIPPED = "skipped"

@dataclass

class PipelineResult:

"""流水线结果"""

stage: PipelineStage

status: PipelineStatus

duration: float

logs: str

artifacts: List[str] = None

metrics: Dict[str, Any] = None

class DevOpsPipeline:

"""DevOps测试流水线"""

def __init__(self, config_file: str):

self.config = self._load_config(config_file)

self.results: List[PipelineResult] = []

self.current_stage = None

self.pipeline_start_time = None

def _load_config(self, config_file: str) -> Dict:

"""加载流水线配置"""

with open(config_file, 'r') as f:

return yaml.safe_load(f)

def run_pipeline(self, branch: str = "main") -> Dict:

"""运行完整流水线"""

self.pipeline_start_time = time.time()

try:

# 定义流水线阶段

stages = [

PipelineStage.BUILD,

PipelineStage.UNIT_TEST,

PipelineStage.INTEGRATION_TEST,

PipelineStage.SECURITY_SCAN,

PipelineStage.PERFORMANCE_TEST,

PipelineStage.DEPLOY_STAGING,

PipelineStage.SMOKE_TEST,

PipelineStage.DEPLOY_PRODUCTION

]

# 执行每个阶段

for stage in stages:

if self._should_skip_stage(stage, branch):

self.results.append(PipelineResult(

stage=stage,

status=PipelineStatus.SKIPPED,

duration=0,

logs=f"Stage {stage.value} skipped for branch {branch}"

))

continue

result = self._execute_stage(stage)

self.results.append(result)

# 如果阶段失败,停止流水线

if result.status == PipelineStatus.FAILED:

break

return self._generate_pipeline_report()

except Exception as e:

return {

"status": "error",

"error": str(e),

"results": [r.__dict__ for r in self.results]

}

def _should_skip_stage(self, stage: PipelineStage, branch: str) -> bool:

"""判断是否应该跳过某个阶段"""

stage_config = self.config.get("stages", {}).get(stage.value, {})

# 检查分支条件

only_branches = stage_config.get("only", [])

if only_branches and branch not in only_branches:

return True

except_branches = stage_config.get("except", [])

if except_branches and branch in except_branches:

return True

return False

def _execute_stage(self, stage: PipelineStage) -> PipelineResult:

"""执行流水线阶段"""

self.current_stage = stage

start_time = time.time()

print(f"Executing stage: {stage.value}")

try:

if stage == PipelineStage.BUILD:

result = self._build_stage()

elif stage == PipelineStage.UNIT_TEST:

result = self._unit_test_stage()

elif stage == PipelineStage.INTEGRATION_TEST:

result = self._integration_test_stage()

elif stage == PipelineStage.SECURITY_SCAN:

result = self._security_scan_stage()

elif stage == PipelineStage.PERFORMANCE_TEST:

result = self._performance_test_stage()

elif stage == PipelineStage.DEPLOY_STAGING:

result = self._deploy_staging_stage()

elif stage == PipelineStage.SMOKE_TEST:

result = self._smoke_test_stage()

elif stage == PipelineStage.DEPLOY_PRODUCTION:

result = self._deploy_production_stage()

else:

raise ValueError(f"Unknown stage: {stage}")

duration = time.time() - start_time

result.duration = duration

result.stage = stage

return result

except Exception as e:

return PipelineResult(

stage=stage,

status=PipelineStatus.FAILED,

duration=time.time() - start_time,

logs=f"Stage failed with error: {str(e)}"

)

def _build_stage(self) -> PipelineResult:

"""构建阶段"""

build_config = self.config.get("stages", {}).get("build", {})

# 构建Docker镜像

image_name = build_config.get("image_name", "app")

dockerfile = build_config.get("dockerfile", "Dockerfile")

cmd = ["docker", "build", "-t", image_name, "-f", dockerfile, "."]

result = subprocess.run(cmd, capture_output=True, text=True)

if result.returncode == 0:

return PipelineResult(

stage=PipelineStage.BUILD,

status=PipelineStatus.SUCCESS,

duration=0, # 将在上层设置

logs=result.stdout,

artifacts=[image_name]

)

else:

return PipelineResult(

stage=PipelineStage.BUILD,

status=PipelineStatus.FAILED,

duration=0,

logs=result.stderr

)

def _unit_test_stage(self) -> PipelineResult:

"""单元测试阶段"""

test_config = self.config.get("stages", {}).get("unit_test", {})

# 运行单元测试

cmd = test_config.get("command", ["python", "-m", "pytest", "tests/unit/", "-v", "--junitxml=reports/unit-tests.xml"])

result = subprocess.run(cmd, capture_output=True, text=True)

# 解析测试结果

metrics = self._parse_test_results("reports/unit-tests.xml")

status = PipelineStatus.SUCCESS if result.returncode == 0 else PipelineStatus.FAILED

return PipelineResult(

stage=PipelineStage.UNIT_TEST,

status=status,

duration=0,

logs=result.stdout + result.stderr,

artifacts=["reports/unit-tests.xml"],

metrics=metrics

)

def _integration_test_stage(self) -> PipelineResult:

"""集成测试阶段"""

test_config = self.config.get("stages", {}).get("integration_test", {})

# 启动测试环境

env_manager = TestEnvironmentManager()

try:

with env_manager.test_environment():

# 运行集成测试

cmd = test_config.get("command", ["python", "-m", "pytest", "tests/integration/", "-v"])

result = subprocess.run(cmd, capture_output=True, text=True)

status = PipelineStatus.SUCCESS if result.returncode == 0 else PipelineStatus.FAILED

return PipelineResult(

stage=PipelineStage.INTEGRATION_TEST,

status=status,

duration=0,

logs=result.stdout + result.stderr

)

except Exception as e:

return PipelineResult(

stage=PipelineStage.INTEGRATION_TEST,

status=PipelineStatus.FAILED,

duration=0,

logs=f"Integration test environment failed: {str(e)}"

)

def _security_scan_stage(self) -> PipelineResult:

"""安全扫描阶段"""

security_config = self.config.get("stages", {}).get("security_scan", {})

# 运行安全扫描

scan_results = []

# 代码安全扫描

if security_config.get("code_scan", True):

cmd = ["bandit", "-r", "src/", "-f", "json", "-o", "reports/security-code.json"]

result = subprocess.run(cmd, capture_output=True, text=True)

scan_results.append(("code_scan", result.returncode == 0))

# 依赖安全扫描

if security_config.get("dependency_scan", True):

cmd = ["safety", "check", "--json", "--output", "reports/security-deps.json"]

result = subprocess.run(cmd, capture_output=True, text=True)

scan_results.append(("dependency_scan", result.returncode == 0))

# 容器安全扫描

if security_config.get("container_scan", True):

image_name = self.config.get("stages", {}).get("build", {}).get("image_name", "app")

cmd = ["trivy", "image", "--format", "json", "--output", "reports/security-container.json", image_name]

result = subprocess.run(cmd, capture_output=True, text=True)

scan_results.append(("container_scan", result.returncode == 0))

# 判断整体安全扫描结果

all_passed = all(result[1] for result in scan_results)

return PipelineResult(

stage=PipelineStage.SECURITY_SCAN,

status=PipelineStatus.SUCCESS if all_passed else PipelineStatus.FAILED,

duration=0,

logs=f"Security scan results: {scan_results}",

artifacts=["reports/security-code.json", "reports/security-deps.json", "reports/security-container.json"],

metrics={"scan_results": scan_results}

)

def _performance_test_stage(self) -> PipelineResult:

"""性能测试阶段"""

perf_config = self.config.get("stages", {}).get("performance_test", {})

# 启动应用进行性能测试

image_name = self.config.get("stages", {}).get("build", {}).get("image_name", "app")

# 启动应用容器

start_cmd = ["docker", "run", "-d", "--name", "perf-test-app", "-p", "8080:8080", image_name]

subprocess.run(start_cmd, capture_output=True)

try:

# 等待应用启动

time.sleep(10)

# 运行性能测试

jmx_file = perf_config.get("jmx_file", "performance/load-test.jmx")

cmd = [

"jmeter", "-n", "-t", jmx_file,

"-l", "reports/performance-results.jtl",

"-j", "reports/jmeter.log"

]

result = subprocess.run(cmd, capture_output=True, text=True)

# 解析性能测试结果

perf_metrics = self._parse_performance_results("reports/performance-results.jtl")

# 检查性能阈值

passed = self._check_performance_thresholds(perf_metrics, perf_config.get("thresholds", {}))

return PipelineResult(

stage=PipelineStage.PERFORMANCE_TEST,

status=PipelineStatus.SUCCESS if passed else PipelineStatus.FAILED,

duration=0,

logs=result.stdout + result.stderr,

artifacts=["reports/performance-results.jtl", "reports/jmeter.log"],

metrics=perf_metrics

)

finally:

# 清理测试容器

subprocess.run(["docker", "stop", "perf-test-app"], capture_output=True)

subprocess.run(["docker", "rm", "perf-test-app"], capture_output=True)

def _deploy_staging_stage(self) -> PipelineResult:

"""部署到预发环境"""

deploy_config = self.config.get("stages", {}).get("deploy_staging", {})

image_name = self.config.get("stages", {}).get("build", {}).get("image_name", "app")

registry = deploy_config.get("registry", "localhost:5000")

# 推送镜像到注册表

tag_cmd = ["docker", "tag", image_name, f"{registry}/{image_name}:staging"]

push_cmd = ["docker", "push", f"{registry}/{image_name}:staging"]

tag_result = subprocess.run(tag_cmd, capture_output=True, text=True)

if tag_result.returncode != 0:

return PipelineResult(

stage=PipelineStage.DEPLOY_STAGING,

status=PipelineStatus.FAILED,

duration=0,

logs=f"Failed to tag image: {tag_result.stderr}"

)

push_result = subprocess.run(push_cmd, capture_output=True, text=True)

if push_result.returncode != 0:

return PipelineResult(

stage=PipelineStage.DEPLOY_STAGING,

status=PipelineStatus.FAILED,

duration=0,

logs=f"Failed to push image: {push_result.stderr}"

)

# 部署到Kubernetes

if deploy_config.get("kubernetes", True):

kubectl_cmd = [

"kubectl", "set", "image", "deployment/app",

f"app={registry}/{image_name}:staging",

"-n", "staging"

]

kubectl_result = subprocess.run(kubectl_cmd, capture_output=True, text=True)

if kubectl_result.returncode != 0:

return PipelineResult(

stage=PipelineStage.DEPLOY_STAGING,

status=PipelineStatus.FAILED,

duration=0,

logs=f"Failed to deploy to Kubernetes: {kubectl_result.stderr}"

)

# 等待部署完成

rollout_cmd = ["kubectl", "rollout", "status", "deployment/app", "-n", "staging"]

rollout_result = subprocess.run(rollout_cmd, capture_output=True, text=True)

return PipelineResult(

stage=PipelineStage.DEPLOY_STAGING,

status=PipelineStatus.SUCCESS,

duration=0,

logs="Successfully deployed to staging environment"

)

def _smoke_test_stage(self) -> PipelineResult:

"""冒烟测试阶段"""

smoke_config = self.config.get("stages", {}).get("smoke_test", {})

base_url = smoke_config.get("base_url", "https://staging.example.com")

# 运行冒烟测试

cmd = [

"python", "-m", "pytest", "tests/smoke/",

f"--base-url={base_url}",

"--junitxml=reports/smoke-tests.xml",

"-v"

]

result = subprocess.run(cmd, capture_output=True, text=True)

# 解析测试结果

metrics = self._parse_test_results("reports/smoke-tests.xml")

return PipelineResult(

stage=PipelineStage.SMOKE_TEST,

status=PipelineStatus.SUCCESS if result.returncode == 0 else PipelineStatus.FAILED,

duration=0,

logs=result.stdout + result.stderr,

artifacts=["reports/smoke-tests.xml"],

metrics=metrics

)

def _deploy_production_stage(self) -> PipelineResult:

"""部署到生产环境"""

deploy_config = self.config.get("stages", {}).get("deploy_production", {})

# 生产部署通常需要人工确认

if deploy_config.get("manual_approval", True):

approval = input("Deploy to production? (y/N): ")

if approval.lower() != 'y':

return PipelineResult(

stage=PipelineStage.DEPLOY_PRODUCTION,

status=PipelineStatus.SKIPPED,

duration=0,

logs="Production deployment cancelled by user"

)

image_name = self.config.get("stages", {}).get("build", {}).get("image_name", "app")

registry = deploy_config.get("registry", "localhost:5000")

# 推送生产镜像

tag_cmd = ["docker", "tag", image_name, f"{registry}/{image_name}:latest"]

push_cmd = ["docker", "push", f"{registry}/{image_name}:latest"]

tag_result = subprocess.run(tag_cmd, capture_output=True, text=True)

push_result = subprocess.run(push_cmd, capture_output=True, text=True)

if tag_result.returncode != 0 or push_result.returncode != 0:

return PipelineResult(

stage=PipelineStage.DEPLOY_PRODUCTION,

status=PipelineStatus.FAILED,

duration=0,

logs=f"Failed to push production image: {push_result.stderr}"

)

# 蓝绿部署到生产环境

if deploy_config.get("blue_green", True):

result = self._blue_green_deployment(registry, image_name)

else:

# 滚动更新

kubectl_cmd = [

"kubectl", "set", "image", "deployment/app",

f"app={registry}/{image_name}:latest",

"-n", "production"

]

kubectl_result = subprocess.run(kubectl_cmd, capture_output=True, text=True)

result = kubectl_result.returncode == 0

return PipelineResult(

stage=PipelineStage.DEPLOY_PRODUCTION,

status=PipelineStatus.SUCCESS if result else PipelineStatus.FAILED,

duration=0,

logs="Successfully deployed to production" if result else "Production deployment failed"

)

    def _blue_green_deployment(self, registry: str, image_name: str) -> bool:
        """Blue-green deployment: update the idle color, wait until it is ready, then switch traffic."""
        try:
            # Find the color the Service currently routes to (defaults to "blue")
            get_active_cmd = [
                "kubectl", "get", "service", "app-service",
                "-n", "production",
                "-o", "jsonpath={.spec.selector.version}"
            ]
            active_result = subprocess.run(get_active_cmd, capture_output=True, text=True)
            current_version = active_result.stdout.strip() or "blue"
            new_version = "green" if current_version == "blue" else "blue"

            # Deploy the new image to the idle color
            deploy_cmd = [
                "kubectl", "set", "image", f"deployment/app-{new_version}",
                f"app={registry}/{image_name}:latest",
                "-n", "production"
            ]
            deploy_result = subprocess.run(deploy_cmd, capture_output=True, text=True)
            if deploy_result.returncode != 0:
                return False

            # Wait for the new version to become ready
            rollout_cmd = [
                "kubectl", "rollout", "status", f"deployment/app-{new_version}",
                "-n", "production", "--timeout=300s"
            ]
            rollout_result = subprocess.run(rollout_cmd, capture_output=True, text=True)
            if rollout_result.returncode != 0:
                return False

            # Switch traffic by patching the Service selector,
            # producing e.g. {"spec":{"selector":{"version":"green"}}}
            switch_cmd = [
                "kubectl", "patch", "service", "app-service",
                "-n", "production",
                "-p", f'{{"spec":{{"selector":{{"version":"{new_version}"}}}}}}'
            ]
            switch_result = subprocess.run(switch_cmd, capture_output=True, text=True)
            return switch_result.returncode == 0

        except Exception as e:
            print(f"Blue-green deployment failed: {e}")
            return False

    def _parse_test_results(self, junit_file: str) -> Dict[str, Any]:
        """Parse JUnit XML test results."""
        try:
            import xml.etree.ElementTree as ET

            if not os.path.exists(junit_file):
                return {"error": "Test results file not found"}

            tree = ET.parse(junit_file)
            root = tree.getroot()

            # pytest writes <testsuites><testsuite .../></testsuites>; other tools emit a bare <testsuite>.
            # Compare with None explicitly: `root.find(...) or root` is unreliable because
            # an Element without children evaluates as false.
            testsuite = root if root.tag == 'testsuite' else root.find('testsuite')
            if testsuite is None:
                return {"error": "No <testsuite> element found"}

            return {
                "total_tests": int(testsuite.get('tests', 0)),
                "failures": int(testsuite.get('failures', 0)),
                "errors": int(testsuite.get('errors', 0)),
                "skipped": int(testsuite.get('skipped', 0)),
                "time": float(testsuite.get('time', 0)),
                "success_rate": self._calculate_success_rate(testsuite)
            }

        except Exception as e:
            return {"error": f"Failed to parse test results: {e}"}

    def _calculate_success_rate(self, testsuite) -> float:
        """Compute the test success rate as a percentage."""
        total = int(testsuite.get('tests', 0))
        failures = int(testsuite.get('failures', 0))
        errors = int(testsuite.get('errors', 0))
        if total == 0:
            return 0.0
        # Skipped tests are neither failures nor errors, so they count as passed here
        passed = total - failures - errors
        return (passed / total) * 100

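    def _parse_performance_results(self, jtl_file: str) -> Dict[str, Any]:
        """Parse JMeter performance test results (JTL in CSV format, JMeter's default)."""
        try:
            if not os.path.exists(jtl_file):
                return {"error": "Performance results file not found"}

            import pandas as pd

            # Read the JTL file; elapsed and timeStamp are in milliseconds
            df = pd.read_csv(jtl_file)
            if df.empty:
                return {"error": "Performance results file contains no samples"}

            # pandas may parse the success column as bool or as "true"/"false" strings
            failed = df['success'].astype(str).str.lower() != 'true'
            # timeStamp is the sample start time; guard against a zero-length window
            duration_ms = df['timeStamp'].max() - df['timeStamp'].min()

            # float() turns numpy scalars into plain floats so the metrics stay JSON-serializable
            return {
                "total_samples": len(df),
                "avg_response_time": float(df['elapsed'].mean()),
                "min_response_time": float(df['elapsed'].min()),
                "max_response_time": float(df['elapsed'].max()),
                "p95_response_time": float(df['elapsed'].quantile(0.95)),
                "p99_response_time": float(df['elapsed'].quantile(0.99)),
                "error_rate": float(failed.mean() * 100),
                "throughput": float(len(df) / duration_ms * 1000) if duration_ms > 0 else 0.0
            }

        except Exception as e:
            return {"error": f"Failed to parse performance results: {e}"}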

    def _check_performance_thresholds(self, metrics: Dict[str, Any], thresholds: Dict[str, Any]) -> bool:
        """Check performance metrics against the configured thresholds."""
        if "error" in metrics:
            return False

        # Average response time (ms)
        if "avg_response_time" in thresholds:
            if metrics.get("avg_response_time", 0) > thresholds["avg_response_time"]:
                return False

        # P95 response time (ms)
        if "p95_response_time" in thresholds:
            if metrics.get("p95_response_time", 0) > thresholds["p95_response_time"]:
                return False

        # Error rate (%)
        if "error_rate" in thresholds:
            if metrics.get("error_rate", 0) > thresholds["error_rate"]:
                return False

        # Throughput (requests/sec), a lower bound
        if "min_throughput" in thresholds:
            if metrics.get("throughput", 0) < thresholds["min_throughput"]:
                return False

        return True

    def _generate_pipeline_report(self) -> Dict:
        """Generate the pipeline report."""
        total_duration = time.time() - self.pipeline_start_time if self.pipeline_start_time else 0

        # Count stages per status
        status_counts = {}
        for result in self.results:
            status = result.status.value
            status_counts[status] = status_counts.get(status, 0) + 1

        # Overall status: any failed stage wins, then any stage still running
        overall_status = "success"
        if any(r.status == PipelineStatus.FAILED for r in self.results):
            overall_status = "failed"
        elif any(r.status == PipelineStatus.RUNNING for r in self.results):
            overall_status = "running"

        # Collect all artifacts
        all_artifacts = []
        for result in self.results:
            if result.artifacts:
                all_artifacts.extend(result.artifacts)

        # Collect metrics, keyed by stage name
        all_metrics = {}
        for result in self.results:
            if result.metrics:
                all_metrics[result.stage.value] = result.metrics

        return {
            "overall_status": overall_status,
            "total_duration": total_duration,
            "stage_results": [
                {
                    "stage": r.stage.value,
                    "status": r.status.value,
                    "duration": r.duration,
                    "artifacts": r.artifacts or [],
                    "metrics": r.metrics or {}
                }
                for r in self.results
            ],
            "status_summary": status_counts,
            "artifacts": all_artifacts,
            "metrics": all_metrics,
            "timestamp": datetime.now().isoformat()
        }

# Example pipeline configuration file
# pipeline-config.yaml
"""
stages:
  build:
    image_name: "user-service"
    dockerfile: "Dockerfile"
  unit_test:
    command: ["python", "-m", "pytest", "tests/unit/", "-v", "--junitxml=reports/unit-tests.xml", "--cov=src"]
  integration_test:
    command: ["python", "-m", "pytest", "tests/integration/", "-v", "--junitxml=reports/integration-tests.xml"]
  security_scan:
    code_scan: true
    dependency_scan: true
    container_scan: true
  performance_test:
    jmx_file: "performance/load-test.jmx"
    thresholds:
      avg_response_time: 1000   # ms
      p95_response_time: 2000   # ms
      error_rate: 5             # %
      min_throughput: 100       # requests/sec
    only: ["main", "develop"]
  deploy_staging:
    registry: "your-registry.com"
    kubernetes: true
    only: ["develop"]
  smoke_test:
    base_url: "https://staging.example.com"
    only: ["develop"]
  deploy_production:
    registry: "your-registry.com"
    kubernetes: true
    blue_green: true
    manual_approval: true
    only: ["main"]
"""

# Usage example
def run_devops_pipeline():
    """Run the DevOps pipeline example."""
    pipeline = DevOpsPipeline("pipeline-config.yaml")

    # Run the pipeline
    report = pipeline.run_pipeline(branch="develop")

    print("Pipeline Report:")
    print(f"Overall Status: {report['overall_status']}")
    print(f"Total Duration: {report['total_duration']:.2f} seconds")

    for stage_result in report['stage_results']:
        print(f"\nStage: {stage_result['stage']}")
        print(f"Status: {stage_result['status']}")
        print(f"Duration: {stage_result['duration']:.2f} seconds")
        if stage_result['artifacts']:
            print(f"Artifacts: {stage_result['artifacts']}")
        if stage_result['metrics']:
            print(f"Metrics: {stage_result['metrics']}")


if __name__ == "__main__":
    run_devops_pipeline()
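
`_parse_test_results` reads only the first `<testsuite>` it finds. pytest's `--junitxml` output wraps suites in a `<testsuites>` root, and a report merged from several tools can contain more than one suite. The sketch below is a hypothetical helper, `summarize_junit`, that is not part of the `DevOpsPipeline` class; it sums the counters across all direct `<testsuite>` children using only the standard library and keeps the same success-rate formula as `_calculate_success_rate`.

```python
import xml.etree.ElementTree as ET
from typing import Any, Dict


def summarize_junit(xml_text: str) -> Dict[str, Any]:
    """Sum JUnit counters over every <testsuite> (hypothetical helper, not part of the pipeline)."""
    root = ET.fromstring(xml_text)
    # A bare <testsuite> root is its own only suite; otherwise take the direct <testsuite> children
    suites = [root] if root.tag == "testsuite" else root.findall("testsuite")

    totals: Dict[str, Any] = {"total_tests": 0, "failures": 0, "errors": 0, "skipped": 0, "time": 0.0}
    for suite in suites:
        totals["total_tests"] += int(suite.get("tests", 0))
        totals["failures"] += int(suite.get("failures", 0))
        totals["errors"] += int(suite.get("errors", 0))
        totals["skipped"] += int(suite.get("skipped", 0))
        totals["time"] += float(suite.get("time", 0))

    # Same formula as _calculate_success_rate: skipped tests are not treated as failures
    total = totals["total_tests"]
    passed = total - totals["failures"] - totals["errors"]
    totals["success_rate"] = passed / total * 100 if total else 0.0
    return totals
```

For example, a `<testsuites>` report with two suites of 10 and 5 tests, one failure and one error, yields `total_tests == 15` and a success rate of about 86.7%. Whether skipped tests should count as passed is a policy choice; excluding them from both numerator and denominator is an equally defensible alternative.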

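`_check_performance_thresholds` returns a single boolean, so a failed performance gate does not say which limit was exceeded. A variant that collects every violation is easier to act on in the pipeline report. The sketch below, `find_threshold_violations`, is a hypothetical helper; it reuses the metric and threshold keys from `pipeline-config.yaml` above, treating `avg_response_time`, `p95_response_time`, and `error_rate` as upper bounds and `min_throughput` as a lower bound on `throughput`.

```python
from typing import Any, Dict, List

# (metric key, threshold key, True if the metric must stay at or below the threshold)
RULES = [
    ("avg_response_time", "avg_response_time", True),
    ("p95_response_time", "p95_response_time", True),
    ("error_rate", "error_rate", True),
    ("throughput", "min_throughput", False),
]


def find_threshold_violations(metrics: Dict[str, Any], thresholds: Dict[str, Any]) -> List[str]:
    """Return one message per violated threshold; an empty list means the gate passes (hypothetical helper)."""
    if "error" in metrics:
        return [f"metrics unavailable: {metrics['error']}"]

    violations = []
    for metric_key, threshold_key, is_upper_bound in RULES:
        if threshold_key not in thresholds:
            continue  # this threshold is not configured
        limit = thresholds[threshold_key]
        value = metrics.get(metric_key)
        if value is None:
            violations.append(f"{metric_key}: missing from metrics")
        elif is_upper_bound and value > limit:
            violations.append(f"{metric_key}={value} exceeds {limit}")
        elif not is_upper_bound and value < limit:
            violations.append(f"{metric_key}={value} is below {limit}")
    return violations
```

Unlike the original method, a metric that is missing from `metrics` is reported as a violation instead of silently defaulting to 0, which would otherwise pass every upper-bound check.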
Pipeline Configuration Management

# pipeline_config_manager.py
import json
from dataclasses import dataclass, asdict
from enum import Enum
from typing import Dict, List, Any, Optional

import yaml  # PyYAML


class EnvironmentType(Enum):
    """Environment type."""
    DEVELOPMENT = "development"
    TESTING = "testing"
    STAGING = "staging"
    PRODUCTION = "production"


@dataclass
class StageConfig:
    """Configuration of a single pipeline stage."""
    name: str
    enabled: bool = True
    timeout: int = 300  # seconds
    retry_count: int = 0
    only_branches: Optional[List[str]] = None
    except_branches: Optional[List[str]] = None
    environment_variables: Optional[Dict[str, str]] = None
    commands: Optional[List[str]] = None
    artifacts: Optional[List[str]] = None
    dependencies: Optional[List[str]] = None


@dataclass
class EnvironmentConfig:
    """Configuration of a deployment environment."""
    name: str
    type: EnvironmentType
    url: Optional[str] = None
    database_url: Optional[str] = None
    redis_url: Optional[str] = None
    kubernetes_namespace: Optional[str] = None
    resource_limits: Optional[Dict[str, str]] = None


@dataclass
class PipelineConfig:
    """Top-level pipeline configuration."""
    name: str
    version: str
    stages: List[StageConfig]
    environments: List[EnvironmentConfig]
    global_variables: Optional[Dict[str, str]] = None
    notifications: Optional[Dict[str, Any]] = None

class PipelineConfigManager:
    """Pipeline configuration manager."""

    def __init__(self, config_file: str):
        self.config_file = config_file
        self.config = self._load_config()

    def _load_config(self) -> PipelineConfig:
        """Load the configuration file (YAML or JSON, chosen by file extension)."""
        with open(self.config_file, 'r', encoding='utf-8') as f:
            if self.config_file.endswith(('.yaml', '.yml')):
                data = yaml.safe_load(f)
            else:
                data = json.load(f)
        return self._parse_config(data)

    def _parse_config(self, data: Dict) -> PipelineConfig:
        """Convert raw configuration data into dataclasses."""
        # Parse stage configurations
        stages = []
        for stage_data in data.get('stages', []):
            stage = StageConfig(
                name=stage_data['name'],
                enabled=stage_data.get('enabled', True),
                timeout=stage_data.get('timeout', 300),
                retry_count=stage_data.get('retry_count', 0),
                only_branches=stage_data.get('only_branches'),
                except_branches=stage_data.get('except_branches'),
                environment_variables=stage_data.get('environment_variables'),
                commands=stage_data.get('commands'),
                artifacts=stage_data.get('artifacts'),
                dependencies=stage_data.get('dependencies')
            )
            stages.append(stage)

        # Parse environment configurations
        environments = []
        for env_data in data.get('environments', []):
            env = EnvironmentConfig(
                name=env_data['name'],
                type=EnvironmentType(env_data['type']),
                url=env_data.get('url'),
                database_url=env_data.get('database_url'),
                redis_url=env_data.get('redis_url'),
                kubernetes_namespace=env_data.get('kubernetes_namespace'),
                resource_limits=env_data.get('resource_limits')
            )
            environments.append(env)

        return PipelineConfig(
            name=data['name'],
            version=data['version'],
            stages=stages,
            environments=environments,
            global_variables=data.get('global_variables'),
            notifications=data.get('notifications')
        )

    def get_stage_config(self, stage_name: str) -> Optional[StageConfig]:
        """Return the configuration of a stage, or None if it does not exist."""
        for stage in self.config.stages:
            if stage.name == stage_name:
                return stage
        return None

    def get_environment_config(self, env_name: str) -> Optional[EnvironmentConfig]:
        """Return the configuration of an environment, or None if it does not exist."""
        for env in self.config.environments:
            if env.name == env_name:
                return env
        return None

    def should_run_stage(self, stage_name: str, branch: str) -> bool:
        """Decide whether a stage should run on the given branch."""
        stage = self.get_stage_config(stage_name)
        if not stage or not stage.enabled:
            return False

        # Apply the branch filters
        if stage.only_branches and branch not in stage.only_branches:
            return False
        if stage.except_branches and branch in stage.except_branches:
            return False

        return True

    def get_stage_dependencies(self, stage_name: str) -> List[str]:
        """Return the names of the stages this stage depends on."""
        stage = self.get_stage_config(stage_name)
        return stage.dependencies if stage and stage.dependencies else []

    def validate_config(self) -> List[str]:
        """Validate the configuration and return a list of error messages."""
        errors = []

        # Stage names must be unique
        stage_names = [stage.name for stage in self.config.stages]
        if len(stage_names) != len(set(stage_names)):
            errors.append("Stage names must be unique")

        # Environment names must be unique
        env_names = [env.name for env in self.config.environments]
        if len(env_names) != len(set(env_names)):
            errors.append("Environment names must be unique")

        # Every dependency must refer to an existing stage
        for stage in self.config.stages:
            if stage.dependencies:
                for dep in stage.dependencies:
                    if dep not in stage_names:
                        errors.append(f"Stage '{stage.name}' depends on non-existent stage '{dep}'")

        return errors

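    def save_config(self, output_file: Optional[str] = None):
        """Save the configuration (YAML or JSON, chosen by file extension)."""
        output_file = output_file or self.config_file
        # asdict() keeps EnvironmentType members as Enum objects; store their string values,
        # otherwise json.dump raises TypeError and yaml.dump writes Python-specific tags
        # that yaml.safe_load cannot read back
        config_dict = asdict(
            self.config,
            dict_factory=lambda items: {k: (v.value if isinstance(v, Enum) else v) for k, v in items},
        )

        with open(output_file, 'w', encoding='utf-8') as f:
            if output_file.endswith(('.yaml', '.yml')):
                yaml.dump(config_dict, f, default_flow_style=False, sort_keys=False)
            else:
                json.dump(config_dict, f, indent=2)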

# Example configuration file
SAMPLE_CONFIG = """
name: "User Service Pipeline"
version: "1.0"

global_variables:
  DOCKER_REGISTRY: "your-registry.com"
  APP_NAME: "user-service"

environments:
  - name: "test"
    type: "testing"
    database_url: "postgresql://postgres:password@localhost:5432/testdb"
    redis_url: "redis://localhost:6379"
    resource_limits:
      memory: "512Mi"
      cpu: "500m"

  - name: "staging"
    type: "staging"
    url: "https://staging.example.com"
    kubernetes_namespace: "staging"
    database_url: "postgresql://postgres:password@staging-db:5432/appdb"
    redis_url: "redis://staging-redis:6379"
    resource_limits:
      memory: "1Gi"
      cpu: "1000m"

  - name: "production"
    type: "production"
    url: "https://example.com"
    kubernetes_namespace: "production"
    database_url: "postgresql://postgres:password@prod-db:5432/appdb"
    redis_url: "redis://prod-redis:6379"
    resource_limits:
      memory: "2Gi"
      cpu: "2000m"

stages:
  - name: "build"
    enabled: true
    timeout: 600
    commands:
      - "docker build -t ${APP_NAME}:${BUILD_ID} ."
    artifacts:
      - "${APP_NAME}:${BUILD_ID}"

  - name: "unit_test"
    enabled: true
    timeout: 300
    dependencies: ["build"]
    commands:
      # --cov-report is needed so that reports/coverage.xml (listed below) is actually written
      - "pytest tests/unit/ --junitxml=reports/unit-tests.xml --cov=src --cov-report=xml:reports/coverage.xml"
    artifacts:
      - "reports/unit-tests.xml"
      - "reports/coverage.xml"

  - name: "integration_test"
    enabled: true
    timeout: 600
    dependencies: ["build"]
    environment_variables:
      DATABASE_URL: "${TEST_DATABASE_URL}"
      REDIS_URL: "${TEST_REDIS_URL}"
    commands:
      - "pytest tests/integration/ --junitxml=reports/integration-tests.xml"
    artifacts:
      - "reports/integration-tests.xml"

  - name: "security_scan"
    enabled: true
    timeout: 300
    dependencies: ["build"]
    commands:
      - "bandit -r src/ -f json -o reports/security-code.json"
      - "safety check --json --output reports/security-deps.json"
      - "trivy image --format json --output reports/security-container.json ${APP_NAME}:${BUILD_ID}"
    artifacts:
      - "reports/security-code.json"
      - "reports/security-deps.json"
      - "reports/security-container.json"

  - name: "performance_test"
    enabled: true
    timeout: 1800
    only_branches: ["main", "develop"]
    dependencies: ["build"]
    commands:
      - "jmeter -n -t performance/load-test.jmx -l reports/performance-results.jtl"
    artifacts:
      - "reports/performance-results.jtl"

  - name: "deploy_staging"
    enabled: true
    timeout: 600
    only_branches: ["develop"]
    dependencies: ["unit_test", "integration_test", "security_scan"]
    commands:
      - "docker tag ${APP_NAME}:${BUILD_ID} ${DOCKER_REGISTRY}/${APP_NAME}:staging"
      - "docker push ${DOCKER_REGISTRY}/${APP_NAME}:staging"
      - "kubectl set image deployment/app app=${DOCKER_REGISTRY}/${APP_NAME}:staging -n staging"

  - name: "smoke_test"
    enabled: true
    timeout: 300
    only_branches: ["develop"]
    dependencies: ["deploy_staging"]
    commands:
      - "pytest tests/smoke/ --base-url=https://staging.example.com --junitxml=reports/smoke-tests.xml"
    artifacts:
      - "reports/smoke-tests.xml"

  - name: "deploy_production"
    enabled: true
    timeout: 600
    only_branches: ["main"]
    dependencies: ["unit_test", "integration_test", "security_scan", "performance_test"]
    commands:
      - "docker tag ${APP_NAME}:${BUILD_ID} ${DOCKER_REGISTRY}/${APP_NAME}:latest"
      - "docker push ${DOCKER_REGISTRY}/${APP_NAME}:latest"
      - "kubectl set image deployment/app app=${DOCKER_REGISTRY}/${APP_NAME}:latest -n production"

notifications:
  slack:
    webhook_url: "https://hooks.slack.com/services/YOUR/SLACK/WEBHOOK"
    channel: "#deployments"
  email:
    smtp_server: "smtp.example.com"
    recipients: ["team@example.com"]
"""

# Usage example
def manage_pipeline_config():
    """Example of managing the pipeline configuration."""
    # Write the sample configuration file
    with open('pipeline-config.yaml', 'w', encoding='utf-8') as f:
        f.write(SAMPLE_CONFIG)

    # Load the configuration
    config_manager = PipelineConfigManager('pipeline-config.yaml')

    # Validate the configuration
    errors = config_manager.validate_config()
    if errors:
        print("Configuration errors:")
        for error in errors:
            print(f"  - {error}")
        return

    # Check which stages should run on the branch
    branch = "develop"
    for stage in config_manager.config.stages:
        should_run = config_manager.should_run_stage(stage.name, branch)
        print(f"Stage '{stage.name}' should run on branch '{branch}': {should_run}")

    # Look up a stage's dependencies
    stage_name = "deploy_staging"
    dependencies = config_manager.get_stage_dependencies(stage_name)
    print(f"Dependencies for stage '{stage_name}': {dependencies}")

    # Look up an environment configuration
    env_config = config_manager.get_environment_config("staging")
    if env_config:
        print(f"Staging environment URL: {env_config.url}")
        print(f"Staging namespace: {env_config.kubernetes_namespace}")


if __name__ == "__main__":
    manage_pipeline_config()
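
`validate_config` catches dependencies on non-existent stages but not circular dependencies, and `should_run_stage` judges each stage in isolation: nothing checks whether a stage's dependencies were themselves skipped on that branch. The sketch below computes an execution plan with the standard library's `graphlib.TopologicalSorter` (Python 3.9+). The function name `plan_stages` and the rule "skip a stage if any of its dependencies is skipped" are assumptions for illustration, not behavior of `PipelineConfigManager`; stages are plain dicts shaped like the entries under `stages:` in `SAMPLE_CONFIG`.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Tuple


def plan_stages(stages: List[Dict], branch: str) -> Tuple[List[str], List[str]]:
    """Return (stages to run in dependency order, skipped stages) for a branch (hypothetical helper).

    Raises graphlib.CycleError if the stage dependencies contain a cycle.
    """
    by_name = {s["name"]: s for s in stages}
    graph = {name: s.get("dependencies") or [] for name, s in by_name.items()}

    to_run: List[str] = []
    skipped: List[str] = []
    # static_order() yields every stage only after all of its dependencies
    for name in TopologicalSorter(graph).static_order():
        stage = by_name.get(name)
        if stage is None:
            skipped.append(name)  # referenced as a dependency but never defined
            continue
        only = stage.get("only_branches")
        excluded = stage.get("except_branches") or []
        branch_ok = stage.get("enabled", True) and (not only or branch in only) and branch not in excluded
        # Assumed rule: a stage runs only if every dependency is also going to run
        deps_ok = all(dep in to_run for dep in graph[name])
        if branch_ok and deps_ok:
            to_run.append(name)
        else:
            skipped.append(name)
    return to_run, skipped
```

With this rule, a stage that has no branch filter of its own but depends on `performance_test` is skipped on a feature branch, because `performance_test` only runs on `main` and `develop`.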

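The commands in `SAMPLE_CONFIG` contain `${APP_NAME}`, `${BUILD_ID}`, and `${DOCKER_REGISTRY}` placeholders, but `PipelineConfigManager` loads them as plain strings, so something still has to substitute them before the commands run. The standard library's `string.Template` uses exactly this `${name}` syntax. The sketch below is a hypothetical helper, `expand_commands`; it assumes that `global_variables` are merged with per-build values such as `BUILD_ID`, which would be supplied by the CI system, and that the per-build values win on conflicts.

```python
from string import Template
from typing import Dict, List


def expand_commands(commands: List[str], global_vars: Dict[str, str], build_vars: Dict[str, str]) -> List[str]:
    """Substitute ${NAME} placeholders in stage commands (hypothetical helper).

    build_vars (for example BUILD_ID from the CI system) override global_variables.
    safe_substitute() leaves unknown placeholders untouched, so they remain visible in logs.
    """
    variables = {**global_vars, **build_vars}
    return [Template(cmd).safe_substitute(variables) for cmd in commands]
```

`safe_substitute` was chosen over `substitute` so that a missing secret such as `${TEST_DATABASE_URL}` shows up verbatim in the command log instead of aborting the whole stage with a `KeyError`; a stricter pipeline could call `substitute` and fail fast.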
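The pipeline covers the complete CI/CD flow (build → test → security scan → deploy), supports several test types (unit, integration, performance, and smoke tests), integrates widely used security scanners (Bandit, Safety, and Trivy), and supports advanced deployment strategies such as blue-green deployment.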
