参考资料:

整个源码主要围绕着Transformers库中的model_t5展开,也默认大家都已经熟悉了transformer的基本原理,知道了像GPT和Bert这些为代表的decoder和encoder架构,这里我们就介绍另一个流派的代表:基于encoder-decoder架构的T5模型

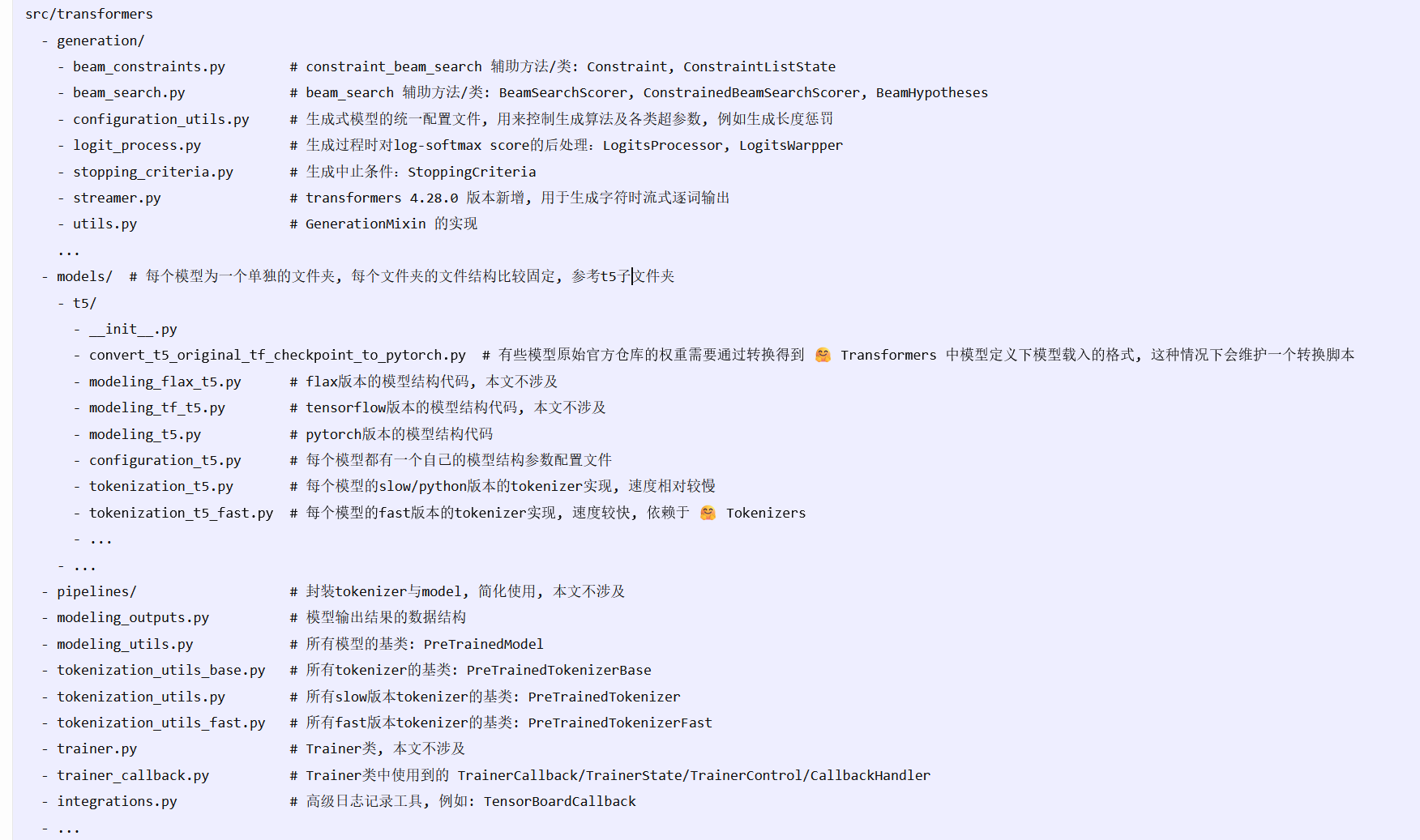

1.代码文件框架:

这里主要围绕modeling_t5展开(基本原理强力推荐上面的博客,下面主要是对官方代码的解读):

class T5LayerNorm(nn.Module):

def __init__(self, hidden_size, eps=1e-6):

"""

Construct a layernorm module in the T5 style. No bias and no subtraction of mean.

"""

super().__init__()

self.weight = nn.Parameter(torch.ones(hidden_size))

self.variance_epsilon = eps

def forward(self, hidden_states):

variance = hidden_states.to(torch.float32).pow(2).mean(-1, keepdim=True)

hidden_states = hidden_states * torch.rsqrt(variance + self.variance_epsilon)

# convert into half-precision if necessary

if self.weight.dtype in [torch.float16, torch.bfloat16]:

hidden_states = hidden_states.to(self.weight.dtype)

return self.weight * hidden_states层归一化:hidden_states(B,L,C),return (B,L,C)

class T5DenseActDense(nn.Module):

def __init__(self, config: T5Config):

super().__init__()

self.wi = nn.Linear(config.d_model, config.d_ff, bias=False)

self.wo = nn.Linear(config.d_ff, config.d_model, bias=False)

self.dropout = nn.Dropout(config.dropout_rate)

self.act = ACT2FN[config.dense_act_fn]

def forward(self, hidden_states):

hidden_states = self.wi(hidden_states)

hidden_states = self.act(hidden_states)

hidden_states = self.dropout(hidden_states)

if (

isinstance(self.wo.weight, torch.Tensor)

and hidden_states.dtype != self.wo.weight.dtype

and self.wo.weight.dtype != torch.int8

):

hidden_states = hidden_states.to(self.wo.weight.dtype)

hidden_states = self.wo(hidden_states)

return hidden_states主要作为FFN中的一部分,输入和输出维度没有变化,用于深层次加工和信息提炼

class T5DenseGatedActDense(nn.Module):

def __init__(self, config: T5Config):

super().__init__()

self.wi_0 = nn.Linear(config.d_model, config.d_ff, bias=False)

self.wi_1 = nn.Linear(config.d_model, config.d_ff, bias=False)

self.wo = nn.Linear(config.d_ff, config.d_model, bias=False)

self.dropout = nn.Dropout(config.dropout_rate)

self.act = ACT2FN[config.dense_act_fn]

def forward(self, hidden_states):

hidden_gelu = self.act(self.wi_0(hidden_states))

hidden_linear = self.wi_1(hidden_states)

hidden_states = hidden_gelu * hidden_linear

hidden_states = self.dropout(hidden_states)

# To make 8bit quantization work for google/flan-t5-xxl, self.wo is kept in float32.

# See https://github.com/huggingface/transformers/issues/20287

# we also make sure the weights are not in `int8` in case users will force `_keep_in_fp32_modules` to be `None``

if (

isinstance(self.wo.weight, torch.Tensor)

and hidden_states.dtype != self.wo.weight.dtype

and self.wo.weight.dtype != torch.int8

):

hidden_states = hidden_states.to(self.wo.weight.dtype)

hidden_states = self.wo(hidden_states)

return hidden_states这个和前面一个多了门控机制,也就是wi_0的输出当作模型重要性权重来控制wi_1,输入输出维度依旧不变

class T5LayerFF(nn.Module):

def __init__(self, config: T5Config):

super().__init__()

if config.is_gated_act:

self.DenseReluDense = T5DenseGatedActDense(config)

else:

self.DenseReluDense = T5DenseActDense(config)

self.layer_norm = T5LayerNorm(config.d_model, eps=config.layer_norm_epsilon)

self.dropout = nn.Dropout(config.dropout_rate)

def forward(self, hidden_states):

forwarded_states = self.layer_norm(hidden_states)

forwarded_states = self.DenseReluDense(forwarded_states)

hidden_states = hidden_states + self.dropout(forwarded_states)

return hidden_statesFFN,输入输出维度不变,用于特征融合。

下面介绍最重要的注意力机制:

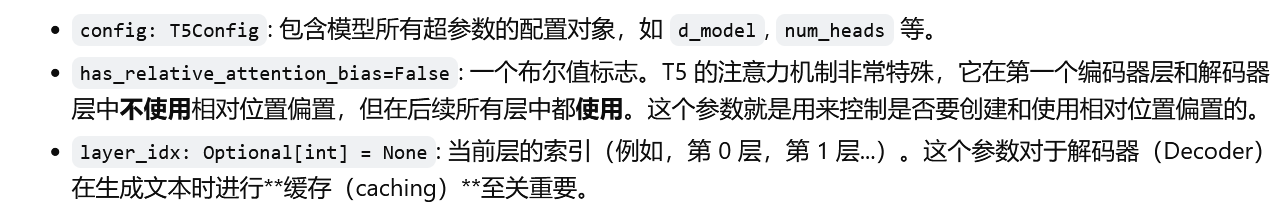

1.初始化:

def __init__(

self,

config: T5Config,

has_relative_attention_bias=False,

layer_idx: Optional[int] = None,

):

super().__init__()

self.is_decoder = config.is_decoder

self.has_relative_attention_bias = has_relative_attention_bias

self.relative_attention_num_buckets = config.relative_attention_num_buckets

self.relative_attention_max_distance = config.relative_attention_max_distance

self.d_model = config.d_model

self.key_value_proj_dim = config.d_kv

self.n_heads = config.num_heads

self.dropout = config.dropout_rate

self.inner_dim = self.n_heads * self.key_value_proj_dim

self.layer_idx = layer_idx

if layer_idx is None and self.is_decoder:

logger.warning_once(

f"Instantiating a decoder {self.__class__.__name__} without passing `layer_idx` is not recommended and "

"will to errors during the forward call, if caching is used. Please make sure to provide a `layer_idx` "

"when creating this class."

)

# Mesh TensorFlow initialization to avoid scaling before softmax

self.q = nn.Linear(self.d_model, self.inner_dim, bias=False)

self.k = nn.Linear(self.d_model, self.inner_dim, bias=False)

self.v = nn.Linear(self.d_model, self.inner_dim, bias=False)

self.o = nn.Linear(self.inner_dim, self.d_model, bias=False)

if self.has_relative_attention_bias:

self.relative_attention_bias = nn.Embedding(self.relative_attention_num_buckets, self.n_heads)

self.pruned_heads = set()

self.gradient_checkpointing = False

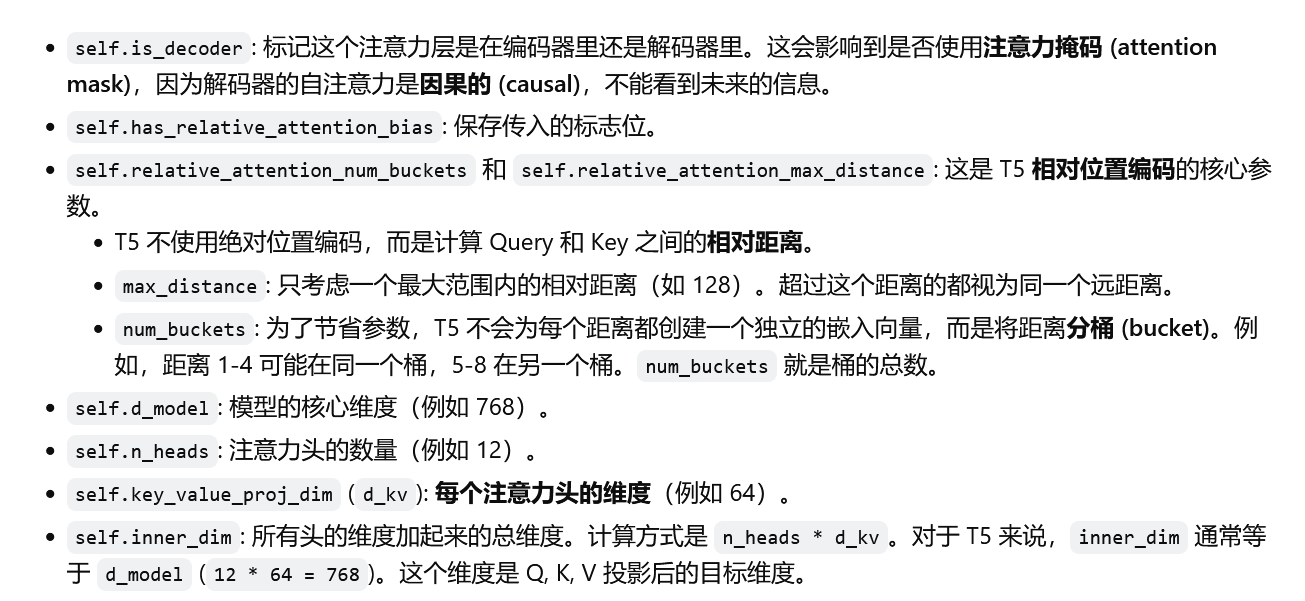

下面是各个参数的解释:

2.relative_position_bucket 函数

def _relative_position_bucket(relative_position, bidirectional=True, num_buckets=32, max_distance=128):

relative_buckets = 0

if bidirectional:

num_buckets //= 2

relative_buckets += (relative_position > 0).to(torch.long) * num_buckets

relative_position = torch.abs(relative_position)

else:

relative_position = -torch.min(relative_position, torch.zeros_like(relative_position))

# now relative_position is in the range [0, inf)

# half of the buckets are for exact increments in positions

max_exact

最低0.47元/天 解锁文章

最低0.47元/天 解锁文章

1491

1491

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?