Abstract 摘要

https://arxiv.org/html/2411.16832?_immersive_translate_auto_translate=1

Recent advancements in diffusion models have made generative image editing more accessible than ever. While these developments allow users to generate creative edits with ease, they also raise significant ethical concerns, particularly regarding malicious edits to human portraits that threaten individuals’ privacy and identity security. Existing general-purpose image protection methods primarily focus on generating adversarial perturbations to nullify edit effects. However, these approaches often exhibit instability to protect against diverse editing requests. In this work, we introduce a novel perspective to personal human portrait protection against malicious editing. Unlike traditional methods aiming to prevent edits from taking effect, our method, FaceLock, optimizes adversarial perturbations to ensure that original biometric information—such as facial features—is either destroyed or substantially altered post-editing, rendering the subject in the edited output biometrically unrecognizable. Our approach innovatively integrates facial recognition and visual perception factors into the perturbation optimization process, ensuring robust protection against a variety of editing attempts. Besides, we shed light on several critical issues with commonly used evaluation metrics in image editing and reveal cheating methods by which they can be easily manipulated, leading to deceptive assessments of protection. Through extensive experiments, we demonstrate that FaceLock significantly outperforms all baselines in defense performance against a wide range of malicious edits. Moreover, our method also exhibits strong robustness against purification techniques. Comprehensive ablation studies confirm the stability and broad applicability of our method across diverse diffusion-based editing algorithms. Our work not only advances the state-of-the-art in biometric defense but also sets the foundation for more secure and privacy-preserving practices in image editing. The code is publicly available at: https://github.com/taco-group/FaceLock.

扩散模型的最新进展使生成图像编辑比以往任何时候都更容易接触。虽然这些发展让用户能够轻松生成创意编辑,但也引发了重大的伦理问题,尤其是关于对人体肖像进行恶意编辑,威胁个人隐私和身份安全的问题。现有的通用图像保护方法主要集中于产生对抗扰动以抵消编辑效果。然而,这些方法常常存在不稳定性,以防范多样化的编辑请求。本文介绍了个人肖像防护恶意编辑的新视角。与旨在防止编辑生效的传统方法不同,我们的方法 FaceLock 优化了对抗扰动,确保原始生物特征信息(如面部特征)在编辑后被销毁或大幅改变,使编辑输出中的对象在生物识别上无法辨认。我们的方法创新地将人脸识别和视觉感知因素整合进扰动优化过程,确保对各种编辑尝试有强有力的防护。此外,我们还揭示了图像编辑中常用评估指标的若干关键问题,并揭示了这些指标容易控、导致保护评估产生欺骗性的作弊手法。通过大量实验,我们证明 FaceLock 在防御各种恶意编辑方面显著优于所有基线。此外,我们的方法对纯化技术表现出强烈的鲁棒性。 全面的消融研究证实了我们方法在多种基于扩散的编辑算法中的稳定性和广泛适用性。我们的工作不仅推动了生物识别防御的先进发展,也为图像编辑中更安全、更保护隐私的实践奠定了基础。该代码公开于:https://github.com/taco-group/FaceLock。

![[Uncaptioned image]](https://img-home.csdnimg.cn/images/20230724024159.png?origin_url=https%3A%2F%2Farxiv.org%2Fhtml%2F2411.16832v2%2Fx1.png&pos_id=wpMSwpz3)

Figure 1:An illustration of adversarial perturbation generation for safeguarding personal images from malicious editing. Perturbations generated by prior work [1, 2] aim to cancel off editing effects, resulting in instability due to the diversity of editing instructions. In contrast, FaceLock does not prevent edits from being applied but instead erases critical biometric information (e.g., human facial features) after editing, making it agnostic to specific prompts and achieving superior performance.

图 1: 保护个人图像免受恶意编辑的对抗性扰动生成示例。先前工作 产生的扰动 [1, 2] 旨在抵消编辑效果,导致编辑指令的多样性导致不稳定性。相比之下,FaceLock 并不阻止编辑的应用,而是在编辑后删除关键的生物特征信息( 如人类面部特征),使其对特定提示无关,从而实现更优的表现。

1Introduction 1 介绍

Image editing has advanced at an unprecedented rate due to the rise of diffusion-based techniques, making it possible to produce edits that are indistinguishable from reality [3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16]. This rapid development has led to tools capable of seamlessly modifying visual content, with edits so convincing that they are often impossible to differentiate from the original image. While this progress opens up creative possibilities, it also brings significant ethical and societal challenges.

由于基于扩散技术的兴起,图像编辑以前所未有的速度进步,使得编辑能够与现实无异[3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16]。 这种快速的发展催生了能够无缝修改视觉内容的工具,编辑极其逼真,常常无法与原始图像区分开来。虽然这一进展开启了创造的可能性,但也带来了重大的伦理和社会挑战。

The power of these editing techniques has led to severe ethical implications [17, 18, 19, 20, 21]. Recent incidents, such as the widely discussed manipulation of Taylor Swift’s images [22] and the proliferation of pornographic content affecting Korean schools [23], underscore the urgent need to address the risks associated with malicious image editing. These incidents have highlighted growing concerns about how personal images, particularly those depicting individuals’ faces, can be misused once they are posted online [24, 14, 15]. Protecting such images from unauthorized and malicious edits has thus become an important topic of research [25, 26, 27].

这些编辑技术的强大力量带来了严重的伦理问题 [17, 18, 19, 20, 21]。 近期事件,如广泛讨论的泰勒·斯威夫特图片 被篡改 [22] 以及影响韩国学校 的色情内容泛滥 [23],凸显了恶意图片编辑风险的紧迫性。这些事件凸显了人们对个人形象,尤其是描绘个人面部的图片,一旦发布到网上 可能被滥用的日益担忧 [24, 14, 15]。 因此,保护此类图像免受未经授权和恶意编辑已成为重要的研究 课题 [25, 26, 27]。

To address this challenge, several recent attempts [1, 28, 2, 29, 30, 31, 32] have focused on using adversarial perturbations, which are imperceptible to human eyes but are intended to negate the effects of editing when such images are used as inputs to diffusion-based editing algorithms. These perturbations aim to protect personal images by preventing the success of the intended edits (see Fig. 1 for an illustration). However, current methods suffer from instability [1, 2, 31, 32] and simple purification methods. Specifically, while they are effective for certain types of editing instructions, they fail against others, largely due to the inherent diversity and versatility of editing prompts. The underlying issue is that as long as existing methods continue to focus on ‘canceling off editing effects’, the inconsistency of results is inevitable. The diversity in editing prompts and the complexity of generative diffusion models make it difficult for such approaches to generalize effectively.

为应对这一挑战,近期的几次尝试[1, 28, 2, 29, 30, 31, 32] 都集中在使用对抗性扰动,这些扰动对人眼来说是不可察觉的,目的是抵消当此类图像作为扩散编辑算法输入时的编辑效果。这些干扰旨在通过防止预期编辑的成功来保护个人图像(见图)。1 作为插图)。然而,现有方法存在不稳定性 [1, 2, 31, 32] 和简单纯化方法的问题。具体来说,虽然它们对某些类型的编辑指令有效,但在其他方面则失效,主要原因是编辑提示本身的多样性和多样性。根本问题在于,只要现有方法仍专注于“取消编辑效果”,结果的不一致是不可避免的。编辑提示的多样性和生成扩散模型的复杂性使得此类方法难以有效推广。

The rationale behind current defense methods is to ensure that the edited image does not meet the requirements of a successful image editing task. To understand this more deeply, we first revisit what constitutes a successful image editing task: it should accurately reflect the editing instruction while preserving the original, irrelevant visual features, such as those related to the subject’s identity, including facial features. The latter requirement, which has been largely overlooked, provides an opportunity for a new defense strategy. Instead of attempting to cancel out edits, here, we ask:

当前防御方法的理由是确保编辑后的图像不符合成功图像编辑任务的要求。为了更深入地理解这一点,我们首先回顾什么才算是成功的图像编辑任务:它应准确反映编辑说明,同时保留原始且无关的视觉特征,如与被摄者身份相关的特征,包括面部特征。后者这一要求大多被忽视,为制定新的防御战略提供了机会。这里我们不试图取消编辑,而是在这里提出:

Through a series of algorithmic designs, we demonstrate that creating adversarial perturbations that disrupt facial recognition while also introducing distinct visual disparities in facial features is far from trivial. To address this, we propose FaceLock, which strategically integrates a state-of-the-art facial recognition model into the diffusion loop as an adversary while also penalizing feature embeddings to achieve visual dissimilarity. By doing so, our method not only disrupts facial recognition but also ensures significant visual differences from the original, providing robust protection against malicious editing, see Fig. 1 for a comparison between FaceLock and prior arts. To this end, we summarize our contributions as follows:

通过一系列算法设计,我们证明了制造干扰性扰动,既破坏面部识别又带来明显的面部特征视觉差异,绝非易事。为此,我们提出了 FaceLock,它战略性地将最先进的面部识别模型整合进扩散环路,作为对手,同时惩罚特征嵌入以实现视觉差异。通过这样做,我们的方法不仅干扰了面部识别,还确保了与原始图像显著的视觉差异,提供强大的防范恶意编辑保护,见图。1 用于比较 FaceLock 与现有技术。为此,我们总结了我们的贡献如下:

∙ We present a novel perspective for protecting personal images from malicious editing, focusing on making biometric features unrecognizable after edits.

∙ 我们提出了一种新颖的视角,保护个人图像免受恶意编辑,重点是让生物特征在编辑后无法辨认。

∙ We develop a new algorithm, FaceLock, that incorporates facial recognition models and feature embedding penalties to effectively protect against diffusion-based image editing.

∙ 我们开发了一种新算法 FaceLock,结合了面部识别模型并设置嵌入惩罚,有效防止基于扩散的图像编辑。

∙ We conduct a critical analysis of the quantitative evaluation metrics commonly used in image editing tasks, exposing their vulnerabilities and highlighting the potential for manipulation to achieve deceptive results.

∙ 我们对图像编辑任务中常用的定量评估指标进行了批判性分析,揭示了其脆弱性,并强调了控手段可能带来欺骗性结果的可能性。

∙ Through extensive experiments, we demonstrate that FaceLock effectively alters human facial features against various editing prompts, achieving superior defense performance compared to baselines. We also show that FaceLock generalizes well to multiple diffusion-based algorithms and exhibits inherent robustness against purification methods.

∙ 通过大量实验,我们证明 FaceLock 能有效改变人类面部特征,针对各种编辑提示,实现了优于基线的防御性能。我们还证明了 FaceLock 能够很好地推广到多种基于扩散的算法,并且对纯化方法表现出固有的鲁棒性。

2Related Work 2 相关工作

Generative editing models. Recent advances in latent diffusion models [33] have demonstrated superior image editing capabilities through instructions and prompt editing [3, 4, 5, 6]. Most recent methods [7, 8] combine diffusion models with large language models for understanding text prompts. InstructPix2Pix [9] leverages a fine-tuned version of GPT-3 and images generated from SD and achieves on-the-fly image editing without further per-sample finetuning. On the other hand, many such models also allow personalized image editing [10, 12]. DreamBooth [13] learns a unique identifier and class type of an object by finetuning a pretrained text-to-image model with a few images. SwapAnything [15] and Photoswap [14] allow for personal content editing by swapping faces and objects between two images. In the generative era, these tools offer unprecedented creative freedom but also raise ethical questions on privacy and malicious image editing, which motivate us to conduct this work.

生成式编辑模型。 潜扩散模型 的最新进展[33] 展示了通过指令和提示编辑 的优越图像编辑能力 [3, 4, 5, 6]。 最新的方法 [7, 8] 将扩散模型与大型语言模型结合起来,用于理解文本提示。InstructPix2Pix [9] 利用了 GPT-3 的精细版本和从 SD 生成的图像,实现了实时图像编辑,无需进一步的每个样本微调。另一方面,许多此类模型也支持个性化图像编辑 [10, 12]。DreamBooth [13] 通过微调预训练的文本转图像模型,学习对象的唯一标识符和类类型。SwapAnything [15] 和 Photoswap [14] 允许通过在两张图片之间交换面部和对象来编辑个人内容。在生成时代,这些工具提供了前所未有的创作自由,但也引发了关于隐私和恶意图像编辑的伦理问题,这激励着我们开展这项工作。

Defense against malicious editing. Adversarial samples are clean samples manipulated intentionally to fool a machine learning model, often done by perturbing the image with an imperceptible small noise. Under a white-box setting, gradient-based methods, such as fast gradient sign method (FGSM), projected gradient decent (PGD) [34] and Carlini & Wagner (CW) attack [35], are among the most effective techniques in generating adversarial examples in classification models. Recent works like PhotoGuard, Editshield, AdvDM [1, 2, 31] have extended gradient-based methods to diffusion models and aim to protect images from malicious editing. PhotoGuard demonstrated an effective encoder attack mechanism by perturbing the source image towards an unrelated target image, e.g. an image of gray background. In particular, let ℰ be the encoder, 𝐳target be the latent representation of the target image. Under a attack budget ϵ, PhotoGuard aims to optimize:

防止恶意编辑。 对抗样本是故意作的干净样本,目的是欺骗机器学习模型,通常通过用微小的微小噪声扰动图像实现。在白箱环境中,基于梯度的方法,如快速梯度符号法(FGSM)、投影梯度下降法(PGD) [34] 和 Carlini & Wagner(CW)攻击 [35],是生成分类模型中对抗实例的最有效技术之一。近期工作如 PhotoGuard、Editshield、AdvDM [1, 2, 31] 将基于渐变的方法扩展到扩散模型,旨在保护图像免受恶意编辑。PhotoGuard 展示了一种有效的编码器攻击机制,通过扰动源图像朝向无关的目标图像(例如灰色背景图像)来实现。特别地,设 ℰ 为编码器, 𝐳target 为目标图像的潜在表示。在攻击预算 ϵ 下,PhotoGuard 旨在优化:

| δEncoder=argmin‖δ‖∞≤ϵ‖ℰ(𝐱+δ)−𝐳target‖. | (1) |

Yet, the protection can be less effective if the image is slightly transformed. PhotoGuard takes a step further by considering expectations over transformation:

然而,如果图像略有变形,保护效果可能会减弱。PhotoGuard 更进一步,考虑了期望而非变革:

| max𝐱𝐩𝔼f∼ℱ[Dist(ℰ(f(𝐱𝐩)),ℰ(𝐱))]−β⋅‖𝐱𝐩−𝐱‖22, | (2) |

where 𝐱 is the source image, 𝐱𝐩 is the perturbed image, and ℱ is a distribution over a set of transformations.

其中 𝐱 是源图像, 𝐱𝐩 是扰动图像, 是 ℱ 一组变换上的分布。

However, these approaches are typically less robust, as the gradients are highly dependent on model architecture and parameters. Distraction Is All you Need [29] circumvent this by attacking the cross attention mechanism between image and editing instruction, so diffusion models misinterpret the target editing regions. Glaze and Nightshade [28, 30] instead perturb the image towards a completely different image with another style or concept. These approaches make the image less susceptible to the specificities of model architecture and is generally more robust across different models.

然而,这些方法通常较不稳健,因为梯度高度依赖于模型架构和参数。只需分散注意力[29],通过攻击图像与编辑指令之间的交叉注意力机制来规避此问题,使扩散模型误解目标编辑区域。《釉与夜影 》[28, 30] 则将画面调向另一种风格或概念的完全不同形象。这些方法使图像更不易受模型架构的特殊性影响,且在不同模型间通常更稳健。

The adversarial techniques mentioned above primarily protect portrait images by interfering with the editing process. However, nullifying the editing process does not always safeguard facial features or biometric information. We propose a novel way of protecting images by incorporating facial recognition model into the perturbation process. Although the model is capable of editing the image according to the prompt, we ensure that the facial features are altered or destroyed during the process.

上述对抗性技术主要通过干扰编辑过程来保护肖像图像。然而,取消编辑过程并不总能保护面部特征或生物识别信息。我们提出了一种通过将面部识别模型纳入扰动过程来保护图像的新方法。虽然模特能够根据提示编辑图像,但我们确保在过程中面部特征被修改或破坏。

Facial recognition. Recent facial recognition works [36, 37, 38, 39, 40, 41, 42] have proposed several margin-based softmax loss functions to enhance the discriminative power and feature extraction ability of facial recognition models. CVLFace [36] utilizes these models to extract features from two images and computes the cosine similarity between these features to verify a person’s identity. In addition, recent works [43, 44, 45, 46, 47] also leverage synthetic images during training for enhanced privacy protection, highlighting the need for privacy protection in image editing as well. Our approach builds on top of CVLFace and protects biometric information by minimizing the cosine similarity between features. Our work is the first application of facial recognition on perturbation generation, enabling a new axis of identity protection.

面部识别。 近期人脸识别工作 [36, 37, 38, 39, 40, 41, 42] 提出了多种基于边际的软最大损失函数,以增强面部识别模型的判别能力和特征提取能力。CVLFace [36] 利用这些模型从两张图像中提取特征,并计算这些特征之间的余弦相似度以验证个人身份。此外,近期研究 [43, 44, 45, 46, 47] 也在培训中利用合成图像以增强隐私保护,凸显了图像编辑中隐私保护的必要性。我们的方法建立在 CVLFace 之上,通过最小化特征间的余弦相似性来保护生物识别信息。我们的工作是首次将面部识别应用于扰动生成,开辟了身份保护的新轴。

3FaceLock: Adversarial Perturbations for Biometrics Erasure

3FaceLock:生物识别擦除的对抗扰动

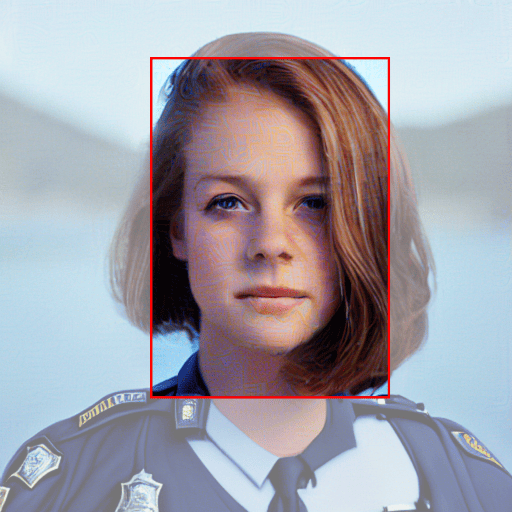

Figure 2:Illustration of the two requirements of image editing task: prompt fidelity and image integrity. (a) Source image before editing; (b) A successful editing example holding both metrics; (c) Failure case due to the lack of prompt fidelity leading to under-editing and (d) the lack of the image integrity leading to over-editing.

图 2: 图像编辑任务的两个要求:提示忠实度和图像完整性的示意。(a) 编辑前的原始图片;(b)一个成功的编辑示例,同时满足这两个指标;(c)因提示性不够准确导致编辑不足,以及(d)图像完整性不足导致过度编辑而导致失败案例。

What defines a successful image editing task? Before introducing our proposed method for safeguarding human portrait images from malicious edits, we revisit the criteria for a successful image editing outcome. Specifically, we propose that a successful text-guided image editing hinges on two critical requirements: ❶ prompt fidelity, and ❷ image integrity. Prompt fidelity requires that the edit accurately reflects the instructions provided in the prompt. For instance, as shown in Fig. 2, a successful edit replaces the person’s clothing with a police uniform as instructed by the prompt. Meanwhile, image integrity requires that other elements in the image remain intact after editing. Although this requirement is less explicit than prompt fidelity, it defines the essence of image editing and differentiates it from general text-to-image generation tasks. As illustrated in Fig. 2, aside from the change in attire, the edited image should retain as much of the subject’s original appearance as possible, including facial features, poses, and other details. While prompt fidelity has been emphasized and extensively studied [33, 13, 9, 7], image integrity remains long-overlooked and underexplored in literature. Next, we will demonstrate how this holistic view of image editing can provide new insights into protecting human portraits from malicious edits.

什么定义了成功的图像编辑任务? 在介绍我们提出的保护人像图片免受恶意编辑的方法之前,我们先回顾了成功编辑图像的标准。具体来说,我们提出,成功的文本引导图像编辑依赖于两个关键要求:❶ 提示性忠实度和❷ 图像完整性 。提示忠实度要求编辑准确反映提示中提供的指示。例如,如图所示。2,成功编辑时,按照提示将人物的服装替换为警察制服。与此同时, 图像完整性要求图像中的其他元素在编辑后保持完整。虽然这一要求不如提示性精确度明确,但它定义了图像编辑的本质,并将其区别于一般的文本生成生成任务。如图所示。2,除了服装的变化外,编辑后的图像应尽可能保留被拍摄者的原始外观,包括面部特征、姿势及其他细节。尽管即时保真度已被强调并被广泛研究 [33, 13, 9, 7],但图像完整性在文献中长期被忽视和探讨不足。接下来,我们将展示这种整体的图像编辑视角如何为保护人体肖像免受恶意编辑提供新的见解。

|

|

|

|

|

|

|

| FR=1.0 | FR=0.972 | FR=0.901 | FR=0.273 | FR=0.658 | FR=0.093 |

| ✗ Visual Change ✗ 视觉变化 | ✗ Visual Change ✗ 视觉变化 | ✗ Visual Change ✗ 视觉变化 | ✗ Visual Change ✗ 视觉变化 | ✓ Visual Change ✓ 视觉变化 | |

| (a) Source Image (a) 图片来源 | (b) No Protection (b)无保护 | (c) Design I: CVL (c) 设计 I:CVL | (d) Design II: CVL-D (d) 设计 II:CVL-D | (e) Design III: CVL-D + Pixel (e) 设计 III:CVL-D + 像素 | (f) FaceLock (f) 面锁 |

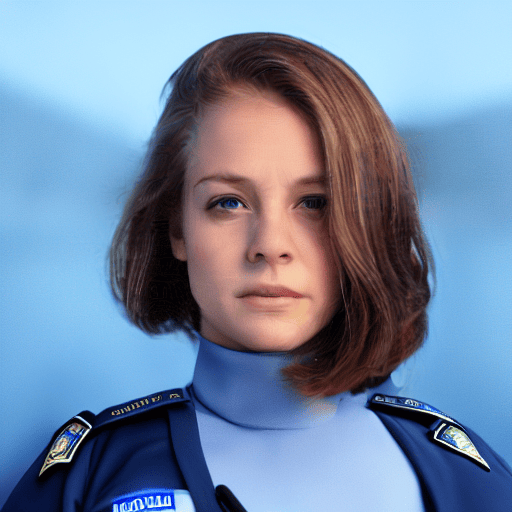

Figure 3:Source and edited images generated from different protection methods based on the instruction “Let the person wear a police suit”. The FR score below each image represents the facial representation similarity between the edited and source images and scores marked in red indicate insignificant changes biometric recognition results by CVLFace compared to source image. ‘CVL’ refers to perturbations generated targeting the CVLFace model alone. ‘CVL-D’ represents protection targeting both the CVLFace model and the diffusion model, while ‘CVL-DP’ incorporates an auxiliary loss to enforce pixel-level disparity between the edited and source images. FaceLock targets the CVLFace and diffusion model, aiming to enhance the disparity between the feature embeddings of the decoded and original images.

图 3: 根据“让当事人穿警服”指令,来源并编辑自不同防护方法生成的图片。每张图像下方的 FR 分数代表编辑后图像与源图像之间的面部表现相似度,红色标记的分数表示生物识别结果与源图像相比变化不显著。“CVL”指的是仅针对 CVLFace 模型产生的扰动。“CVL-D”代表针对 CVLFace 模型和扩散模型的保护,而“CVL-DP”则包含辅助损耗,以强制编辑后图像与源图像之间的像素级差异。FaceLock 针对 CVLFace 和扩散模型,旨在增强解码图像与原始图像特征嵌入之间的差异。

A new direction for defending against malicious editing. As discussed above, to safeguard personal images from malicious editing, the defender must ensure that at least one of the two requirements is not met. Previous works have primarily focused on generating adversarial perturbations to prevent edits from taking effect, thereby reducing prompt fidelity [1, 2, 28, 31, 30]. However, these approaches often suffer from instability and are effective only for a limited range of editing instructions, resulting in poor generalization. The core issue is the versatility of editing instructions—making it unlikely that a single perturbation can defend against all potential prompts. Therefore, we explore a new direction: optimizing perturbations to destroy biometric information after editing, rendering the edited image biometrically unrecognizable and thereby causing the edit to fail.

防御恶意编辑的新方向。 如上所述,为了防止恶意编辑,辩护方必须确保至少不满足其中一项要求。以往的研究主要集中于产生对抗扰动以阻止编辑生效,从而降低即时的忠实度 [1, 2, 28, 31, 30]。 然而,这些方法通常存在不稳定性,且仅对有限范围的编辑指令有效,导致推广效果较差。核心问题在于编辑指令的多样性——这使得单一扰动难以抵御所有潜在提示。因此,我们探索一个新方向:优化扰动,在编辑后破坏生物识别信息,使编辑后的图像生物识别无法识别,从而导致编辑失败。

Adversarial perturbation for facial disruption is nontrivial. The goal of our defense method is to disrupt human facial features during the sampling process in diffusion-based editing models. Design I (CVL): Perturbation against facial recognition models. A straightforward approach is to apply an adversarial perturbation against a state-of-the-art (SOTA) facial recognition model, such as the CVLFace model [36], and use the perturbed image as input to the image editing model. However, as shown in Fig. 3(c), the perturbation that successfully fools the CVLFace model does not persist through the diffusion model’s sampling process, resulting in an edited image with minimal disruption to facial features, as indicated by both the high facial similarity (FR) score and the visually similar appearance. The underlying issue with this approach is that the perturbations are generated independently of the diffusion process. Prior work [48] highlights that diffusion models possess an inherent ability to “purify” adversarial perturbations through their sampling process.

面部扰动的对抗扰动并非简单。 我们辩护方法的目标是在基于扩散的编辑模型中,在采样过程中干扰人类面部特征。 设计 I(CVL):对面部识别模型的扰动。 一种直接的方法是对最先进的(SOTA)面部识别模型(如 CVLFace 模型 [36])施加对抗扰动,并将扰动图像作为图像编辑模型的输入。然而,如图所示。3(c) 中,成功欺骗 CVLFace 模型的扰动不会在扩散模型采样过程中持续存在,导致编辑图像对面部特征影响最小,这从高面部相似度(FR)得分和视觉相似性均可看出。这种方法的根本问题在于,扰动是独立于扩散过程产生的。先前的研究 [48] 强调,扩散模型具有通过采样过程 “净化” 对抗扰动的固有能力。

Design II (CVL-D): Perturbation against diffusion with CVLFace model in the loop. To address this, we incorporate the CVLFace model into the diffusion process and design a method to directly interfere with the sampling stage. Given the high computational costs of disrupting the entire diffusion process, we instead bypass this step once the latent representation of the input image is obtained. The perturbation is then optimized by a facial recognition loss that maximizes the biometric disparity between the decoded image and the source input:

设计 II(CVL-D):在环路中与 CVLFace 模型进行扩散的微扰。 为此,我们将 CVLFace 模型纳入扩散过程,并设计了一种直接干涉采样阶段的方法。鉴于破坏整个扩散过程的高计算成本,一旦获得输入图像的潜在表示,我们就绕过这一步。随后,通过面部识别丢失来优化扰动,最大化解码图像与源输入之间的生物识别差异:

| 𝜹=argmax‖𝜹‖∞≤ϵfFR(𝒟(ℰ(𝐱+𝜹)),𝐱), | (3) |

where 𝒟 and ℰ denote the decoder and encoder used by the diffusion model, respectively, and fFR(⋅,⋅) computes the facial similarity score. As shown in Fig. 3(d), while this method significantly reduces the facial recognition similarity score, the edited image still resembles the original subject, suggesting room for further improvement in visual effects.

其中 𝒟 和 ℰ 分别表示扩散模型中使用的解码器和编码器,计算 fFR(⋅,⋅) 面部相似度评分。如图所示。3(d)),虽然该方法显著降低了面部识别相似度评分,但编辑后的图像仍然与原始对象相似,表明视觉效果还有进一步改进的空间。

Design III (CVL-DP): Perturbation against diffusion facial similarity with pixel-level penalty. To enhance the visual disparity between the edited image and the source image, we introduce a pixel-level loss focused on facial regions defined by a mask:

设计三(CVL-DP):对扩散面相似性的扰动,伴有像素级惩罚。 为了增强编辑图像与源图像之间的视觉差异,我们引入了像素级损失,聚焦于由遮罩定义的面部区域:

| 𝜹=argmax‖𝜹‖∞≤ϵfFR(𝒟(ℰ(𝐱+𝜹)),𝐱)+λ‖𝜹⊙𝐦‖2, | (4) |

where 𝐦 defines the facial region extracted by the CVLFace model. However, as shown in Fig. 3(e), the pixel-level loss primarily results in color shifts rather than significant distortion of the subject’s facial features. This limitation motivated the development of FaceLock, which aims to generate perturbations that enhance both facial dissimilarity scores and visual facial discrepancies.

其中 𝐦 定义了由 CVLFace 模型提取的面部区域。然而,如图所示。3(e)),像素级的损失主要导致的是色彩变化,而非显著扭曲受试者面部特征。这一限制促使了 FaceLock 的发展,旨在产生增强面部差异评分和视觉差异的扰动。

FaceLock: Perturbation optimization on facial disruption and feature embedding disparity. The lesson from CVL-DP indicates that pixel-level changes do not necessarily lead to distinct visual facial features. Thus, we transition to a more effective feature-level approach, using pretrained convolutional neural networks to extract and maximize the difference between high-level feature embeddings of the decoded and source image:

FaceLock:面部扰动和特征嵌入差异的微扰优化。CVL-DP 的经验表明,像素层级的变化不一定会导致明显的面部特征。因此,我们转向更有效的特征级方法,利用预训练卷积神经网络提取并最大化解码图像与源图像高层特征嵌入之间的差异:

| 𝜹=argmax‖𝜹‖∞≤ϵfFR(𝒟(ℰ(𝐱+𝜹)),𝐱)+λfFE(𝒟(ℰ(𝐱+𝜹),𝐱), | (5) |

where fFE(⋅,⋅) extracts feature embeddings from the input images and compute the distance between them. To solve (5), the widely used projected gradient descent (PGD) [34] method can be employed. We refer more implementation details in Sec. 5.

其中 fFE(⋅,⋅) 从 输入图像中提取特征嵌入并计算它们之间的距离。为求解(5),可以使用广泛使用的投影梯度下降(PGD) [34] 方法。更多实施细节见第 5 节。

4Pitfalls in The Widely-Used Quantitative Evaluation Metrics for Image Editing Tasks

4 广泛使用的图像编辑定量评估指标中的陷阱

In this section, we begin by providing a critical analysis of existing quantitative evaluation metrics for image editing tasks [49, 50, 51, 52]. For the first time, we highlight potential pitfalls in these widely accepted metrics, particularly how they can be easily manipulated to achieve deceptively high scores. Finally, we introduce two new, more robust metrics for evaluating human portrait editing. Detailed mathematical descriptions of the quantitative evaluation metrics discussed in this section can be found in Appx. A.

本节首先,我们对现有图像编辑任务的定量评估指标进行了批判性分析 [49, 50, 51, 52]。 我们首次指出这些广泛接受的指标中的潜在陷阱,特别是它们如何被轻易纵以获得欺骗性的高分。最后,我们引入了两个新的、更稳健的人体肖像编辑评估指标。本节讨论的定量评估指标的详细数学描述可见附录 A。

Existing quantitative metrics suffer from pitfalls and can be manipulated for misleading performance. As discussed in §3, the evaluation of general image editing tasks should consider two aspects: prompt fidelity and image integrity. However, all existing quantitative metrics, including CLIP scores [50], SSIM, and PSNR primarily focus on the former, namely how well the editing instruction is reflected in the edited image. In the following, we revisit each of these metrics and demonstrate the intrinsic pitfalls in their design.

现有的定量指标存在陷阱,容易纵以产生误导性表现。 如第 3 节所述,评估一般图像编辑任务应考虑两个方面:提示精度和图像完整性。然而,所有现有的定量指标,包括 CLIP 分数 [50]、SSIM 和 PSNR,主要关注前者,即编辑指令在编辑图像中的反映程度。以下我们将回顾这些指标,展示其设计中的内在陷阱。

Table 1:Quantitative evaluation on prompt fidelity (CLIP-S, PSNR, SSIM, LPIPS) and image integrity (CLIP-I, FR). Arrows (↑ or ↓) indicate whether a higher or lower value is preferred for a successful defense. All results are averaged over 5 different random seeds for editing. Results in the form a±b represent mean a with std b. The best result within each evaluation metric is highlighted in bold.

表 1: 对提示忠实度(CLIP-S、PSNR、SSIM、LPIPS)和图像完整性(CLIP-I、FR)进行定量评估。箭头( ↑ 或 ↓ )表示成功防御时优先选择更高或更低的数值。所有结果均通过 5 个不同的随机种子进行编辑平均。结果形式 a ±b 为 表示 a 平均值,标准为 b 。每个评估指标中的最佳结果以加粗标示。

| Prompt Fidelity 提示忠实 | Image Integrity 图像完整性 | |||||

|---|---|---|---|---|---|---|

| Method 方法 | CLIP-S↓ | PSNR↓ | SSIM↓ | LPIPS↑ | CLIP-I↓ | FR↓ |

| No Defense 无防御 | 0.118±0.037 | - | - | - | 0.808±0.074 | 0.833±0.111 |

| PhotoGuard Encoder attack PhotoGuard 编码器攻击 | 0.108±0.030 0.108±加减\pm±0.030 | 15.44±2.01 15.44±加减\pm±2.01 | 0.612±0.056 | 0.403±0.071 | 0.670±0.118 | 0.590±0.264 |

| EditShield 编辑盾牌 | 0.110±0.026 | 17.74±2.20 | 0.593±0.072 | 0.382±0.071 | 0.677±0.096 | 0.641±0.231 |

| Untargeted Encoder attack 无目标编码器攻击 | 0.116±0.023 | 16.74±2.27 | 0.589±0.084 0.589±加减\pm±0.084 | 0.371±0.094 | 0.653±0.090 | 0.563±0.236 |

| CW L2 attack CW L2 攻击 | 0.115±0.031 | 19.64±2.46 | 0.701±0.060 | 0.247±0.062 | 0.733±0.089 | 0.725±0.173 |

| VAE attack VAE 攻击 | 0.114±0.034 | 19.40±1.70 | 0.715±0.039 | 0.251±0.060 | 0.786±0.061 | 0.846±0.097 |

| FaceLock (ours) FaceLock (我们的) | 0.114±0.024 | 17.11±2.36 | 0.589±0.079 0.589±加减\pm±0.079 | 0.436±0.065 0.436±加减\pm±0.065 | 0.648±0.089 0.648±加减\pm±0.089 | 0.315±0.109 0.315±加减\pm±0.109 |

CLIP-based scores overemphasize the presence of elements from the editing instructions, often prioritizing over-editing. CLIP-based scores are widely used to assess prompt fidelity by measuring the cosine similarity between the CLIP text embedding of the editing prompt and the visual embedding difference between the edited and source images. While this metric effectively indicates whether the edit has taken effect, it tends to overemphasize the presence of specific elements in the edited image. Fig. 4 shows a contradictory CLIP score ranking compared to the visual editing quality. Although Fig. 4(b) demonstrates a visually balanced outcome between the editing effect ‘turn the hair pink’ and preserving other irrelevant (especially facial) features, the CLIP-based score still assigns higher values to Fig. 4(c) and (d) simply because they show stronger ‘pink hair’ effects, even if the subject’s identity has been completely altered. Therefore, CLIP-based scores can easily prioritize over-editing and be manipulated by replicating elements from the editing instructions.

基于 CLIP 的乐谱过分强调编辑说明中的元素,往往优先考虑过度编辑。 基于 CLIP 的评分被广泛用于评估提示的真实度,通过测量编辑提示的 CLIP 文本嵌入与编辑后图像与源图像之间视觉嵌入差异的余弦相似度。虽然该指标有效指示编辑是否生效,但往往过分强调编辑图像中特定元素的存在。 无花果。4 显示出与视觉编辑质量相反的 CLIP 评分排名。虽然 Fig.4(b)展示了编辑效果“让头发变粉色”与保留其他无关(尤其是面部)特征之间的视觉平衡,基于 CLIP 的评分仍然给图格赋予更高的分数。4(c) 和 (d) 仅仅因为它们表现出更强烈的“粉色头发”效果,即使受试者的身份已被完全改变。因此,基于 CLIP 的乐谱可以轻松优先处理过度编辑,并通过复制编辑指令中的元素进行作。

| Editing Prompt: ‘Let the person’s hair turn pink’. 编辑提示 :“ 让那个人的头发变成粉色 。” | |||

|

|

|

|

|

| CLIP-S=N/A | CLIP-S=0.091 | CLIP-S=0.103 | CLIP-S=0.118 |

| (a) Source Image (a) 图片来源 | (b) Edited I (b) 编辑版 I | (c) Edited II | (d) Edited III |

Figure 4:CLIP score (CLIP-S) of different editing results. The CLIP score provides a contradictory ranking (III > II > I) compared to the visual quality (I > II > III), as it overemphasizes the presence of elements from the editing prompt, thereby favoring over-editing.

图 4: 不同编辑结果的 CLIP 分数(CLIP-S)。CLIP 评分与视觉质量(I > II > III)形成矛盾,因为它过度强调编辑提示元素的存在,从而助长过度编辑 。

SSIM and PSNR over-rely on differences between the edited image and the undefended source, potentially leading to a false sense of successful defense. Unlike CLIP-based scores, metrics such as SSIM and PSNR evaluate whether a defense against editing is successful by comparing the pixel-level statistical differences between the edited images with and without defense. While comparing against the edited image without defense can be effective in some scenarios, concluding that a defense is successful simply because the defended image differs from the undefended one is premature. For example, in Fig. 5, Fig. 5(b) demonstrates a successful edit based on the instruction ‘Let the person wear a hat.’ While Fig. 5(c) shows a genuinely successful defense, Fig. 5(d) is incorrectly assigned a lower SSIM/PSNR score (where lower scores indicate better defense). This suggests a greater pixel-level statistical distance from Fig. 5(b) compared to Fig. 5(c). However, this assessment is flawed, as Fig. 5(d) clearly represents a failed defense, given that a green hat has been applied to the source image. The pixel statistics-based score is misleading simply because the color of the hat differs from that in Fig. 5(b). Therefore, treating the edited image without defense as a gold standard is risky, as the variability of editing effects, even with a single instruction, must be considered.

SSIM 和 PSNR 过度依赖编辑图像与未防卫来源之间的差异,可能导致错误的成功防御感。 与基于 CLIP 的评分不同,SSIM 和 PSNR 等指标通过比较带防御和无防御的编辑图像像素级统计差异来评估防剪防御是否成功。虽然在某些情况下,与未经辩护的编辑图像进行比较是有效的,但仅仅因为被辩护的图像与未辩护的图像不同就断定辩护成功是为时过早的。例如,在图中。5,Fig。5(b) 展示了基于“让本人戴帽子”指令的成功编辑。而 Fig。5(c) 显示了一次真正成功的辩护,见图。5(d)错误地被分配较低的 SSIM/PSNR 分数(分数越低表示防御越好)。这表明与图相比,像素级的统计距离更大。5(b)与图。 第 5(c)条。然而,这一评估存在缺陷,如图所示。5(d)显然代表辩护失败,因为对源图像已加以绿色帽子。基于像素统计的评分具有误导性,仅仅因为帽子的颜色与图中不同。5(b)。因此,将编辑后的图像视为无防御的黄金标准是有风险的,因为即使只有一条指令,也必须考虑编辑效果的多样性。

| Editing Prompt: ‘Let the person wear a hat’. 编辑提示 :“ 让那个人戴帽子 。” | |||

|

|

|

|

|

| SSIM=N/A SSIM=无/A | SSIM=0.869 | SSIM=0.746 | |

| PSNR=N/A PSNR=无/A | PSNR=16.44 | PSNR=11.60 | |

| (a) Source Image (a) 图片来源 | (b) No Defense (b)无辩护 | (c) Defense I (c) 防御 I | (d) Defense II (d) 防御 II |

Figure 5:SSIM and PSNR scores of different defense methods. Although Defense I (b) demonstrates a successful defense, Defense II (d) is assigned a much lower (better) SSIM and PSNR score simply due to its larger pixel-level statistical difference from (b). SSIM and PSNR treat the edited image w/o defense as the gold standard, without accounting for the diversity of possible editing outcomes, which can lead to a false sense of defense success.

图 5: 不同防御方法的 SSIM 和 PSNR 评分。虽然防御 I(b)证明了成功的防御,但防御 II(d)由于其像素级统计差异较大,SSIM 和 PSNR 分数被分配得更低(更好)。SSIM 和 PSNR 将未经防御的编辑图像视为黄金标准,却未考虑可能编辑结果的多样性,这可能导致错误的防御成功感。

LPIPS score as a more robust metric for prompt fidelity evaluation. To address the limitations of pixel-level statistics used by SSIM and PSNR, we propose using the Learned Perceptual Image Patch Similarity (LPIPS [53]) score to evaluate the similarity between edited images. Unlike traditional similarity metrics, LPIPS leverages pretrained neural networks to quantify perceptual differences by comparing high-level semantic features of images, offering a more robust assessment of protection effectiveness. We believe this approach can help mitigate the generalization issues associated with relying on a single reference image, as highlighted in the analysis above.

LPIPS 评分作为更稳健的即时保真度评估指标。 为了解决 SSIM 和 PSNR 所用像素级统计的局限性,我们建议使用学习性感知图像贴片相似度(LPIPS [53])评分来评估编辑图像之间的相似度。与传统相似度指标不同,LPIPS 利用预训练的神经网络,通过比较图像的高层语义特征来量化感知差异,从而提供更稳健的保护效果评估。我们认为,这种方法有助于缓解依赖单一参考图像时的泛化问题,正如上述分析所强调的那样。

Facial recognition similarity score for image integrity evaluation. In this work, we propose evaluating image integrity as a means of assessing defense performance. However, we acknowledge that developing a cost-effective metric for general image integrity—defined as retaining all elements irrelevant to the editing—is challenging due to the diversity of elements present in an image. Therefore, we focus specifically on how well human facial details are preserved after editing, under the assumption that facial features are not altered. For this purpose, we use the facial recognition (FR) similarity score to compare the subjects in the edited and source images. Generally, if the edited image does not statistically (in terms of FR score) and visually resemble the original subject, it indicates a successful defense. Additionally, we use the cosine similarity of the CLIP score (CLIP-I) between the edited and source images as a reference indicator on the general preservation effect.

用于图像完整性评估的面部识别相似度评分。 本研究提出通过评估图像完整性作为评估防御性能的手段。然而,我们承认,由于图像中元素的多样性,开发一个具有成本效益的图像完整性指标——即保留所有与编辑无关的元素——具有挑战性。因此,我们特别关注编辑后人类面部细节的保存程度,前提是面部特征未被改变。为此,我们使用面部识别(FR) 相似度评分来比较编辑后图像和源图像中的受试者。通常,如果编辑后的图像在统计上(以 FR 分数计)和视觉上都与原始对象不相似,则表示成功辩护。此外,我们使用编辑后图像与源图像之间 CLIP 分数的余弦相似度(CLIP-I)作为一般保存效果的参考指标。

5Experiments 5 实验

Figure 6:Qualitative results of different defense methods. Three editing types are included: facial feature modifications ((a) ‘Let the person have a tattoo’; (b) ‘Let the person wear purple makeup’; (c) ‘Turn the person’s hair pink’), accessory adjustments ((d) ‘Let the person wear a police suit’; (e) ‘Let the person wear sunglasses’; (f) ‘Let the person wear a helmet’), and background alterations ((g) ‘Let it be snowy’; (h) ‘Set the background in a library’; (i) ‘Change the background to a beach’). Images in green frames denote successful defenses.

图 6: 不同防御方法的定性结果。包含三种编辑类型:面部特征修改((a)“让人物有纹身”;(b)“让这个人涂紫色妆”;(c)“让其头发变粉色”),配件调整((d)“让该人穿警服”;(e)“让该人戴太阳镜”;(f)“让那个人戴头盔”),以及背景变化((g)“让它下雪”;(h)“将背景设定在图书馆”;(i)“将背景改为海滩”)。绿色框中的图像表示防御成功。

5.1Experiment Setup

5.1 实验装置

Models and dataset. We adopt the widely accepted InstructPix2Pix [9] as our primary target model for prompt-based image editing. In our experiments, we utilize a filtered subset of the CelebA-HQ dataset [54], a high-quality human face attribute dataset widely used in the facial analysis community. The dataset consists of 2,000 human portrait images spanning diverse race, age, and gender groups. For editing prompts, we manually selected 25 prompts across three categories: facial feature modifications (e.g., hair, nose modification), accessory adjustments (e.g., clothing, eyewear), and background alterations.

模型和数据集。 我们采用广泛接受的 InstructPix2Pix [9] 作为基于提示的图像编辑的主要目标模型。在我们的实验中,我们利用了 CelebA-HQ 数据集 [54] 的一个经过筛选的子集,这是一个在面部分析领域广泛使用的高质量人脸属性数据集。该数据集包含 2,000 涵盖不同种族、年龄和性别群体的人体肖像图像。在编辑提示时,我们手动选择 25 了三类提示:面部特征修改( 如发型、鼻子修改)、配饰调整( 如服装、眼镜)和背景修改。

Baselines. We evaluate FaceLock against two established text-guided image editing protection methods: PhotoGuard [1] and EditShield [2], both designed for general image protection. Additionally, we also compare against a variety of widely used methods [55, 56, 57, 58, 59, 60, 61] in adversarial machine learning field, including untargeted encoder attack, CW attack, and VAE attack as other baseline methods. Full details on these baselines are provided in Appx. A.

基线。 我们将 FaceLock 与两种成熟的文本引导图像编辑保护方法进行比较:PhotoGuard [1] 和 EditShield [2],这两种方法均为通用图像保护设计。此外,我们还比较了对抗机器学习领域中多种广泛使用的方法 [55、56、57、58、59、60、61],包括无目标编码攻击、CW 攻击和 VAE 攻击,这些都是其他基线方法。这些基线的完整细节见 Appx。 答 :

Evaluation metrics. We adopt quantitative evaluation metrics across two categories: prompt fidelity and image integrity. For prompt fidelity, we report PSNR, SSIM, and LPIPS scores between edits on protected and unprotected images, as well as the CLIP similarity score (CLIP-S), which captures the alignment between the edit-source image embedding shift and the text embedding. For image integrity, we report the CLIP image similarity score (CLIP-I) and facial recognition similarity score (FR). CLIP-I captures overall visual similarity, while FR specifically measures similarity in biometric information.

评估指标。 我们在提示忠实度和图像完整性两大类别采用定量评估指标。为了提示准确度,我们报告受保护图像与非保护图像编辑之间的 PSNR、SSIM 和 LPIPS 分数,以及 CLIP 相似度评分(CLIP-S),该分数捕捉编辑源图像嵌入偏移与文本嵌入之间的对齐。对于图像完整性,我们报告 CLIP 图像相似度评分(CLIP-I)和面部识别相似度评分(FR)。CLIP-I 捕捉整体视觉相似性,而 FR 则专门测量生物识别信息的相似性。

Implementation details For a fair comparison, we set the perturbation budget to 0.02 and the number of iterations to 100 for all methods, except EditShield, which does not have a default perturbation budget. Additionally, we include the untargeted latent-wise loss from EditShield as a regularization term to stabilize the protection results. Further experimental details are provided in Appx. A.

实现细节 为了公平对比,我们将所有方法的扰动预算设为 0.02,迭代次数设为 100,除了 EditShield,EditShield 没有默认扰动预算。此外,我们还将 EditShield 的非定向潜在损失作为正则化项,以稳定保护效果。更多实验细节见 Appx。 答 :

5.2Experiment Results

5.2 实验结果

Superior performance of FaceLock in human portrait image protection: quantitative and qualitative evaluation. Building upon our analysis of comprehensive evaluation metrics for image editing and protection, we present a quantitative evaluation of various protection methods in Tab. 1. Our proposed method, FaceLock, demonstrates remarkable protection effectiveness across both prompt fidelity and image integrity metrics. Regarding prompt fidelity, FaceLock achieves competitive results in multiple metrics. It ties the lowest SSIM score and maintains a competitive CLIP-S score, and more notably, it excels in the LPIPS metric with the highest score. This aligns with our discussion on the importance of perceptual measures over pixel-based metrics. For image integrity, FaceLock outperforms all baselines significantly, especially in FR scores. This underscores its unparalleled efficacy in protecting the subject’s biometric information against malicious editing. In Fig. 6, we present qualitative results of the three editing types. As we can see, Our approach demonstrates the most pronounced alteration of biometric details between the edited and source images. For example, in the “Let the person wear sunglasses” editing scenario, while the edited image presents a person wearing sunglasses, it also transforms the individual from an elderly man in the source image to a young woman. These results indicate that FaceLock effectively protects images from different editing instructions.

FaceLock 在人像保护中的卓越性能:定量与定性评估。 基于对图像编辑和保护综合评估指标的分析,我们在标签 1 中对各种保护方法进行了定量评估。我们提出的方法 FaceLock 在提示精度和图像完整性指标上都展现出了卓越的保护效果。在提示准确度方面,FaceLock 在多个指标上都取得了竞争成绩。它追平了最低的 SSIM 分数,保持了有竞争力的 CLIP-S 分数,更值得注意的是,它在 LPIPS 指标中以最高分表现出色。这与我们关于感知测量相较于像素指标重要性的讨论相符。在图像完整性方面,FaceLock 显著优于所有基线,尤其是在 FR 分数方面。这凸显了其在保护受试者生物识别信息免受恶意编辑方面的无与伦比的效力。 图 6 中,我们展示了三种编辑类型的定性结果。正如我们所见,我们的方法展示了编辑后图像与原始图像之间生物特征细节变化最显著的差异。例如,在“ 让某人戴太阳镜 ” 的编辑场景中,虽然编辑图像呈现的是戴着太阳镜的人,但同时也将该人从原图中的老年男性转变为年轻女性。这些结果表明,FaceLock 有效地保护了图片免受不同编辑指令的影响。

FaceLock demonstrates consistent protection across diverse editing types. In Tab. 2, we present the facial recognition similarity scores for three editing types: facial feature modifications, accessory adjustments, and background alterations. As shown, FaceLock provides robust protection across all three editing types. Notably, background alterations generally yield the highest facial recognition similarity scores across all methods and the clean edit scenario, indicating that just modifying the background is prone to preserve more facial identity compared to direct facial modifications. Despite the inherent challenge of protecting identity during background alterations, our method still achieves promising protection results in this category, demonstrating its effectiveness even in the most demanding scenarios.

FaceLock 在多种编辑类型中展现了一致的保护效果。 在标签 2 中,我们展示了三种编辑类型的面部识别相似度评分:面部特征修改、配件调整和背景修改。如图所示,FaceLock 在三种编辑类型中都提供了强有力的保护。值得注意的是,背景修改通常在所有方法和干净编辑场景中获得最高的面部识别相似度评分,表明仅修改背景相比直接面部修改更能保留面部识别。尽管在背景更改中保护身份本身存在固有挑战,我们的方法在该类别中仍取得了令人期待的保护效果,即使在最严苛的场景中也展现了其有效性。

1529

1529

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?