import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torchvision import datasets, transforms

batch_size = 200
learning_rate = 0.01
epochs = 10

train_db = datasets.MNIST('./data', train=True, download=True,
                          transform=transforms.Compose([
                              transforms.ToTensor(),
                              transforms.Normalize((0.1307,), (0.3081,))
                          ]))
train_loader = torch.utils.data.DataLoader(
    train_db,
    batch_size=batch_size, shuffle=True)

test_db = datasets.MNIST('./data', train=False, transform=transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.1307,), (0.3081,))
]))

test_loader = torch.utils.data.D

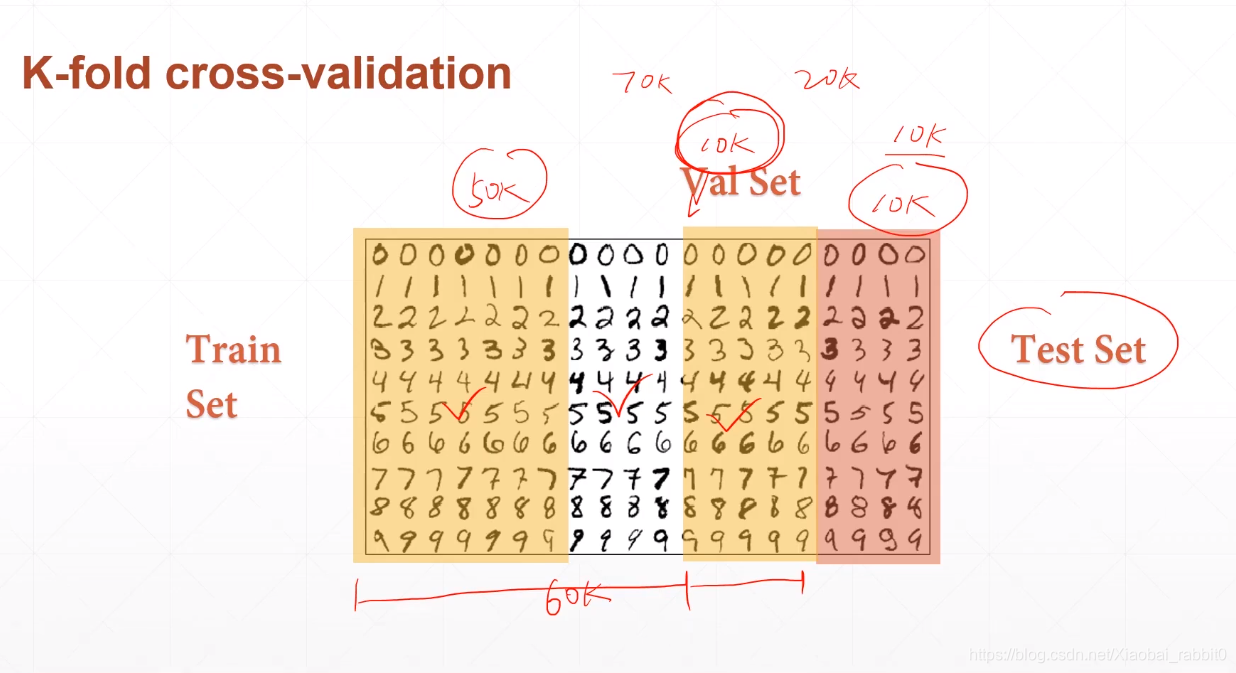

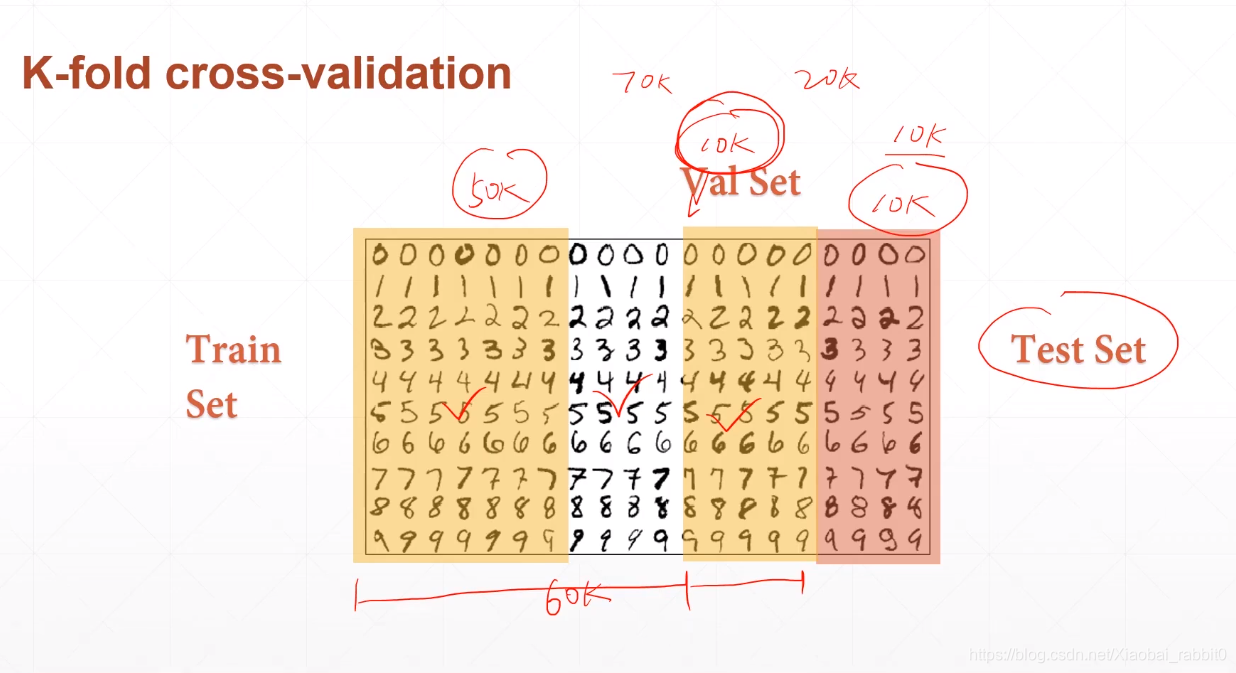

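This article walks through the training, validation, and testing workflow for deep learning models in PyTorch, covering dataset splitting, the concept of cross-validation, and its implementation in PyTorch, with the aim of improving model generalization.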
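The dataset splitting and cross-validation mentioned above can be sketched without any framework dependency. The following is a minimal illustration, not the article's own implementation: the helper name `kfold_indices` and its parameters `n_samples`, `k`, and `seed` are hypothetical. It shuffles the sample indices once and then yields one (training, validation) index pair per fold.

```python
import random


def kfold_indices(n_samples, k, seed=0):
    """Yield (train_idx, val_idx) index lists for k-fold cross-validation.

    Hypothetical helper for illustration; not part of PyTorch.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must satisfy 2 <= k <= n_samples")
    indices = list(range(n_samples))
    # Shuffle once with a fixed seed so the folds are reproducible.
    random.Random(seed).shuffle(indices)
    # Spread the remainder so fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        # The current fold is held out for validation; the rest is training.
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size


if __name__ == "__main__":
    # The MNIST training set has 60,000 images; 6 folds give 50k/10k splits.
    for fold, (train_idx, val_idx) in enumerate(kfold_indices(60000, 6)):
        print(fold, len(train_idx), len(val_idx))
```

Each index list can then be wrapped with `torch.utils.data.Subset(train_db, train_idx)` and passed to a `DataLoader`, in the same way `train_db` is passed above. The test set stays untouched until the final evaluation, so it plays no part in model selection.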

