环境:

python

cpu

说明

需要根据关键字检索文本中的内容。

代码

import os

import re

import pickle

import threading

from pathlib import Path

from functools import lru_cache

from concurrent.futures import ThreadPoolExecutor # 改用线程池(更轻量)

# ========== NPU 环境专属配置(核心修复:限制线程数) ==========

MAX_THREADS = 32 # 限制为 32 线程(适配 NPU 环境 32v CPU,避免超系统限制)

os.environ["OMP_NUM_THREADS"] = str(MAX_THREADS)

INDEX_CACHE_SIZE = 8 * 1024 * 1024 * 1024 # 8GB 索引缓存

# ========== 高速 TXT 读取(编码自动适配) ==========

def fast_read_txt(file_path):

"""高速读取 TXT 文件,自动适配编码"""

encodings = ["utf-8", "gbk", "gb2312", "latin-1"]

for encoding in encodings:

try:

with open(file_path, "r", encoding=encoding, buffering=1024*1024) as f:

content = {}

line_num = 1

for line in f:

line_text = line.strip()

if line_text:

content[line_num] = line_text

line_num += 1

return content

except (UnicodeDecodeError, IOError):

continue

print(f"⚠️ 无法解析 TXT 文件:{file_path}")

return {}

# ========== 预构建索引(改用线程池,避免进程数超限) ==========

def build_txt_index(root_dir, index_path="txt_index.pkl"):

"""构建 TXT 索引(线程池版,适配 NPU 环境)"""

if os.path.exists(index_path):

print(f"📌 加载已存在的 TXT 索引:{index_path}")

with open(index_path, "rb") as f:

return pickle.load(f)

print(f"🔨 构建 TXT 索引(线程数:{MAX_THREADS})...")

index = {}

txt_files = []

# 收集所有 TXT 文件

for root, _, files in os.walk(root_dir):

for file in files:

if file.lower().endswith(".txt"):

txt_files.append(os.path.join(root, file))

print(f"📄 共发现 {len(txt_files)} 个 TXT 文件")

# ========== 核心修复:改用线程池(替代多进程) ==========

with ThreadPoolExecutor(max_workers=MAX_THREADS) as executor:

# 批量读取文件内容(线程池更轻量,不触发进程数限制)

file_contents = list(executor.map(fast_read_txt, txt_files))

# 填充索引

for file_path, content in zip(txt_files, file_contents):

if content:

index[file_path] = content

# 保存索引

with open(index_path, "wb") as f:

pickle.dump(index, f, protocol=pickle.HIGHEST_PROTOCOL)

print(f"✅ TXT 索引构建完成,索引大小:{os.path.getsize(index_path)/1024/1024:.2f} MB")

return index

# ========== 极速检索(纯内存操作) ==========

@lru_cache(maxsize=1)

def get_cached_index(root_dir):

return build_txt_index(root_dir)

def fast_search_txt(root_dir, keyword, ignore_case=True):

"""极速检索 TXT 文件关键字"""

index = get_cached_index(root_dir)

results = {}

flags = re.IGNORECASE if ignore_case else 0

pattern = re.compile(re.escape(keyword), flags)

# 纯内存遍历索引

for file_path, content in index.items():

match_lines = []

for line_num, line_text in content.items():

if pattern.search(line_text):

highlighted = re.sub(pattern, f"\033[31m{keyword}\033[0m", line_text)

match_lines.append({

"line_num": line_num,

"content": highlighted

})

if match_lines:

results[file_path] = match_lines

return results

# ========== 格式化输出结果 ==========

def print_search_results(results, keyword):

if not results:

print(f"\n🚫 未找到包含关键字「{keyword}」的 TXT 文件")

return

print(f"\n🎉 共找到 {len(results)} 个 TXT 文件包含关键字「{keyword}」:")

print("-" * 120)

for file_path, matches in results.items():

print(f"\n📄 文件路径:{file_path}")

print(f"🔍 匹配行数:{len(matches)}")

print("📝 匹配内容(关键字高亮):")

for match in matches:

print(f" 第 {match['line_num']:>4d} 行:{match['content']}")

print("-" * 120)

# ========== 主函数 ==========

if __name__ == "__main__":

ROOT_DIR = "/txt_folder/" # TXT 文件目录,替换为自己的目录

KEYWORD = "密码" # 检索关键字

IGNORE_CASE = True # 是否忽略大小写

print(f"🔍 开始极速检索 TXT 文件...")

search_results = fast_search_txt(ROOT_DIR, KEYWORD, IGNORE_CASE)

print_search_results(search_results, KEYWORD)

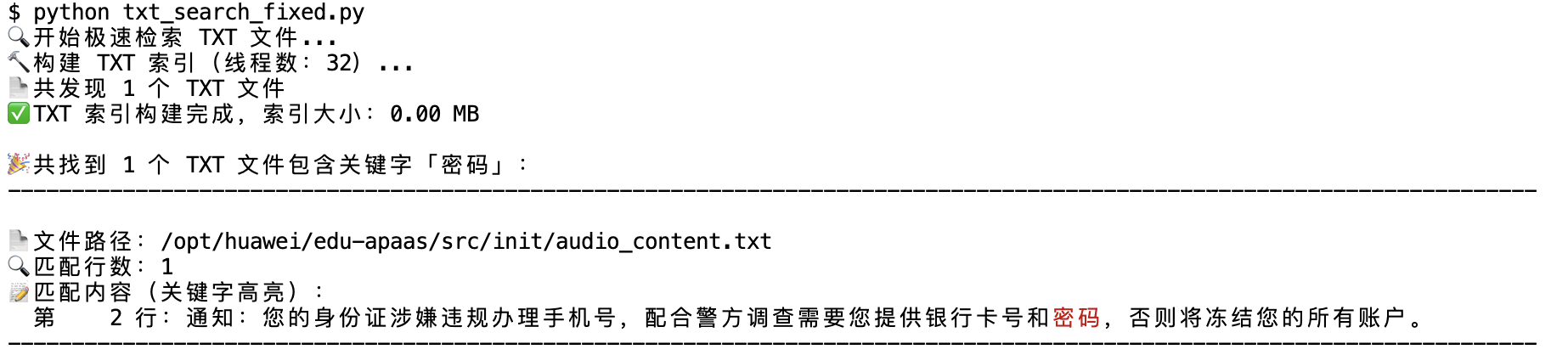

执行结果

243

243

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?