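Auxiliary source code

Persistence source code

Leader and Follower nodes each keep their data both in memory and on disk, so the in-memory data has to be persisted to disk.

- The classes in the org.apache.zookeeper.server.persistence package implement this persistence (snapshots and transaction logs).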

1. Snapshot API

    public interface SnapShot {
        // Deserialize a snapshot into the DataTree and the session map
        long deserialize(DataTree dt, Map<Long, Integer> sessions) throws IOException;

        // Serialize the DataTree and the session map into a snapshot file
        void serialize(DataTree dt, Map<Long, Integer> sessions, File name) throws IOException;

        /**
         * find the most recent snapshot file
         */
        File findMostRecentSnapshot() throws IOException;

        // Release resources
        void close() throws IOException;
    }
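To make the serialize/deserialize contract more concrete, here is a minimal sketch of a snapshot-like byte stream that is followed by an Adler32 checksum and verified on read, in the spirit of the check that FileSnap.deserialize performs later in this article. The class ChecksummedSnapshotSketch and its string payload are assumptions made for illustration; they are not ZooKeeper classes.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.util.zip.Adler32;
import java.util.zip.CheckedInputStream;
import java.util.zip.CheckedOutputStream;

// Hypothetical helper (not part of ZooKeeper): a payload followed by its Adler32 checksum.
final class ChecksummedSnapshotSketch {

    // Write the payload, then append the checksum of the bytes written so far.
    static byte[] write(String payload) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        CheckedOutputStream checked = new CheckedOutputStream(bytes, new Adler32());
        DataOutputStream out = new DataOutputStream(checked);
        out.writeUTF(payload);
        out.flush();
        long checksum = checked.getChecksum().getValue();
        out.writeLong(checksum);
        out.flush();
        return bytes.toByteArray();
    }

    // Read the payload, capture the running checksum, then compare it with the stored value.
    static String read(byte[] snapshot) throws IOException {
        CheckedInputStream checked =
                new CheckedInputStream(new ByteArrayInputStream(snapshot), new Adler32());
        DataInputStream in = new DataInputStream(checked);
        String payload = in.readUTF();
        long computed = checked.getChecksum().getValue();
        long stored = in.readLong();
        if (stored != computed) {
            throw new IOException("CRC corruption in snapshot");
        }
        return payload;
    }

    public static void main(String[] args) throws IOException {
        System.out.println(read(write("snapshot-data")));
    }
}
```

The checksum has to be captured before the stored value is read, because reading the trailing long also passes through the checked stream.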

2. Transaction log API

    public interface TxnLog {
        // Set the server stats
        void setServerStats(ServerStats serverStats);

        // Roll the log
        void rollLog() throws IOException;

        // Append a transaction
        boolean append(TxnHeader hdr, Record r) throws IOException;

        // Read transactions starting from the given zxid
        TxnIterator read(long zxid) throws IOException;

        // Get the last logged zxid
        long getLastLoggedZxid() throws IOException;

        // Truncate the log: discard the transactions after the given zxid
        boolean truncate(long zxid) throws IOException;

        // Get the DbId
        long getDbId() throws IOException;

        // Commit
        void commit() throws IOException;

        // Elapsed time of the log sync
        long getTxnLogSyncElapsedTime();

        // Close the log
        void close() throws IOException;

        // Interface for reading the log
        public interface TxnIterator {
            // Get the transaction header
            TxnHeader getHeader();

            // Get the transaction body
            Record getTxn();

            // Advance to the next record
            boolean next() throws IOException;

            // Release resources
            void close() throws IOException;

            // Get the storage size
            long getStorageSize() throws IOException;
        }
    }
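The append / read / truncate semantics above can be sketched with an in-memory map keyed by zxid. This is only an illustration under simplifying assumptions: InMemoryTxnLogSketch is a hypothetical class, transactions are plain strings, and nothing is written to disk.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

// Hypothetical in-memory stand-in for a transaction log, keyed by zxid.
final class InMemoryTxnLogSketch {
    private final TreeMap<Long, String> txns = new TreeMap<>();

    // append: record one transaction under its zxid
    boolean append(long zxid, String txn) {
        txns.put(zxid, txn);
        return true;
    }

    // read: every transaction whose zxid is >= the given zxid, in zxid order
    List<String> read(long zxid) {
        return new ArrayList<>(txns.tailMap(zxid, true).values());
    }

    // getLastLoggedZxid: the highest zxid in the log, or -1 when the log is empty
    long getLastLoggedZxid() {
        return txns.isEmpty() ? -1L : txns.lastKey();
    }

    // truncate: drop every transaction after the given zxid
    boolean truncate(long zxid) {
        txns.tailMap(zxid, false).clear();
        return true;
    }

    public static void main(String[] args) {
        InMemoryTxnLogSketch log = new InMemoryTxnLogSketch();
        log.append(1L, "create /a");
        log.append(2L, "setData /a");
        System.out.println(log.read(2L));
    }
}
```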

3. Concrete structure

Serialization source code

The zookeeper-jute module contains ZooKeeper's serialization code.

1. Serialization and deserialization methods

    public interface Record {
        // Serialization method
        public void serialize(OutputArchive archive, String tag) throws IOException;

        // Deserialization method
        public void deserialize(InputArchive archive, String tag) throws IOException;
    }

2. Iteration

    public interface Index {
        // Whether iteration has finished
        public boolean done();

        // Advance to the next element
        public void incr();
    }

3. Data types supported by serialization

    /**
     * Interface that all the serializers have to implement.
     */
    public interface OutputArchive {
        public void writeByte(byte b, String tag) throws IOException;
        public void writeBool(boolean b, String tag) throws IOException;
        public void writeInt(int i, String tag) throws IOException;
        public void writeLong(long l, String tag) throws IOException;
        public void writeFloat(float f, String tag) throws IOException;
        public void writeDouble(double d, String tag) throws IOException;
        public void writeString(String s, String tag) throws IOException;
        public void writeBuffer(byte buf[], String tag) throws IOException;
        public void writeRecord(Record r, String tag) throws IOException;
        public void startRecord(Record r, String tag) throws IOException;
        public void endRecord(Record r, String tag) throws IOException;
        public void startVector(List<?> v, String tag) throws IOException;
        public void endVector(List<?> v, String tag) throws IOException;
        public void startMap(TreeMap<?,?> v, String tag) throws IOException;
        public void endMap(TreeMap<?,?> v, String tag) throws IOException;
    }

4. Data types supported by deserialization

    /**
     * Interface that all the Deserializers have to implement.
     */
    public interface InputArchive {
        public byte readByte(String tag) throws IOException;
        public boolean readBool(String tag) throws IOException;
        public int readInt(String tag) throws IOException;
        public long readLong(String tag) throws IOException;
        public float readFloat(String tag) throws IOException;
        public double readDouble(String tag) throws IOException;
        public String readString(String tag) throws IOException;
        public byte[] readBuffer(String tag) throws IOException;
        public void readRecord(Record r, String tag) throws IOException;
        public void startRecord(String tag) throws IOException;
        public void endRecord(String tag) throws IOException;
        public Index startVector(String tag) throws IOException;
        public void endVector(String tag) throws IOException;
        public Index startMap(String tag) throws IOException;
        public void endMap(String tag) throws IOException;
    }
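The Record pattern (each record writes its own fields in a fixed order and reads them back in the same order) can be sketched with the JDK's DataOutput and DataInput standing in for OutputArchive and InputArchive. MiniRecord, SessionRecord and MiniJuteSketch are hypothetical names for this illustration, and the tag arguments of the real interfaces are left out.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;

// Hypothetical, cut-down analogue of Record: the record knows its own field order.
interface MiniRecord {
    void serialize(DataOutput out) throws IOException;
    void deserialize(DataInput in) throws IOException;
}

// A session id with its timeout, similar to the pairs stored in a snapshot.
final class SessionRecord implements MiniRecord {
    long id;
    int timeout;

    @Override
    public void serialize(DataOutput out) throws IOException {
        out.writeLong(id);
        out.writeInt(timeout);
    }

    @Override
    public void deserialize(DataInput in) throws IOException {
        // Fields must be read in exactly the order they were written.
        id = in.readLong();
        timeout = in.readInt();
    }
}

final class MiniJuteSketch {
    static byte[] toBytes(MiniRecord record) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        DataOutputStream out = new DataOutputStream(bytes);
        record.serialize(out);
        out.flush();
        return bytes.toByteArray();
    }

    static void fromBytes(MiniRecord record, byte[] data) throws IOException {
        record.deserialize(new DataInputStream(new ByteArrayInputStream(data)));
    }

    public static void main(String[] args) throws IOException {
        SessionRecord original = new SessionRecord();
        original.id = 42L;
        original.timeout = 30000;
        SessionRecord copy = new SessionRecord();
        fromBytes(copy, toBytes(original));
        System.out.println(copy.id + " " + copy.timeout);
    }
}
```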

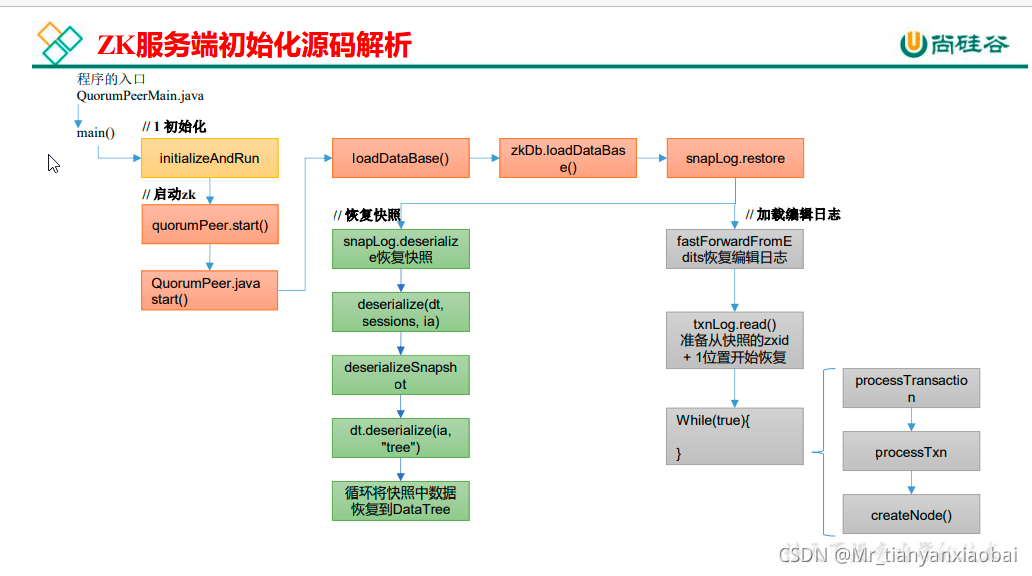

ZK server initialization: source code walkthrough

1. ZK server startup script

- The ZooKeeper service is started with zkServer.sh start. The code of zkServer.sh is shown below:

    #!/usr/bin/env bash

    # use POSIX interface, symlink is followed automatically
    ZOOBIN="${BASH_SOURCE-$0}"
    ZOOBIN="$(dirname "${ZOOBIN}")"
    ZOOBINDIR="$(cd "${ZOOBIN}"; pwd)"

    if [ -e "$ZOOBIN/../libexec/zkEnv.sh" ]; then
      . "$ZOOBINDIR"/../libexec/zkEnv.sh
    else
      # Source zkEnv.sh to pick up its environment variables (ZOOCFG="zoo.cfg")
      . "$ZOOBINDIR"/zkEnv.sh
    fi

    # See the following page for extensive details on setting
    # up the JVM to accept JMX remote management:
    # http://java.sun.com/javase/6/docs/technotes/guides/management/agent.html
    # by default we allow local JMX connections
    if [ "x$JMXLOCALONLY" = "x" ]
    then
      JMXLOCALONLY=false
    fi

    if [ "x$JMXDISABLE" = "x" ] || [ "$JMXDISABLE" = 'false' ]
    then
      echo "ZooKeeper JMX enabled by default" >&2
      if [ "x$JMXPORT" = "x" ]
      then
        # for some reason these two options are necessary on jdk6 on Ubuntu
        # accord to the docs they are not necessary, but otw jconsole cannot
        # do a local attach
        ZOOMAIN="-Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.local.only=$JMXLOCALONLY org.apache.zookeeper.server.quorum.QuorumPeerMain"
      else
        if [ "x$JMXAUTH" = "x" ]
        then
          JMXAUTH=false
        fi
        if [ "x$JMXSSL" = "x" ]
        then
          JMXSSL=false
        fi
        if [ "x$JMXLOG4J" = "x" ]
        then
          JMXLOG4J=true
        fi
        echo "ZooKeeper remote JMX Port set to $JMXPORT" >&2
        echo "ZooKeeper remote JMX authenticate set to $JMXAUTH" >&2
        echo "ZooKeeper remote JMX ssl set to $JMXSSL" >&2
        echo "ZooKeeper remote JMX log4j set to $JMXLOG4J" >&2
        ZOOMAIN="-Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.port=$JMXPORT -Dcom.sun.management.jmxremote.authenticate=$JMXAUTH -Dcom.sun.management.jmxremote.ssl=$JMXSSL -Dzookeeper.jmx.log4j.disable=$JMXLOG4J org.apache.zookeeper.server.quorum.QuorumPeerMain"
      fi
    else
      echo "JMX disabled by user request" >&2
      ZOOMAIN="org.apache.zookeeper.server.quorum.QuorumPeerMain"
    fi

    if [ "x$SERVER_JVMFLAGS" != "x" ]
    then
      JVMFLAGS="$SERVER_JVMFLAGS $JVMFLAGS"
    fi
    … …
    case $1 in
    start)
      echo -n "Starting zookeeper ... "
      if [ -f "$ZOOPIDFILE" ]; then
        if kill -0 `cat "$ZOOPIDFILE"` > /dev/null 2>&1; then
          echo $command already running as process `cat "$ZOOPIDFILE"`.
        fi
      fi
      nohup "$JAVA" $ZOO_DATADIR_AUTOCREATE "-Dzookeeper.log.dir=${ZOO_LOG_DIR}" \
        "-Dzookeeper.log.file=${ZOO_LOG_FILE}" "-Dzookeeper.root.logger=${ZOO_LOG4J_PROP}" \
        -XX:+HeapDumpOnOutOfMemoryError -XX:OnOutOfMemoryError='kill -9 %p' \
        -cp "$CLASSPATH" $JVMFLAGS $ZOOMAIN "$ZOOCFG" > "$_ZOO_DAEMON_OUT" 2>&1 < /dev/null &
      … …
      ;;
    stop)
      echo -n "Stopping zookeeper ... "
      if [ ! -f "$ZOOPIDFILE" ]
      then
        echo "no zookeeper to stop (could not find file $ZOOPIDFILE)"
      else
        $KILL $(cat "$ZOOPIDFILE")
        rm "$ZOOPIDFILE"
        sleep 1
        echo STOPPED
      fi
      exit 0
      ;;
    restart)
      shift
      "$0" stop ${@}
      sleep 3
      "$0" start ${@}
      ;;
    status)
      … …
      ;;
    *)
      echo "Usage: $0 [--config <conf-dir>] {start|start-foreground|stop|restart|status|printcmd}" >&2
    esac

- What zkServer.sh start actually executes under the hood:

    nohup "$JAVA" + a series of JVM arguments + $ZOOMAIN (org.apache.zookeeper.server.quorum.QuorumPeerMain) + "$ZOOCFG" (ZOOCFG="zoo.cfg" in zkEnv.sh)

- So the entry point of the program is the QuorumPeerMain.java class.

2. ZK server startup entry point

- 1) QuorumPeerMain.java

    public static void main(String[] args) {
        // Create a zk node
        QuorumPeerMain main = new QuorumPeerMain();
        try {
            // Initialize the node and run it; args corresponds to zoo.cfg in the launch arguments
            main.initializeAndRun(args);
        } catch (IllegalArgumentException e) {
            ... ...
        }
        LOG.info("Exiting normally");
        System.exit(0);
    }

- 2) initializeAndRun: parses the arguments and starts the scheduled task that deletes expired snapshots

    protected void initializeAndRun(String[] args) throws ConfigException, IOException, AdminServerException {
        // Holds the zk configuration
        QuorumPeerConfig config = new QuorumPeerConfig();
        if (args.length == 1) {
            // 1. Parse the arguments: zoo.cfg and myid
            config.parse(args[0]);
        }

        // 2. Start the scheduled task that deletes expired snapshots (disabled by default)
        // Start and schedule the purge task
        DatadirCleanupManager purgeMgr = new DatadirCleanupManager(config.getDataDir(),
                config.getDataLogDir(), config.getSnapRetainCount(), config.getPurgeInterval());
        purgeMgr.start();

        if (args.length == 1 && config.isDistributed()) {
            // 3. Start the cluster
            runFromConfig(config);
        } else {
            LOG.warn("Either no config or no quorum defined in config, running "
                    + " in standalone mode");
            // there is only server in the quorum -- run as standalone
            ZooKeeperServerMain.main(args);
        }
    }

3. Parsing the arguments: zoo.cfg and myid

- parse

    public void parse(String path) throws ConfigException {
        LOG.info("Reading configuration from: " + path);
        try {
            // Validate the file path and check that the file exists
            File configFile = (new VerifyingFileFactory.Builder(LOG)
                    .warnForRelativePath()
                    .failForNonExistingPath()
                    .build()).create(path);

            Properties cfg = new Properties();
            FileInputStream in = new FileInputStream(configFile);
            try {
                // Load the configuration file
                cfg.load(in);
                configFileStr = path;
            } finally {
                in.close();
            }
            // Parse the configuration file
            parseProperties(cfg);
        } catch (IOException e) {
            throw new ConfigException("Error processing " + path, e);
        } catch (IllegalArgumentException e) {
            throw new ConfigException("Error processing " + path, e);
        }
        ... ...
    }

- parseProperties

    public void parseProperties(Properties zkProp) throws IOException, ConfigException {
        int clientPort = 0;
        int secureClientPort = 0;
        String clientPortAddress = null;
        String secureClientPortAddress = null;
        VerifyingFileFactory vff = new VerifyingFileFactory.Builder(LOG).warnForRelativePath().build();

        // Read the property values from zoo.cfg and assign them to the fields of QuorumPeerConfig
        for (Entry<Object, Object> entry : zkProp.entrySet()) {
            String key = entry.getKey().toString().trim();
            String value = entry.getValue().toString().trim();
            if (key.equals("dataDir")) {
                dataDir = vff.create(value);
            } else if (key.equals("dataLogDir")) {
                dataLogDir = vff.create(value);
            } else if (key.equals("clientPort")) {
                clientPort = Integer.parseInt(value);
            } else if (key.equals("localSessionsEnabled")) {
                localSessionsEnabled = Boolean.parseBoolean(value);
            } else if (key.equals("localSessionsUpgradingEnabled")) {
                localSessionsUpgradingEnabled = Boolean.parseBoolean(value);
            } else if (key.equals("clientPortAddress")) {
                clientPortAddress = value.trim();
            } else if (key.equals("secureClientPort")) {
                secureClientPort = Integer.parseInt(value);
            } else if (key.equals("secureClientPortAddress")) {
                secureClientPortAddress = value.trim();
            } else if (key.equals("tickTime")) {
                tickTime = Integer.parseInt(value);
            } else if (key.equals("maxClientCnxns")) {
                maxClientCnxns = Integer.parseInt(value);
            } else if (key.equals("minSessionTimeout")) {
                minSessionTimeout = Integer.parseInt(value);
            }
            ... ...
        }
        ... ...
        if (dynamicConfigFileStr == null) {
            setupQuorumPeerConfig(zkProp, true);
            if (isDistributed() && isReconfigEnabled()) {
                // we don't backup static config for standalone mode.
                // we also don't backup if reconfig feature is disabled.
                backupOldConfig();
            }
        }
    }

- setupQuorumPeerConfig: sets up the server id, the client port and the peer type

    void setupQuorumPeerConfig(Properties prop, boolean configBackwardCompatibilityMode)
            throws IOException, ConfigException {
        quorumVerifier = parseDynamicConfig(prop, electionAlg, true, configBackwardCompatibilityMode);
        setupMyId();
        setupClientPort();
        setupPeerType();
        checkValidity();
    }

- setupMyId: parses the myid file and sets serverId

    private void setupMyId() throws IOException {
        File myIdFile = new File(dataDir, "myid");
        // standalone server doesn't need myid file.
        if (!myIdFile.isFile()) {
            return;
        }
        BufferedReader br = new BufferedReader(new FileReader(myIdFile));
        String myIdString;
        try {
            myIdString = br.readLine();
        } finally {
            br.close();
        }
        try {
            // Assign the id read from the myid file to serverId
            serverId = Long.parseLong(myIdString);
            MDC.put("myid", myIdString);
        } catch (NumberFormatException e) {
            throw new IllegalArgumentException("serverid " + myIdString + " is not a number");
        }
    }
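The two parsing steps above (loading zoo.cfg as java.util.Properties and reading a numeric id from myid) can be tried out in isolation with the sketch below. ZooCfgSketch is a hypothetical class: it reads from strings instead of files, handles only three keys, and trims the myid text, which the method shown above does not do.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Hypothetical, string-based miniature of the zoo.cfg + myid parsing steps.
final class ZooCfgSketch {
    String dataDir;
    int clientPort;
    int tickTime;
    long serverId = -1L; // stays -1 when no myid text is supplied

    static ZooCfgSketch parse(String cfgText, String myidText) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(cfgText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        ZooCfgSketch cfg = new ZooCfgSketch();
        // Copy the recognised keys onto typed fields, as parseProperties does.
        for (String key : props.stringPropertyNames()) {
            String value = props.getProperty(key).trim();
            if (key.equals("dataDir")) {
                cfg.dataDir = value;
            } else if (key.equals("clientPort")) {
                cfg.clientPort = Integer.parseInt(value);
            } else if (key.equals("tickTime")) {
                cfg.tickTime = Integer.parseInt(value);
            }
        }
        if (myidText != null) {
            String id = myidText.trim(); // assumption of this sketch: tolerate surrounding whitespace
            try {
                cfg.serverId = Long.parseLong(id);
            } catch (NumberFormatException e) {
                throw new IllegalArgumentException("serverid " + id + " is not a number");
            }
        }
        return cfg;
    }

    public static void main(String[] args) {
        ZooCfgSketch cfg = parse("tickTime=2000\ndataDir=/tmp/zk\nclientPort=2181\n", "2");
        System.out.println(cfg.dataDir + " " + cfg.clientPort + " " + cfg.serverId);
    }
}
```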

4. Deleting expired snapshots

A scheduled task can be started to delete expired snapshots. This feature is disabled by default.

- Initializing the timer

The timer is created in initializeAndRun (shown in full in section 2); the relevant part, together with the defaults declared in QuorumPeerConfig, is:

    // 2. Start the scheduled task that deletes expired snapshots (disabled by default)
    // config.getSnapRetainCount() = 3: the minimum number of snapshots to retain
    // config.getPurgeInterval() = 0: the default; 0 means disabled
    // Start and schedule the purge task
    DatadirCleanupManager purgeMgr = new DatadirCleanupManager(config.getDataDir(),
            config.getDataLogDir(), config.getSnapRetainCount(), config.getPurgeInterval());
    purgeMgr.start();

    protected int snapRetainCount = 3;
    protected int purgeInterval = 0;

- The timer's start function

start() binds the timer to the snapshot purge task. PurgeTxnLog.purge asks txnLog.findNRecentSnapshots(num) for the num most recent snapshots; anything older than the oldest snapshot in that list is treated as expired and is removed by purgeOlderSnapshots.

    public void start() {
        if (PurgeTaskStatus.STARTED == purgeTaskStatus) {
            LOG.warn("Purge task is already running.");
            return;
        }
        // By default purgeInterval = 0, so the task is disabled and start() returns immediately
        // Don't schedule the purge task with zero or negative purge interval.
        if (purgeInterval <= 0) {
            LOG.info("Purge task is not scheduled.");
            return;
        }
        // Create a timer
        timer = new Timer("PurgeTask", true);
        // Create the snapshot purge task
        TimerTask task = new PurgeTask(dataLogDir, snapDir, snapRetainCount);
        // With purgeInterval = 1 the check runs once per hour; expired snapshots are deleted if any exist
        timer.scheduleAtFixedRate(task, 0, TimeUnit.HOURS.toMillis(purgeInterval));
        purgeTaskStatus = PurgeTaskStatus.STARTED;
    }

    static class PurgeTask extends TimerTask {
        private File logsDir;
        private File snapsDir;
        private int snapRetainCount;

        public PurgeTask(File dataDir, File snapDir, int count) {
            logsDir = dataDir;
            snapsDir = snapDir;
            snapRetainCount = count;
        }

        @Override
        public void run() {
            LOG.info("Purge task started.");
            try {
                // Purge the expired data
                PurgeTxnLog.purge(logsDir, snapsDir, snapRetainCount);
            } catch (Exception e) {
                LOG.error("Error occurred while purging.", e);
            }
            LOG.info("Purge task completed.");
        }
    }

    public static void purge(File dataDir, File snapDir, int num) throws IOException {
        if (num < 3) {
            throw new IllegalArgumentException(COUNT_ERR_MSG);
        }
        FileTxnSnapLog txnLog = new FileTxnSnapLog(dataDir, snapDir);
        List<File> snaps = txnLog.findNRecentSnapshots(num);
        int numSnaps = snaps.size();
        if (numSnaps > 0) {
            purgeOlderSnapshots(txnLog, snaps.get(numSnaps - 1));
        }
    }

Once the purge task has been set up, initializeAndRun moves on to the communication components: in cluster mode (args.length == 1 && config.isDistributed()) it calls runFromConfig(config); otherwise it runs in standalone (local) mode through ZooKeeperServerMain.main(args).
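The retention rule behind the purge task (keep the N most recent snapshots, treat everything older as expired) can be sketched as a pure function over file names. PurgeSketch is a hypothetical class; it assumes snapshot files are named snapshot.<zxid in hex> and covers only snapshot selection, not the clean-up of transaction log files.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Hypothetical sketch of "keep the N newest snapshots, purge the rest".
final class PurgeSketch {

    // Assumption: the part after the dot is the zxid written in hexadecimal.
    static long zxidOf(String fileName) {
        return Long.parseLong(fileName.substring(fileName.indexOf('.') + 1), 16);
    }

    // Returns the snapshots that fall outside the retain window, oldest first.
    static List<String> snapshotsToPurge(List<String> fileNames, int retain) {
        if (retain < 3) {
            throw new IllegalArgumentException("retain count must be at least 3");
        }
        List<String> sorted = new ArrayList<>(fileNames);
        sorted.sort(Comparator.comparingLong(PurgeSketch::zxidOf));
        int expired = Math.max(sorted.size() - retain, 0);
        return new ArrayList<>(sorted.subList(0, expired));
    }

    public static void main(String[] args) {
        List<String> names = Arrays.asList(
                "snapshot.a", "snapshot.1", "snapshot.3", "snapshot.2", "snapshot.10");
        System.out.println(snapshotsToPurge(names, 3));
    }
}
```

Sorting by the parsed zxid rather than by name matters here: as hex values, snapshot.10 is newer than snapshot.a, which a plain string sort would get wrong.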

5. Initializing the communication components

- Starting the cluster

- runFromConfig: initializes NIO by default (Netty is also supported)

    public void runFromConfig(QuorumPeerConfig config) throws IOException, AdminServerException {
        … …
        LOG.info("Starting quorum peer");
        try {
            ServerCnxnFactory cnxnFactory = null;
            ServerCnxnFactory secureCnxnFactory = null;

            // Initialize the communication component; NIO is the default
            if (config.getClientPortAddress() != null) {
                cnxnFactory = ServerCnxnFactory.createFactory();
                cnxnFactory.configure(config.getClientPortAddress(), config.getMaxClientCnxns(), false);
            }
            if (config.getSecureClientPortAddress() != null) {
                secureCnxnFactory = ServerCnxnFactory.createFactory();
                secureCnxnFactory.configure(config.getSecureClientPortAddress(), config.getMaxClientCnxns(), true);
            }

            // Copy the parsed parameters onto this zookeeper node
            quorumPeer = getQuorumPeer();
            quorumPeer.setTxnFactory(new FileTxnSnapLog(config.getDataLogDir(), config.getDataDir()));
            quorumPeer.enableLocalSessions(config.areLocalSessionsEnabled());
            quorumPeer.enableLocalSessionsUpgrading(config.isLocalSessionsUpgradingEnabled());
            //quorumPeer.setQuorumPeers(config.getAllMembers());
            quorumPeer.setElectionType(config.getElectionAlg());
            quorumPeer.setMyid(config.getServerId());
            quorumPeer.setTickTime(config.getTickTime());
            quorumPeer.setMinSessionTimeout(config.getMinSessionTimeout());
            quorumPeer.setMaxSessionTimeout(config.getMaxSessionTimeout());
            quorumPeer.setInitLimit(config.getInitLimit());
            quorumPeer.setSyncLimit(config.getSyncLimit());
            quorumPeer.setConfigFileName(config.getConfigFilename());

            // Manages the storage of zk data
            quorumPeer.setZKDatabase(new ZKDatabase(quorumPeer.getTxnFactory()));
            quorumPeer.setQuorumVerifier(config.getQuorumVerifier(), false);
            if (config.getLastSeenQuorumVerifier() != null) {
                quorumPeer.setLastSeenQuorumVerifier(config.getLastSeenQuorumVerifier(), false);
            }
            quorumPeer.initConfigInZKDatabase();

            // Manages zk communication
            quorumPeer.setCnxnFactory(cnxnFactory);
            quorumPeer.setSecureCnxnFactory(secureCnxnFactory);
            quorumPeer.setSslQuorum(config.isSslQuorum());
            quorumPeer.setUsePortUnification(config.shouldUsePortUnification());
            quorumPeer.setLearnerType(config.getPeerType());
            quorumPeer.setSyncEnabled(config.getSyncEnabled());
            quorumPeer.setQuorumListenOnAllIPs(config.getQuorumListenOnAllIPs());
            if (config.sslQuorumReloadCertFiles) {
                quorumPeer.getX509Util().enableCertFileReloading();
            }
            … …
            quorumPeer.setQuorumCnxnThreadsSize(config.quorumCnxnThreadsSize);
            quorumPeer.initialize();

            // Start zk
            quorumPeer.start();
            quorumPeer.join();
        } catch (InterruptedException e) {
            // warn, but generally this is ok
            LOG.warn("Quorum Peer interrupted", e);
        }
    }

    // Factory pattern: the instance is obtained via reflection
    static public ServerCnxnFactory createFactory() throws IOException {
        String serverCnxnFactoryName = System.getProperty(ZOOKEEPER_SERVER_CNXN_FACTORY);
        if (serverCnxnFactoryName == null) {
            serverCnxnFactoryName = NIOServerCnxnFactory.class.getName();
        }
        try {
            ServerCnxnFactory serverCnxnFactory = (ServerCnxnFactory) Class.forName(serverCnxnFactoryName)
                    .getDeclaredConstructor().newInstance();
            LOG.info("Using {} as server connection factory", serverCnxnFactoryName);
            return serverCnxnFactory;
        } catch (Exception e) {
            IOException ioe = new IOException("Couldn't instantiate " + serverCnxnFactoryName);
            ioe.initCause(e);
            throw ioe;
        }
    }

- Initializing the NIO server socket (it is not started yet)

The configure implementation in NIOServerCnxnFactory.java:

    public void configure(InetSocketAddress addr, int maxcc, boolean secure) throws IOException {
        if (secure) {
            throw new UnsupportedOperationException("SSL isn't supported in NIOServerCnxn");
        }
        configureSaslLogin();

        maxClientCnxns = maxcc;
        sessionlessCnxnTimeout = Integer.getInteger(
                ZOOKEEPER_NIO_SESSIONLESS_CNXN_TIMEOUT, 10000);
        // We also use the sessionlessCnxnTimeout as expiring interval for
        // cnxnExpiryQueue. These don't need to be the same, but the expiring
        // interval passed into the ExpiryQueue() constructor below should be
        // less than or equal to the timeout.
        cnxnExpiryQueue = new ExpiryQueue<NIOServerCnxn>(sessionlessCnxnTimeout);
        expirerThread = new ConnectionExpirerThread();

        int numCores = Runtime.getRuntime().availableProcessors();
        // 32 cores sweet spot seems to be 4 selector threads
        numSelectorThreads = Integer.getInteger(
                ZOOKEEPER_NIO_NUM_SELECTOR_THREADS,
                Math.max((int) Math.sqrt((float) numCores/2), 1));
        if (numSelectorThreads < 1) {
            throw new IOException("numSelectorThreads must be at least 1");
        }

        numWorkerThreads = Integer.getInteger(
                ZOOKEEPER_NIO_NUM_WORKER_THREADS, 2 * numCores);
        workerShutdownTimeoutMS = Long.getLong(
                ZOOKEEPER_NIO_SHUTDOWN_TIMEOUT, 5000);
        ... ...
        for (int i = 0; i < numSelectorThreads; ++i) {
            selectorThreads.add(new SelectorThread(i));
        }

        // Initialize the NIO server socket and bind it to port 2181 so that it can accept client requests
        this.ss = ServerSocketChannel.open();
        ss.socket().setReuseAddress(true);
        LOG.info("binding to port " + addr);
        // Bind to port 2181
        ss.socket().bind(addr);
        ss.configureBlocking(false);
        acceptThread = new AcceptThread(ss, addr, selectorThreads);
    }
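Two ideas from this step lend themselves to a small experiment: choosing an implementation class by name through a system property with a default fallback, and the default selector-thread formula. In the sketch below, ConnFactory, NioConnFactory, OtherConnFactory and the property name sketch.serverCnxnFactory are all hypothetical stand-ins rather than ZooKeeper names; only the formula is copied from configure.

```java
// Hypothetical stand-in for a connection factory abstraction.
interface ConnFactory {
    String kind();
}

final class NioConnFactory implements ConnFactory {
    @Override
    public String kind() {
        return "nio";
    }
}

final class OtherConnFactory implements ConnFactory {
    @Override
    public String kind() {
        return "other";
    }
}

final class CnxnFactorySketch {
    // Hypothetical property name, used only by this sketch.
    static final String FACTORY_PROPERTY = "sketch.serverCnxnFactory";

    // Pick the class named by the system property, defaulting to the NIO variant,
    // and instantiate it reflectively through its no-argument constructor.
    static ConnFactory createFactory() {
        String name = System.getProperty(FACTORY_PROPERTY);
        if (name == null) {
            name = NioConnFactory.class.getName();
        }
        try {
            return (ConnFactory) Class.forName(name).getDeclaredConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Couldn't instantiate " + name, e);
        }
    }

    // Same expression as in configure: sqrt(cores / 2), but never fewer than 1.
    static int defaultSelectorThreads(int numCores) {
        return Math.max((int) Math.sqrt((float) numCores / 2), 1);
    }

    public static void main(String[] args) {
        System.out.println(createFactory().kind());
        System.out.println(defaultSelectorThreads(Runtime.getRuntime().availableProcessors()));
    }
}
```

With 32 cores the formula gives 4 selector threads, which matches the "sweet spot" comment in the source.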

ZK server data loading: source code walkthrough

1. Cold start: recovering data from snapshots

- 1) Starting the cluster

This is the same runFromConfig method listed in full in the previous section. The lines that matter for data loading are the creation of the ZKDatabase and the call to quorumPeer.start():

    public void runFromConfig(QuorumPeerConfig config) throws IOException, AdminServerException {
        … …
        // Manages the storage of zk data
        quorumPeer.setZKDatabase(new ZKDatabase(quorumPeer.getTxnFactory()));
        … …
        quorumPeer.initialize();

        // Start zk
        quorumPeer.start();
        quorumPeer.join();
        … …
    }

2. Cold start data recovery

- Recovery under a lock (QuorumPeer.start is synchronized)

    public synchronized void start() {
        if (!getView().containsKey(myid)) {
            throw new RuntimeException("My id " + myid + " not in the peer list");
        }
        // Cold-start data recovery
        loadDataBase();
        // Start the communication factory instance
        startServerCnxnFactory();
        try {
            adminServer.start();
        } catch (AdminServerException e) {
            LOG.warn("Problem starting AdminServer", e);
            System.out.println(e);
        }
        // Prepare the election environment
        startLeaderElection();
        // Run the election
        super.start();
    }

- Loading the on-disk data into memory

    // QuorumPeer.java
    private void loadDataBase() {
        try {
            // Load the on-disk data into memory and rebuild the DataTree.
            // zk operations fall into two groups: transactional and non-transactional.
            // Transactional, e.g. zk.create(): each one is assigned a globally unique zxid.
            //   A zxid has 64 bits: the upper 32 bits are the epoch (the term number of a leader),
            //   the lower 32 bits are the txid (the transaction id).
            // Non-transactional, e.g. zk.getData()
            // Recovery steps:
            // (1) restore most of the data from the snapshot file and obtain a lastProcessedZxid
            // (2) replay the transaction log (edit log) up to its last entry, updating lastProcessedZxid
            // (3) the resulting DataTree and lastProcessedZxid mean recovery is complete
            zkDb.loadDataBase();

            // load the epochs
            long lastProcessedZxid = zkDb.getDataTree().lastProcessedZxid;
            long epochOfZxid = ZxidUtils.getEpochFromZxid(lastProcessedZxid);
            try {
                currentEpoch = readLongFromFile(CURRENT_EPOCH_FILENAME);
            } catch (FileNotFoundException e) {
                // pick a reasonable epoch number
                // this should only happen once when moving to a
                // new code version
                currentEpoch = epochOfZxid;
                LOG.info(CURRENT_EPOCH_FILENAME
                        + " not found! Creating with a reasonable default of {}. This should only happen when you are upgrading your installation",
                        currentEpoch);
                writeLongToFile(CURRENT_EPOCH_FILENAME, currentEpoch);
            }
            if (epochOfZxid > currentEpoch) {
                throw new IOException("The current epoch, " + ZxidUtils.zxidToString(currentEpoch)
                        + ", is older than the last zxid, " + lastProcessedZxid);
            }
            try {
                acceptedEpoch = readLongFromFile(ACCEPTED_EPOCH_FILENAME);
            } catch (FileNotFoundException e) {
                // pick a reasonable epoch number
                // this should only happen once when moving to a
                // new code version
                acceptedEpoch = epochOfZxid;
                LOG.info(ACCEPTED_EPOCH_FILENAME
                        + " not found! Creating with a reasonable default of {}. This should only happen when you are upgrading your installation",
                        acceptedEpoch);
                writeLongToFile(ACCEPTED_EPOCH_FILENAME, acceptedEpoch);
            }
            if (acceptedEpoch < currentEpoch) {
                throw new IOException("The accepted epoch, " + ZxidUtils.zxidToString(acceptedEpoch)
                        + " is less than the current epoch, " + ZxidUtils.zxidToString(currentEpoch));
            }
        } catch (IOException ie) {
            LOG.error("Unable to load database on disk", ie);
            throw new RuntimeException("Unable to run quorum server ", ie);
        }
    }

    // ZKDatabase.java
    public long loadDataBase() throws IOException {
        long zxid = snapLog.restore(dataTree, sessionsWithTimeouts, commitProposalPlaybackListener);
        initialized = true;
        return zxid;
    }

    // FileTxnSnapLog.java
    public long restore(DataTree dt, Map<Long, Integer> sessions, PlayBackListener listener)
            throws IOException {
        // Restore the snapshot file data into the DataTree
        long deserializeResult = snapLog.deserialize(dt, sessions);
        FileTxnLog txnLog = new FileTxnLog(dataDir);

        RestoreFinalizer finalizer = () -> {
            // Restore the transaction log data into the DataTree
            long highestZxid = fastForwardFromEdits(dt, sessions, listener);
            return highestZxid;
        };

        if (-1L == deserializeResult) {
            /* this means that we couldn't find any snapshot, so we need to
             * initialize an empty database (reported in ZOOKEEPER-2325) */
            if (txnLog.getLastLoggedZxid() != -1) {
                // ZOOKEEPER-3056: provides an escape hatch for users upgrading
                // from old versions of zookeeper (3.4.x, pre 3.5.3).
                if (!trustEmptySnapshot) {
                    throw new IOException(EMPTY_SNAPSHOT_WARNING + "Something is broken!");
                } else {
                    LOG.warn("{}This should only be allowed during upgrading.", EMPTY_SNAPSHOT_WARNING);
                    return finalizer.run();
                }
            }
            /* TODO: (br33d) we should either put a ConcurrentHashMap on restore()
             * or use Map on save() */
            save(dt, (ConcurrentHashMap<Long, Integer>) sessions);
            /* return a zxid of zero, since we the database is empty */
            return 0;
        }
        return finalizer.run();
    }

- Deserialization methods

    // FileSnap.java
    public long deserialize(DataTree dt, Map<Long, Integer> sessions) throws IOException {
        // we run through 100 snapshots (not all of them)
        // if we cannot get it running within 100 snapshots
        // we should give up
        List<File> snapList = findNValidSnapshots(100);
        if (snapList.size() == 0) {
            return -1L;
        }
        File snap = null;
        boolean foundValid = false;
        // Walk through the snapshots one by one
        for (int i = 0, snapListSize = snapList.size(); i < snapListSize; i++) {
            snap = snapList.get(i);
            LOG.info("Reading snapshot " + snap);
            // Prepare the deserialization environment
            try (InputStream snapIS = new BufferedInputStream(new FileInputStream(snap));
                 CheckedInputStream crcIn = new CheckedInputStream(snapIS, new Adler32())) {
                InputArchive ia = BinaryInputArchive.getArchive(crcIn);
                // Deserialize and restore the data into the DataTree
                deserialize(dt, sessions, ia);
                long checkSum = crcIn.getChecksum().getValue();
                long val = ia.readLong("val");
                if (val != checkSum) {
                    throw new IOException("CRC corruption in snapshot : " + snap);
                }
                foundValid = true;
                break;
            } catch (IOException e) {
                LOG.warn("problem reading snap file " + snap, e);
            }
        }
        if (!foundValid) {
            throw new IOException("Not able to find valid snapshots in " + snapDir);
        }
        dt.lastProcessedZxid = Util.getZxidFromName(snap.getName(), SNAPSHOT_FILE_PREFIX);
        return dt.lastProcessedZxid;
    }

    // Deserialization overload without a return value
    public void deserialize(DataTree dt, Map<Long, Integer> sessions, InputArchive ia)
            throws IOException {
        FileHeader header = new FileHeader();
        header.deserialize(ia, "fileheader");
        if (header.getMagic() != SNAP_MAGIC) {
            throw new IOException("mismatching magic headers "
                    + header.getMagic() + " != " + FileSnap.SNAP_MAGIC);
        }
        // Restore the snapshot data into the DataTree
        SerializeUtils.deserializeSnapshot(dt, ia, sessions);
    }

    // SerializeUtils.java
    public static void deserializeSnapshot(DataTree dt, InputArchive ia,
            Map<Long, Integer> sessions) throws IOException {
        int count = ia.readInt("count");
        while (count > 0) {
            long id = ia.readLong("id");
            int to = ia.readInt("timeout");
            sessions.put(id, to);
            if (LOG.isTraceEnabled()) {
                ZooTrace.logTraceMessage(LOG, ZooTrace.SESSION_TRACE_MASK,
                        "loadData --- session in archive: " + id + " with timeout: " + to);
            }
            count--;
        }
        // Restore the snapshot data into the DataTree
        dt.deserialize(ia, "tree");
    }

    // DataTree.java
    public void deserialize(InputArchive ia, String tag) throws IOException {
        aclCache.deserialize(ia);
        nodes.clear();
        pTrie.clear();
        String path = ia.readString("path");
        // Restore every DataNode in the snapshot into the DataTree
        while (!"/".equals(path)) {
            // Each iteration creates one node object
            DataNode node = new DataNode();
            ia.readRecord(node, "node");
            // Put the DataNode back into the DataTree
            nodes.put(path, node);
            synchronized (node) {
                aclCache.addUsage(node.acl);
            }
            int lastSlash = path.lastIndexOf('/');
            if (lastSlash == -1) {
                root = node;
            } else {
                // Look up the parent node
                String parentPath = path.substring(0, lastSlash);
                DataNode parent = nodes.get(parentPath);
                if (parent == null) {
                    throw new IOException("Invalid Datatree, unable to find "
                            + "parent " + parentPath + " of path " + path);
                }
                // Register this node as a child of its parent
                parent.addChild(path.substring(lastSlash + 1));
                // Track container, TTL and ephemeral nodes (an owner of 0 means a persistent node)
                long eowner = node.stat.getEphemeralOwner();
                EphemeralType ephemeralType = EphemeralType.get(eowner);
                if (ephemeralType == EphemeralType.CONTAINER) {
                    containers.add(path);
                } else if (ephemeralType == EphemeralType.TTL) {
                    ttls.add(path);
                } else if (eowner != 0) {
                    HashSet<String> list = ephemerals.get(eowner);
                    if (list == null) {
                        list = new HashSet<String>();
                        ephemerals.put(eowner, list);
                    }
                    list.add(path);
                }
            }
            path = ia.readString("path");
        }
        nodes.put("/", root);
        // we are done with deserializing the
        // the datatree
        // update the quotas - create path trie
        // and also update the stat nodes
        setupQuota();
        aclCache.purgeUnused();
    }
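The zxid layout described in the loadDataBase comments (upper 32 bits for the epoch, lower 32 bits for the per-epoch transaction counter) comes down to a few bit operations. ZxidSketch below is a hypothetical helper written for this article; it illustrates the layout and is not a copy of ZxidUtils.

```java
// Hypothetical helper illustrating the 64-bit zxid layout: [ epoch : 32 bits | counter : 32 bits ].
final class ZxidSketch {

    // Combine an epoch and a counter into one zxid.
    static long makeZxid(long epoch, long counter) {
        return (epoch << 32) | (counter & 0xffffffffL);
    }

    // Upper 32 bits: the leader epoch.
    static long epochOf(long zxid) {
        return zxid >> 32;
    }

    // Lower 32 bits: the transaction counter within that epoch.
    static long counterOf(long zxid) {
        return zxid & 0xffffffffL;
    }

    public static void main(String[] args) {
        long zxid = makeZxid(5L, 7L);
        System.out.println(Long.toHexString(zxid) + " epoch=" + epochOf(zxid) + " counter=" + counterOf(zxid));
    }
}
```

Because the epoch sits in the high bits, any zxid from a later epoch compares greater than every zxid from an earlier one, which is what the epochOfZxid > currentEpoch sanity check above relies on.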

3. Cold start: recovering data from the transaction log (edit log)

- Back to the restore method in FileTxnSnapLog.java: recovery takes most of the data from the snapshot and then replays from the log the transactions that were logged after that snapshot was taken.

    public long restore(DataTree dt, Map<Long, Integer> sessions, PlayBackListener listener)
            throws IOException {
        // Restore the snapshot file data into the DataTree
        long deserializeResult = snapLog.deserialize(dt, sessions);
        FileTxnLog txnLog = new FileTxnLog(dataDir);

        RestoreFinalizer finalizer = () -> {
            // Restore the transaction log data into the DataTree
            long highestZxid = fastForwardFromEdits(dt, sessions, listener);
            return highestZxid;
        };
        … …
        return finalizer.run();
    }

- The fast-forward function: fastForwardFromEdits

    public long fastForwardFromEdits(DataTree dt, Map<Long, Integer> sessions,
            PlayBackListener listener) throws IOException {
        // Most of the data has already been restored from the snapshot,
        // so replay only needs to start at the snapshot's zxid + 1
        TxnIterator itr = txnLog.read(dt.lastProcessedZxid + 1);
        // The highest zxid in the snapshot; it keeps growing while the log is replayed
        long highestZxid = dt.lastProcessedZxid;
        TxnHeader hdr;
        try {
            // Starting from lastProcessedZxid, keep restoring the remaining data from the transaction log
            while (true) {
                // iterator points to
                // the first valid txn when initialized
                // Get the transaction header (it carries the zxid)
                hdr = itr.getHeader();
                if (hdr == null) {
                    // empty logs
                    return dt.lastProcessedZxid;
                }
                if (hdr.getZxid() < highestZxid && highestZxid != 0) {
                    LOG.error("{}(highestZxid) > {}(next log) for type {}",
                            highestZxid, hdr.getZxid(), hdr.getType());
                } else {
                    highestZxid = hdr.getZxid();
                }
                try {
                    // Apply the logged transaction to the DataTree; highestZxid advances with each one
                    processTransaction(hdr, dt, sessions, itr.getTxn());
                } catch (KeeperException.NoNodeException e) {
                    throw new IOException("Failed to process transaction type: "
                            + hdr.getType() + " error: " + e.getMessage(), e);
                }
                listener.onTxnLoaded(hdr, itr.getTxn());
                if (!itr.next())
                    break;
            }
        } finally {
            if (itr != null) {
                itr.close();
            }
        }
        return highestZxid;
    }

- processTransaction: creating nodes, deleting nodes and all the other transactional operations

    // FileTxnSnapLog.java
    public void processTransaction(TxnHeader hdr, DataTree dt, Map<Long, Integer> sessions, Record txn)
            throws KeeperException.NoNodeException {
        ProcessTxnResult rc;
        switch (hdr.getType()) {
        case OpCode.createSession:
            sessions.put(hdr.getClientId(), ((CreateSessionTxn) txn).getTimeOut());
            if (LOG.isTraceEnabled()) {
                ZooTrace.logTraceMessage(LOG, ZooTrace.SESSION_TRACE_MASK,
                        "playLog --- create session in log: 0x"
                        + Long.toHexString(hdr.getClientId())
                        + " with timeout: " + ((CreateSessionTxn) txn).getTimeOut());
            }
            // give dataTree a chance to sync its lastProcessedZxid
            rc = dt.processTxn(hdr, txn);
            break;
        case OpCode.closeSession:
            sessions.remove(hdr.getClientId());
            if (LOG.isTraceEnabled()) {
                ZooTrace.logTraceMessage(LOG, ZooTrace.SESSION_TRACE_MASK,
                        "playLog --- close session in log: 0x"
                        + Long.toHexString(hdr.getClientId()));
            }
            rc = dt.processTxn(hdr, txn);
            break;
        default:
            // Creating nodes, deleting nodes and all the other transactional operations
            rc = dt.processTxn(hdr, txn);
        }

        /**
         * Snapshots are lazily created. So when a snapshot is in progress,
         * there is a chance for later transactions to make into the
         * snapshot. Then when the snapshot is restored, NONODE/NODEEXISTS
         * errors could occur. It should be safe to ignore these.
         */
        if (rc.err != Code.OK.intValue()) {
            LOG.debug("Ignoring processTxn failure hdr: {}, error: {}, path: {}",
                    hdr.getType(), rc.err, rc.path);
        }
    }

    // DataTree.java
    public ProcessTxnResult processTxn(TxnHeader header, Record txn, boolean isSubTxn) {
        ProcessTxnResult rc = new ProcessTxnResult();
        try {
            rc.clientId = header.getClientId();
            rc.cxid = header.getCxid();
            rc.zxid = header.getZxid();
            rc.type = header.getType();
            rc.err = 0;
            rc.multiResult = null;
            switch (header.getType()) {
            case OpCode.create:
                CreateTxn createTxn = (CreateTxn) txn;
                rc.path = createTxn.getPath();
                createNode(
                        createTxn.getPath(),
                        createTxn.getData(),
                        createTxn.getAcl(),
                        createTxn.getEphemeral() ? header.getClientId() : 0,
                        createTxn.getParentCVersion(),
                        header.getZxid(), header.getTime(), null);
                break;
            case OpCode.create2:
                ....
            }
        }
    }
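The replay idea in fastForwardFromEdits (start from the state restored from the snapshot, then apply every logged transaction whose zxid is greater than lastProcessedZxid) can be sketched with plain maps. ReplaySketch and its Txn type are hypothetical; the "tree" is a flat path-to-value map and a transaction is either a set or a delete.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical miniature of snapshot + log replay.
final class ReplaySketch {

    // A logged transaction: set path to value, or delete path when value is null.
    static final class Txn {
        final long zxid;
        final String path;
        final String value;

        Txn(long zxid, String path, String value) {
            this.zxid = zxid;
            this.path = path;
            this.value = value;
        }
    }

    // Applies every txn with zxid > lastProcessedZxid and returns the highest zxid seen.
    static long fastForward(Map<String, String> tree, long lastProcessedZxid, TreeMap<Long, Txn> log) {
        long highestZxid = lastProcessedZxid;
        for (Txn txn : log.tailMap(lastProcessedZxid + 1, true).values()) {
            if (txn.value == null) {
                tree.remove(txn.path);
            } else {
                tree.put(txn.path, txn.value);
            }
            highestZxid = txn.zxid;
        }
        return highestZxid;
    }

    public static void main(String[] args) {
        Map<String, String> tree = new HashMap<>();
        tree.put("/a", "1"); // state restored from a snapshot taken at zxid 2
        TreeMap<Long, Txn> log = new TreeMap<>();
        log.put(3L, new Txn(3L, "/b", "x"));
        log.put(4L, new Txn(4L, "/a", null));
        System.out.println(fastForward(tree, 2L, log) + " " + tree);
    }
}
```

Transactions at or below the snapshot's zxid are skipped entirely, which is why the real code starts reading at dt.lastProcessedZxid + 1.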
