This article walks through the code path that the cameraCoreTest tool follows on the Samsung Auto V7 platform to dump a camera raw image.

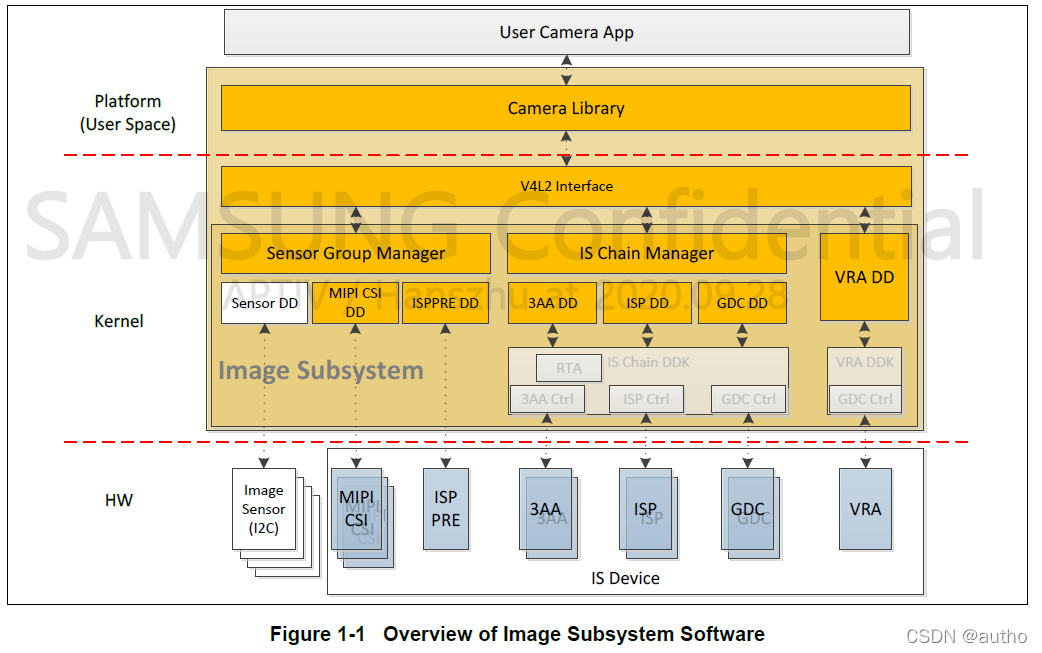

1. Samsung camera subsystem brief

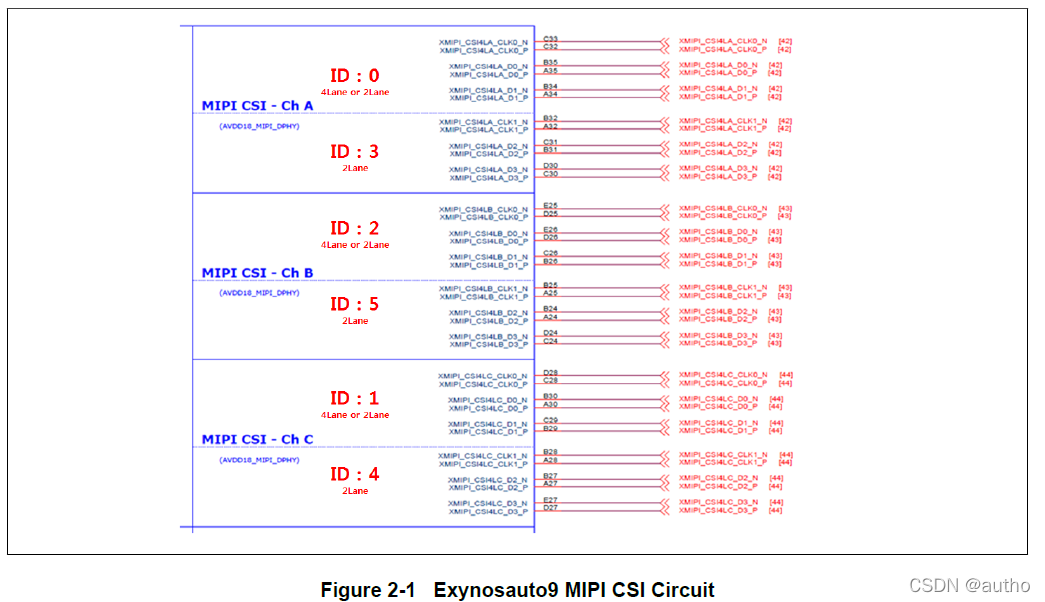

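The IS Chain manager part is not used for now. The SoC contains three 4-lane CSI interfaces (CSIA, CSIB, CSIC), which can be configured through registers as six interfaces, as shown in the figure below; the split-off interfaces, ID3/4/5, have only 2 lanes each.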

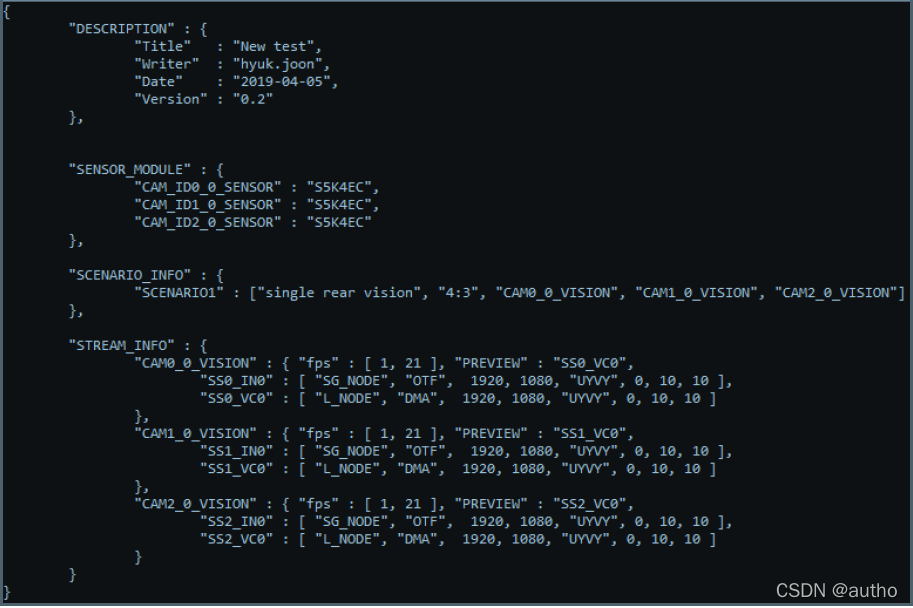

2. Camera library Json

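The JSON is divided into scenario and stream parts; the cameraCoreTest tool parses the configuration data from it. For the meaning of the individual settings, see ExynosAuto9_Miscellaneous_ImageSubsystem_ALL_REV 0.05.pdf (download link).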

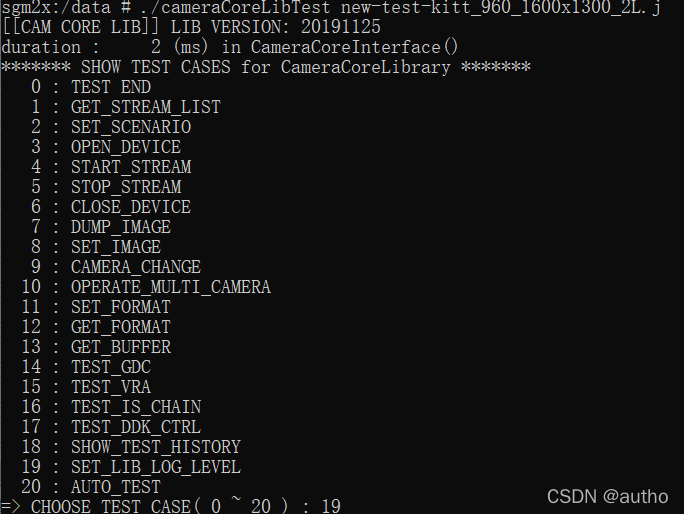

3. cameraCoreTest test steps

- ./cameraCoreLibTest new-test-kitt_960_1600x1300.j

- Test the vch0

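2->1 (test case 2 = SET_SCENARIO, then input 1, presumably SCENARIO1)

3->4->0 (test case 3 = OPEN_DEVICE, stream 4, buffer type 0 = DMA_CALLBACK)

4->4 (test case 4 = START_STREAM, stream 4)

7->4 (test case 7 = DUMP_IMAGE, then input 4, presumably stream 4)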

>>>Get the cameralib_1600x1300_UYVY_8bit_20221110_05_11_13.raw file
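To take a quick look at the dumped frame, it is often enough to extract the luma samples. The following is a minimal sketch, assuming the .raw file holds nothing but tightly packed UYVY 4:2:2 data (no header, no stride padding), as the file name suggests; `uyvy_to_luma` is a hypothetical helper and not part of cameraCoreTest.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Extracts the luma (Y) samples from a packed UYVY 4:2:2 frame.
// UYVY stores each pixel pair as four bytes: U0 Y0 V0 Y1.
// Assumption: the buffer is tightly packed (no stride padding, no header).
std::vector<std::uint8_t> uyvy_to_luma(const std::vector<std::uint8_t>& uyvy,
                                       std::size_t width, std::size_t height)
{
    assert(uyvy.size() >= width * height * 2);
    std::vector<std::uint8_t> luma(width * height);
    for (std::size_t i = 0; i < width * height; ++i) {
        luma[i] = uyvy[2 * i + 1];  // Y is every second byte, starting at offset 1
    }
    return luma;
}
```

Writing the returned bytes after a `P5\n1600 1300\n255\n` header gives a PGM file that most image viewers can open as a grayscale picture.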

4. Detailed steps and code

The code is analyzed below in execution order. To trace the code flow, raise the log level with option 19 (SET_LIB_LOG_LEVEL).

4.1 Initializing CAMCORE LIB

Running ./cameraCoreLibTest new-test-kitt_960_1600x1300.j constructs the CameraCoreTestCase class:

int main(int argc, char* argv[])

{

(void)argc;

CameraCoreTestCase* camTc = new CameraCoreTestCase(argv[1]);

camTc->choose_test_cases();

delete camTc;

return 0;

}

The _initialize function of the CameraCoreTestCase class creates a new CameraCoreInterface(conf_file_name); and then calls _register_test_cases();

CameraCoreInterface::_initialize creates the CameraCoreScenario, CameraCorePipeline and CameraCoreFile objects, then parses the JSON file to obtain the scenario and stream information.

bool CameraCoreInterface::_initialize()

{

CCL_LOGI("initilaize CameraCoreLib.");

scenarioMgr[_obj_order] = new CameraCoreScenario();

pipelineMgr[_obj_order] = new CameraCorePipeline();

fileMgr[_obj_order] = new CameraCoreFile();

pipelineMgr[_obj_order]->register_scenario_object((IScenarioExternalCallback*)

scenarioMgr[_obj_order]);

pipelineMgr[_obj_order]->register_file_object((ICameraCoreFileCallback*)

fileMgr[_obj_order]);

fileMgr[_obj_order]->register_scenario_object((IScenarioExternalCallback*)

scenarioMgr[_obj_order]);

if(!_set_default_configuration())

{

return false;

}

stream_info = scenarioMgr[_obj_order]->get_stream_list();

for(int i = 0; i < NUM_STREAM; ++i)

{

_libState[i] = CAMCORE_INITIALIZED;

}

CCL_LOGI("CameraCoreLib is initilaized.");

return true;

}

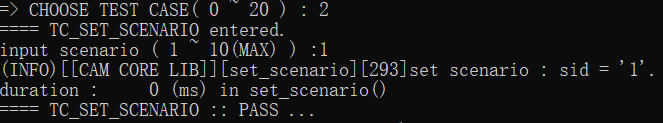

4.2 set scenario

The platform defines only one scenario.

# "SCENARIO_INFO"

# <stream> : [<brief description>, <feature>, 1st(=rear) stream, 2nd(=front) stream, 3rd, ..., 5th stream, 1st, 2nd, ..., 5th reprocessing(=capture) stream ]

"SCENARIO_INFO" : {

"SCENARIO1" : ["Sensor only", "4:3", "CAM1_0_VISION","CAM1_1_VISION","CAM1_2_VISION","CAM1_3_VISION"]

# Other scenarios ...

},

In CAM1_0_VISION, the 1 is the index of the CSI that the camera uses and the 0 is the virtual channel.
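The naming rule can be sketched as a small parser. The numeric id formula `csi * 4 + vc` is an assumption inferred from the OPEN_DEVICE log in section 4.3, which lists `1_0(=4)` through `1_3(=7)`; `StreamName`, `parse_stream_name` and `stream_id` are hypothetical names, not library APIs.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

struct StreamName {
    int csi;  // CSI index used by the camera
    int vc;   // virtual channel on that CSI
};

// Parses names of the form "CAM<csi>_<vc>_<suffix>", e.g. "CAM1_0_VISION".
bool parse_stream_name(const std::string& name, StreamName& out)
{
    int csi = 0;
    int vc = 0;
    if (std::sscanf(name.c_str(), "CAM%d_%d_", &csi, &vc) != 2) {
        return false;
    }
    out.csi = csi;
    out.vc = vc;
    return true;
}

// Assumption taken from the log output (1_0 -> 4 ... 1_3 -> 7):
// four virtual channels per CSI, numbered consecutively.
int stream_id(const StreamName& s)
{
    assert(s.vc >= 0 && s.vc < 4);
    return s.csi * 4 + s.vc;
}
```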

4.3 open device

=> CHOOSE TEST CASE( 0 ~ 20 ) : 3

==== TC_OPEN_DEVICE entered.

duration : 0 (ms) in get_stream_list()

stream id : 1_0(=4), state : CAN_USE

stream id : 1_1(=5), state : CAN_USE

stream id : 1_2(=6), state : CAN_USE

stream id : 1_3(=7), state : CAN_USE

input stream among the above stream :4

input buffer type ( DMA_CALLBACK : 0, DMA_DIRECT_CALL : 1, DMA_KEEP_CALL : 2,

USERPTR_CALLBACK : 4, USERPTR_DIRECT_CALL : 5, USERPTR_KEEP_CALL : 6 )0

(INFO)[[CAM CORE LIB]][open_device][190]open stream : '4'.

(DEBUG)[[CAM CORE LIB]][_set_cap_nodes][724]sid = 1, using[4] = 64, node count = 2

(INFO)[[CAM CORE LIB]][_set_group_dqbuf][842]===== DQBUF THREAD GROUP =====

(INFO)[[CAM CORE LIB]][_set_group_dqbuf][860]Thread 0 : SS1_IN0 ( leader : SS1_IN0 ) SS1_VC0 ( leader : SS1_IN0 )

(INFO)[[CAM CORE LIB]][_set_group_dqbuf][862]==============================

(INFO)[[CAM CORE LIB]][_set_group_dqbuf][923]= DQBUF NODE SEARCH FOR QBUF =

(INFO)[[CAM CORE LIB]][_set_group_dqbuf][941]Thread 0 : SS1_IN0 -> SS1_IN0 SS1_VC0 -> SS1_VC0

(INFO)[[CAM CORE LIB]][_set_group_dqbuf][943]==============================

(INFO)[[CAM CORE LIB]][_set_group_qbuf][978]===== QBUF THREAD GROUP ======

(INFO)[[CAM CORE LIB]][_set_group_qbuf][994]Thread 0 : SS1_IN0 SS1_VC0

(INFO)[[CAM CORE LIB]][_set_group_qbuf][996]==============================

(INFO)[[CAM CORE LIB]][_set_bufs][1032]SET BUFFERS : CAM1_0_VISION

====> calc_planes_and_buffer_size 59565955,0

(DEBUG)[[CAM CORE LIB]][_set_bufs][1050]SS1_IN0 node : 1600x1300, alloc_buffers = 4, planes = 2

====> ion_alloc_modern

ID 0, TYPE 0, NAME ion_system_heap(DEBUG)[[CAM CORE LIB]][_alloc_buffers][191]alloc buffer: plane 0, dmafd 0x5, addr 0x7acbf5c000, length 4160000

====> ion_alloc_modern

ID 0, TYPE 0, NAME ion_system_heap(DEBUG)[[CAM CORE LIB]][_alloc_buffers][191]alloc buffer: plane 1, dmafd 0x6, addr 0x7acbf52000, length 40960

====> ion_alloc_modern

ID 0, TYPE 0, NAME ion_system_heap(DEBUG)[[CAM CORE LIB]][_alloc_buffers][191]alloc buffer: plane 0, dmafd 0x7, addr 0x7acbb5a000, length 4160000

====> ion_alloc_modern

ID 0, TYPE 0, NAME ion_system_heap(DEBUG)[[CAM CORE LIB]][_alloc_buffers][191]alloc buffer: plane 1, dmafd 0x8, addr 0x7acbb50000, length 40960

====> ion_alloc_modern

ID 0, TYPE 0, NAME ion_system_heap(DEBUG)[[CAM CORE LIB]][_alloc_buffers][191]alloc buffer: plane 0, dmafd 0x9, addr 0x7acb758000, length 4160000

====> ion_alloc_modern

ID 0, TYPE 0, NAME ion_system_heap(DEBUG)[[CAM CORE LIB]][_alloc_buffers][191]alloc buffer: plane 1, dmafd 0xa, addr 0x7acb74e000, length 40960

====> ion_alloc_modern

ID 0, TYPE 0, NAME ion_system_heap(DEBUG)[[CAM CORE LIB]][_alloc_buffers][191]alloc buffer: plane 0, dmafd 0xb, addr 0x7acb356000, length 4160000

====> ion_alloc_modern

ID 0, TYPE 0, NAME ion_system_heap(DEBUG)[[CAM CORE LIB]][_alloc_buffers][191]alloc buffer: plane 1, dmafd 0xc, addr 0x7acb34c000, length 40960

====> calc_planes_and_buffer_size 59565955,0

(DEBUG)[[CAM CORE LIB]][_set_bufs][1050]SS1_VC0 node : 1600x1300, alloc_buffers = 4, planes = 2

====> ion_alloc_modern

ID 0, TYPE 0, NAME ion_system_heap(DEBUG)[[CAM CORE LIB]][_alloc_buffers][191]alloc buffer: plane 0, dmafd 0xd, addr 0x7acaf54000, length 4160000

====> ion_alloc_modern

ID 0, TYPE 0, NAME ion_system_heap(DEBUG)[[CAM CORE LIB]][_alloc_buffers][191]alloc buffer: plane 1, dmafd 0xe, addr 0x7acaf4a000, length 40960

====> ion_alloc_modern

ID 0, TYPE 0, NAME ion_system_heap(DEBUG)[[CAM CORE LIB]][_alloc_buffers][191]alloc buffer: plane 0, dmafd 0xf, addr 0x7acab52000, length 4160000

====> ion_alloc_modern

ID 0, TYPE 0, NAME ion_system_heap(DEBUG)[[CAM CORE LIB]][_alloc_buffers][191]alloc buffer: plane 1, dmafd 0x10, addr 0x7acab48000, length 40960

====> ion_alloc_modern

ID 0, TYPE 0, NAME ion_system_heap(DEBUG)[[CAM CORE LIB]][_alloc_buffers][191]alloc buffer: plane 0, dmafd 0x11, addr 0x7aca750000, length 4160000

====> ion_alloc_modern

ID 0, TYPE 0, NAME ion_system_heap(DEBUG)[[CAM CORE LIB]][_alloc_buffers][191]alloc buffer: plane 1, dmafd 0x12, addr 0x7aca746000, length 40960

====> ion_alloc_modern

ID 0, TYPE 0, NAME ion_system_heap(DEBUG)[[CAM CORE LIB]][_alloc_buffers][191]alloc buffer: plane 0, dmafd 0x13, addr 0x7aca34e000, length 4160000

====> ion_alloc_modern

ID 0, TYPE 0, NAME ion_system_heap(DEBUG)[[CAM CORE LIB]][_alloc_buffers][191]alloc buffer: plane 1, dmafd 0x14, addr 0x7aca344000, length 40960

(INFO)[[CAM CORE LIB]][_set_default_metadata][1166]==============================

(INFO)[[CAM CORE LIB]][_set_default_metadata][1167]METADATA BUFFER COPY

(INFO)[[CAM CORE LIB]][_set_default_metadata][1168]MAGICNUMBER : 0x23456789

(INFO)[[CAM CORE LIB]][_set_default_metadata][1196]Thread 0 : SS1_IN0->0x23456789 SS1_VC0->0x23456789

(INFO)[[CAM CORE LIB]][_set_default_metadata][1198]==============================

(INFO)[[CAM CORE LIB]][_set_default_metadata][1211]==============================

(INFO)[[CAM CORE LIB]][_set_default_metadata][1212]METADATA GROUP NODE SETTING

(INFO)[[CAM CORE LIB]][show_metadata_for_group][360]Leader 105: input 0 0 1600 1300 / out 0 0 1600 1300

(INFO)[[CAM CORE LIB]][show_metadata_for_group][379]Capture[0] 129 : input 0 0 1600 1300 / output 0 0 1600 1300

(INFO)[[CAM CORE LIB]][_set_default_metadata][1223]==============================

(INFO)[[CAM CORE LIB]][open_device][197]stream ( '4' ) is opened.

duration : 301 (ms) in open_device()

==== TC_OPEN_DEVICE :: PASS ...

As the log shows, four streams are available. Here we pick the stream on channel 0 and select the DMA buffer type. The open device call flow follows.

CameraCoreInterface::open_device

--> pipelineMgr[_obj_order]->prepare_pipeline

--------->CameraCorePipeline::_set_bufs

--------------->bufferMgr->calc_planes_and_buffer_size computes the size from the width, height, bitwidth and plane values in the JSON

--> bufferMgr->alloc_node_buffer allocates the buffers according to size and plane

------->_alloc_buffers(buffer, prevBuffer, size, buf_type, NULL);

------------>buffer->dmafd[i] = exynos_ion_alloc(_ion_fd, buffer->length[i],

EXYNOS_ION_HEAP_SYSTEM_MASK, 0); allocates the buffer from ION through a system call. The ION device was already opened during initialization by bufferMgr->open_ion();
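The plane 0 length in the log (4160000 bytes for 1600x1300 UYVY 8-bit) can be reproduced with simple arithmetic. The body of calc_planes_and_buffer_size is not shown in this article, so the following is only a sketch under the assumption of a packed format without stride padding; `packed_plane_size` is a hypothetical helper. The 40960-byte second plane comes from the library and is not derived here.

```cpp
#include <cassert>
#include <cstddef>

// Bytes needed for one packed image plane.
// bits_per_pixel is 16 for packed UYVY 4:2:2 with 8-bit samples
// (two pixels share one U and one V sample).
std::size_t packed_plane_size(std::size_t width, std::size_t height,
                              std::size_t bits_per_pixel)
{
    assert(bits_per_pixel % 8 == 0);
    return width * height * (bits_per_pixel / 8);
}
```

For the configuration used here, `packed_plane_size(1600, 1300, 16)` yields 1600 * 1300 * 2 = 4160000, matching the `length 4160000` entries printed by _alloc_buffers.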

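For the underlying ION code, see the article "Android之ION内存管理分析" (an analysis of ION memory management on Android), which covers registration of the ION device and the __ion_alloc allocation function. The memory is ultimately allocated with alloc_pages and a scatterlist is created for it.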

After the buffer is allocated, it is exported through the dma_buf mechanism with dma_buf_export (for dma_buf, see "Linux内核笔记之DMA_BUF"); ion_alloc finally returns a file descriptor (fd).

Once the kernel has returned the fd, user space maps the memory with mmap. mmap invokes the .mmap callback in the dma_buf's dma_buf_ops, which ultimately calls buffer->heap->ops->map_user.
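The user-space half of this step (turning a buffer fd into a usable pointer) can be sketched as follows. This is not the library's code: since an ION device is not available on an ordinary machine, the hypothetical helper `make_demo_fd` uses an unnamed temporary file as a stand-in for the dma-buf fd, and `map_buffer` is likewise an illustrative name.

```cpp
#include <cstddef>
#include <cstdio>
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>

// Maps `length` bytes of the buffer behind `fd` into this process.
// Returns nullptr on failure.
void* map_buffer(int fd, std::size_t length)
{
    void* addr = ::mmap(nullptr, length, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    return addr == MAP_FAILED ? nullptr : addr;
}

// Stand-in for a dma-buf fd (assumption for the demo only): an unnamed
// temporary file resized to `length` bytes. Returns -1 on failure.
int make_demo_fd(std::size_t length)
{
    std::FILE* f = std::tmpfile();
    if (!f) {
        return -1;
    }
    int fd = ::dup(fileno(f));  // keep the file alive after fclose
    std::fclose(f);
    if (fd >= 0 && ::ftruncate(fd, static_cast<off_t>(length)) != 0) {
        ::close(fd);
        return -1;
    }
    return fd;
}
```

With a real dma-buf fd the `mmap` call has the same shape; only the origin of the fd differs.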

4.4 start stream

=> CHOOSE TEST CASE( 0 ~ 20 ) : 4

==== TC_START_STREAM entered.

duration : 0 (ms) in get_stream_list()

stream id : 1_0(=4), state : OPENED

stream id : 1_1(=5), state : CAN_USE

stream id : 1_2(=6), state : CAN_USE

stream id : 1_3(=7), state : CAN_USE

input stream among the above stream :4

(INFO)[[CAM CORE LIB]][start_stream][518]start stream : '4'.

====CameraCorePipeline::start_pipeline create qbuf/dqbuf threads!====>CameraCorePipeline::_create_threads

====>CameraCoreThread::create_thread

(DEBUG)[[CAM CORE LIB]][create_thread][98]QBUF_THREAD : thread ( id = 0XC9B42090) is created.

====>CameraCoreThread::create_thread

(DEBUG)[[CAM CORE LIB]][create_thread][98]DQBUF_THREAD : thread ( id = 0XCA343090) is created.

(INFO)[[CAM CORE LIB]][_create_threads][1613]a====>CameraCoreThread _thread_thisll the threads are created.

=====CAM THREAD - dqbuf [name = DQBUF_THREAD : id = 0XCA343090](VERBOSE)[[CAM CORE LIB]][chmod_device][45]pid = 20985 [device : /dev/video205]

=====CAM THREAD - dqbuf [name = DQBUF_THREAD : id = 0XCA343090](VERBOSE)[[CAM CORE LIB]][chmod_device][45]pid = 0 [device : /dev/video205]

====>CameraCoreThread _thread_this====CAM THREAD - qbuf [name = QBUF_THREAD : id = 0XC9B42090]

(DEBUG)[[CAM CORE LIB]][open_node][124]SS1_IN0 node is opened.

(DEBUG)[[CAM CORE LIB]][set_config_leader][344]SS1_IN0 group input type = OTF

(DEBUG)[[CAM CORE LIB]][set_config_leader][345]SS1_IN0 group leader vindex = 105

(DEBUG)[[CAM CORE LIB]][set_config_leader][348]SS1_IN0 s_input index = 0x4016911

(VERBOSE)[[CAM CORE LIB]][chmod_device][45]pid = 20986 [device : /dev/video229]

(VERBOSE)[[CAM CORE LIB]][chmod_device][45]pid = 0 [device : /dev/video229]

(DEBUG)[[CAM CORE LIB]][open_node][124]SS1_VC0 node is opened.

(DEBUG)[[CAM CORE LIB]][set_config_leader][348]SS1_VC0 s_input index = 0x0

(DEBUG)[[CAM CORE LIB]][check_has_leader][442]SS1_IN0 node is leader of all of groups

(VERBOSE)[[CAM CORE LIB]][set_ctrl][207]set control VIDIOC_S_CTRL ( id : value = 10097542 : 1 ).

(VERBOSE)[[CAM CORE LIB]][set_input][382]set input VIDIOC_S_INPUT ( index = 67201297 ).

(VERBOSE)[[CAM CORE LIB]][set_param][414]set param VIDIOC_S_PARM ( max_fps = 30 ).

(VERBOSE)[[CAM CORE LIB]][set_format][462]set format VIDIOC_S_FMT ( 1600 X 1300, buftype = 10, type = 1498831189 ).

(VERBOSE)[[CAM CORE LIB]][request_buffers][534]request buffers VIDIOC_REQBUFS ( count = 4 ).

(VERBOSE)[[CAM CORE LIB]][set_input][382]set input VIDIOC_S_INPUT ( index = 0 ).

(VERBOSE)[[CAM CORE LIB]][set_format][462]set format VIDIOC_S_FMT ( 1600 X 1300, buftype = 9, type = 1498831189 ).

(VERBOSE)[[CAM CORE LIB]][request_buffers][534]request buffers VIDIOC_REQBUFS ( count = 4 ).

(VERBOSE)[[CAM CORE LIB]][set_ctrl][207]set control VIDIOC_S_CTRL ( id : value = 10096691 : 0 ).

(INFO)[[CAM CORE LIB]][_set_DDI][1400]V4L2_CID_IS_SET_SETFILE done

(VERBOSE)[[CAM CORE LIB]][stream_on][693]set VIDIOC_STREAMON.

(INFO)[[CAM CORE LIB]][_set_stream_on][1433]Node SS1_VC0: ON

(VERBOSE)[[CAM CORE LIB]][stream_on][693]set VIDIOC_STREAMON.

(INFO)[[CAM CORE LIB]][_set_stream_on][1433]Node SS1_IN0: ON

(INFO)[[CAM CORE LIB]][start_pipeline][204]setting stream on done

(DEBUG)[[CAM CORE LIB]][prepare_buffer_for_group][2365]prime node = SS1_IN0

(DEBUG)[[CAM CORE LIB]][prepare_buffer_for_group][2380]junction node = SS1_VC0

====> CameraCoreBufferManager::queue_buffer

(VERBOSE)[[CAM CORE LIB]][queue_buffer][1460]node SS1_VC0 : index : 0

(DEBUG)[[CAM CORE LIB]][prepare_buffer_for_group][2397]SS1_VC0 junction node prepare qbuf

====> CameraCoreBufferManager::queue_buffer

(VERBOSE)[[CAM CORE LIB]][queue_buffer][1460]node SS1_IN0 : index : 0

(INFO)[[CAM CORE LIB]][prepare_buffer_for_group][2408]SS1_IN0 node prepare qbuf

====> CameraCoreBufferManager::queue_buffer

(VERBOSE)[[CAM CORE LIB]][queue_buffer][1460]node SS1_VC0 : index : 1

(DEBUG)[[CAM CORE LIB]][prepare_buffer_for_group][2397]SS1_VC0 junction node prepare qbuf

====> CameraCoreBufferManager::queue_buffer

(VERBOSE)[[CAM CORE LIB]][queue_buffer][1460]node SS1_IN0 : index : 1

(INFO)[[CAM CORE LIB]][prepare_buffer_for_group][2408]SS1_IN0 node prepare qbuf

(INFO)[[CAM CORE LIB]][start_pipeline][209]preparing buffer for group done

(VERBOSE)[[CAM CORE LIB]][set_ctrl][207]set control VIDIOC_S_CTRL ( id : value = 10096654 : 1 ).

(INFO)[[CAM CORE LIB]][_run_threads][1653]all the created threads are started.

(INFO)[[CAM CORE LIB]][_start_stream][1502]Sensor ID 4 : started and threads are also.

(INFO)[[CAM CORE LIB]][start_pipeline][218]starting stream is done

====starting stream is done

(INFO)[[CAM CORE LIB]][start_stream][533]stream ( '4' ) is started.

duration : 2565 (ms) in start_stream()

==== TC_START_STREAM :: PASS ...

******* SHOW TEST CASES for CameraCoreLibrary *******

0 : TEST END

1 : GET_STREAM_LIST

2 : SET_SCENARIO

3 : OPEN_DEVICE

4 : START_STREAM

5 : STOP_STREAM

6 : CLOSE_DEVICE

7 : DUMP_IMAGE

8 : SET_IMAGE

9 : CAMERA_CHANGE

10 : OPERATE_MULTI_CAMERA

11 : SET_FORMAT

12 : GET_FORMAT

13 : GET_BUFFER

14 : TEST_GDC

15 : TEST_VRA

16 : TEST_IS_CHAIN

17 : TEST_DDK_CTRL

18 : SHOW_TEST_HISTORY

19 : SET_LIB_LOG_LEVEL

20 : AUTO_TEST

=> CHOOSE TEST CASE( 0 ~ 20 ) : ====> CameraCoreBufferManager::queue_buffer

(VERBOSE)[[CAM CORE LIB]][queue_buffer][1460]node SS1_VC0 : index : 2

====> CameraCoreBufferManager::dequeue_buffer

====>CameraCoreDDI::dequeue_buffer

====> do VIDIOC_DQBUF

====> out VIDIOC_DQBUF

====> CameraCoreDDI::dequeue_buffer done

====> SS1_VC0 : index : 0====> check_if_sensor_out 1

====> get image data

==== enter cameraCoreFile to dump image!

====> CameraCoreTestCase::notify_done

(VERBOSE)[[CAM CORE LIB]][_thread_buf][95]done dqbuf SS1_VC0 - 0

====> CameraCoreBufferManager::dequeue_buffer

done qbuf SS1_VC0 - 2

====> CameraCoreBufferManager::queue_buffer

(VERBOSE)[[CAM CORE LIB]][queue_buffer][1460]node SS1_IN0 : index : 2

(VERBOSE)[[CAM CORE LIB]][update_metadata_for_group][317][SS1_IN0] 1(SS1_VC0)

done qbuf SS1_IN0 - 2

====> CameraCoreBufferManager::queue_buffer

(VERBOSE)[[CAM CORE LIB]][queue_buffer][1460]node SS1_VC0 : index : 3

====>CameraCoreDDI::dequeue_buffer

====> do VIDIOC_DQBUF

====> out VIDIOC_DQBUF

====> CameraCoreDDI::dequeue_buffer done

====> SS1_IN0 : index : 0====> check_if_sensor_out 0

(VERBOSE)[[CAM CORE LIB]][_thread_buf][95]done dqbuf SS1_IN0 - 0

====> CameraCoreBufferManager::dequeue_buffer

====>CameraCoreDDI::dequeue_buffer

====> do VIDIOC_DQBUF

====> out VIDIOC_DQBUF

====> CameraCoreDDI::dequeue_buffer done

====> SS1_VC0 : index : 1====> check_if_sensor_out 1

====> get image data

==== enter cameraCoreFile to dump image!

====> CameraCoreTestCase::notify_done

(VERBOSE)[[CAM CORE LIB]][_thread_buf][95]done dqbuf SS1_VC0 - 1

====> CameraCoreBufferManager::dequeue_buffer

done qbuf SS1_VC0 - 3

====> CameraCoreBufferManager::queue_buffer

(VERBOSE)[[CAM CORE LIB]][queue_buffer][1460]node SS1_IN0 : index : 3

(VERBOSE)[[CAM CORE LIB]][update_metadata_for_group][317][SS1_IN0] 1(SS1_VC0)

====>CameraCoreDDI::dequeue_buffer

====> do VIDIOC_DQBUF

====> out VIDIOC_DQBUF

====> CameraCoreDDI::dequeue_buffer done

CameraCoreInterface::start_stream

Call flow:

pipelineMgr[_obj_order]->start_pipeline(tgtStid)

_create_threads(tgtStid); first creates the qbuf/dqbuf threads

qbuf_thread[tgtStid][i]->create_thread initializes stop_thread and enable_thread at the start

CameraCoreThread::create_thread

CameraCoreThread::_thread_this

_thread_buf: because enable_thread is FALSE, the thread spins in a loop and only starts queuing buffers once run_thread sets enable_thread to TRUE

Back in start_pipeline, execution continues with:

_set_DDI

CameraCoreDDI::open_node(curNode)

fd = ::open(dev_name, O_RDONLY & O_CREAT, 0); opens /dev/videoxxx. The nodes opened here, in order, are SS1_IN0 and SS1_VC0 as defined in the JSON. (On Linux, O_RDONLY & O_CREAT evaluates to 0, i.e. plain O_RDONLY.)

CameraCoreDDI::set_ctrl; an ioctl call that sets the V4L2 HAL version

CameraCoreDDI::set_input

CameraCoreDDI::set_param

CameraCoreDDI::set_format

CameraCoreDDI::request_buffers

_set_stream_on

bufferMgr->prepare_buffer_for_group

_start_stream
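The create-first, run-later thread pattern described in the flow above can be illustrated with a minimal sketch. `GatedWorker` is a hypothetical stand-in for CameraCoreThread, whose source is not shown here; the flag names mirror enable_thread and stop_thread, and the sleep-based polling is an assumption chosen for brevity (a condition variable would avoid the polling).

```cpp
#include <atomic>
#include <chrono>
#include <thread>

// Hypothetical model of a worker that is created early but only starts
// its real work (qbuf/dqbuf in the library) after run() is called.
class GatedWorker {
public:
    GatedWorker() : worker_([this] { loop(); }) {}
    ~GatedWorker() { stop(); }

    void run() { enabled_.store(true); }  // counterpart of run_thread

    void stop()
    {
        stopped_.store(true);
        if (worker_.joinable()) {
            worker_.join();
        }
    }

    int iterations() const { return iterations_.load(); }

private:
    void loop()
    {
        while (!stopped_.load()) {
            if (enabled_.load()) {
                ++iterations_;  // place where a buffer would be queued or dequeued
            }
            std::this_thread::sleep_for(std::chrono::milliseconds(1));
        }
    }

    std::atomic<bool> enabled_{false};
    std::atomic<bool> stopped_{false};
    std::atomic<int> iterations_{0};
    std::thread worker_;  // declared last so the flags exist before the thread starts
};
```

Creating the threads before the V4L2 nodes are configured and enabling them only at the end keeps thread start-up cost out of the streaming path, which is presumably why the library splits the two steps.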

The following subsections analyze the ioctl calls above one by one.

4.4.1 open

This ends up in sam_is_ssxin0_video_open, which is registered in sam_is_ssxin0_video_fops in sam-is-video-ssin0.c:

static int sam_is_ssxin0_video_open(struct file *file)

{

int ret = 0;

int ret_err = 0;

struct sam_is_video *video;

struct sam_is_video_ctx *vctx;

struct sam_is_device_sensor *sensor;

struct sam_is_device_sub_sensor *sub_sensor;

struct sam_is_resourcemgr *resourcemgr;

struct sam_is_core *core;

char name[SAM_IS_NAME_LEN];

u32 position;

u32 resource_type;

vctx = NULL;

video = video_drvdata(file);

sub_sensor = container_of(video, struct sam_is_device_sub_sensor, video);

sensor = sub_sensor->sensor;

resourcemgr = video->resourcemgr;

if (!resourcemgr) {

err("resourcemgr is NULL");

ret = -EINVAL;

goto err_resource_null;

}

core = (struct sam_is_core *)sensor->private_data;

if (core && core->reboot) {

smwarn("[SS%d:V]%s: fail to open ssxin0_video_open() - reboot(%d)\n",

sensor->sub_sensor[ENTRY_SSIN0], GET_SSX_ID(video), __func__, core->reboot);

ret = -EINVAL;

goto err_reboot;

}

if (!core) {

warn("%s: core(NULL)", __func__);

ret = -EINVAL;

goto err_resource_null;

}

position = ((video->id - SAM_IS_VIDEO_SS0_IN0_NUM) >> 2);

switch (position) {

case 0:

resource_type = RESOURCE_TYPE_SENSOR0;

break;

case 1:

resource_type = RESOURCE_TYPE_SENSOR1;

break;

case 2:

resource_type = RESOURCE_TYPE_SENSOR2;

break;

case 3:

resource_type = RESOURCE_TYPE_SENSOR3;

break;

case 4:

resource_type = RESOURCE_TYPE_SENSOR4;

break;

case 5:

resource_type = RESOURCE_TYPE_SENSOR5;

break;

default:

err("video id was invalid %d", video->id);

ret = -EINVAL;

goto err_video_id;

}

//This function does very little: it only checks whether result = &core->sensor[RESOURCE_TYPE_SENSOR0]; is NULL

ret = sam_is_resource_open(resourcemgr, resource_type, NULL);

if (ret) {

err("sam_is_resource_open is fail(%d)", ret);

goto err_resource_open;

}

sensor->sub_sensor[ENTRY_SSIN0].instance = atomic_read(&core->instance);

sminfo("[SS%d:V] %s\n", sensor->sub_sensor[ENTRY_SSIN0],

sensor->sub_sensor[ENTRY_SSIN0].sub_sensor_id, __func__);

snprintf(name, sizeof(name), "SS%d",

sensor->sub_sensor[ENTRY_SSIN0].sub_sensor_id);

//Allocates and initializes vctx; the vctx structure holds the video, subdev, queue and the video buffer operation functions

ret = open_vctx(file, video, &vctx, sensor->sub_sensor[ENTRY_SSIN0].instance,

FRAMEMGR_ID_SS0, name);

if (ret) {

smerr("open_vctx is fail(%d)", sub_sensor[ENTRY_SSIN0], ret);

goto err_vctx_open;

}

vctx->sub_sensor_id = sensor->sub_sensor[ENTRY_SSIN0].sub_sensor_id;

/* sam_is_video_open performs some vctx initialization and initializes the queue:

vctx->device = device;

vctx->next_device = NULL;

vctx->subdev = NULL;

vctx->video = video;

vctx->vb2_ops = vb2_ops;

vctx->vb2_mem_ops = video->vb2_mem_ops;

vctx->sam_is_vb2_buf_ops = video->sam_is_vb2_buf_ops;

vctx->vops.qbuf = sam_is_video_qbuf;

vctx->vops.dqbuf = sam_is_video_dqbuf;

vctx->vops.done = sam_is_video_buffer_done;

*/

ret = sam_is_video_open(vctx,

sensor,

VIDEO_SSX_READY_BUFFERS,

video,

&sam_is_ssxin0_qops,

&sam_is_sensor_ops);

if (ret) {

smerr("sam_is_video_open is fail(%d)", sensor->sub_sensor[ENTRY_SSIN0], ret);

goto err_video_open;

}

//See the analysis of this function below

ret = sam_is_sensor_open(sensor, ENTRY_SSIN0, vctx);

if (ret) {

smerr("sam_is_ssxin0_open is fail(%d)", sensor->sub_sensor[ENTRY_SSIN0], ret);

goto err_ischain_open;

}

atomic_inc(&core->instance);

return 0;

err_ischain_open:

ret_err = sam_is_video_close(vctx);

if (ret_err)

smerr("sam_is_video_close is fail(%d)", sensor->sub_sensor[ENTRY_SSIN0],

ret_err);

err_video_open:

ret_err = close_vctx(file, video, vctx);

if (ret_err < 0)

smerr("close_vctx is fail(%d)", sensor->sub_sensor[ENTRY_SSIN0], ret_err);

err_vctx_open:

err_resource_open:

err_reboot:

err_video_id:

err_resource_null:

return ret;

}
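The switch statement in the function above maps a video node id to one of six sensor resources, with each sensor owning a group of four consecutive ids (hence the `>> 2`). A compact sketch of that mapping is shown below. The base value 101 is purely an assumption: the real SAM_IS_VIDEO_SS0_IN0_NUM is not shown in this article, and 101 was picked only so that the leader vindex 105 seen in the log maps to sensor 1.

```cpp
// Hypothetical value; the real SAM_IS_VIDEO_SS0_IN0_NUM is not shown here.
constexpr int kSs0In0VideoNum = 101;
// The driver's switch handles positions 0..5.
constexpr int kSensorCount = 6;

// Returns the sensor position for a video node id, or -1 if the id is
// outside the range covered by the six sensors.
int video_id_to_position(int video_id)
{
    if (video_id < kSs0In0VideoNum) {
        return -1;
    }
    int position = (video_id - kSs0In0VideoNum) >> 2;  // four ids per sensor
    return position < kSensorCount ? position : -1;
}
```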

/**

* @cnotice

* @prdcode

* @unit_name{device sensor::core}

* @purpose open sensor device

* @logic open csi, isppre device and frame manager

* @params

* @param{in/out, sensor, struct * ::sam_is_device_sensor, Not null}

* @param{in/out, sub_sensor_id, int, >= 0, <= 3}

* @param{in/out, vctx, struct * ::sam_is_video_ctx, Not null}

* @endparam

* @retval{ret, int, 0, 0, < 0}

*/

int sam_is_sensor_open(struct sam_is_device_sensor *sensor, int sub_sensor_id,

struct sam_is_video_ctx *vctx)

{

int ret = 0;

int ret_err = 0;

struct sam_is_groupmgr *groupmgr;

struct sam_is_group *group;

struct sam_is_core *core;

SAM_BUG(!sensor);

SAM_BUG(!sensor->subdev_csi);

SAM_BUG(!sensor->subdev_isppre);

SAM_BUG(!sensor->private_data);

SAM_BUG(!vctx);

SAM_BUG(!GET_VIDEO(vctx));

core = sensor->private_data;

mutex_lock(&core->sensor_open_close_lock);

if (test_bit(SAM_IS_SENSOR_OPEN, &sensor->sub_sensor[sub_sensor_id].state)) {

smerr("already subsensor open", sensor->sub_sensor[sub_sensor_id]);

ret = -EMFILE;

goto err_open_state;

}

if (atomic_read(&sensor->sub_sensor_open_count) >= 4) {

if (test_bit(SAM_IS_SENSOR_OPEN, &sensor->state)) {

smerr("already open", sensor->sub_sensor[sub_sensor_id]);

ret = -EMFILE;

goto err_open_state;

}

}

/*

* Sensor's mclk can be accessed by other ips(ex. preprocessor)

* There's a problem that mclk_on can be called twice due to clear_bit.

* - clear_bit(SAM_IS_SENSOR_MCLK_ON, &device->state);

* Clear_bit of MCLK_ON should be skiped.

*/

clear_bit(SAM_IS_SENSOR_S_INPUT, &sensor->state);

clear_bit(SAM_IS_SENSOR_S_CONFIG, &sensor->state);

clear_bit(SAM_IS_SENSOR_DRIVING, &sensor->state);

clear_bit(SAM_IS_SENSOR_STAND_ALONE, &sensor->state);

clear_bit(SAM_IS_SENSOR_FRONT_START, &sensor->state);

clear_bit(SAM_IS_SENSOR_FRONT_DTP_STOP, &sensor->state);

clear_bit(SAM_IS_SENSOR_BACK_START, &sensor->state);

clear_bit(SAM_IS_SENSOR_OTF_OUTPUT, &sensor->state);

clear_bit(SAM_IS_SENSOR_WAIT_STREAMING, &sensor->state);

set_bit(SAM_IS_SENSOR_BACK_NOWAIT_STOP, &sensor->state);

sensor->sub_sensor[sub_sensor_id].vctx = vctx;

sensor->sub_sensor[sub_sensor_id].fcount = 0;

sensor->sub_sensor[sub_sensor_id].csi_fcount = 0;

sensor->instant_cnt = 0;

sensor->instant_ret = 0;

sensor->sub_sensor[sub_sensor_id].ischain = NULL;

sensor->exposure_time = 0;

sensor->frame_duration = 0;

groupmgr = sensor->groupmgr;

group = &sensor->sub_sensor[sub_sensor_id].group_sensor;

if (atomic_read(&sensor->sub_sensor_open_count) == 0) {

ret = sam_is_virtual_ch_cfg((void *)sensor);

//Assigns isppre->dma_subdev[CSI_VIRTUAL_CH_0]; its exact purpose is not yet clear

if (ret) {

smerr("sam_is_virtual_ch_cfg is fail", sensor->sub_sensor[sub_sensor_id]);

goto err_virtual_ch_cfg;

}

//Does nothing apart from some initialization

ret = sam_is_csi_open(sensor->subdev_csi, GET_FRAMEMGR(vctx));

if (ret) {

smerr("sam_is_csi_open is fail(%d)", sensor->sub_sensor[sub_sensor_id], ret);

goto err_csi_open;

}

ret = sam_is_isppre_open(sensor->subdev_isppre, GET_FRAMEMGR(vctx));

if (ret) {

smerr("sam_is_isppre_open is fail(%d)", sensor->sub_sensor[sub_sensor_id], ret);

goto err_csi_open;

}

}

ret = sam_is_devicemgr_open(sensor->devicemgr, sub_sensor_id, (void *)sensor,

SAM_IS_DEVICE_SENSOR);

if (ret) {

err("sam_is_devicemgr_open is fail(%d)", ret);

goto err_devicemgr_open;

}

/* for mediaserver force close */

if (atomic_read(&sensor->sub_sensor_open_count) == 0) {

ret = sam_is_resource_get(sensor->resourcemgr, sensor->position);

if (ret) {

smerr("sam_is_resource_get is fail", sensor->sub_sensor[sub_sensor_id]);

goto err_resource_get;

}

}

set_bit(SAM_IS_SENSOR_OPEN, &sensor->sub_sensor[sub_sensor_id].state);

atomic_inc(&sensor->sub_sensor_open_count);

if (atomic_read(&sensor->sub_sensor_open_count) == 1)

set_bit(SAM_IS_SENSOR_OPEN, &sensor->state);

sminfo("[SEN:D] %s():%d\n", sensor->sub_sensor[sub_sensor_id], __func__, ret);

mutex_unlock(&core->sensor_open_close_lock);

return 0;

err_resource_get:

err_devicemgr_open:

ret_err = sam_is_devicemgr_close(sensor->devicemgr, sub_sensor_id,

(void *)sensor, SAM_IS_DEVICE_SENSOR);

if (ret_err)

smerr("sam_is_devicemgr_close is fail(%d)", sensor->sub_sensor[sub_sensor_id],

ret_err);

err_csi_open:

err_virtual_ch_cfg:

err_open_state:

sminfo("[SEN:D] %s():%d\n", sensor->sub_sensor[sub_sensor_id], __func__, ret);

mutex_unlock(&core->sensor_open_close_lock);

return ret;

}
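The open bookkeeping in sam_is_sensor_open boils down to two rules: a sub-sensor may be opened only once, and the shared resources (virtual channel configuration, CSI, ISPPRE) are set up only by the first opener. The sketch below models just that logic; `SensorModel` is a hypothetical class, it omits the mutex the driver holds, and `shared_inits_` merely counts how often the shared setup would have run.

```cpp
#include <array>
#include <cassert>
#include <cerrno>

// Hypothetical user-space model of the open bookkeeping; not driver code.
class SensorModel {
public:
    static constexpr int kSubSensors = 4;

    // Returns 0 on success, or -EMFILE if this sub-sensor is already open.
    int open(int sub_id)
    {
        assert(sub_id >= 0 && sub_id < kSubSensors);
        if (sub_open_[sub_id]) {
            return -EMFILE;
        }
        if (open_count_ == 0) {
            ++shared_inits_;  // stands for virtual channel cfg, CSI open, ISPPRE open
        }
        sub_open_[sub_id] = true;
        ++open_count_;
        return 0;
    }

    int open_count() const { return open_count_; }
    int shared_inits() const { return shared_inits_; }

private:
    std::array<bool, kSubSensors> sub_open_{};
    int open_count_ = 0;
    int shared_inits_ = 0;
};
```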

sam_is_ssxvc0_video_open differs little from the code above.

4.4.2 set_input

This calls sam_is_ssxin0_video_s_input, which in turn calls sam_is_sensor_s_input:

/**

* @cnotice

* @prdcode

* @unit_name{device sensor::core}

* @purpose set input sensor device

* @logic sensor clock and gpio on, subdev init call

* @params

* @param{in/out, sensor, struct * ::sam_is_device_sensor, Not null}

* @param{in/out, sub_sensor_id, int, >= 0, <= 3}

* @param{in/out, input, u32, 0 ~ (2^32 - 1)}

* @param{in/out, scenario, u32, 0 ~ (2^32 - 1)}

* @param{in/out, video_id, u32, 0 ~ (2^32 - 1)}

* @endparam

* @retval{ret, int, 0, 0, < 0}

*/

int sam_is_sensor_s_input(struct sam_is_device_sensor *sensor,

int sub_sensor_id,

u32 input,

u32 scenario,

u32 video_id)

{

int ret = 0;

struct sam_is_core *core = (struct sam_is_core *)dev_get_drvdata(sam_is_dev);

struct v4l2_subdev *subdev_module;

struct v4l2_subdev *subdev_csi;

struct v4l2_subdev *subdev_isppre;

struct sam_is_module *module;

struct sam_is_device_sensor_peri *sensor_peri;

struct sam_is_group *group;

struct sam_is_groupmgr *groupmgr;

SAM_BUG(!sensor);

SAM_BUG(!sensor->pdata);

SAM_BUG(!sensor->subdev_csi);

SAM_BUG(!sensor->subdev_isppre);

SAM_BUG(input >= SENSOR_NAME_END);

if (test_bit(SAM_IS_SENSOR_S_INPUT, &sensor->state)) {

smerr("already s_input", sensor->sub_sensor[sub_sensor_id]);

ret = -EINVAL;

goto p_err;

}

module = NULL;

module = &sensor->sub_sensor[sub_sensor_id].module;

groupmgr = sensor->groupmgr;

group = &sensor->sub_sensor[sub_sensor_id].group_sensor;

/* Initializes the group members:

smp_shot_init(group, BASE_SHOT_RESOURCE);

group->asyn_shots = NONE_SHOT_RESOURCE;

group->skip_shots = NONE_SHOT_RESOURCE;

group->init_shots = NONE_SHOT_RESOURCE;

group->sync_shots = BASE_SHOT_RESOURCE;

*/

ret = sam_is_group_init(groupmgr, group, GROUP_INPUT_OTF, video_id, true);

if (ret) {

smerr("sam_is_group_init is fail(%d)", sensor->sub_sensor[sub_sensor_id], ret);

goto p_err;

}

subdev_module = module->subdev;

if (!subdev_module) {

smerr("subdev module is not probed", sensor->sub_sensor[sub_sensor_id]);

ret = -EINVAL;

goto p_err;

}

subdev_csi = sensor->subdev_csi;

subdev_isppre = sensor->subdev_isppre;

sensor->position = module->position;

sensor->image.framerate = min_t(u32, SENSOR_DEFAULT_FRAMERATE,

module->max_framerate);

sensor->image.window.width = module->pixel_width;

sensor->image.window.height = module->pixel_height;

sensor->image.window.o_width = sensor->image.window.width;

sensor->image.window.o_height = sensor->image.window.height;

/* send csi chennel to FW */

module->ext.sensor_con.csi_ch = sensor->pdata->csi_ch;

module->ext.sensor_con.csi_ch |= 0x0100;

sensor_peri = (struct sam_is_device_sensor_peri *)module->private_data;

/* set cis data */

{

u32 i2c_channel = module->ext.sensor_con.peri_setting.i2c.channel;

if (i2c_channel < SENSOR_CONTROL_I2C_MAX) {

sensor_peri->cis.i2c_lock = &core->i2c_lock[i2c_channel];

info("%s[%d]enable cis i2c client. position = %d\n",

__func__, __LINE__, module->position);

} else

smwarn("wrong cis i2c_channel(%d)", sensor->sub_sensor[sub_sensor_id],

i2c_channel);

}

sam_is_sensor_peri_init_work(sensor_peri);

sensor->pdata->scenario = scenario;

if (scenario == SENSOR_SCENARIO_NORMAL)

clear_bit(SAM_IS_SENSOR_STAND_ALONE, &sensor->state);

else

set_bit(SAM_IS_SENSOR_STAND_ALONE, &sensor->state);

if (sensor->sub_sensor[sub_sensor_id].subdev_module) {

smwarn("subdev_module is already registered",

sensor->sub_sensor[sub_sensor_id]);

v4l2_device_unregister_subdev(sensor->sub_sensor[sub_sensor_id].subdev_module);

}

ret = v4l2_device_register_subdev(&sensor->v4l2_dev, subdev_module);

if (ret) {

smerr("v4l2_device_register_subdev is fail(%d)",

sensor->sub_sensor[sub_sensor_id], ret);

goto p_err;

}

sensor->sub_sensor[sub_sensor_id].subdev_module = subdev_module;

// enable mclk

ret = sam_is_sensor_mclk_on(sensor, scenario, module->pdata->mclk_ch);

if (ret) {

smerr("sam_is_sensor_mclk_on is fail(%d)", sensor->sub_sensor[sub_sensor_id],

ret);

goto p_err;

}

/* Sensor power on */

ret = sam_is_sensor_gpio_on(sensor, sub_sensor_id);

if (ret) {

smerr("sam_is_sensor_gpio_on is fail(%d)", sensor->sub_sensor[sub_sensor_id],

ret);

goto p_err;

}

//subdev_csi had its ops assigned in sam_is_csi_probe; this call invokes csi_init, which programs the CSI registers to reset the CSI

ret = v4l2_subdev_call(subdev_csi, core, init, sub_sensor_id);

if (ret) {

smerr("v4l2_csi_call(init) is fail(%d)", sensor->sub_sensor[sub_sensor_id],

ret);

goto p_err;

}

/* subdev_isppre is assigned in sam_is_isppre_probe.

Set ISPPRE Common config (global enable, dma init, input, output mux) */

ret = v4l2_subdev_call(subdev_isppre, core, init, sub_sensor_id);

if (ret) {

smerr("v4l2_csi_call(init) is fail(%d)", sensor->sub_sensor[sub_sensor_id],

ret);

goto p_err;

}

//The calls above are all empty; the call below goes to sensor_module_init in sam-is-device-module-960-serdes.c, which then calls sensor_960_serdes_cis_init in sam-is-cis-960-serdes.c to initialize the deserializer

ret = v4l2_subdev_call(subdev_module, core, init, 0);

if (ret) {

smerr("v4l2_module_call(init) is fail(%d)", sensor->sub_sensor[sub_sensor_id],

ret);

goto p_err;

}

//The purpose of the group is still unclear

ret = sam_is_devicemgr_binding(sensor->devicemgr, sub_sensor_id, sensor,

group->ischain, SAM_IS_DEVICE_SENSOR);

if (ret) {

smerr("sam_is_devicemgr_binding is fail", sensor->sub_sensor[sub_sensor_id]);

goto p_err;

}

set_bit(SAM_IS_SENSOR_S_INPUT, &sensor->state);

p_err:

sminfo("[SEN:D] %s(%d, %d):%d\n", sensor->sub_sensor[sub_sensor_id], __func__,

input, scenario, ret);

return ret;

}

4.4.3 set_param

This function sets the frame rate; the underlying driver function performs no operation.

4.4.4 set_format

According to the log, this calls sam_is_sensor_s_format:

/**

* @cnotice

* @prdcode

* @unit_name{device sensor::core}

* @purpose set format sensor device

* @logic find sensor setting and call csi/isppre set format

* @params

* @param{in/out, qdevice, void *, Not null}

* @param{in/out, sub_sensor_id, int, >= 0, <= 3}

* @param{in/out, queue, struct * ::sam_is_queue, Not null}

* @endparam

* @retval{ret, int, 0, 0, < 0}

*/

static int sam_is_sensor_s_format(void *qdevice, int sub_sensor_id,

struct sam_is_queue *queue)

{

int ret = 0;

struct sam_is_device_sensor *sensor = qdevice;

struct v4l2_subdev *subdev_module;

struct v4l2_subdev *subdev_csi;

struct v4l2_subdev *subdev_isppre;

struct v4l2_subdev_format subdev_format;

struct sam_is_fmt *format;

u32 width;

u32 height;

SAM_BUG(!sensor);

SAM_BUG(!sensor->sub_sensor[sub_sensor_id].subdev_module);

SAM_BUG(!sensor->subdev_csi);

SAM_BUG(!queue);

subdev_module = sensor->sub_sensor[sub_sensor_id].subdev_module;

subdev_csi = sensor->subdev_csi;

subdev_isppre = sensor->subdev_isppre;

format = queue->framecfg.format;

width = queue->framecfg.width;

height = queue->framecfg.height;

memcpy(&sensor->image.format, format, sizeof(struct sam_is_fmt));

sensor->image.window.offs_h = 0;

sensor->image.window.offs_v = 0;

sensor->image.window.width = width;

sensor->image.window.o_width = width;

sensor->image.window.height = height;

sensor->image.window.o_height = height;

subdev_format.format.code = format->pixelformat;

subdev_format.format.field = format->field;

subdev_format.format.width = width;

subdev_format.format.height = height;

//Finds the sensor mode configuration matching the width and height set in the JSON file, and also checks whether the sensor mode has changed

sensor->cfg = sam_is_sensor_g_mode(sensor, sub_sensor_id);

if (!sensor->cfg) {

smerr("sensor cfg is invalid", sensor->sub_sensor[sub_sensor_id]);

ret = -EINVAL;

goto p_err;

}

set_bit(SAM_IS_SENSOR_S_CONFIG, &sensor->state);

// No substantive operation here

ret = v4l2_subdev_call(subdev_module, pad, set_fmt, NULL, &subdev_format);

if (ret) {

smerr("v4l2_module_call(s_format) is fail(%d)",

sensor->sub_sensor[sub_sensor_id], ret);

goto p_err;

}

// No substantive operation here

ret = v4l2_subdev_call(subdev_csi, pad, set_fmt, NULL, &subdev_format);

if (ret) {

smerr("v4l2_csi_call(s_format) is fail(%d)", sensor->sub_sensor[sub_sensor_id],

ret);

goto p_err;

}

//isppre dma, output format set

ret = v4l2_subdev_call(subdev_isppre, pad, set_fmt, NULL, &subdev_format);

if (ret) {

smerr("v4l2_isppre_call(s_format) is fail(%d)",

sensor->sub_sensor[sub_sensor_id], ret);

goto p_err;

}

p_err:

return ret;

}
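The mode lookup done by sam_is_sensor_g_mode can be pictured as a table search keyed on the width and height that come from the JSON file. The following is a minimal sketch under that assumption. `demo_sensor_cfg`, `demo_modes`, and `demo_find_mode` are hypothetical names, and the table entries are illustrative values, not data from the driver.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for one sensor mode entry. */
struct demo_sensor_cfg {
    uint32_t width;
    uint32_t height;
    uint32_t framerate;
};

/* Hypothetical mode table; the values are illustrative only. */
static const struct demo_sensor_cfg demo_modes[] = {
    { 1920, 1080, 30 },
    { 1600, 1300, 30 },
    { 1280,  720, 60 },
};

/* Return the first mode whose size matches the request, or NULL if none does. */
static const struct demo_sensor_cfg *demo_find_mode(uint32_t width, uint32_t height)
{
    size_t i;

    for (i = 0; i < sizeof(demo_modes) / sizeof(demo_modes[0]); i++) {
        if (demo_modes[i].width == width && demo_modes[i].height == height)
            return &demo_modes[i];
    }
    /* No match: the caller would report -EINVAL, as sam_is_sensor_s_format does. */
    return NULL;
}
```

A NULL result corresponds to the "sensor cfg is invalid" branch in the listing above, which is why a size in the JSON file that no mode supports makes set_format fail early.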

4.4.5 request_buffers

Call chain: sam_is_ssxin0_video_reqbufs ==> sam_is_video_reqbufs

/**

* @cnotice

* @prdcode

* @unit_name{coregroup::core}

* @purpose request buffer of video node

* @logic If vctx/request are valid, call vb2_reqbufs and frame_manager_open

* @params

* @param{in, file, struct* ::file, Not null}

* @param{in, vctx, struct* ::sam_is_video_ctx, Not null}

* @param{in, request, struct* ::v4l2_requestbuffers, Not null}

* @endparam

* @retval{ret, int, 0, 0, !0}

*/

int sam_is_video_reqbufs(struct file *file,

struct sam_is_video_ctx *vctx,

struct v4l2_requestbuffers *request)

{

int ret = 0;

struct sam_is_queue *queue;

struct sam_is_framemgr *framemgr;

struct sam_is_video *video;

SAM_BUG(!vctx);

SAM_BUG(!request);

video = GET_VIDEO(vctx);

if (!(vctx->state & (BIT(SAM_IS_VIDEO_S_FORMAT) | BIT(SAM_IS_VIDEO_STOP) | BIT(

SAM_IS_VIDEO_S_BUFS)))) {

mverr("invalid reqbufs is requested(%lX)", vctx, video, vctx->state);

return -EINVAL;

}

queue = GET_QUEUE(vctx);

if (test_bit(SAM_IS_QUEUE_STREAM_ON, &queue->state)) {

mverr("video is stream on, not applied", vctx, video);

ret = -EINVAL;

goto p_err;

}

// The buffer count passed down from the upper layer is 4, as defined in the JSON file

/* before call queue ops if request count is zero */

if (!request->count) {

ret = CALL_QOPS(queue, request_bufs, GET_DEVICE(vctx), queue, request->count);

if (ret) {

mverr("request_bufs is fail(%d)", vctx, video, ret);

goto p_err;

}

}

// Check the memory type and whether the matching operation functions exist, then call vb2_core_reqbufs; this is analyzed in detail below

ret = vb2_reqbufs(queue->vbq, request);

if (ret) {

mverr("vb2_reqbufs is fail(%d)", vctx, video, ret);

goto p_err;

}

framemgr = &queue->framemgr;

queue->buf_maxcount = request->count;

if (queue->buf_maxcount == 0) {

queue->buf_refcount = 0;

clear_bit(SAM_IS_QUEUE_BUFFER_READY, &queue->state);

clear_bit(SAM_IS_QUEUE_BUFFER_PREPARED, &queue->state);

frame_manager_close(framemgr);

} else {

if (queue->buf_maxcount < queue->buf_rdycount) {

mverr("buffer count is not invalid(%d < %d)", vctx, video,

queue->buf_maxcount, queue->buf_rdycount);

ret = -EINVAL;

goto p_err;

}

// Set up buffer management and initialize queued_list

ret = frame_manager_open(framemgr, queue->buf_maxcount);

if (ret) {

mverr("sam_is_frame_open is fail(%d)", vctx, video, ret);

goto p_err;

}

}

/* after call queue ops if request count is not zero */

if (request->count) {

ret = CALL_QOPS(queue, request_bufs, GET_DEVICE(vctx), queue, request->count);

if (ret) {

mverr("request_bufs is fail(%d)", vctx, video, ret);

goto p_err;

}

}

vctx->state = BIT(SAM_IS_VIDEO_S_BUFS);

p_err:

return ret;

}
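One detail in sam_is_video_reqbufs that is easy to miss is the ordering of the driver hook (request_bufs via CALL_QOPS) relative to vb2_reqbufs: the hook runs first when the count is zero and last when the count is nonzero. The sketch below only records that ordering. All `demo_` names are hypothetical and nothing here calls real kernel code.

```c
#include <stddef.h>

/* Hypothetical labels for the two steps whose order matters. */
enum demo_step { DEMO_QOPS_REQUEST_BUFS = 1, DEMO_VB2_REQBUFS = 2 };

struct demo_trace {
    enum demo_step steps[2];
    size_t len;
};

static void demo_record(struct demo_trace *t, enum demo_step s)
{
    if (t->len < 2)
        t->steps[t->len++] = s;
}

/*
 * Mirror the ordering in sam_is_video_reqbufs:
 *   count == 0 (release):  driver hook first, then vb2 frees the buffers;
 *   count != 0 (allocate): vb2 allocates first, then the driver hook runs.
 */
static void demo_reqbufs(struct demo_trace *t, unsigned int count)
{
    t->len = 0;
    if (count == 0)
        demo_record(t, DEMO_QOPS_REQUEST_BUFS);
    demo_record(t, DEMO_VB2_REQBUFS);
    if (count != 0)
        demo_record(t, DEMO_QOPS_REQUEST_BUFS);
}
```

With the count of 4 used in this test, the vb2 step comes first, so the driver hook always sees a queue whose buffers already exist.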

int vb2_reqbufs(struct vb2_queue *q, struct v4l2_requestbuffers *req)

{

// Check the memory type and whether the ops for that type exist

int ret = vb2_verify_memory_type(q, req->memory, req->type);

return ret ? ret : vb2_core_reqbufs(q, req->memory, &req->count);

}

int vb2_core_reqbufs(struct vb2_queue *q, enum vb2_memory memory,

unsigned int *count)

{

unsigned int num_buffers, allocated_buffers, num_planes = 0;

unsigned plane_sizes[VB2_MAX_PLANES] = { };

int ret;

if (q->streaming) {

dprintk(1, "streaming active\n");

return -EBUSY;

}

if (*count == 0 || q->num_buffers != 0 || q->memory != memory) {

/*

* We already have buffers allocated, so first check if they

* are not in use and can be freed.

*/

mutex_lock(&q->mmap_lock);

if (q->memory == VB2_MEMORY_MMAP && __buffers_in_use(q)) {

mutex_unlock(&q->mmap_lock);

dprintk(1, "memory in use, cannot free\n");

return -EBUSY;

}

/*

* Call queue_cancel to clean up any buffers in the PREPARED or

* QUEUED state which is possible if buffers were prepared or

* queued without ever calling STREAMON.

*/

__vb2_queue_cancel(q);

ret = __vb2_queue_free(q, q->num_buffers);

mutex_unlock(&q->mmap_lock);

if (ret)

return ret;

/*

* In case of REQBUFS(0) return immediately without calling

* driver's queue_setup() callback and allocating resources.

*/

if (*count == 0)

return 0;

}

/*

* Make sure the requested values and current defaults are sane.

*/

num_buffers = min_t(unsigned int, *count, VB2_MAX_FRAME);

num_buffers = max_t(unsigned int, num_buffers, q->min_buffers_needed);

memset(q->alloc_devs, 0, sizeof(q->alloc_devs));

q->memory = memory;

// The memory type printed here is DMA buffer

printk("====> vb2_core_reqbufs memory %d\n",memory);

/*

* Ask the driver how many buffers and planes per buffer it requires.

* Driver also sets the size and allocator context for each plane.

*/

// The queue is initialized in sam_is_video_open during sam_is_ssxin0_video_open. The call here goes sam_is_ssxin0_queue_setup ==> sam_is_queue_setup, which does not allocate any buffer; it only computes and assigns the queue->framecfg fields

ret = call_qop(q, queue_setup, q, &num_buffers, &num_planes,

plane_sizes, q->alloc_devs);

if (ret)

return ret;

/* __vb2_queue_alloc allocates q->buf_struct_size bytes per buffer; that size is set in queue_init: vbq->buf_struct_size = sizeof(struct sam_is_vb2_buf);

* for (buffer = 0; buffer < num_buffers; ++buffer) {

* // Allocate videobuf buffer structures

* vb = kzalloc(q->buf_struct_size, GFP_KERNEL);

* if (!vb) {

* dprintk(1, "memory alloc for buffer struct failed\n");

* break;

* }

* vb->state = VB2_BUF_STATE_DEQUEUED;

* vb->vb2_queue = q;

* vb->num_planes = num_planes;

* vb->index = q->num_buffers + buffer;

* vb->type = q->type;

* vb->memory = memory;

* INIT_WORK(&vb->qbuf_work, __qbuf_work);

* spin_lock_init(&vb->fence_cb_lock);

* for (plane = 0; plane < num_planes; ++plane) {

* vb->planes[plane].length = plane_sizes[plane];

* vb->planes[plane].min_length = plane_sizes[plane];

* }

* vb->out_fence_fd = -1;

* q->bufs[vb->index] = vb;

*/

/* Finally, allocate buffers and video memory */

allocated_buffers =

__vb2_queue_alloc(q, memory, num_buffers, num_planes, plane_sizes);

if (allocated_buffers == 0) {

dprintk(1, "memory allocation failed\n");

return -ENOMEM;

}

/*

* There is no point in continuing if we can't allocate the minimum

* number of buffers needed by this vb2_queue.

*/

if (allocated_buffers < q->min_buffers_needed)

ret = -ENOMEM;

/*

* Check if driver can handle the allocated number of buffers.

*/

if (!ret && allocated_buffers < num_buffers) {

num_buffers = allocated_buffers;

/*

* num_planes is set by the previous queue_setup(), but since it

* signals to queue_setup() whether it is called from create_bufs()

* vs reqbufs() we zero it here to signal that queue_setup() is

* called for the reqbufs() case.

*/

num_planes = 0;

ret = call_qop(q, queue_setup, q, &num_buffers,

&num_planes, plane_sizes, q->alloc_devs);

if (!ret && allocated_buffers < num_buffers)

ret = -ENOMEM;

/*

* Either the driver has accepted a smaller number of buffers,

* or .queue_setup() returned an error

*/

}

mutex_lock(&q->mmap_lock);

q->num_buffers = allocated_buffers;

if (ret < 0) {

/*

* Note: __vb2_queue_free() will subtract 'allocated_buffers'

* from q->num_buffers.

*/

__vb2_queue_free(q, allocated_buffers);

mutex_unlock(&q->mmap_lock);

return ret;

}

mutex_unlock(&q->mmap_lock);

/*

* Return the number of successfully allocated buffers

* to the userspace.

*/

*count = allocated_buffers;

q->waiting_for_buffers = !q->is_output;

return 0;

}
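The "sane values" step in vb2_core_reqbufs is a clamp: the requested count is capped at VB2_MAX_FRAME and then raised to at least q->min_buffers_needed. A minimal standalone sketch follows, assuming VB2_MAX_FRAME is 32 as in the kernel generation this listing comes from. `DEMO_VB2_MAX_FRAME` and `demo_clamp_buffers` are hypothetical names.

```c
/* Assumed value of VB2_MAX_FRAME, redefined so the sketch builds on its own. */
#define DEMO_VB2_MAX_FRAME 32u

/* Apply the same min_t / max_t sequence as vb2_core_reqbufs. */
static unsigned int demo_clamp_buffers(unsigned int requested,
                                       unsigned int min_needed)
{
    unsigned int n = requested < DEMO_VB2_MAX_FRAME ? requested
                                                    : DEMO_VB2_MAX_FRAME;

    return n > min_needed ? n : min_needed;
}
```

For the count of 4 passed in this test, the value goes through unchanged as long as the queue's minimum is not larger than 4.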

4.4.6 _set_stream_on

The actual call chain is: sam_is_video_streamon ==> vb2_streamon(vbq, type) ==> vb2_core_streamon ==> vb2_start_streaming ==> sam_is_ssxin0_start_streaming ==> sam_is_queue_start_streaming ==> sam_is_sensor_back_start

/**

* @cnotice

* @prdcode

* @unit_name{device sensor::core}

* @purpose sensor device back start

* @logic sensor device frame manager, device manager start

* @params

* @param{in/out, qdevice, void *, Not null}

* @param{in/out, sub_sensor_id, int, >= 0, <= 3}

* @param{in/out, queue, struct * ::sam_is_queue, Not null}

* @endparam

* @retval{ret, int, 0, 0, < 0}

*/

static int sam_is_sensor_back_start(void *qdevice, int sub_sensor_id,

struct sam_is_queue *queue)

{

int ret = 0;

struct sam_is_device_sensor *sensor = qdevice;

struct sam_is_groupmgr *groupmgr;

struct sam_is_group *group;

SAM_BUG(!sensor);

if (test_bit(SAM_IS_SENSOR_BACK_START, &sensor->state)) {

err("already back start");

ret = -EINVAL;

goto p_err;

}

groupmgr = sensor->groupmgr;

group = &sensor->sub_sensor[sub_sensor_id].group_sensor;

ret = sam_is_group_start(groupmgr, group);

if (ret) {

smerr("sam_is_group_start is fail(%d)", sensor->sub_sensor[sub_sensor_id], ret);

goto p_err;

}

ret = sam_is_devicemgr_start(sensor->devicemgr, sub_sensor_id, (void *)sensor,

SAM_IS_DEVICE_SENSOR);

if (ret) {

smerr("sam_is_group_start is fail(%d)", sensor->sub_sensor[sub_sensor_id], ret);

goto p_err;

}

set_bit(SAM_IS_SENSOR_BACK_START, &sensor->state);

p_err:

sminfo("[SEN:D] %s(%dx%d, %d)\n", sensor->sub_sensor[sub_sensor_id], __func__,

sensor->image.window.width, sensor->image.window.height, ret);

return ret;

}
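sam_is_sensor_back_start uses a state bit as a one-shot guard: a second start attempt is rejected with -EINVAL, and the bit is set only after both start steps succeed. The sketch below shows that pattern in plain C. `demo_sensor`, `DEMO_SENSOR_BACK_START`, and `demo_back_start` are hypothetical, and ordinary bit operations stand in for the kernel's test_bit and set_bit.

```c
#include <errno.h>

/* Hypothetical bit index standing in for SAM_IS_SENSOR_BACK_START. */
#define DEMO_SENSOR_BACK_START 0

struct demo_sensor {
    unsigned long state;
};

static int demo_back_start(struct demo_sensor *s)
{
    /* Reject a second start, like the "already back start" branch. */
    if (s->state & (1UL << DEMO_SENSOR_BACK_START))
        return -EINVAL;

    /* The group start and device manager start would run here. */

    /* Mark the sensor as started only after those steps succeeded. */
    s->state |= 1UL << DEMO_SENSOR_BACK_START;
    return 0;
}
```

Because the bit is set last, a failure in either start step leaves the state untouched and a later retry is still allowed.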

4.4.7 set_ctrl V4L2_CID_IS_S_STREAM

This calls sam_is_ssxin0_video_s_ctrl ==> sam_is_sensor_front_start

// This ends up calling csi_s_stream ==> csi_stream_on

ret = v4l2_subdev_call(subdev_csi, video, s_stream, s_stream_param);

if (ret) {

smerr("v4l2_csi_call(s_stream) is fail(%d)", sensor->sub_sensor[sub_sensor_id],

ret);

goto p_err;

}

ret = v4l2_subdev_call(subdev_isppre, video, s_stream, s_stream_param);

The csi_stream_on function is listed below:

/**

* @cnotice

* @prdcode

* @unit_name{csi}

* @purpose csi device stream on

* @logic tasklet setting and request interrupt, configurate phy and csi hw

* end enable

* @params

* @param{in/out, subdev, struct * ::v4l2_subdev, Not null}

* @param{in/out, csi, struct * ::sam_is_device_csi, Not null}

* @param{in/out, sub_sensor_id, int, >= 0, <= 3}

* @endparam

* @retval{ret, int, 0, 0, < 0}

*/

static int csi_stream_on(struct v4l2_subdev *subdev,

struct sam_is_device_csi *csi, int sub_sensor_id)

{

int ret = 0;

u32 settle;

u32 __iomem *base_reg;

struct sam_is_device_sensor *sensor = v4l2_get_subdev_hostdata(subdev);

struct sam_is_sensor_cfg *sensor_cfg;

#ifdef ENABLE_IRQ_MULTI_TARGET

unsigned int cpu = 0xff;

#endif

SAM_BUG(!csi);

SAM_BUG(!sensor);

if (test_bit(CSIS_DMA_ENABLE, &csi->state)) {

csi->sw_checker = EXPECT_FRAME_START;

/* Tasklet Setting */

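// The camera currently uses the issensor path, not ischain. Tasklets are set up here: when the CSIS CORE IRQ fires for a frame start or frame end, the interrupt handler sam_is_isr_csi runs, calls csi_frame_start_inline, and that in turn runs the function bound to the tasklet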

if (sensor->sub_sensor[sub_sensor_id].ischain &&

test_bit(SAM_IS_GROUP_OTF_INPUT,

&sensor->sub_sensor[sub_sensor_id].ischain->group_3aa.state)) {

switch (sub_sensor_id) {

case 0:

tasklet_init(&csi->tasklet_csis_str[CSI_VIRTUAL_CH_0], tasklet_csis_str_otf_vc0,

(unsigned long)subdev);

tasklet_init(&csi->tasklet_csis_end[CSI_VIRTUAL_CH_0], tasklet_csis_end_vc0,

(unsigned long)subdev);

set_bit(CSIS_VC0_ENABLED, &csi->state);

break;

case 1:

tasklet_init(&csi->tasklet_csis_str[CSI_VIRTUAL_CH_1], tasklet_csis_str_otf_vc1,

(unsigned long)subdev);

tasklet_init(&csi->tasklet_csis_end[CSI_VIRTUAL_CH_1], tasklet_csis_end_vc1,

(unsigned long)subdev);

set_bit(CSIS_VC1_ENABLED, &csi->state);

break;

case 2:

tasklet_init(&csi->tasklet_csis_str[CSI_VIRTUAL_CH_2], tasklet_csis_str_otf_vc2,

(unsigned long)subdev);

tasklet_init(&csi->tasklet_csis_end[CSI_VIRTUAL_CH_2], tasklet_csis_end_vc2,

(unsigned long)subdev);

set_bit(CSIS_VC2_ENABLED, &csi->state);

break;

case 3:

tasklet_init(&csi->tasklet_csis_str[CSI_VIRTUAL_CH_3], tasklet_csis_str_otf_vc3,

(unsigned long)subdev);

tasklet_init(&csi->tasklet_csis_end[CSI_VIRTUAL_CH_3], tasklet_csis_end_vc3,

(unsigned long)subdev);

set_bit(CSIS_VC3_ENABLED, &csi->state);

break;

default:

tasklet_init(&csi->tasklet_csis_str[CSI_VIRTUAL_CH_0], tasklet_csis_str_otf_vc0,

(unsigned long)subdev);

tasklet_init(&csi->tasklet_csis_end[CSI_VIRTUAL_CH_0], tasklet_csis_end_vc0,

(unsigned long)subdev);

set_bit(CSIS_VC0_ENABLED, &csi->state);

break;

}

} else {

switch (sub_sensor_id) {

case 0:

tasklet_init(&csi->tasklet_csis_str[CSI_VIRTUAL_CH_0], tasklet_csis_str_m2m_vc0,

(unsigned long)subdev);

tasklet_init(&csi->tasklet_csis_end[CSI_VIRTUAL_CH_0], tasklet_csis_end_vc0,

(unsigned long)subdev);

set_bit(CSIS_VC0_ENABLED, &csi->state);

break;

case 1:

tasklet_init(&csi->tasklet_csis_str[CSI_VIRTUAL_CH_1], tasklet_csis_str_m2m_vc1,

(unsigned long)subdev);

tasklet_init(&csi->tasklet_csis_end[CSI_VIRTUAL_CH_1], tasklet_csis_end_vc1,

(unsigned long)subdev);

set_bit(CSIS_VC1_ENABLED, &csi->state);

break;

case 2:

tasklet_init(&csi->tasklet_csis_str[CSI_VIRTUAL_CH_2], tasklet_csis_str_m2m_vc2,

(unsigned long)subdev);

tasklet_init(&csi->tasklet_csis_end[CSI_VIRTUAL_CH_2], tasklet_csis_end_vc2,

(unsigned long)subdev);

set_bit(CSIS_VC2_ENABLED, &csi->state);

break;

case 3:

tasklet_init(&csi->tasklet_csis_str[CSI_VIRTUAL_CH_3], tasklet_csis_str_m2m_vc3,

(unsigned long)subdev);

tasklet_init(&csi->tasklet_csis_end[CSI_VIRTUAL_CH_3], tasklet_csis_end_vc3,

(unsigned long)subdev);

set_bit(CSIS_VC3_ENABLED, &csi->state);

break;

default:

tasklet_init(&csi->tasklet_csis_str[CSI_VIRTUAL_CH_0], tasklet_csis_str_m2m_vc0,

(unsigned long)subdev);

tasklet_init(&csi->tasklet_csis_end[CSI_VIRTUAL_CH_0], tasklet_csis_end_vc0,

(unsigned long)subdev);

set_bit(CSIS_VC0_ENABLED, &csi->state);

break;

}

}

}

csi->vc_enable_count++;

if (test_bit(CSIS_START_STREAM, &csi->state)) {

warn("[CSI%d] already start", csi->position);

return ret;

}

sensor_cfg = csi->sensor_cfg;

if (!sensor_cfg) {

err("[CSI%d] sensor cfg is null", csi->position);

ret = -EINVAL;

goto p_err;

}

base_reg = csi->base_reg;

if (test_bit(CSIS_DMA_ENABLE, &csi->state)) {

/* Registeration of CSIS CORE IRQ */

ret = request_irq(csi->irq,

sam_is_isr_csi,

SAM_IS_HW_IRQ_FLAG,

"mipi-csi",

csi);

if (ret) {

err("[CSI%d] request_irq(IRQ_MIPICSI %d) is fail(%d)", csi->position, csi->irq,

ret);

goto p_err;

}

csi_hw_s_irq_msk(base_reg, true);

#ifdef ENABLE_IRQ_MULTI_TARGET

irq_set_affinity(csi->irq, (const struct cpumask *)&cpu);

#endif

}

if (!sensor->cfg) {

err("[CSI%d] cfg is NULL", csi->position);

ret = -EINVAL;

goto p_err;

}

settle = sensor->cfg->settle;

if (!settle) {

if (sensor_cfg->mipi_speed)

settle = csi_hw_find_settle(sensor_cfg->mipi_speed);

else

err("[CSI%d] mipi_speed is invalid", csi->position);

}

sminfo("[CSI] settle(%dx%d@%d) = %d, speed(%u Mbps), %u lane\n",

sensor->sub_sensor[sub_sensor_id],

csi->image.window.width,

csi->image.window.height,

csi->image.framerate,

settle, sensor_cfg->mipi_speed, sensor_cfg->lanes + 1);

// Write the configuration parameters obtained from the sensor cfg into the CSI registers

/* PHY control */

/* PHY be configured in CSI driver */

csi_hw_s_phy_default_value(csi->phy_reg, csi->position);

csi_hw_s_settle(csi->phy_reg, settle);

ret = csi_hw_s_phy_config(csi->phy_reg, csi->sys_reg, sensor_cfg->lanes,

sensor_cfg->mipi_speed, settle, csi->position);

if (ret) {

err("[CSI%d] csi_hw_s_phy_config is fail", csi->position);

goto p_err;

}

/* PHY be configured in PHY driver */

ret = csi_hw_s_phy_set(csi->phy, sensor_cfg->lanes, sensor_cfg->mipi_speed,

settle, csi->position);

if (ret) {

err("[CSI%d] csi_hw_s_phy_set is fail", csi->position);

goto p_err;

}

csi_hw_s_lane(base_reg, &csi->image, sensor_cfg->lanes, sensor_cfg->mipi_speed);

csi_hw_s_control(base_reg, CSIS_CTRL_INTERLEAVE_MODE,

sensor_cfg->interleave_mode);

if (sensor_cfg->interleave_mode == CSI_MODE_CH0_ONLY) {

csi_hw_s_config(base_reg,

CSI_VIRTUAL_CH_0,

&sensor_cfg->input[CSI_VIRTUAL_CH_0],

csi->image.window.width,

csi->image.window.height,

sensor_cfg->rgb_swap);

} else {

u32 vc = 0;

for (vc = CSI_VIRTUAL_CH_0; vc < CSI_VIRTUAL_CH_MAX; vc++) {

csi_hw_s_config(base_reg,

vc, &sensor_cfg->input[vc],

sensor_cfg->input[vc].width,

sensor_cfg->input[vc].height,

sensor_cfg->rgb_swap);

sminfo("[CSI] VC%d: size(%dx%d)\n", sensor->sub_sensor[sub_sensor_id], vc,

sensor_cfg->input[vc].width, sensor_cfg->input[vc].height);

}

}

csi_hw_enable(base_reg);

if (unlikely(debug_csi)) {

csi_hw_dump(base_reg);

csi_hw_phy_dump(csi->phy_reg, csi->position);

}

set_bit(CSIS_START_STREAM, &csi->state);

p_err:

return ret;

}
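The two large switch statements in csi_stream_on follow one rule: sub sensor IDs 0 to 3 map one to one onto virtual channels 0 to 3, any other ID falls back to VC0, and the frame-start tasklet is the OTF variant only when ischain exists and its 3AA group has OTF input. The sketch below restates that rule as a small function. `demo_vc_choice` and `demo_pick_tasklet` are hypothetical names, and the function only describes the choice rather than registering any tasklet.

```c
#include <stdbool.h>

#define DEMO_VC_MAX 4 /* virtual channels 0..3, as in the switch cases */

/* Hypothetical description of what csi_stream_on selects for one sub sensor. */
struct demo_vc_choice {
    int vc;         /* virtual channel index */
    bool otf_start; /* true: tasklet_csis_str_otf_vcN, false: tasklet_csis_str_m2m_vcN */
};

static struct demo_vc_choice demo_pick_tasklet(int sub_sensor_id, bool otf_input)
{
    struct demo_vc_choice c;

    /* IDs 0..3 map directly; anything else uses VC0, like the default case. */
    c.vc = (sub_sensor_id >= 0 && sub_sensor_id < DEMO_VC_MAX) ? sub_sensor_id : 0;
    c.otf_start = otf_input;
    return c;
}
```

In the flow traced here the camera uses the sensor path without ischain, so `otf_input` would be false and the M2M start tasklet is the one that gets bound.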

This is the end of the cameraCoreTest tool call flow. The data flow is analyzed next.

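For the qbuffer and dqbuffer parts, refer to the following articles:

- 高通msm-V4L2-Camera驱动浅析5-buffer (A brief analysis of the Qualcomm msm V4L2 camera driver, part 5: buffer)

- Linux V4L2子系统-videobuf2框架分析(三) (Linux V4L2 subsystem: videobuf2 framework analysis, part 3)

- V4L2 videobuffer2的介绍,数据流分析 (Introduction to V4L2 videobuffer2 and data flow analysis)

- vb2_buffer结构探究 (A look into the vb2_buffer structure)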

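This article walks through the complete flow of exporting camera raw images with the cameraCoreTest tool on the Samsung Auto V7 platform, covering the key steps of initializing the CAMCORE LIB, setting the scenario, opening the device, configuring the stream, and capturing the image.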
