扩散模型(Diffusion Model)数学推导

1. 基本概念

扩散模型是一种基于马尔可夫链的生成模型,包含两个核心过程:

1.1 扩散过程(前向过程)

逐步向数据添加高斯噪声,将数据分布转化为标准正态分布:

q ( x 1 : T ∣ x 0 ) = ∏ t = 1 T q ( x t ∣ x t − 1 ) q(x_{1:T}|x_0) = \prod_{t=1}^T q(x_t|x_{t-1}) q(x1:T∣x0)=t=1∏Tq(xt∣xt−1)

1.2 逆扩散过程(反向过程)

学习从噪声中重建原始数据:

p θ ( x 0 : T ) = p ( x T ) ∏ t = 1 T p θ ( x t − 1 ∣ x t ) p_\theta(x_{0:T}) = p(x_T)\prod_{t=1}^T p_\theta(x_{t-1}|x_t) pθ(x0:T)=p(xT)t=1∏Tpθ(xt−1∣xt)

2. 前向过程详细推导

2.1 单步扩散

每一步的扩散过程定义为:

q ( x t ∣ x t − 1 ) = N ( x t ; 1 − β t x t − 1 , β t I ) q(x_t|x_{t-1}) = \mathcal{N}(x_t; \sqrt{1-\beta_t}x_{t-1}, \beta_t \boldsymbol{I}) q(xt∣xt−1)=N(xt;1−βtxt−1,βtI)

使用重参数化技巧表示为:

x t = α t x t − 1 + 1 − α t ϵ t − 1 , α t = 1 − β t x_t = \sqrt{\alpha_t}x_{t-1} + \sqrt{1-\alpha_t}\epsilon_{t-1}, \quad \alpha_t = 1-\beta_t xt=αtxt−1+1−αtϵt−1,αt=1−βt

2.2 任意时刻表示

通过递归展开可得:

x t = α ˉ t x 0 + 1 − α ˉ t ϵ x_t = \sqrt{\bar{\alpha}_t}x_0 + \sqrt{1-\bar{\alpha}_t}\epsilon xt=αˉtx0+1−αˉtϵ

其中: α ˉ t = ∏ i = 1 t α i \bar{\alpha}_t = \prod_{i=1}^t \alpha_i αˉt=∏i=1tαi, ϵ ∼ N ( 0 , I ) \epsilon \sim \mathcal{N}(0, \boldsymbol{I}) ϵ∼N(0,I)。

递归推导:

x

t

=

α

t

x

t

−

1

+

1

−

α

t

ϵ

t

−

1

=

α

t

(

α

t

−

1

x

t

−

2

+

1

−

α

t

−

1

ϵ

t

−

2

)

+

1

−

α

t

ϵ

t

−

1

=

α

t

α

t

−

1

x

t

−

2

+

α

t

(

1

−

α

t

−

1

)

ϵ

t

−

2

+

1

−

α

t

ϵ

t

−

1

=

α

t

α

t

−

1

x

t

−

2

+

1

−

α

t

−

1

α

t

ϵ

ˉ

t

−

2

⋯

=

α

ˉ

t

x

0

+

1

−

α

ˉ

t

ϵ

\begin{aligned} {x}_t & =\sqrt{\alpha_t} {x}_{t-1}+\sqrt{1-\alpha_t} {\epsilon}_{t-1} \\ & =\sqrt{\alpha_t}\left(\sqrt{\alpha_{t-1}} x_{t-2}+\sqrt{1-\alpha_{t-1}} \epsilon_{t-2}\right)+\sqrt{1-\alpha_t} \epsilon_{t-1} \\ & =\sqrt{\alpha_t \alpha_{t-1}} x_{t-2}+\sqrt{\alpha_t\left(1-\alpha_{t-1}\right)} \epsilon_{t-2}+\sqrt{1-\alpha_t} \epsilon_{t-1} \\ & =\sqrt{\alpha_t \alpha_{t-1}} x_{t-2}+ \textcolor{blue}{\sqrt{1-\alpha_{t-1} \alpha_t} \bar{\epsilon}_{t-2}} \\ & \cdots \\ & =\sqrt{\bar{\alpha}_t} {x}_0+\sqrt{1-\bar{\alpha}_t} {\epsilon} \end{aligned}

xt=αtxt−1+1−αtϵt−1=αt(αt−1xt−2+1−αt−1ϵt−2)+1−αtϵt−1=αtαt−1xt−2+αt(1−αt−1)ϵt−2+1−αtϵt−1=αtαt−1xt−2+1−αt−1αtϵˉt−2⋯=αˉtx0+1−αˉtϵ

注解:

ϵ

t

−

1

\epsilon_{t-1}

ϵt−1和

ϵ

t

−

2

\epsilon_{t-2}

ϵt−2是相互独立的高斯分布,因此可以合成一个新的高斯分布

ϵ

ˉ

t

−

2

\bar{\epsilon}_{t-2}

ϵˉt−2,标准差为

1

−

α

t

−

1

α

t

\sqrt{1-\alpha_{t-1} \alpha_t}

1−αt−1αt。参考公式:给定两个独立的正态分布

X

1

∼

N

(

μ

1

,

σ

1

2

)

X_1 \sim N\left(\mu_1, \sigma_1^2\right)

X1∼N(μ1,σ12) 和

X

2

∼

N

(

μ

2

,

σ

2

2

)

X_2 \sim N\left(\mu_2, \sigma_2^2\right)

X2∼N(μ2,σ22) ,且

a

b

a b

ab 均为实数则

a

X

1

+

b

X

2

∼

N

(

a

μ

1

+

b

μ

2

,

a

2

σ

1

2

+

b

2

σ

2

2

)

{aX_1}+{bX_2} \sim N\left({a} \mu_1+b \mu_2, {a}^2 \sigma_1^2+b^2 \sigma_2^2\right)

aX1+bX2∼N(aμ1+bμ2,a2σ12+b2σ22)

2.3 性质分析

当 T → ∞ T \to \infty T→∞时: α ˉ t → 0 \bar{\alpha}_t \to 0 αˉt→0 ⇒ \Rightarrow ⇒ x T → N ( 0 , I ) x_T \to \mathcal{N}(0, \boldsymbol{I}) xT→N(0,I)。

3. 反向过程推导

如果我们能够逆转上述过程并从 q ( x t − 1 ∣ x t ) q\left(x_{t-1} \mid x_t\right) q(xt−1∣xt) 采样,就可以从高斯噪声 x T ∼ N ( 0 , I ) x_T \sim \mathcal{N}(0, \boldsymbol{I}) xT∼N(0,I) 还原出原图分布 x 0 ∼ q ( x ) x_0 \sim q(x) x0∼q(x) 。因为我们无法直接推断出 q ( x t − 1 ∣ x t ) q\left(x_{t-1} \mid x_t\right) q(xt−1∣xt) ,所以通过神经网络去预测/拟合这样的一个逆向的分布 p θ ( x t − 1 ∣ x t ) p_\theta\left(x_{t-1} \mid x_t\right) pθ(xt−1∣xt)。

3.1 真实反向分布结论

当已知 x 0 x_0 x0时,反向分布可解析求得:

q ( x t − 1 ∣ x t , x 0 ) = N ( x t − 1 ; μ ~ t , β ~ t I ) q(x_{t-1}|x_t,x_0) = \mathcal{N}(x_{t-1}; \tilde{\mu}_t, \tilde{\beta}_t \boldsymbol{I}) q(xt−1∣xt,x0)=N(xt−1;μ~t,β~tI)

其中: μ ~ t = 1 α t ( x t − 1 − α t 1 − α ˉ t ϵ t ) \tilde{\mu}_t = \frac{1}{\sqrt{\alpha_t}}\left(x_t - \frac{1-\alpha_t}{\sqrt{1-\bar{\alpha}_t}}\epsilon_t\right) μ~t=αt1(xt−1−αˉt1−αtϵt), β ~ t = 1 − α ˉ t − 1 1 − α ˉ t ⋅ β t \tilde{\beta}_t=\frac{1-\bar{\alpha}_{t-1}}{1-\bar{\alpha}_t} \cdot \beta_t β~t=1−αˉt1−αˉt−1⋅βt

3.2 推导

q

(

x

t

−

1

∣

x

t

,

x

0

)

=

q

(

x

t

,

x

0

,

x

t

−

1

)

q

(

x

t

,

x

0

)

=

q

(

x

0

)

q

(

x

t

−

1

∣

x

0

)

q

(

x

t

∣

x

t

−

1

,

x

0

)

q

(

x

0

)

q

(

x

t

∣

x

0

)

=

q

(

x

t

∣

x

t

−

1

,

x

0

)

q

(

x

t

−

1

∣

x

0

)

q

(

x

t

∣

x

0

)

=

q

(

x

t

∣

x

t

−

1

)

q

(

x

t

−

1

∣

x

0

)

q

(

x

t

∣

x

0

)

∝

exp

(

−

1

2

(

(

x

t

−

α

t

x

t

−

1

)

2

β

t

+

(

x

t

−

1

−

α

ˉ

t

−

1

x

0

)

2

1

−

a

ˉ

t

−

1

−

(

x

t

−

a

ˉ

t

x

0

)

2

1

−

a

ˉ

t

)

)

=

exp

(

−

1

2

(

(

(

α

t

β

t

+

1

1

−

α

ˉ

t

−

1

)

x

t

−

1

2

⏟

x

t

−

1

万差

−

(

2

α

t

β

t

x

t

+

2

a

ˉ

t

−

1

1

−

α

ˉ

t

−

1

x

0

)

x

t

−

1

⏟

x

t

−

1

均值

+

C

(

x

t

,

x

0

)

⏟

与

x

t

−

1

无关

)

.

\begin{aligned} & q\left(x_{t-1} \mid x_t, x_0\right) =\frac{q\left(x_t, x_0, x_{t-1}\right)}{q\left(x_t, x_0\right)} =\frac{q\left(x_0\right) q\left(x_{t-1} \mid x_0\right) q\left(x_t \mid x_{t-1}, x_0\right)}{q\left(x_0\right) q\left(x_t \mid x_0\right)} \\ & =q\left(x_t \mid x_{t-1}, x_0\right) \frac{q\left(x_{t-1} \mid x_0\right)}{q\left(x_t \mid x_0\right)} = q\left(x_t \mid x_{t-1}\right) \frac{q\left(x_{t-1} \mid x_0\right)}{q\left(x_t \mid x_0\right)} \\ & \propto \exp \left(-\frac{1}{2}\left(\frac{\left(x_t-\sqrt{\alpha_t} x_{t-1}\right)^2}{\beta_t}+\frac{\left(x_{t-1}-\sqrt{\bar{\alpha}_{t-1}} x_0\right)^2}{1-\bar{a}_{t-1}}-\frac{\left(x_t-\sqrt{\bar{a}_t} x_0\right)^2}{1-\bar{a}_t}\right)\right) \\ & =\exp (-\frac{1}{2}(\underbrace{\left(\left(\frac{\alpha_t}{\beta_t}+\frac{1}{1-\bar{\alpha}_{t-1}}\right) x_{t-1}^2\right.}_{x_{t-1} \text { 万差 }}-\underbrace{\left(\frac{2 \sqrt{\alpha_t}}{\beta_t} x_t+\frac{2 \sqrt{\bar{a}_{t-1}}}{1-\bar{\alpha}_{t-1}} x_0\right) x_{t-1}}_{x_{t-1} \text { 均值 }}+\underbrace{C\left(x_t, x_0\right)}_{\text {与 } x_{t-1} \text { 无关 }}) . \end{aligned}

q(xt−1∣xt,x0)=q(xt,x0)q(xt,x0,xt−1)=q(x0)q(xt∣x0)q(x0)q(xt−1∣x0)q(xt∣xt−1,x0)=q(xt∣xt−1,x0)q(xt∣x0)q(xt−1∣x0)=q(xt∣xt−1)q(xt∣x0)q(xt−1∣x0)∝exp(−21(βt(xt−αtxt−1)2+1−aˉt−1(xt−1−αˉt−1x0)2−1−aˉt(xt−aˉtx0)2))=exp(−21(xt−1 万差

((βtαt+1−αˉt−11)xt−12−xt−1 均值

(βt2αtxt+1−αˉt−12aˉt−1x0)xt−1+与 xt−1 无关

C(xt,x0)).

注解: 推导过程用到了贝叶斯公式,马尔科夫性,以及高斯公式

贝叶斯公式:

P

(

A

∣

B

)

=

P

(

B

∣

A

)

P

(

A

)

P

(

B

)

P(A|B) = \frac{P(B|A)P(A)}{P(B)}

P(A∣B)=P(B)P(B∣A)P(A);

因为扩散过程是一个马尔科夫过程,所以有

q

(

x

t

∣

x

t

−

1

,

x

0

)

=

q

(

x

t

∣

x

t

−

1

)

q\left(x_t \mid x_{t-1}, x_0\right) = q\left(x_t \mid x_{t-1}\right)

q(xt∣xt−1,x0)=q(xt∣xt−1);

将条件概率转化为概率密度的表达形式,它们是成正相关或认为成正比关系的。高斯分布

x

∼

N

(

μ

,

σ

2

)

x \sim N \left(\mu,\sigma^2\right)

x∼N(μ,σ2) 的概率密度函数为:

f

(

x

)

=

1

2

π

σ

exp

(

−

(

x

−

μ

)

2

2

σ

2

)

f(x)=\frac{1}{\sqrt{2 \pi} \sigma} \exp \left(\frac{-(x-\mu)^2}{2 \sigma^2}\right)

f(x)=2πσ1exp(2σ2−(x−μ)2)

根据上述公式,进一步可以获得方差和均值:

方差:(观察可知方差为常数值)

β

~

t

=

1

/

(

α

t

β

t

+

1

1

−

α

ˉ

t

−

1

)

=

1

/

(

α

t

−

α

ˉ

t

+

β

t

β

t

(

1

−

α

ˉ

t

−

1

)

)

=

1

−

α

ˉ

t

−

1

1

−

α

ˉ

t

⋅

β

t

\tilde{\beta}_t=1 /\left(\frac{\alpha_t}{\beta_t}\right. \left.+\frac{1}{1-\bar{\alpha}_{t-1}}\right)=1 /\left(\frac{\alpha_t-\bar{\alpha}_t+\beta_t}{\beta_t\left(1-\bar{\alpha}_{t-1}\right)}\right)=\frac{1-\bar{\alpha}_{t-1}}{1-\bar{\alpha}_t} \cdot \beta_t

β~t=1/(βtαt+1−αˉt−11)=1/(βt(1−αˉt−1)αt−αˉt+βt)=1−αˉt1−αˉt−1⋅βt

均值:

μ

~

t

(

x

t

,

x

0

)

=

(

α

t

β

t

x

t

+

α

ˉ

t

−

1

1

−

α

ˉ

t

−

1

x

0

)

/

(

α

t

β

t

+

1

1

−

α

ˉ

t

−

1

)

=

(

α

t

β

t

x

t

+

α

ˉ

t

−

1

1

−

α

ˉ

t

−

1

x

0

)

1

−

α

ˉ

t

−

1

1

−

α

ˉ

t

⋅

β

t

=

α

t

(

1

−

α

ˉ

t

−

1

)

1

−

α

ˉ

t

x

t

+

α

ˉ

t

−

1

β

t

1

−

α

ˉ

t

x

0

\begin{aligned} \tilde{{\mu}}_t\left({x}_t, {x}_0\right) & =\left(\frac{\sqrt{\alpha_t}}{\beta_t} {x}_t+\frac{\sqrt{\bar{\alpha}_{t-1}}}{1-\bar{\alpha}_{t-1}} {x}_0\right) /\left(\frac{\alpha_t}{\beta_t}+\frac{1}{1-\bar{\alpha}_{t-1}}\right) \\ & =\left(\frac{\sqrt{\alpha_t}}{\beta_t} {x}_t+\frac{\sqrt{\bar{\alpha}_{t-1}}}{1-\bar{\alpha}_{t-1}} {x}_0\right) \frac{1-\bar{\alpha}_{t-1}}{1-\bar{\alpha}_t} \cdot \beta_t \\ & =\frac{\sqrt{\alpha_t}\left(1-\bar{\alpha}_{t-1}\right)}{1-\bar{\alpha}_t} {x}_t+\frac{\sqrt{\bar{\alpha}_{t-1}} \beta_t}{1-\bar{\alpha}_t} {x}_0 \end{aligned}

μ~t(xt,x0)=(βtαtxt+1−αˉt−1αˉt−1x0)/(βtαt+1−αˉt−11)=(βtαtxt+1−αˉt−1αˉt−1x0)1−αˉt1−αˉt−1⋅βt=1−αˉtαt(1−αˉt−1)xt+1−αˉtαˉt−1βtx0

将

x

0

x_0

x0表示为:

x

0

=

1

α

ˉ

t

(

x

t

−

1

−

α

ˉ

t

ϵ

t

)

x_0 = \frac{1}{\sqrt{\bar{\alpha}_t}}(x_t - \sqrt{1-\bar{\alpha}_t}\epsilon_t)

x0=αˉt1(xt−1−αˉtϵt),代入上式得:

μ

~

t

(

x

t

,

x

0

)

=

α

t

(

1

−

α

ˉ

t

−

1

)

1

−

α

ˉ

t

x

t

+

α

ˉ

t

−

1

β

t

1

−

α

ˉ

t

x

0

=

α

t

(

1

−

α

ˉ

t

−

1

)

1

−

α

ˉ

t

x

t

+

α

ˉ

t

−

1

β

t

1

−

α

ˉ

t

⋅

1

α

ˉ

t

(

x

t

−

1

−

α

ˉ

t

z

t

)

=

α

t

⋅

α

t

(

1

−

α

ˉ

t

−

1

)

α

t

⋅

(

1

−

α

ˉ

t

)

x

t

+

α

ˉ

t

−

1

β

t

1

−

α

ˉ

t

⋅

1

α

ˉ

t

(

x

t

−

1

−

α

ˉ

t

z

t

)

=

α

t

−

α

ˉ

t

α

t

(

1

−

α

ˉ

t

)

x

t

+

β

t

(

1

−

α

ˉ

t

)

α

t

(

x

t

−

1

−

α

ˉ

t

z

t

)

=

1

−

α

ˉ

t

α

t

(

1

−

α

ˉ

t

)

x

t

−

β

t

(

1

−

α

ˉ

t

)

α

t

(

1

−

α

ˉ

t

z

t

)

=

1

α

t

x

t

−

β

t

(

1

−

α

ˉ

t

)

α

t

z

t

=

1

α

t

(

x

t

−

β

t

(

1

−

α

ˉ

t

)

z

t

)

\begin{aligned} \tilde{\mu}_t\left( {x}_t, {x}_0\right) & =\frac{\sqrt{\alpha_t}\left(1-\bar{\alpha}_{t-1}\right)}{1-\bar{\alpha}_t} {x}_t+\frac{\sqrt{\bar{\alpha}_{t-1}} \beta_t}{1-\bar{\alpha}_t} {x}_0 \\ & =\frac{\sqrt{\alpha_t}\left(1-\bar{\alpha}_{t-1}\right)}{1-\bar{\alpha}_t} {x}_t+\frac{\sqrt{\bar{\alpha}_{t-1}} \beta_t}{1-\bar{\alpha}_t} \cdot \frac{1}{\sqrt{\bar{\alpha}_t}}\left( {x}_t-\sqrt{1-\bar{\alpha}_t} z_t\right) \\ & =\frac{\sqrt{\alpha_t} \cdot \sqrt{\alpha_t}\left(1-\bar{\alpha}_{t-1}\right)}{\sqrt{\alpha_t} \cdot\left(1-\bar{\alpha}_t\right)} {x}_t+\frac{\sqrt{\bar{\alpha}_{t-1}} \beta_t}{1-\bar{\alpha}_t} \cdot \frac{1}{\sqrt{\bar{\alpha}_t}}\left(x_t-\sqrt{1-\bar{\alpha}_t} z_t\right) \\ & =\frac{\alpha_t-\bar{\alpha}_t}{\sqrt{\alpha_t}\left(1-\bar{\alpha}_t\right)} {x}_t+\frac{\beta_t}{\left(1-\bar{\alpha}_t\right) \sqrt{\alpha_t}}\left(x_t-\sqrt{1-\bar{\alpha}_t} z_t\right) \\ & =\frac{1-\bar{\alpha}_t}{\sqrt{\alpha_t}\left(1-\bar{\alpha}_t\right)} {x}_t-\frac{\beta_t}{\left(1-\bar{\alpha}_t\right) \sqrt{\alpha_t}}\left(\sqrt{1-\bar{\alpha}_t} z_t\right) \\ & =\frac{1}{\sqrt{\alpha_t}} {x}_t-\frac{\beta_t}{\sqrt{\left(1-\bar{\alpha}_t\right)} \sqrt{\alpha_t}} z_t \\ & =\frac{1}{\sqrt{\alpha_t}}\left( {x}_t-\frac{\beta_t}{\sqrt{\left(1-\bar{\alpha}_t\right)}} z_t\right) \end{aligned}

μ~t(xt,x0)=1−αˉtαt(1−αˉt−1)xt+1−αˉtαˉt−1βtx0=1−αˉtαt(1−αˉt−1)xt+1−αˉtαˉt−1βt⋅αˉt1(xt−1−αˉtzt)=αt⋅(1−αˉt)αt⋅αt(1−αˉt−1)xt+1−αˉtαˉt−1βt⋅αˉt1(xt−1−αˉtzt)=αt(1−αˉt)αt−αˉtxt+(1−αˉt)αtβt(xt−1−αˉtzt)=αt(1−αˉt)1−αˉtxt−(1−αˉt)αtβt(1−αˉtzt)=αt1xt−(1−αˉt)αtβtzt=αt1(xt−(1−αˉt)βtzt)

其中

β

t

=

1

−

α

t

\beta_t=1-\alpha_t

βt=1−αt,则最终结果为:

μ

~

t

=

1

α

t

(

x

t

−

1

−

α

t

1

−

α

ˉ

t

ϵ

t

)

\tilde{\mu}_t = \frac{1}{\sqrt{\alpha_t}}\left(x_t - \frac{1-\alpha_t}{\sqrt{1-\bar{\alpha}_t}}\epsilon_t\right)

μ~t=αt1(xt−1−αˉt1−αtϵt)

4. 训练目标

为什么预测噪声而不是直接预测图像?1

- 简化建模问题

不同时间步共享相同的噪声预测目标。考虑到我们每次只需要预测一个特定的时间步(t)的噪声,网络的目标变得更加明确且简单。直接预测图像 x_0 的像素值,则意味着模型需要从噪声中恢复整个图像结构,这在高维空间中是一个非常复杂的问题。

更进一步,预测噪声本质上是一个去噪过程,这个过程相对更加容易拟合和收敛。

- 稳定性和收敛性

在扩散模型中,噪声是添加到每个像素上的随机扰动。通过学习从噪声中恢复出原始图像的噪声成分,网络本质上是在学习图像的细节,而不是整个图像结构。因此,通过减少噪声的预测误差,模型能够更加稳定地训练。

1: 第 4 期:DDPM中的损失函数——为什么只预测噪声? 原文链接:https://blog.youkuaiyun.com/m0_45101613/article/details/147340147

4.1 变分下界

似然函数 p θ ( x 0 ) p_\theta\left(x_0\right) pθ(x0) 表示在模型参数 θ \theta θ 下,观测数据 x 0 x_0 x0 出现的概率。最大化似然即:

max θ log p θ ( x 0 ) \max _\theta \log p_\theta\left(x_0\right) θmaxlogpθ(x0)

等价于让模型生成的数据分布 p θ p_\theta pθ 尽可能接近真实数据分布 q ( x 0 ) q\left(x_0\right) q(x0) 。

由于似然函数求积分通常无法直接计算,因此我们通过变分下界(ELBO)来近似:

log

p

(

x

)

=

log

∫

p

(

x

0

:

T

)

d

x

1

:

T

=

log

∫

p

(

x

0

:

T

)

q

(

x

1

:

T

∣

x

0

)

q

(

x

1

:

T

∣

x

0

)

d

x

1

:

T

=

log

E

q

(

x

1

:

T

∣

x

0

)

[

p

(

x

0

:

T

)

q

(

x

1

:

T

∣

x

0

)

]

≥

E

q

(

x

1

:

T

∣

x

0

)

[

log

p

(

x

0

:

T

)

q

(

x

1

:

T

∣

x

0

)

]

\begin{aligned} \log p(\boldsymbol{x}) & =\log \int p\left(\boldsymbol{x}_{0: T}\right) d \boldsymbol{x}_{1: T} \\ & =\log \int \frac{p\left(\boldsymbol{x}_{0: T}\right) q\left(\boldsymbol{x}_{1: T} \mid \boldsymbol{x}_0\right)}{q\left(\boldsymbol{x}_{1: T} \mid \boldsymbol{x}_0\right)} d \boldsymbol{x}_{1: T} \\ & =\log \mathbb{E}_{q\left(\boldsymbol{x}_{1: T} \mid \boldsymbol{x}_0\right)}\left[\frac{p\left(\boldsymbol{x}_{0: T}\right)}{q\left(\boldsymbol{x}_{1: T} \mid \boldsymbol{x}_0\right)}\right] \\ & \geq \mathbb{E}_{q\left(\boldsymbol{x}_{1: T} \mid \boldsymbol{x}_0\right)}\left[\log \frac{p\left(\boldsymbol{x}_{0: T}\right)}{q\left(\boldsymbol{x}_{1: T} \mid \boldsymbol{x}_0\right)}\right] \end{aligned}

logp(x)=log∫p(x0:T)dx1:T=log∫q(x1:T∣x0)p(x0:T)q(x1:T∣x0)dx1:T=logEq(x1:T∣x0)[q(x1:T∣x0)p(x0:T)]≥Eq(x1:T∣x0)[logq(x1:T∣x0)p(x0:T)]

注解: 上式中不等式,由琴生不等式得到。琴生不等式:对于凹函数(Concave Function) ϕ 和随机变量 X,有(E[X])≥E[ϕ(X)]。对数函数 log(⋅) 是凹函数(其二阶导数为负)。

E

q

(

x

1

:

T

∣

x

0

)

[

log

p

(

x

0

:

T

)

q

(

x

1

:

T

∣

x

0

)

]

=

E

q

(

x

1

:

T

∣

x

0

)

[

log

p

(

x

T

)

∏

t

=

1

T

p

θ

(

x

t

−

1

∣

x

t

)

∏

t

=

1

T

q

(

x

t

∣

x

t

−

1

)

]

=

E

q

(

x

1

:

T

∣

x

0

)

[

log

p

(

x

T

)

p

θ

(

x

0

∣

x

1

)

∏

t

=

2

T

p

θ

(

x

t

−

1

∣

x

t

)

q

(

x

T

∣

x

T

−

1

)

∏

t

=

1

T

−

1

q

(

x

t

∣

x

t

−

1

)

]

=

E

q

(

x

1

:

T

∣

x

0

)

[

log

p

(

x

T

)

p

θ

(

x

0

∣

x

1

)

∏

t

=

1

T

−

1

p

θ

(

x

t

∣

x

t

+

1

)

q

(

x

T

∣

x

T

−

1

)

∏

t

=

1

T

−

1

q

(

x

t

∣

x

t

−

1

)

]

=

E

q

(

x

1

:

T

∣

x

0

)

[

log

p

(

x

T

)

p

θ

(

x

0

∣

x

1

)

q

(

x

T

∣

x

T

−

1

)

]

+

E

q

(

x

1

:

T

∣

x

0

)

[

log

∏

t

=

1

T

−

1

p

θ

(

x

t

∣

x

t

+

1

)

q

(

x

t

∣

x

t

−

1

)

]

=

E

q

(

x

1

:

T

∣

x

0

)

[

log

p

θ

(

x

0

∣

x

1

)

]

+

E

q

(

x

1

:

T

∣

x

0

)

[

log

p

(

x

T

)

q

(

x

T

∣

x

T

−

1

)

]

+

E

q

(

x

1

:

T

∣

x

0

)

[

∑

t

=

1

T

−

1

log

p

θ

(

x

t

∣

x

t

+

1

)

q

(

x

t

∣

x

t

−

1

)

]

=

E

q

(

x

1

:

T

∣

x

0

)

[

log

p

θ

(

x

0

∣

x

1

)

]

+

E

q

(

x

1

:

T

∣

x

0

)

[

log

p

(

x

T

)

q

(

x

T

∣

x

T

−

1

)

]

+

∑

t

=

1

T

−

1

E

q

(

x

1

:

T

∣

x

0

)

[

log

p

θ

(

x

t

∣

x

t

+

1

)

q

(

x

t

∣

x

t

−

1

)

]

=

E

q

(

x

1

∣

x

0

)

[

log

p

θ

(

x

0

∣

x

1

)

]

+

E

q

(

x

T

−

1

,

x

T

∣

x

0

)

[

log

p

(

x

T

)

q

(

x

T

∣

x

T

−

1

)

]

+

∑

t

=

1

T

−

1

E

q

(

x

t

−

1

,

x

t

,

x

t

+

1

∣

x

0

)

[

log

p

θ

(

x

t

∣

x

t

+

1

)

q

(

x

t

∣

x

t

−

1

)

]

=

E

q

(

x

1

∣

x

0

)

[

log

p

θ

(

x

0

∣

x

1

)

]

⏟

L0 reconstruction term

−

E

q

(

x

T

−

1

∣

x

0

)

[

D

K

L

(

q

(

x

T

∣

x

T

−

1

)

∥

p

(

x

T

)

)

]

⏟

LT prior matching term

−

∑

t

=

1

T

−

1

E

q

(

x

t

−

1

,

x

t

+

1

∣

x

0

)

[

D

K

L

(

q

(

x

t

∣

x

t

−

1

)

∥

p

θ

(

x

t

∣

x

t

+

1

)

)

]

⏟

Lt consistency term

\begin{aligned} & \mathbb{E}_{q\left(\boldsymbol{x}_{1: T} \mid \boldsymbol{x}_0\right)}\left[\log \frac{p\left(\boldsymbol{x}_{0: T}\right)}{q\left(\boldsymbol{x}_{1: T} \mid \boldsymbol{x}_0\right)}\right] \\ & =\mathbb{E}_{q\left(\boldsymbol{x}_{1: T} \mid \boldsymbol{x}_0\right)}\left[\log \frac{p\left(\boldsymbol{x}_T\right) \prod_{t=1}^T p_{\boldsymbol{\theta}}\left(\boldsymbol{x}_{t-1} \mid \boldsymbol{x}_t\right)}{\prod_{t=1}^T q\left(\boldsymbol{x}_t \mid \boldsymbol{x}_{t-1}\right)}\right] \\ & =\mathbb{E}_{q\left(\boldsymbol{x}_{1: T} \mid \boldsymbol{x}_0\right)}\left[\log \frac{p\left(\boldsymbol{x}_T\right) p_{\boldsymbol{\theta}}\left(\boldsymbol{x}_0 \mid \boldsymbol{x}_1\right) \prod_{t=2}^T p_{\boldsymbol{\theta}}\left(\boldsymbol{x}_{t-1} \mid \boldsymbol{x}_t\right)}{q\left(\boldsymbol{x}_T \mid \boldsymbol{x}_{T-1}\right) \prod_{t=1}^{T-1} q\left(\boldsymbol{x}_t \mid \boldsymbol{x}_{t-1}\right)}\right] \\ & =\mathbb{E}_{q\left(\boldsymbol{x}_{1: T} \mid \boldsymbol{x}_0\right)}\left[\log \frac{p\left(\boldsymbol{x}_T\right) p_{\boldsymbol{\theta}}\left(\boldsymbol{x}_0 \mid \boldsymbol{x}_1\right) \prod_{t=1}^{T-1} p_{\boldsymbol{\theta}}\left(\boldsymbol{x}_t \mid \boldsymbol{x}_{t+1}\right)}{q\left(\boldsymbol{x}_T \mid \boldsymbol{x}_{T-1}\right) \prod_{t=1}^{T-1} q\left(\boldsymbol{x}_t \mid \boldsymbol{x}_{t-1}\right)}\right] \\ & =\mathbb{E}_{q\left(\boldsymbol{x}_{1: T} \mid \boldsymbol{x}_0\right)}\left[\log \frac{p\left(\boldsymbol{x}_T\right) p_{\boldsymbol{\theta}}\left(\boldsymbol{x}_0 \mid \boldsymbol{x}_1\right)}{q\left(\boldsymbol{x}_T \mid \boldsymbol{x}_{T-1}\right)}\right]+\mathbb{E}_{q\left(\boldsymbol{x}_{1: T} \mid \boldsymbol{x}_0\right)}\left[\log \prod_{t=1}^{T-1} \frac{p_{\boldsymbol{\theta}}\left(\boldsymbol{x}_t \mid \boldsymbol{x}_{t+1}\right)}{q\left(\boldsymbol{x}_t \mid \boldsymbol{x}_{t-1}\right)}\right] \\ & =\mathbb{E}_{q\left(\boldsymbol{x}_{1: T} \mid \boldsymbol{x}_0\right)}\left[\log p_{\boldsymbol{\theta}}\left(\boldsymbol{x}_0 \mid \boldsymbol{x}_1\right)\right]+\mathbb{E}_{q\left(\boldsymbol{x}_{1: T} \mid \boldsymbol{x}_0\right)}\left[\log \frac{p\left(\boldsymbol{x}_T\right)}{q\left(\boldsymbol{x}_T \mid \boldsymbol{x}_{T-1}\right)}\right]+\mathbb{E}_{q\left(\boldsymbol{x}_{1: T} \mid \boldsymbol{x}_0\right)}\left[\sum_{t=1}^{T-1} \log \frac{p_{\boldsymbol{\theta}}\left(\boldsymbol{x}_t \mid \boldsymbol{x}_{t+1}\right)}{q\left(\boldsymbol{x}_t \mid \boldsymbol{x}_{t-1}\right)}\right] \\ & =\mathbb{E}_{q\left(\boldsymbol{x}_{1: T} \mid \boldsymbol{x}_0\right)}\left[\log p_{\boldsymbol{\theta}}\left(\boldsymbol{x}_0 \mid \boldsymbol{x}_1\right)\right]+\mathbb{E}_{q\left(\boldsymbol{x}_{1: T} \mid \boldsymbol{x}_0\right)}\left[\log \frac{p\left(\boldsymbol{x}_T\right)}{q\left(\boldsymbol{x}_T \mid \boldsymbol{x}_{T-1}\right)}\right]+\sum_{t=1}^{T-1} \mathbb{E}_{q\left(\boldsymbol{x}_{1: T} \mid \boldsymbol{x}_0\right)}\left[\log \frac{p_{\boldsymbol{\theta}}\left(\boldsymbol{x}_t \mid \boldsymbol{x}_{t+1}\right)}{q\left(\boldsymbol{x}_t \mid \boldsymbol{x}_{t-1}\right)}\right] \\ & =\mathbb{E}_{q\left(\boldsymbol{x}_1 \mid \boldsymbol{x}_0\right)}\left[\log p_{\boldsymbol{\theta}}\left(\boldsymbol{x}_0 \mid \boldsymbol{x}_1\right)\right]+\mathbb{E}_{q\left(\boldsymbol{x}_{T-1}, \boldsymbol{x}_T \mid \boldsymbol{x}_0\right)}\left[\log \frac{p\left(\boldsymbol{x}_T\right)}{q\left(\boldsymbol{x}_T \mid \boldsymbol{x}_{T-1}\right)}\right]+\sum_{t=1}^{T-1} \mathbb{E}_{q\left(\boldsymbol{x}_{t-1}, \boldsymbol{x}_t, \boldsymbol{x}_{t+1} \mid \boldsymbol{x}_0\right)}\left[\log \frac{p_{\boldsymbol{\theta}}\left(\boldsymbol{x}_t \mid \boldsymbol{x}_{t+1}\right)}{q\left(\boldsymbol{x}_t \mid \boldsymbol{x}_{t-1}\right)}\right] \\ & =\underbrace{\mathbb{E}_{q\left(\boldsymbol{x}_1 \mid \boldsymbol{x}_0\right)}\left[\log p_\theta\left(\boldsymbol{x}_0 \mid \boldsymbol{x}_1\right)\right]}_{\text {L0 reconstruction term }} -\underbrace{\mathbb{E}_{q\left(\boldsymbol{x}_{T-1} \mid \boldsymbol{x}_0\right)}\left[D_{ \boldsymbol{KL}}\left(q\left(\boldsymbol{x}_T \mid \boldsymbol{x}_{T-1}\right) \| p\left(\boldsymbol{x}_T\right)\right)\right]}_{\text {LT prior matching term }} \\ & -\sum_{t=1}^{T-1} \underbrace{\mathbb{E}_{q\left(\boldsymbol{x}_{t-1}, \boldsymbol{x}_{t+1} \mid \boldsymbol{x}_0\right)}\left[D_{ \boldsymbol{KL}}\left(q\left(\boldsymbol{x}_t \mid \boldsymbol{x}_{t-1}\right) \| p_\theta\left(\boldsymbol{x}_t \mid \boldsymbol{x}_{t+1}\right)\right)\right]}_{\text {Lt consistency term }} \end{aligned}

Eq(x1:T∣x0)[logq(x1:T∣x0)p(x0:T)]=Eq(x1:T∣x0)[log∏t=1Tq(xt∣xt−1)p(xT)∏t=1Tpθ(xt−1∣xt)]=Eq(x1:T∣x0)[logq(xT∣xT−1)∏t=1T−1q(xt∣xt−1)p(xT)pθ(x0∣x1)∏t=2Tpθ(xt−1∣xt)]=Eq(x1:T∣x0)[logq(xT∣xT−1)∏t=1T−1q(xt∣xt−1)p(xT)pθ(x0∣x1)∏t=1T−1pθ(xt∣xt+1)]=Eq(x1:T∣x0)[logq(xT∣xT−1)p(xT)pθ(x0∣x1)]+Eq(x1:T∣x0)[logt=1∏T−1q(xt∣xt−1)pθ(xt∣xt+1)]=Eq(x1:T∣x0)[logpθ(x0∣x1)]+Eq(x1:T∣x0)[logq(xT∣xT−1)p(xT)]+Eq(x1:T∣x0)[t=1∑T−1logq(xt∣xt−1)pθ(xt∣xt+1)]=Eq(x1:T∣x0)[logpθ(x0∣x1)]+Eq(x1:T∣x0)[logq(xT∣xT−1)p(xT)]+t=1∑T−1Eq(x1:T∣x0)[logq(xt∣xt−1)pθ(xt∣xt+1)]=Eq(x1∣x0)[logpθ(x0∣x1)]+Eq(xT−1,xT∣x0)[logq(xT∣xT−1)p(xT)]+t=1∑T−1Eq(xt−1,xt,xt+1∣x0)[logq(xt∣xt−1)pθ(xt∣xt+1)]=L0 reconstruction term

Eq(x1∣x0)[logpθ(x0∣x1)]−LT prior matching term

Eq(xT−1∣x0)[DKL(q(xT∣xT−1)∥p(xT))]−t=1∑T−1Lt consistency term

Eq(xt−1,xt+1∣x0)[DKL(q(xt∣xt−1)∥pθ(xt∣xt+1))]

展开后包含三项:

- L T L_T LT: 先验匹配项。作用:约束最终噪声分布接近标准正态。当 T T T足够大时, q ( x T ∣ x 0 ) q\left(\boldsymbol{x}_{T} \mid \boldsymbol{x}_0\right) q(xT∣x0) 已是标准正态,此项可忽略。

- L t L_{t} Lt: 去噪匹配项(核心项)。真实分布与模型分布的KL散度。

- L 0 L_0 L0: 重构项。通常用离散化后的高斯分布或固定方差处理。

优化目标为:

L

VLB

=

E

q

[

−

log

p

θ

(

x

0

:

T

)

q

(

x

1

:

T

∣

x

0

)

]

\mathcal{L}_{\text{VLB}} = \mathbb{E}_q\left[-\log\frac{p_\theta(x_{0:T})}{q(x_{1:T}|x_0)}\right]

LVLB=Eq[−logq(x1:T∣x0)pθ(x0:T)]

L

t

=

E

x

0

,

ϵ

[

1

2

∥

Σ

θ

(

x

t

,

t

)

∥

2

2

∥

μ

θ

(

x

t

,

t

)

∥

2

]

=

E

x

0

,

ϵ

[

1

2

∥

Σ

θ

∥

2

2

∥

1

α

t

(

x

t

−

1

−

α

t

1

−

α

ˉ

t

ϵ

θ

(

x

t

,

t

)

)

∥

2

]

=

E

x

0

,

ϵ

[

(

1

−

α

t

)

2

2

α

t

(

1

−

α

ˉ

t

)

∥

Σ

θ

∥

2

2

∥

ϵ

t

−

ϵ

θ

(

x

t

,

t

)

∥

2

]

=

E

x

0

,

ϵ

[

(

1

−

α

t

)

2

2

α

t

(

1

−

α

ˉ

t

)

∥

Σ

θ

∥

2

2

∥

ϵ

t

−

ϵ

θ

(

α

ˉ

t

x

0

+

1

−

α

ˉ

t

ϵ

t

,

t

)

∥

2

]

\begin{aligned} L_t & =\mathbb{E}_{ \boldsymbol{x}_0, \epsilon} \left[\frac{1}{2\left\|\boldsymbol{\Sigma}_\theta\left( \boldsymbol{x}_t, t\right)\right\|_2^2}\left\| \mu_\theta\left( \boldsymbol{x}_t, t\right)\right\|^2\right] \\ & =\mathbb{E}_{ \boldsymbol{x}_0, \epsilon}\left[\frac{1}{2\left\|\boldsymbol{\Sigma}_\theta\right\|_2^2}\left\| \frac{1}{\sqrt{\alpha_t}}\left( \boldsymbol{x}_t-\frac{1-\alpha_t}{\sqrt{1-\bar{\alpha}_t}} \epsilon_\theta\left( \boldsymbol{x}_t, t\right)\right)\right\|^2\right] \\ & =\mathbb{E}_{ \boldsymbol{x}_0, \epsilon}\left[\frac{\left(1-\alpha_t\right)^2}{2 \alpha_t\left(1-\bar{\alpha}_t\right)\left\|\boldsymbol{\Sigma}_\theta\right\|_2^2}\left\|\boldsymbol{\epsilon}_t-\boldsymbol{\epsilon}_\theta\left( \boldsymbol{x}_t, t\right)\right\|^2\right] \\ & =\mathbb{E}_{ \boldsymbol{x}_0, \epsilon}\left[\frac{\left(1-\boldsymbol{\alpha}_t\right)^2}{2 \alpha_t\left(1-\bar{\alpha}_t\right)\left\|\boldsymbol{\Sigma}_\theta\right\|_2^2}\left\|\boldsymbol{\epsilon}_t-\boldsymbol{\epsilon}_\theta\left(\sqrt{\bar{\alpha}_t} \boldsymbol{x}_0+\sqrt{1-\bar{\alpha}_t} \boldsymbol{\epsilon}_t, t\right)\right\|^2\right] \end{aligned}

Lt=Ex0,ϵ[2∥Σθ(xt,t)∥221∥μθ(xt,t)∥2]=Ex0,ϵ[2∥Σθ∥221

αt1(xt−1−αˉt1−αtϵθ(xt,t))

2]=Ex0,ϵ[2αt(1−αˉt)∥Σθ∥22(1−αt)2∥ϵt−ϵθ(xt,t)∥2]=Ex0,ϵ[2αt(1−αˉt)∥Σθ∥22(1−αt)2

ϵt−ϵθ(αˉtx0+1−αˉtϵt,t)

2]

其中

μ

θ

(

x

t

,

t

)

=

1

α

t

(

x

t

−

1

−

α

t

1

−

α

ˉ

t

ϵ

θ

(

x

t

,

t

)

)

\begin{aligned} \boldsymbol{\mu}_\theta\left( \boldsymbol{x}_t, t\right) =\frac{1}{\sqrt{\alpha_t}}\left( \boldsymbol{x}_t-\frac{1-\alpha_t}{\sqrt{1-\bar{\alpha}_t}} \boldsymbol{\epsilon}_\theta\left( \boldsymbol{x}_t, t\right)\right) \\ \end{aligned}

μθ(xt,t)=αt1(xt−1−αˉt1−αtϵθ(xt,t)),

x

t

−

1

=

N

(

x

t

−

1

;

1

α

t

(

x

t

−

1

−

α

t

1

−

α

ˉ

t

ϵ

θ

(

x

t

,

t

)

)

,

Σ

θ

(

x

t

,

t

)

)

\boldsymbol{x}_{t-1} =\mathcal{N}\left( \boldsymbol{x}_{t-1} ; \frac{1}{\sqrt{\alpha_t}}\left( \boldsymbol{x}_t-\frac{1-\alpha_t}{\sqrt{1-\bar{\alpha}_t}} \boldsymbol{\epsilon}_\theta\left( \boldsymbol{x}_t, t\right)\right), \boldsymbol{\Sigma}_\theta\left( \boldsymbol{x}_t, t\right)\right)

xt−1=N(xt−1;αt1(xt−1−αˉt1−αtϵθ(xt,t)),Σθ(xt,t))

实际使用简化形式:

L simple = E t , x 0 , ϵ [ ∥ ϵ − ϵ θ ( x t , t ) ∥ 2 ] = E t ∼ [ 1 , T ] , x 0 , ϵ t [ ∥ ϵ t − ϵ θ ( α ˉ t x 0 + 1 − α ˉ t ϵ t , t ) ∥ 2 ] \begin{aligned} \mathcal{L}_{\text{simple}} &= \mathbb{E}_{t,x_0,\epsilon}\left[\|\epsilon - \epsilon_\theta(x_t,t)\|^2\right] \\ &=\mathbb{E}_{t \sim[1, T], \boldsymbol{x}_{0, \epsilon_t}}\left[\left\|\epsilon_t-\epsilon_\theta\left(\sqrt{\bar{\alpha}_t} \boldsymbol{x}_0+\sqrt{1-\bar{\alpha}_t} \epsilon_t, t\right)\right\|^2\right] \end{aligned} Lsimple=Et,x0,ϵ[∥ϵ−ϵθ(xt,t)∥2]=Et∼[1,T],x0,ϵt[ ϵt−ϵθ(αˉtx0+1−αˉtϵt,t) 2]

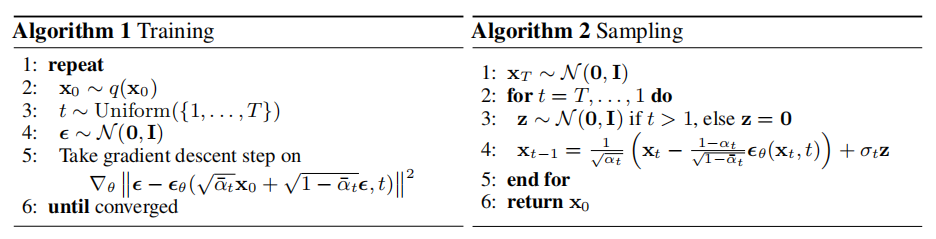

5. 采样算法

- 从 x T ∼ N ( 0 , I ) x_T \sim \mathcal{N}(0, \boldsymbol{I}) xT∼N(0,I)开始

- 对于

t

=

T

,

.

.

.

,

1

t = T,...,1

t=T,...,1:

- 预测噪声 ϵ θ ( x t , t ) \epsilon_\theta(x_t,t) ϵθ(xt,t)

- 计算均值 μ θ ( x t , t ) \mu_\theta(x_t,t) μθ(xt,t)

- 采样 x t − 1 ∼ N ( μ θ , σ t 2 I ) x_{t-1} \sim \mathcal{N}(\mu_\theta,\sigma_t^2 \boldsymbol{I}) xt−1∼N(μθ,σt2I)

- 输出 x 0 x_0 x0

6. 实现细节

| 超参数 | 典型值 | 说明 |

|---|---|---|

| T | 1000 | 总步数 |

| β min \beta_{\text{min}} βmin | 1e-4 | 起始噪声 |

| β max \beta_{\text{max}} βmax | 0.02 | 最终噪声 |

| 网络架构 | U-Net | 带时间嵌入 |

7. 核心公式总结

-

前向过程:

x t = α ˉ t x 0 + 1 − α ˉ t ϵ x_t = \sqrt{\bar{\alpha}_t}x_0 + \sqrt{1-\bar{\alpha}_t}\epsilon xt=αˉtx0+1−αˉtϵ -

反向均值:

μ ~ t = 1 α t ( x t − β t 1 − α ˉ t ϵ θ ) \tilde{\mu}_t = \frac{1}{\sqrt{\alpha_t}}\left(x_t - \frac{\beta_t}{\sqrt{1-\bar{\alpha}_t}}\epsilon_\theta\right) μ~t=αt1(xt−1−αˉtβtϵθ) -

训练目标:

min θ ∥ ϵ − ϵ θ ( x t , t ) ∥ 2 \min_\theta \|\epsilon - \epsilon_\theta(x_t,t)\|^2 θmin∥ϵ−ϵθ(xt,t)∥2

参考资料

- 扩散模型原理+DDPM案例代码解析

- DDPM扩散模型公式推理----扩散和逆扩散过程

- What are Diffusion Models?

- 原始论文:Denoising Diffusion Probabilistic Models

如有错误欢迎指正。

如有疑问欢迎留言讨论。

1万+

1万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?