import torch

x_data = torch.Tensor([[1.0], [2.0], [3.0]])
y_data = torch.Tensor([[0], [0], [1]])
# x_data and y_data are 3x1 matrices (three rows, one column): there are three samples in total, and each sample has a single feature
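Because `torch.nn.Linear` operates on the last dimension, this (3, 1) layout matters. The short sketch below (an illustration, not code from the original post) checks the shape and shows that a `Linear(1, 1)` layer returns one output per sample:

```python
import torch

x_data = torch.Tensor([[1.0], [2.0], [3.0]])

# Rows are samples, columns are features: 3 samples x 1 feature
num_samples, num_features = x_data.shape
print(num_samples, num_features)  # 3 1

# nn.Linear(in_features, out_features) acts on the last dimension,
# so a (3, 1) input produces a (3, 1) output: one prediction per sample
linear = torch.nn.Linear(1, 1)
out = linear(x_data)
print(out.shape)  # torch.Size([3, 1])
```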

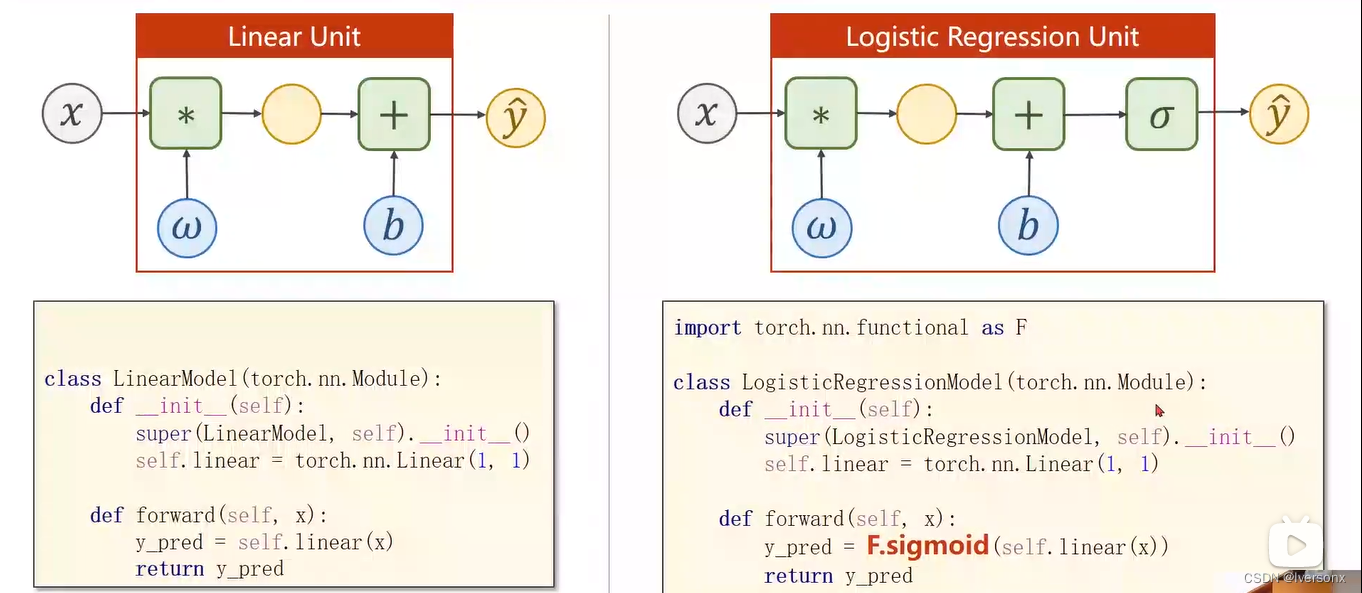

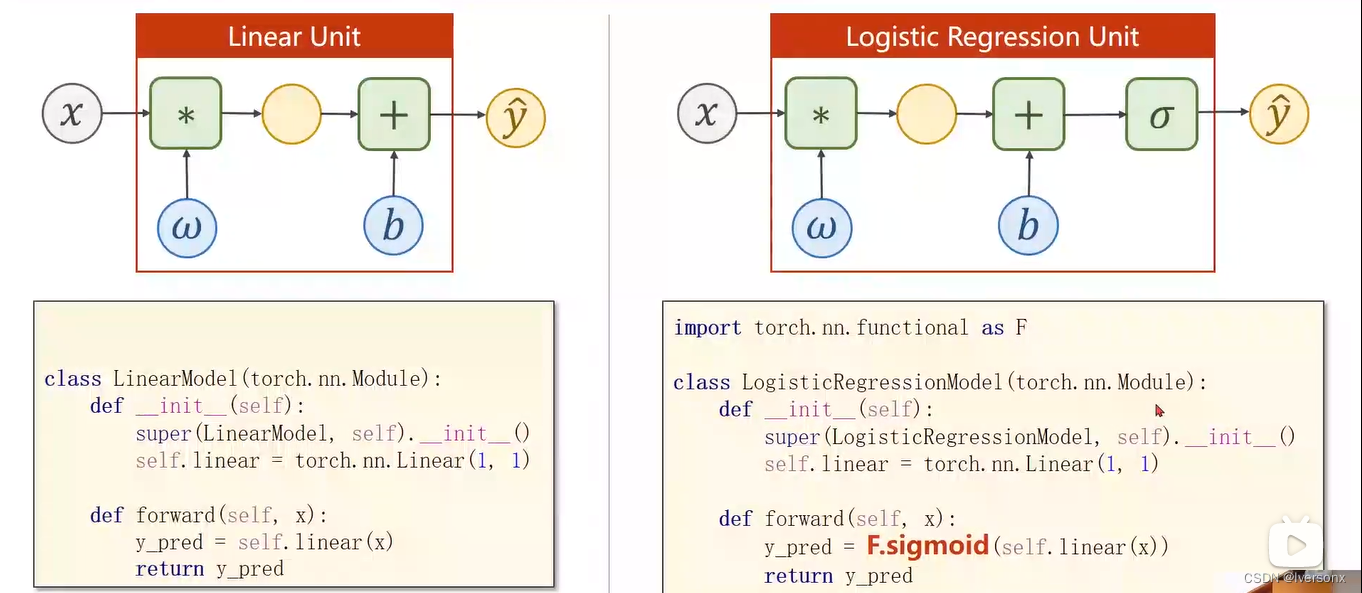

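class LogisticRegressionModel(torch.nn.Module):
    def __init__(self):
        super(LogisticRegressionModel, self).__init__()
        self.linear = torch.nn.Linear(1, 1)
        # (1, 1) are the feature dimensions of the input x and the output y; in this dataset both x and y are one-dimensional
        # The parameters this linear layer learns are w and b, accessible as linear.weight and linear.bias

    def forward(self, x):
        # Apply the sigmoid to the linear output so the prediction is a probability in (0, 1)
        y_pred = torch.sigmoid(self.linear(x))
        return y_pred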
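The forward pass is simply σ(w·x + b), with σ(z) = 1 / (1 + e^(-z)). A minimal sketch (an illustration, not from the original post) that checks this by recomputing the sigmoid by hand from `linear.weight` and `linear.bias`; the random seed is an arbitrary choice:

```python
import torch

torch.manual_seed(0)  # arbitrary seed, only so the run is reproducible
linear = torch.nn.Linear(1, 1)
x = torch.Tensor([[1.0], [2.0], [3.0]])

# What forward() computes: sigmoid applied to the linear output w*x + b
y_model = torch.sigmoid(linear(x))

# The same computation written out by hand: sigmoid(z) = 1 / (1 + exp(-z))
w = linear.weight.item()
b = linear.bias.item()
z = w * x + b
y_manual = 1.0 / (1.0 + torch.exp(-z))

print(torch.allclose(y_model, y_manual, atol=1e-6))  # True
```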

model = LogisticRegressionModel()
# Binary cross-entropy summed over the samples: the standard loss for logistic regression
criterion = torch.nn.BCELoss(reduction='sum')
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
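Logistic regression is normally trained with binary cross-entropy, which for predicted probabilities ŷ_i and labels y_i, summed over the samples, is `loss = -Σ [y_i·log(ŷ_i) + (1 - y_i)·log(1 - ŷ_i)]`. The sketch below computes it by hand and compares it with `torch.nn.BCELoss(reduction='sum')` and, for contrast, with `torch.nn.MSELoss(reduction='sum')`. The prediction values are made up for illustration and do not come from a trained model:

```python
import torch

y_pred = torch.Tensor([[0.2], [0.4], [0.9]])  # illustrative predicted probabilities
y_true = torch.Tensor([[0.0], [0.0], [1.0]])  # same labels as y_data

# Binary cross-entropy summed over samples:
# loss = -sum( y*log(p) + (1-y)*log(1-p) )
manual = -(y_true * torch.log(y_pred) + (1 - y_true) * torch.log(1 - y_pred)).sum()

builtin = torch.nn.BCELoss(reduction='sum')(y_pred, y_true)

# For contrast: the summed squared error that MSELoss(reduction='sum') gives
mse = torch.nn.MSELoss(reduction='sum')(y_pred, y_true)

print(manual.item(), builtin.item(), mse.item())
```

With a sigmoid output, cross-entropy is convex in w and b and penalizes confident wrong predictions far more heavily than squared error, which is why it is the usual choice for this model.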

for epoch in range(100):
    y_pred = model(x_data)            # forward: predict
    loss = criterion(y_pred, y_data)  # forward: compute the loss
    print(epoch, loss.item())

    optimizer.zero_grad()  # reset the gradients to zero
    loss.backward()        # backward: autograd computes the gradients automatically
    optimizer.step()       # update the parameters, i.e. the values of w and b
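Each iteration of the loop performs one gradient-descent update. For plain `torch.optim.SGD` (no momentum or weight decay, as configured above), `optimizer.step()` sets each parameter to `p - lr * p.grad`. The sketch below, which assumes the binary cross-entropy loss and an arbitrary random seed, checks one step by hand and also compares the autograd gradients with the closed-form result for sigmoid plus cross-entropy, `dL/dw = Σ (ŷ - y)·x` and `dL/db = Σ (ŷ - y)`:

```python
import torch

torch.manual_seed(0)  # arbitrary seed, only for reproducibility
x = torch.Tensor([[1.0], [2.0], [3.0]])
y = torch.Tensor([[0.0], [0.0], [1.0]])

linear = torch.nn.Linear(1, 1)
criterion = torch.nn.BCELoss(reduction='sum')
lr = 0.01
optimizer = torch.optim.SGD(linear.parameters(), lr=lr)

optimizer.zero_grad()
y_hat = torch.sigmoid(linear(x))
loss = criterion(y_hat, y)
loss.backward()  # fills linear.weight.grad and linear.bias.grad

# Closed-form gradients for sigmoid + summed BCE:
# dL/dw = sum((y_hat - y) * x),  dL/db = sum(y_hat - y)
err = (y_hat - y).detach()
w_grad_ok = torch.allclose(linear.weight.grad, (err * x).sum().reshape(1, 1), atol=1e-6)
b_grad_ok = torch.allclose(linear.bias.grad, err.sum().reshape(1), atol=1e-6)

# Plain SGD update rule: p <- p - lr * grad (computed before step() runs)
w_expected = linear.weight.detach() - lr * linear.weight.grad
b_expected = linear.bias.detach() - lr * linear.bias.grad
optimizer.step()

w_step_ok = torch.allclose(linear.weight.detach(), w_expected)
b_step_ok = torch.allclose(linear.bias.detach(), b_expected)
print(w_grad_ok, b_grad_ok, w_step_ok, b_step_ok)
```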

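print('w = ', model.linear.weight.item())
print('b = ', model.linear.bias.item())

x_test = torch.Tensor([[4.0]])
with torch.no_grad():  # inference only, no gradient tracking needed
    y_test = model(x_test)
print('y_pred = ', y_test.item())  # predicted probability that x = 4.0 belongs to class 1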
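The model outputs a probability, not a class. A common convention, assumed here since the original stops at the probability, is to predict class 1 when the probability is at least 0.5. Because σ(z) ≥ 0.5 exactly when z ≥ 0, the decision boundary is the point where w·x + b = 0, i.e. x = -b / w. The parameter values below are hypothetical, chosen only to make the arithmetic easy to follow:

```python
import torch

# Hypothetical trained parameters, for illustration only
w, b = 1.5, -3.75

x_test = torch.Tensor([[1.0], [2.0], [3.0], [4.0]])
prob = torch.sigmoid(w * x_test + b)

# Classify with a 0.5 threshold: class 1 if prob >= 0.5, else class 0
labels = (prob >= 0.5).long()

# sigmoid(z) >= 0.5 exactly when z >= 0, so the boundary is x = -b / w
boundary = -b / w
print(prob.squeeze().tolist())
print(labels.squeeze().tolist())  # [0, 0, 1, 1]
print(boundary)                   # 2.5
```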

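This article shows how to build a simple logistic regression model with the PyTorch library, from data preparation through model training and prediction, covering gradient descent and weight updates. It is suitable for beginners who want to see how a basic machine learning algorithm is implemented.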

