Graph Neural Networks for Social Recommendation

-

LINK: https://arxiv.org/abs/1902.07243

-

CLASSIFICATION: RECOMMENDER-SYSTEM, HETEROGENEOUS NETWORK, GCN

-

YEAR: 2019 (arXiv v1 submitted 19 Feb 2019; v2 last revised 23 Nov 2019)

-

FROM: WWW 2019

-

WHAT PROBLEM TO SOLVE: Building social recommender systems based on GNNs faces challenges. The first challenge is how to inherently combine the social graph and the user-item graph. Moreover, the user-item graph not only contains interactions between users and items but also includes users’ opinions on items. The second challenge is how to capture interactions and opinions between users and items jointly. The third challenge is how to distinguish social relations with heterogeneous strengths.

-

SOLUTION:

Specifically, we propose a novel graph neural network, GraphRec, for social recommendation, which addresses the three aforementioned challenges simultaneously. Our major contributions are summarized as follows:

- We propose a novel graph neural network GraphRec, which can model graph data in social recommendations coherently;

- We provide a principled approach to jointly capture interactions and opinions in the user-item graph;

- We introduce a method to consider heterogeneous strengths of social relations mathematically; and

- We demonstrate the effectiveness of the proposed framework on various real-world datasets.

-

CORE POINT:

-

The Proposed Framework

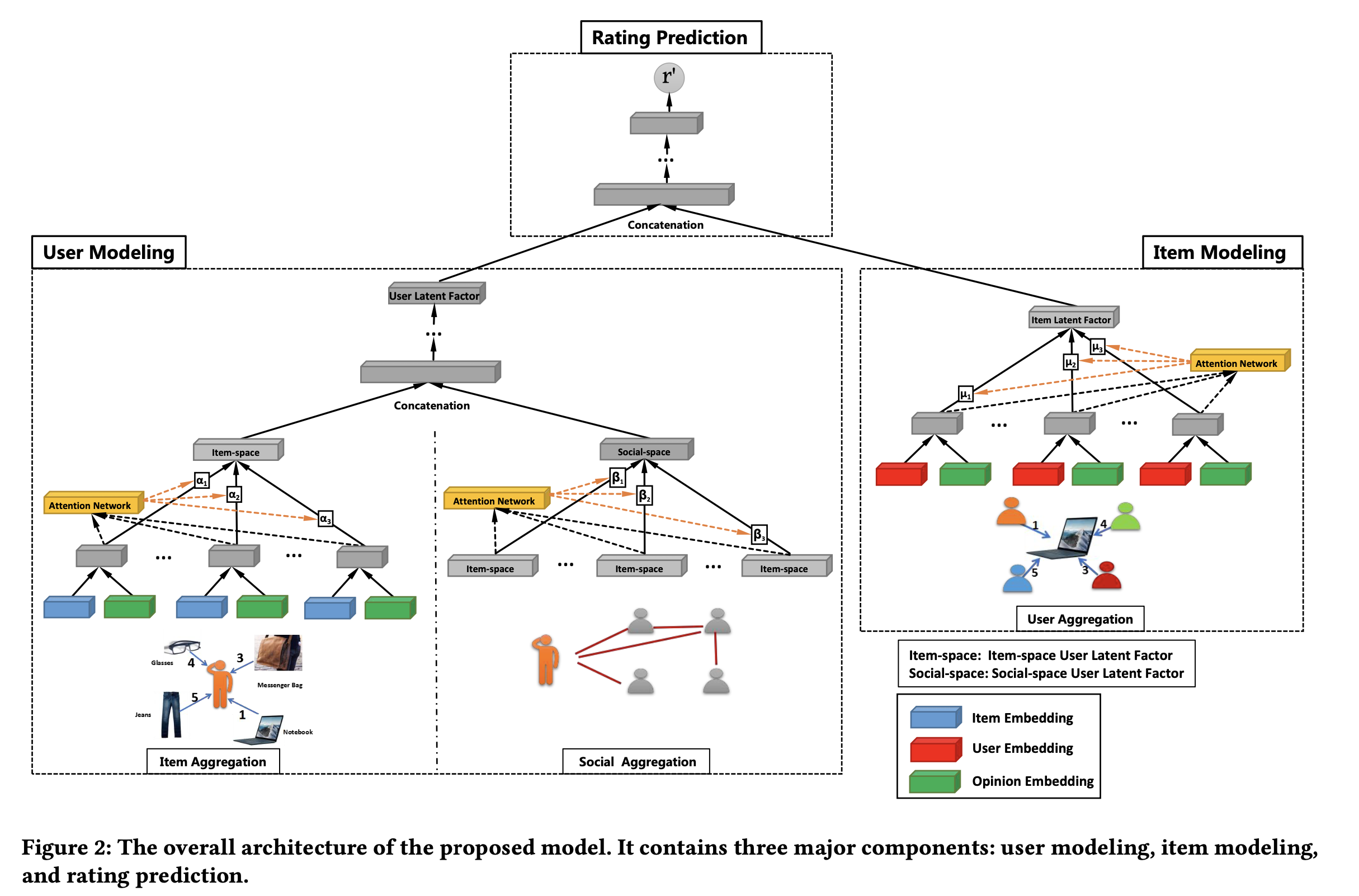

The model consists of three components: user modeling, item modeling, and rating prediction.

-

User Modeling

The first component is user modeling, which is to learn latent factors of users. As data in social recommender systems includes two different graphs, i.e., a social graph and a user-item graph, we are provided with a great opportunity to learn user representations from different perspectives. Two aggregations are introduced to respectively process these two different graphs.

-

Item Aggregation

Item aggregation is used to understand users via their interactions with items in the user-item graph (the item space).

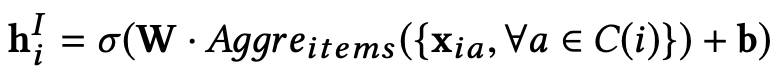

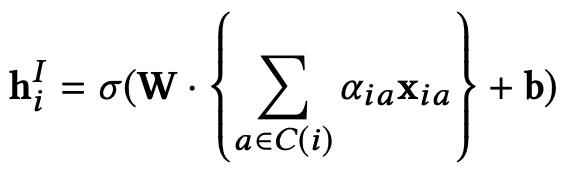

The purpose of item aggregation is to learn the item-space user latent factor $h^I_i$ by considering the items a user $u_i$ has interacted with and the user's opinions on these items. To represent this aggregation mathematically, we use the following function:

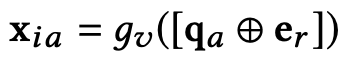

The MLP takes the concatenation of the item embedding $q_a$ and its opinion embedding $e_r$ as input. The output of the MLP is the opinion-aware representation $x_{ia}$ of the interaction between $u_i$ and $v_a$, i.e., $x_{ia} = \mathrm{MLP}([q_a \oplus e_r])$.

To alleviate the limitation of a mean-based aggregator, which weights every interacted item equally, and inspired by attention mechanisms, an intuitive solution is to make $\alpha_i$ aware of the target user $u_i$, i.e., to assign an individualized weight to each $(v_a, u_i)$ pair:

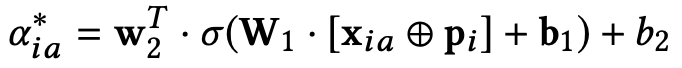

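Formally, the attention network takes the opinion-aware representation $x_{ia}$ and the target user embedding $p_i$ as input and outputs an attentive score for each $(v_a, u_i)$ pair.

The final attention weights are obtained by normalizing these attentive scores with the softmax function.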
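The item aggregation described above can be sketched in a few lines of plain Python. This is only an illustrative sketch under stated assumptions: the helper names (`linear`, `relu`, `softmax`, `item_aggregation`), the parameter dictionary keys, the ReLU activation, the embedding size of 4, and the random weights are all hypothetical and not taken from the authors' code.

```python
import math
import random

def linear(W, b, x):
    """Affine map W x + b, with W given as a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) + bi for row, bi in zip(W, b)]

def relu(x):
    return [max(0.0, v) for v in x]

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def item_aggregation(p_i, interactions, params):
    """interactions: list of (q_a, e_r) pairs for the items user i has rated."""
    # Opinion-aware representation x_ia = MLP([q_a (+) e_r]); list `+` concatenates.
    xs = [relu(linear(params["Wg"], params["bg"], q_a + e_r))
          for q_a, e_r in interactions]
    # Two-layer attention network scores each x_ia against the target user p_i.
    scores = []
    for x in xs:
        hidden = relu(linear(params["W1"], params["b1"], x + p_i))
        scores.append(linear(params["w2"], params["b2"], hidden)[0])
    alphas = softmax(scores)  # normalized attention weights
    # Attention-weighted sum, followed by a non-linear projection.
    dim = len(xs[0])
    pooled = [sum(a * x[k] for a, x in zip(alphas, xs)) for k in range(dim)]
    h_I = relu(linear(params["W"], params["b"], pooled))
    return h_I, alphas

rng = random.Random(0)
d = 4  # assumed embedding size
params = {
    "Wg": rand_matrix(d, 2 * d, rng), "bg": [0.0] * d,
    "W1": rand_matrix(d, 2 * d, rng), "b1": [0.0] * d,
    "w2": rand_matrix(1, d, rng), "b2": [0.0],
    "W": rand_matrix(d, d, rng), "b": [0.0] * d,
}
p_i = [rng.uniform(-1, 1) for _ in range(d)]
interactions = [([rng.uniform(-1, 1) for _ in range(d)],
                 [rng.uniform(-1, 1) for _ in range(d)]) for _ in range(3)]
h_I, alphas = item_aggregation(p_i, interactions, params)
print(len(h_I), round(sum(alphas), 6))
```

Because the weights come out of a softmax, they are positive and sum to one, so the pooled vector is a convex combination of the interaction representations. A mean-based aggregator would correspond to replacing `alphas` with equal weights.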

-

Social Aggregation

Social aggregation uses the relationships between users in the social graph, which help model users from the social perspective (the social space). The learning of social-space user latent factors should consider the heterogeneous strengths of social relations. Therefore, we introduce an attention mechanism that selects the social friends who are most representative of a user's social information and then aggregates their information.

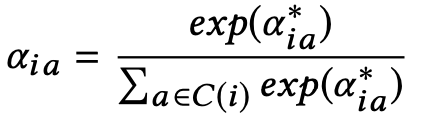

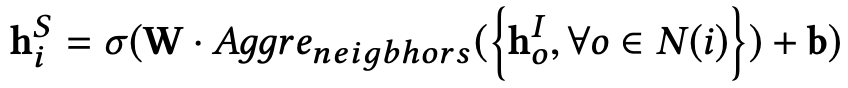

The social-space user latent factor of $u_i$, $h^S_i$, aggregates the item-space user latent factors of the users in $u_i$'s neighborhood $N(i)$, as follows:

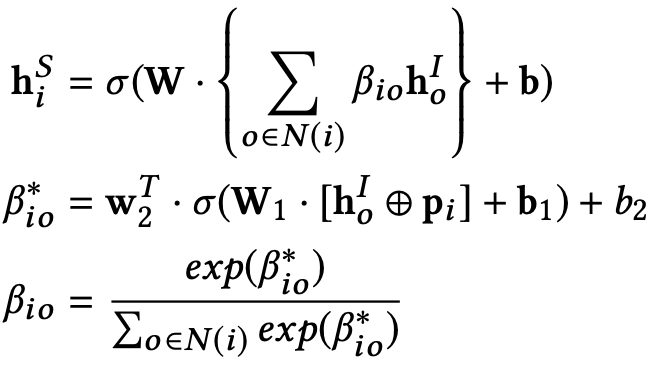

We use an attention mechanism with a two-layer neural network to pick out the users who have an important influence on $u_i$ and to model their tie strengths, relating the social attention $\beta_{io}$ to $h^I_o$ and the target user embedding $p_i$, as below.
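Written out, the two steps described above take roughly the following form. This is a reconstruction from the description, so the parameter names ($W$, $b$, $W_1$, $b_1$, $w_2$, $b_2$), the unnormalized score $\beta^*_{io}$, and the activation $\sigma$ are notational assumptions:

```latex
\begin{aligned}
h^S_i &= \sigma\Big(W \cdot \sum_{o \in N(i)} \beta_{io}\, h^I_o + b\Big), \\
\beta^*_{io} &= w_2^{\top} \cdot \sigma\big(W_1 \cdot [h^I_o \oplus p_i] + b_1\big) + b_2, \\
\beta_{io} &= \frac{\exp(\beta^*_{io})}{\sum_{o' \in N(i)} \exp(\beta^*_{io'})}.
\end{aligned}
```

The structure mirrors item aggregation: the only changes are that the aggregated vectors are the neighbors' item-space factors $h^I_o$ and that the sum runs over social neighbors instead of rated items.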

-

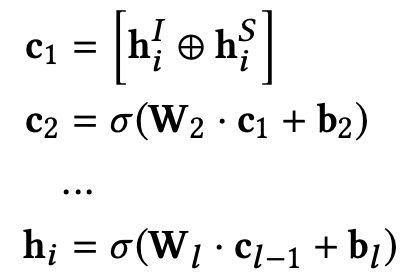

Learning User Latent Factor

It is intuitive to obtain user latent factors by combining information from both item space and social space.

We propose to combine these two latent factors into the final user latent factor via a standard MLP, where the item-space user latent factor $h^I_i$ and the social-space user latent factor $h^S_i$ are concatenated before being fed into the MLP. Formally, the user latent factor $h_i$ is defined as follows:
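A minimal sketch of this combination step, assuming a ReLU activation and hand-picked toy weights (the function names, layer sizes, and all numeric values are hypothetical, chosen only so the arithmetic is easy to follow):

```python
def linear(W, b, x):
    """Affine map W x + b, with W given as a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) + bi for row, bi in zip(W, b)]

def relu(x):
    return [max(0.0, v) for v in x]

def combine_user_factors(h_I, h_S, layers):
    """Concatenate the item-space and social-space factors, then apply an MLP."""
    c = h_I + h_S  # list `+` is concatenation: [h_I (+) h_S]
    for W, b in layers:
        c = relu(linear(W, b, c))
    return c

h_I = [1.0, 2.0]    # toy item-space factor
h_S = [0.5, -1.0]   # toy social-space factor
layers = [
    ([[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]], [0.0, 0.0]),  # 4 -> 2
    ([[1.0, -2.0], [0.5, 0.5]], [0.0, 0.0]),                      # 2 -> 2
]
h_i = combine_user_factors(h_I, h_S, layers)
print(h_i)  # [0.0, 1.25]
```

The first layer maps the concatenation to `[1.5, 1.0]`; the second gives `[-0.5, 1.25]`, which ReLU clips to `[0.0, 1.25]`.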

-

-

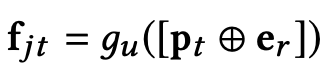

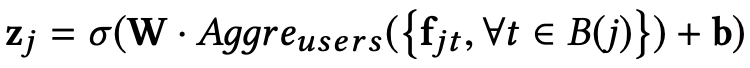

Item Modeling

The second component is item modeling, which is to learn latent factors of items. In order to consider both interactions and opinions in the user-item graph, we introduce user aggregation, which is to aggregate users’ opinions in item modeling.

-

User Aggregation

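Same as item aggregation, with the roles of users and items swapped: for each item $v_j$, the opinion-aware representations of the users who interacted with it are aggregated with attention weights to form the item latent factor $z_j$.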

-

-

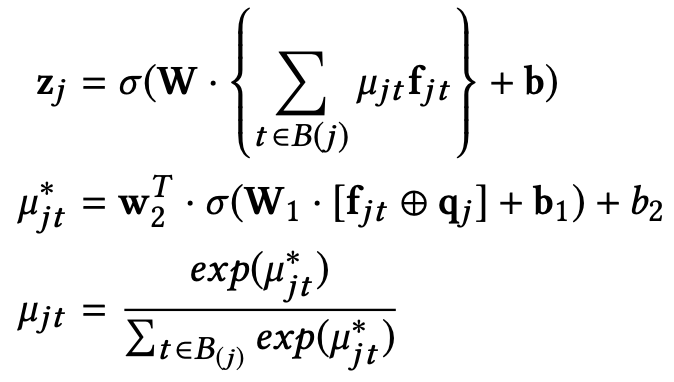

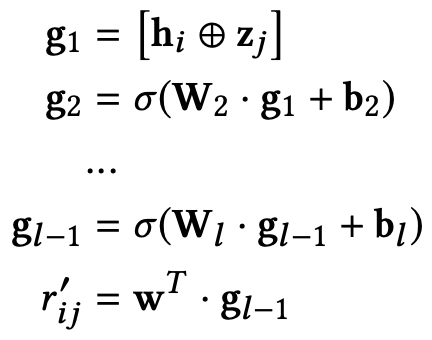

Rating Prediction

The third component is to learn model parameters via prediction by integrating user and item modeling components.

With the latent factors of users and items (i.e., $h_i$ and $z_j$), we can first concatenate them, $[h_i \oplus z_j]$, and then feed the result into an MLP for rating prediction as:
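The prediction step can be sketched as follows. The code assumes ReLU hidden layers and a final linear output without bias; the function names and the toy weights are hypothetical and serve only to make the computation concrete.

```python
def linear(W, b, x):
    """Affine map W x + b, with W given as a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) + bi for row, bi in zip(W, b)]

def relu(x):
    return [max(0.0, v) for v in x]

def predict_rating(h_i, z_j, hidden_layers, w_out):
    """Concatenate user and item factors, run the hidden layers, project to a scalar."""
    g = h_i + z_j  # [h_i (+) z_j]
    for W, b in hidden_layers:
        g = relu(linear(W, b, g))
    return sum(w * v for w, v in zip(w_out, g))

h_i = [0.0, 1.25]   # toy user latent factor
z_j = [1.0, 0.5]    # toy item latent factor
hidden_layers = [([[1.0, 1.0, 1.0, 1.0], [1.0, -1.0, 0.0, 0.0]], [0.0, 0.0])]
w_out = [1.0, 2.0]
rating = predict_rating(h_i, z_j, hidden_layers, w_out)
print(rating)  # 2.75
```

The hidden layer yields `[2.75, -1.25]`, ReLU clips it to `[2.75, 0.0]`, and the output projection returns `2.75`.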

-

-

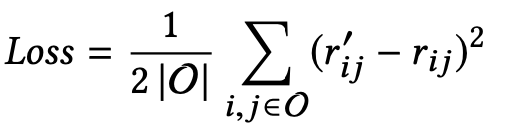

Objective Function
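For the rating prediction task, a commonly used objective is the squared error over the observed ratings. A sketch, assuming $\mathcal{O}$ denotes the set of observed user-item pairs, $r_{ij}$ the ground-truth rating, and $r'_{ij}$ the predicted rating (these symbols are notational assumptions):

```latex
\mathrm{Loss} = \frac{1}{2\,|\mathcal{O}|} \sum_{(i,j) \in \mathcal{O}} \big(r'_{ij} - r_{ij}\big)^2
```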

-

Experiment

-

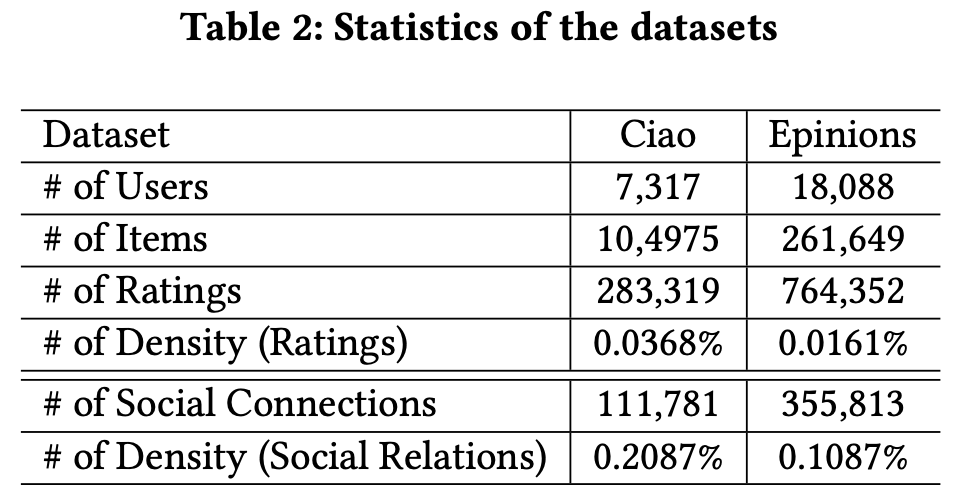

Datasets

-

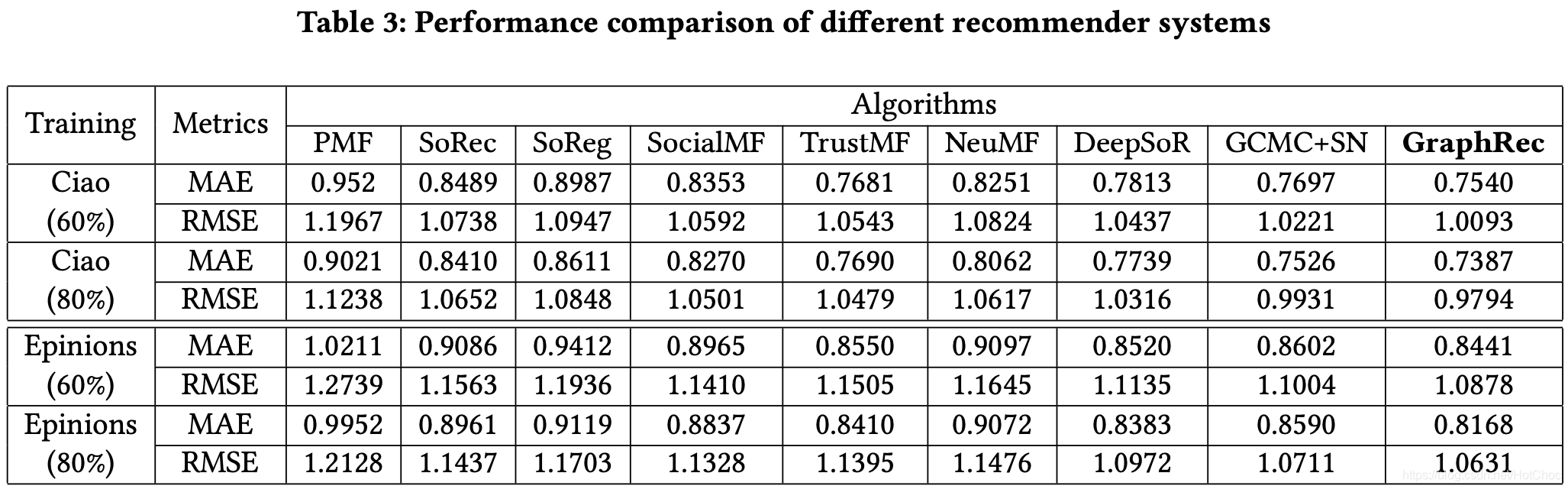

Performance Comparison of Recommender Systems

-

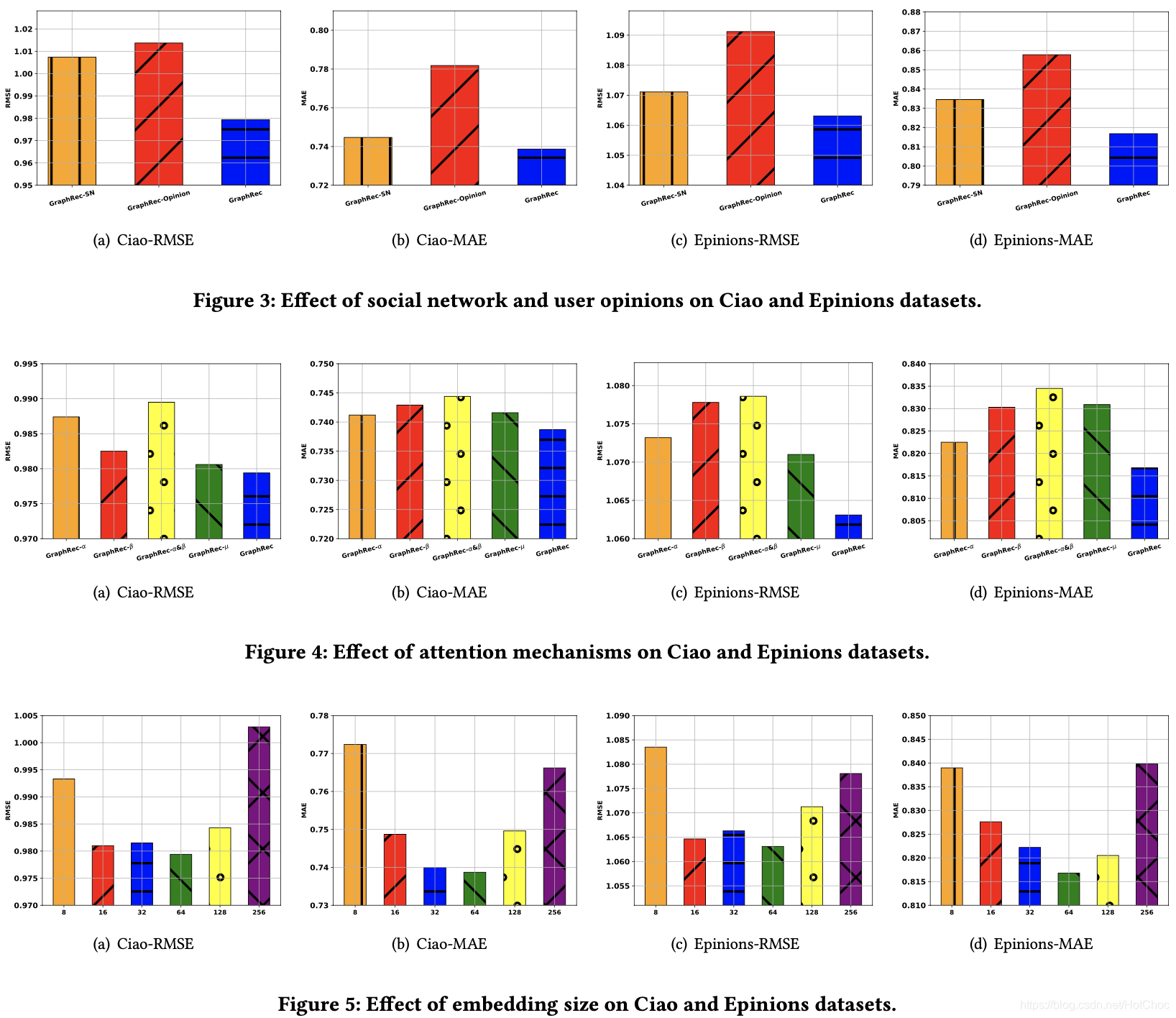

Model Analysis

- Effect of Social Network and User Opinions

- Effect of Attention Mechanisms

- Effect of Embedding Size

-

-

-

EXISTING PROBLEMS: 1. Currently we only incorporate the social graph into recommendation, while many real-world applications come with rich additional side information on both users and items. 2. We currently treat both rating and social information as static, although both are naturally dynamic.

-

IMPROVEMENT IDEAS: 1. Users and items are associated with rich attributes, so exploring graph neural networks for recommendation with attributes would be an interesting future direction. 2. Consider building dynamic graph neural networks for social recommendation with dynamic rating and social information.

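Summary: The paper proposes GraphRec, a graph neural network that combines the social graph and the user-item graph to address three challenges in social recommender systems: fusing the two graphs, jointly capturing interactions and opinions, and distinguishing the strengths of social relations. Its user modeling, item modeling, and rating prediction components together improve recommendation accuracy.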
