Opening a shell in a pod

# kubectl exec -it kubia-p465w -- bash

root@kubia-p465w:/#

root@kubia-p465w:/#

root@kubia-p465w:/# pwd

/

root@kubia-p465w:/# ls -l

total 68

-rw-r--r-- 1 root root 354 Apr 17 07:36 app.js

drwxr-xr-x 1 root root 4096 Jul 24 2017 bin

drwxr-xr-x 2 root root 4096 Jul 13 2017 boot

drwxr-xr-x 5 root root 360 Apr 18 07:05 dev

drwxr-xr-x 1 root root 4096 Apr 18 07:04 etc

drwxr-xr-x 1 root root 4096 Jul 26 2017 home

drwxr-xr-x 1 root root 4096 Jul 24 2017 lib

drwxr-xr-x 2 root root 4096 Jul 23 2017 lib64

drwxr-xr-x 2 root root 4096 Jul 23 2017 media

drwxr-xr-x 2 root root 4096 Jul 23 2017 mnt

drwxr-xr-x 1 root root 4096 Aug 18 2017 opt

dr-xr-xr-x 265 root root 0 Apr 18 07:05 proc

drwx------ 1 root root 4096 Aug 18 2017 root

drwxr-xr-x 1 root root 4096 Apr 18 07:05 run

drwxr-xr-x 2 root root 4096 Jul 23 2017 sbin

drwxr-xr-x 2 root root 4096 Jul 23 2017 srv

dr-xr-xr-x 12 root root 0 Apr 18 07:04 sys

drwxrwxrwt 1 root root 4096 Jul 24 2017 tmp

drwxr-xr-x 1 root root 4096 Aug 18 2017 usr

drwxr-xr-x 1 root root 4096 Jul 26 2017 var

Showing labels

# kubectl get pods --show-labels

NAME          READY   STATUS    RESTARTS   AGE   LABELS
kubia-p465w   1/1     Running   0          25h   run=kubia
kubia-tqrvp   1/1     Running   0          25h   run=kubia
kubia-wzmcz   1/1     Running   0          27h   run=kubia

Creating a service

YAML configuration file

# cat kubia-svc.yaml

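apiVersion: v1
kind: Service
metadata:
  name: kubia              # name of the service
spec:
  ports:
  - port: 80               # port the service itself listens on
    targetPort: 8080       # container port that connections are forwarded to
  selector:
    run: kubia             # every pod labeled run=kubia is part of this service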
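The selector is what ties this service to the three pods listed earlier with --show-labels. A Service's selector is a plain map, and it matches a pod when every key/value pair in the selector also appears among the pod's labels. A minimal Python sketch of that rule (select_pods and selector_matches are made-up helper names, not a Kubernetes API):

```python
from typing import Dict, List


def selector_matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """True if every key/value pair of the selector is present in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_pods(selector: Dict[str, str],
                pods: Dict[str, Dict[str, str]]) -> List[str]:
    """Return the (sorted) names of pods whose labels satisfy the selector."""
    if not selector:
        # A Service without a selector gets no automatically managed endpoints.
        return []
    return sorted(name for name, labels in pods.items()
                  if selector_matches(selector, labels))
```

Extra labels on a pod do not prevent a match; only missing or different selector keys do.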

Create it

# kubectl create -f kubia-svc.yaml

service/kubia created

List the services

# kubectl get svc

NAME         TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
kubernetes   ClusterIP      10.96.0.1        <none>        443/TCP          10d
kubia        ClusterIP      10.104.122.250   <none>        80/TCP           4s
kubia-http   LoadBalancer   10.98.198.100    <pending>     8080:32099/TCP   27h

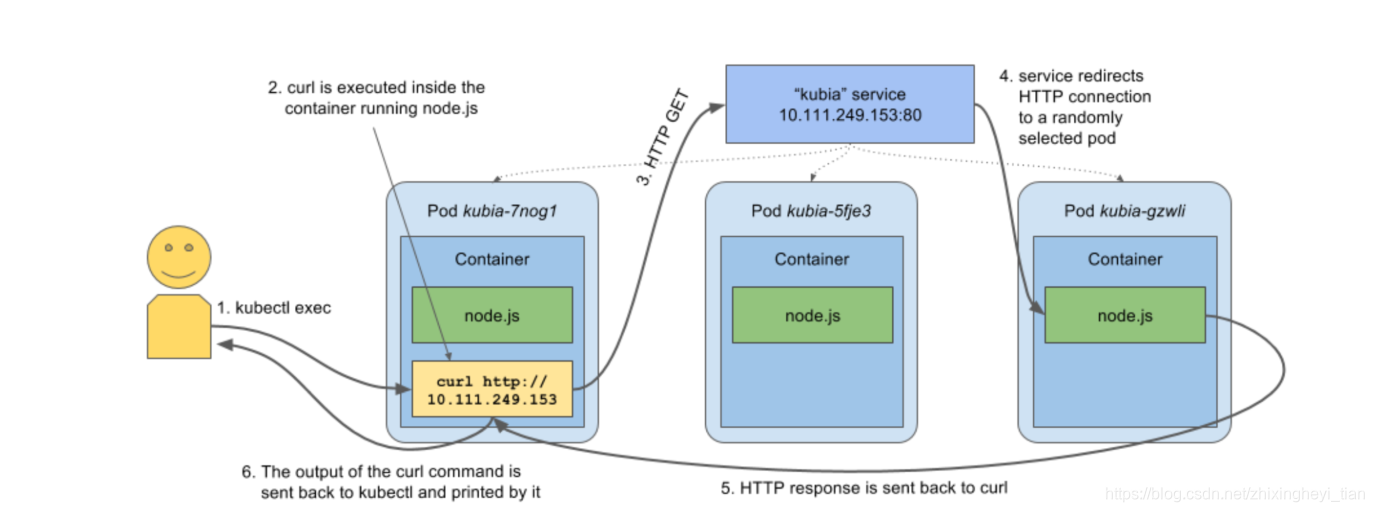

TESTING A SERVICE FROM WITHIN THE CLUSTER

So, how can we test our service from within the cluster? We want to send an HTTP request to the service and see the response. There are a few ways to do that:

- The obvious way is to create a pod that will perform the request and log the response. We can then examine the pod’s log to see what the service’s response was.

- We can ssh into one of the Kubernetes nodes and use the curl command (this method did not seem to work here), or

- We can execute the curl command inside one of our existing pods through the kubectl exec command.
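For the first option, the pod could run a small client like the Python sketch below. It is hypothetical. It assumes an image with Python 3 installed, since the kubia image itself runs a Node.js app (app.js). It also assumes the service is reachable at its cluster IP from kubectl get svc above. Each answer is printed, so kubectl logs on that pod would show which backing pod served every request. The helper names (parse_pod_name, fetch, probe) are made up for illustration.

```python
import re
import urllib.request
from collections import Counter
from typing import Callable, Optional

# The kubia app replies "You've hit <pod-name>"; accept a straight or curly apostrophe.
HIT_PATTERN = re.compile(r"You['\u2019]ve hit (\S+)")


def parse_pod_name(body: str) -> Optional[str]:
    """Return the pod name from a kubia response body, or None if absent."""
    match = HIT_PATTERN.search(body)
    return match.group(1) if match else None


def fetch(url: str, timeout: float = 5.0) -> str:
    """Perform one HTTP GET and return the decoded response body."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


def probe(url: str, attempts: int = 10,
          fetcher: Callable[[str], str] = fetch) -> Counter:
    """Send `attempts` requests and count which pod answered each one."""
    hits: Counter = Counter()
    for _ in range(attempts):
        name = parse_pod_name(fetcher(url)) or "<unrecognized>"
        hits[name] += 1
        # Printing to stdout means `kubectl logs <probe-pod>` shows each answer.
        print(f"{url} -> {name}")
    return hits
```

Inside the cluster, probe("http://10.104.122.250", attempts=10) would print one line per request and return the per-pod counts. The fetcher parameter exists so the parsing and counting can be exercised without a cluster.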

EXECUTING COMMANDS IN RUNNING CONTAINERS

# kubectl exec kubia-wzmcz -- curl -s 10.104.122.250:80

You've hit kubia-tqrvp

# kubectl exec kubia-wzmcz -- curl -s 10.104.122.250:80

You've hit kubia-p465w

Alternatively, first open a shell in one of the pods:

# kubectl exec -it kubia-p465w -- bash

root@kubia-p465w:/#

root@kubia-p465w:/opt/yarn/bin# curl 10.104.122.250:80

You've hit kubia-wzmcz

root@kubia-p465w:/opt/yarn/bin# curl 10.104.122.250:80

You've hit kubia-tqrvp

root@kubia-p465w:/opt/yarn/bin# curl 10.104.122.250:80

You've hit kubia-tqrvp

root@kubia-p465w:/opt/yarn/bin# curl 10.104.122.250:80

Figure: Using kubectl exec to run curl in one of the pods and test a connection to the service

CONFIGURING SESSION AFFINITY ON THE SERVICE

If we execute the same command a few more times, the requests should land on different pods, because the service proxy normally forwards each connection to a randomly selected backing pod, even if the connections are opened by the same client. Because the choice is random, the same pod can also answer twice in a row, as kubia-tqrvp does above.

If, on the other hand, we want all requests made by a certain client to be redirected to the same pod every time, we can set the service’s sessionAffinity property to ClientIP (instead of None, which is the default):

apiVersion: v1
kind: Service
spec:
  sessionAffinity: ClientIP
  ...
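To make the difference between the two settings concrete, here is a toy Python model. It is not kube-proxy's actual implementation, which programs iptables/IPVS rules, and ServiceProxySim is a made-up name. It also ignores the affinity timeout that real ClientIP affinity has (sessionAffinityConfig.clientIP.timeoutSeconds, 10800 seconds by default).

```python
import random
from typing import Dict, List


class ServiceProxySim:
    """Toy model of a service choosing a backing pod for each new connection.

    Illustration only: real kube-proxy programs iptables/IPVS rules and
    expires ClientIP affinity after a timeout, which is ignored here.
    """

    def __init__(self, pods: List[str], session_affinity: str = "None",
                 seed: int = 0) -> None:
        if session_affinity not in ("None", "ClientIP"):
            raise ValueError("sessionAffinity must be 'None' or 'ClientIP'")
        self.pods = list(pods)
        self.session_affinity = session_affinity
        self._rng = random.Random(seed)      # seeded so runs are repeatable
        self._sticky: Dict[str, str] = {}    # client IP -> pod chosen for it

    def route(self, client_ip: str) -> str:
        """Return the pod that would handle a new connection from client_ip."""
        if self.session_affinity == "ClientIP":
            # First connection from this client picks a random pod;
            # every later connection from the same IP reuses that pod.
            if client_ip not in self._sticky:
                self._sticky[client_ip] = self._rng.choice(self.pods)
            return self._sticky[client_ip]
        # Default ("None"): each connection picks a pod independently.
        return self._rng.choice(self.pods)
```

With "None", repeated calls from one client IP spread over all pods. With "ClientIP", they always return the pod picked on the first call.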

Environment variables in a container

# kubectl exec kubia-p465w -- env

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

HOSTNAME=kubia-p465w

SPARK_PI_UI_SVC_PORT_4040_TCP_ADDR=10.109.107.84

KUBIA_HTTP_SERVICE_HOST=10.98.198.100

KUBIA_HTTP_SERVICE_PORT=8080

KUBERNETES_SERVICE_HOST=10.96.0.1

KUBERNETES_PORT_443_TCP=tcp://10.96.0.1:443

KUBERNETES_PORT_443_TCP_PORT=443

KUBERNETES_PORT_443_TCP_ADDR=10.96.0.1

SPARK_PI_UI_SVC_SERVICE_PORT=4040

KUBIA_HTTP_PORT=tcp://10.98.198.100:8080

SPARK_PI_UI_SVC_SERVICE_HOST=10.109.107.84

SPARK_PI_UI_SVC_SERVICE_PORT_SPARK_DRIVER_UI_PORT=4040

SPARK_PI_UI_SVC_PORT=tcp://10.109.107.84:4040

KUBIA_HTTP_PORT_8080_TCP_ADDR=10.98.198.100

KUBERNETES_SERVICE_PORT=443

SPARK_PI_UI_SVC_PORT_4040_TCP=tcp://10.109.107.84:4040

SPARK_PI_UI_SVC_PORT_4040_TCP_PORT=4040

KUBIA_HTTP_PORT_8080_TCP=tcp://10.98.198.100:8080

KUBIA_HTTP_PORT_8080_TCP_PROTO=tcp

KUBIA_HTTP_PORT_8080_TCP_PORT=8080

KUBERNETES_SERVICE_PORT_HTTPS=443

KUBERNETES_PORT=tcp://10.96.0.1:443

KUBERNETES_PORT_443_TCP_PROTO=tcp

SPARK_PI_UI_SVC_PORT_4040_TCP_PROTO=tcp

NPM_CONFIG_LOGLEVEL=info

NODE_VERSION=7.10.1

YARN_VERSION=0.24.4

HOME=/root
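Kubernetes injects <NAME>_SERVICE_HOST and <NAME>_SERVICE_PORT variables for every service that already existed when the pod was started. The service name is upper-cased and dashes become underscores. That is why the listing above has KUBIA_HTTP_* and SPARK_PI_UI_SVC_* but no KUBIA_SERVICE_HOST: the kubia service was created after these pods, so only pods created later would see its variables. A small Python sketch of how a client could use them (service_address is a hypothetical helper):

```python
import os
from typing import Mapping, Optional, Tuple


def service_env_prefix(service_name: str) -> str:
    """kubia-http -> KUBIA_HTTP (upper-case, dashes become underscores)."""
    return service_name.upper().replace("-", "_")


def service_address(service_name: str,
                    environ: Mapping[str, str] = os.environ
                    ) -> Optional[Tuple[str, int]]:
    """Read <NAME>_SERVICE_HOST / <NAME>_SERVICE_PORT for a service.

    Returns None when the variables are missing, e.g. because the service
    was created after this pod started.
    """
    prefix = service_env_prefix(service_name)
    host = environ.get(f"{prefix}_SERVICE_HOST")
    port = environ.get(f"{prefix}_SERVICE_PORT")
    if host is None or port is None:
        return None
    return host, int(port)
```

With the variables shown above, service_address("kubia-http") gives ("10.98.198.100", 8080), while service_address("kubia") returns None.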

FQDN: fully qualified domain name

CONNECTING TO THE SERVICE THROUGH ITS FQDN

In kubia.default.svc.cluster.local, default is the namespace the service is defined in, and svc.cluster.local is a configurable cluster domain suffix.

root@kubia-3inly:/# curl http://kubia.default.svc.cluster.local

You’ve hit kubia-5asi2

root@kubia-3inly:/# curl http://kubia.default

You’ve hit kubia-3inly

root@kubia-3inly:/# curl http://kubia

You’ve hit kubia-8awf3

We can hit the service simply by using its name as the hostname in the requested URL. We can omit the namespace and the svc.cluster.local suffix because of how the DNS resolver inside each pod’s container is configured. Look at the /etc/resolv.conf file in the container and you’ll understand:

root@kubia-p465w:/# cat /etc/resolv.conf

nameserver 10.96.0.10

search default.svc.cluster.local svc.cluster.local cluster.local

options ndots:5
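The search line and options ndots:5 explain why short names work. A name with fewer than 5 dots is first tried with each search domain appended, and only afterwards as-is. The Python sketch below models that expansion in simplified form. candidate_names is a hypothetical helper, and real resolvers such as glibc and musl differ in details.

```python
from typing import List, Sequence


def candidate_names(name: str, search: Sequence[str], ndots: int) -> List[str]:
    """Simplified model of resolv.conf search-list expansion, in lookup order."""
    if name.endswith("."):
        # A trailing dot marks the name as absolute: the search list is skipped.
        return [name.rstrip(".")]
    expanded = [f"{name}.{domain}" for domain in search]
    if name.count(".") >= ndots:
        # "Enough" dots: try the name as-is first, then the search list.
        return [name] + expanded
    # Too few dots: try every search domain first, the bare name last.
    return expanded + [name]
```

The resolver stops at the first candidate that resolves, so curl http://kubia succeeds on kubia.default.svc.cluster.local. With ndots:5, even kubia.default.svc.cluster.local (4 dots) is first tried with the search suffixes appended, and a trailing dot avoids those extra lookups.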

Curling the service works, but pinging it doesn’t. This is because the service’s cluster IP is a virtual IP, which only has meaning when combined with the service port.

Service endpoints

# kubectl get endpoints kubia

NAME    ENDPOINTS                                          AGE
kubia   172.17.0.10:8080,172.17.0.7:8080,172.17.0.9:8080   27h

# kubectl get pods -o wide

NAME          READY   STATUS    RESTARTS   AGE    IP            NODE       NOMINATED NODE   READINESS GATES
kubia-p465w   1/1     Running   0          2d5h   172.17.0.10   minikube   <none>           <none>
kubia-tqrvp   1/1     Running   0          2d5h   172.17.0.9    minikube   <none>           <none>
kubia-wzmcz   1/1     Running   0          2d7h   172.17.0.7    minikube   <none>           <none>
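Comparing the two listings shows that each endpoint is the IP of a pod matched by the selector, combined with the service's targetPort (8080). A small Python sketch that cross-references the two outputs (parse_endpoints and endpoints_to_pods are hypothetical helpers; the data in the check is copied from the listings above):

```python
from typing import Dict, List, Tuple


def parse_endpoints(column: str) -> List[Tuple[str, int]]:
    """Split the ENDPOINTS column ("ip:port,ip:port,...") into (ip, port) pairs."""
    pairs = []
    for item in column.split(","):
        ip, _, port = item.strip().rpartition(":")
        pairs.append((ip, int(port)))
    return pairs


def endpoints_to_pods(column: str, pod_ips: Dict[str, str]) -> Dict[str, str]:
    """Map each "ip:port" endpoint to the pod owning that IP ("?" if unknown)."""
    ip_to_pod = {ip: name for name, ip in pod_ips.items()}
    return {f"{ip}:{port}": ip_to_pod.get(ip, "?")
            for ip, port in parse_endpoints(column)}
```

Here every endpoint resolves to one of the three kubia pods, and every port is 8080, the targetPort from kubia-svc.yaml.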

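Keeping a pod running

# kubectl run dnsutils --image=tutum/dnsutils --command -- sleep infinity

sleep infinity keeps the container (and therefore the pod) running, so you can kubectl exec into it later to run DNS tools. Older kubectl versions needed --generator=run-pod/v1 to create a bare pod; newer versions create a pod by default and no longer accept that flag.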

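This article explores how to reach services inside a Kubernetes cluster from a pod, including running curl tests with kubectl exec, configuring a service's session affinity, and connecting to a service through its FQDN. It shows the role of environment variables in a container and explains how to inspect a service's backing pods through its endpoints.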

