3.2 HTTP请求的Python实现

3.2.1 urllib实现

1. 首先实现一个完整的请求与响应模型

# Get

import urllib

response = urllib.request.urlopen("http://www.zhihu.com")

html = response.read()

print(html)

# Get

import urllib

# 请求

request = urllib.request.Request(url="http://www.zhihu.com")

# 响应

response = urllib.request.urlopen(request)

html = response.read()

print(html)

# Post

import urllib

url = "https://www.xxxx.com/login"

postdata = {"username": "tmp",

"password": "tmp",

}

# info需要url编码

data = urllib.parse.urlencode(postdata)

req = urllib.request.Request(url, data)

response = url.request.urlopen(req)

html = response.read()

2. 请求头headers处理

import urllib

url = "http://www.xxxx.com/login"

user_agent = """

Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko)

Chrome/63.0.3239.26 Safari/537.36 Core/1.63.6821.400 QQBrowser/10.3.3040.400

"""

referer = "http://www.xxxx.com"

postdata = {"username": "tmp",

"password": "tmp",

}

data = urllib.parse.urlencode(postdata)

req = urllib.request.Request(url)

# 将user_agent, referer写入头信息

req.add_header("User-Agent", user_agent)

req.add_header("Referer", referer)

req.add_data(data)

response = urllib.request.urlopen(req)

html = response.read()

3. Cookie处理

import http.cookiejar

import urllib

cookie = http.cookiejar.CookieJar()

opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(cookie))

response = opener.open("http://www.zhihu.com")

for item in cookie:

print(item.name + ": " + item.value)

# 手动添加Cookie

import urllib

opener = urllib.request.build_opener()

opener.addheaders.append(("Cookie", "email=" + "xxxxxx@163.com"))

req = urllib.request.Request("http://www.zhihu.com")

response = opener.open(req)

print(response.headers)

retdata = response.read()

4. Timeout设置超时

import urllib

req = urllib.request.Request("http://www.zhihu.com")

response = urllib.request.urlopen(req, timeout=2)

html = response.read()

print(html)

5. 获取HTTP响应码

import urllib

try:

response = urllib.request.urlopen("http://www.qq.com")

print(response)

except urllib.error.HTTPError as e:

print("Error coed: {}".format(e.code))

except Exception as e:

print("Exception: ".format(e))

6. 重定向

import urllib

response = urllib.request.urlopen("http://www.zhihu.cn")

isRedirected = response.geturl() == "http://www.zhihu.cn"

print(isRedirected)

# 禁止重定向

import urllib

class RedirectHandler(urllib.request.HTTPRedirectHandler):

def http_error_301(self, req, fp, code, msg, headers):

pass

def http_error_302(self, req, fp, code, msg, headers):

result = urllib.request.HTTPRedirectHandler.http_error_301(self, req, fp, code, msg, headers)

result.status = code

result.newurl = result.geturl()

return result

opener = urllib.request.build_opener(RedirectHandler)

response = opener.open("http://www.zhihu.cn")

print(response.read())

7. Proxy的设置

# 全局proxy

import urllib

proxy = urllib.request.ProxyHandler({"http": "127.0.0.1:8087"})

opener = urllib.request.build_opener(proxy, )

urllib.request.install_opener(opener)

response = urllib.request.urlopen("http://www.zhihu.com")

print(response.read())

# 局部proxy

import urllib

proxy = urllib.request.ProxyHandler({"http": "127.0.0.1:8087"})

opener = urllib.request.build_opener(proxy, )

response = opener.open("http://www.zhihu.com")

print(response.read())

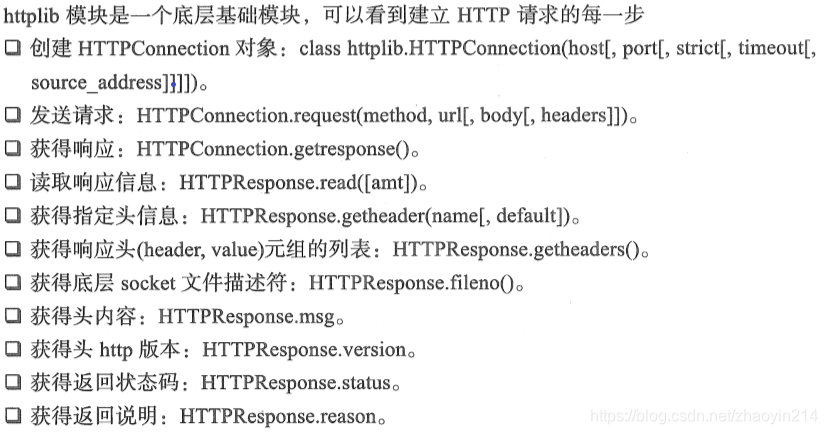

3.2.2 httplib/urllib实现

# Get

import httplib

conn = None

try:

conn = httplib.HTTPConnection("www.zhihu.com")

conn.request("GET", "/")

response = conn.getresponse()

print(response.status, response.reason)

print("-" * 40)

headers = response.getheaders()

for h in headers:

print(h)

print("-" * 40)

print(response.msg)

except Exception as e:

print(e)

finally:

if conn:

conn.close()

# Post

import httplib

import urllib

conn = None

try:

params = urllib.parse.urlencode({"name": "qiye", "age": 22})

headers = {"Content-type": "application/x-www-form-urlencoded",

"Accept": "text/plain"

}

conn = httplib.HTTPConnection("www.zhihu.com", 80, timeout=3)

conn.request("POST", "/login", params, headers)

response = conn.getresponse()

print(response.getheaders()) # 获取头信息

print(response.status)

print(response.read())

except Exception as e:

print(e)

finally:

if conn:

conn.close()

3.2.3 Requests

1. 请求与响应

# Get

import requests

r = requests.get("http://www.baidu.com")

print(r.content)

# Post

import requests

postdata = {"key": "value"}

r = requests.post("http://www.xxxx.com/login", data=postdata)

print(r.content)

r = requests.put("http://www.xxxxxx.com/put", data={"key": "value"})

r = requests.delete("http://www.baidu.com/")

print(r.content)

r = requests.head("http://www.xxxxxx.com/")

print(r.content)

r = requests.options("http://www.baidu.com/")

print(r.text)

help(r)

import requests

payload = {"Keywords": "blog:qiyeboy", "pageindex": 1}

r = requests.get("http://zzk.cnblogs.com/s/blogpost", params=payload)

print(r.url)

2. 响应与编码

import requests

r = requests.get("http://www.baidu.com")

print("content --> {}".format(r.content))

print("text --> {}".format(r.text))

print("encoding --> {}".format(r.encoding))

r.encoding = "utf-8"

print("new text --> {}".format(r.text))

import requests

import chardet

r = requests.get("http://www.baidu.com")

print(chardet.detect(r.content))

r.encoding = chardet.detect(r.content)["encoding"]

print(r.text)

import requests

r = requests.get("http://www.baidu.com", stream = True)

print(r.raw.read(10))

3. 请求头headers处理

import requests

user_agent = "Mozilla/5.0 (Windows NT 10.0; WOW64)"

headers = {"User-Agent": user_agent}

response = requests.get("http://www.baidu.com", headers=headers)

print(response.content)

4. 响应码code和响应头headers处理

响应码为4xx或5xx时,raise_for_status()抛出异常;响应码为200时,raise_for_status()返回None。

import requests

response = requests.get("http://www.baidu.com")

if response.status_code == requests.codes.ok:

print(response.status_code) # 响应码

print(response.headers) # 响应头

print(response.headers.get("content-type")) # 推荐使用该方式获取其中某个字段,无该字段时返回None

print(response.headers["content-type"]) # 不推荐使用该方式获取,无该字段时抛出异常

else:

response.raise_for_status()

5. Cookie处理

# 获取Cookie

import requests

user_agent = "Chrome/63.0.3239.26 (Windows NT 10.0; WOW64)"

headers = {"User_Agent": user_agent}

response = requests.get("http://www.baidu.com", headers=headers)

# 遍历所有cookie字段的值

for cookie in response.cookies.keys():

print(cookie + ": " + response.cookies.get(cookie))

# 发送Cookie

import requests

user_agent = "Chrome/63.0.3239.26 (Windows NT 10.0; WOW64)"

headers = {"User_Agent": user_agent}

cookies = dict(name="qiye", age="10")

response = requests.get("http://www.baidu.com", headers=headers, cookies=cookies)

print(response.text)

# Session

import requests

loginUrl = "http://www.xxxxxx.com/login"

s = requests.Session()

# 首先访问登录界面,作为游客,服务器会先分配一个cookie

response = s.get(loginUrl, allow_redirects=True)

data = {"name": "qiye", "passwd": "qiye"}

# 向登录链接发送post请求,验证成功,游客权限转为会员权限

response = s.post(loginUrl, data=data, allow_redirects=True)

print(response.text)

6. 重定向与历史信息

# 通过response.history字段查看历史信息

import requests

response = requests.get("http://github.com")

print(response.url)

print(response.status_code)

print(response.history)

7. 超时设置

import requests

try:

response = requests.get("http://www.google.com", timeout=2)

except Exception as e:

print(e)

8. 代理设置

import requests

proxies = {

"http": "http://0.10.1.10:3128",

"https": "https://10.10.1.10:1080",

}

response = requests.get("http://www.google.com", proxies=proxies)

HTTP请求Python实践

HTTP请求Python实践

1675

1675

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?