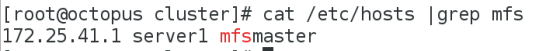

172.25.41.1----server1----master node

172.25.41.2----server2----chunkserver node

172.25.41.3----server3----chunkserver node

172.25.41.4----server4----backup master node

172.25.41.250----octopus----client node

Set up the master node in the same way on both hosts, 172.25.41.1 and 172.25.41.4.

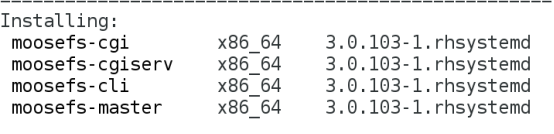

yum install -y moosefs-master-3.0.103-1.rhsystemd.x86_64.rpm moosefs-cli-3.0.103-1.rhsystemd.x86_64.rpm moosefs-cgiserv-3.0.103-1.rhsystemd.x86_64.rpm moosefs-cgi-3.0.103-1.rhsystemd.x86_64.rpm

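Add the corresponding hostname resolution entries on both hosts as well.

Edit the systemd unit file and start the service.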
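Name resolution for the nodes could be set up along the following lines. This is a minimal sketch that assumes /etc/hosts is the mechanism in use (the post does not say which one was used); the function names `print_mfs_hosts` and `add_mfs_hosts` are hypothetical helpers, not part of MooseFS.

```shell
#!/usr/bin/env bash
# Sketch: print hosts-file entries for the machines listed in this article.
# Assumption: /etc/hosts is used for name resolution.
print_mfs_hosts() {
    cat <<'EOF'
172.25.41.1   server1
172.25.41.2   server2
172.25.41.3   server3
172.25.41.4   server4
172.25.41.250 octopus
EOF
}

# Append only the entries whose hostname is not yet present in the file.
# $1: path to the hosts file (defaults to /etc/hosts)
add_mfs_hosts() {
    local target="${1:-/etc/hosts}"
    print_mfs_hosts | while read -r ip name; do
        grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$target" 2>/dev/null \
            || printf '%s %s\n' "$ip" "$name" >> "$target"
    done
}

# Usage (as root, on every node): add_mfs_hosts
```

Running the helper twice leaves the file unchanged the second time, so it is safe to repeat on a node that is already configured.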

vim /usr/lib/systemd/system/moosefs-master.service

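Set the ExecStart line as follows: ExecStart=/usr/sbin/mfsmaster -a start

The -a option lets mfsmaster recover its metadata automatically, which fixes the case where metadata.mfs.back was not restored after the service stopped abnormally.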

systemctl daemon-reload

systemctl start moosefs-master
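The unit file change can also be made without an editor. The sketch below assumes GNU sed (as shipped on RHEL-like systems) and a unit file with a single ExecStart line; `enable_mfsmaster_autorecovery` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: rewrite the ExecStart line of the unit file non-interactively.
# $1: path to the unit file (defaults to the path used in this article)
enable_mfsmaster_autorecovery() {
    local unit="${1:-/usr/lib/systemd/system/moosefs-master.service}"
    # Replace whatever ExecStart currently says with the "-a start" form.
    sed -i 's|^ExecStart=.*|ExecStart=/usr/sbin/mfsmaster -a start|' "$unit"
}

# Usage (as root):
#   enable_mfsmaster_autorecovery
#   systemctl daemon-reload && systemctl start moosefs-master
```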

Configure the yum repositories for the high-availability packages

[octopus]

name=octopus

baseurl=http://172.25.41.250/octopus

gpgcheck=0

[HA]

name=HA

baseurl=http://172.25.41.250/octopus/addons/HighAvailability

gpgcheck=0

[RS]

name=RS

baseurl=http://172.25.41.250/octopus/addons/ResilientStorage

gpgcheck=0
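The repository definitions above can be written to a repo file in one step. In this sketch the file name /etc/yum.repos.d/octopus.repo is an assumption (the post does not name the file), and `write_ha_repo` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: write the three repository sections from this article to a file.
# $1: target repo file (the default name is an assumption)
write_ha_repo() {
    local repo_file="${1:-/etc/yum.repos.d/octopus.repo}"
    cat > "$repo_file" <<'EOF'
[octopus]
name=octopus
baseurl=http://172.25.41.250/octopus
gpgcheck=0

[HA]
name=HA
baseurl=http://172.25.41.250/octopus/addons/HighAvailability
gpgcheck=0

[RS]
name=RS
baseurl=http://172.25.41.250/octopus/addons/ResilientStorage
gpgcheck=0
EOF
}

# Usage (as root, on server1 and server4): write_ha_repo && yum repolist
```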

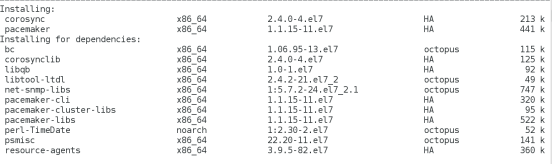

Install pacemaker and corosync on the master nodes server1 and server4

yum install pacemaker corosync -y

Set up passwordless SSH between server1 and server4

ssh-keygen

ssh-copy-id server1

ssh-copy-id server4

Install pcs on server1 and server4

yum install -y pcs

systemctl start pcsd

systemctl enable pcsd

Set the password for hacluster, the cluster administration user

passwd hacluster

On server1, authenticate the nodes as the hacluster user, then create and start the cluster

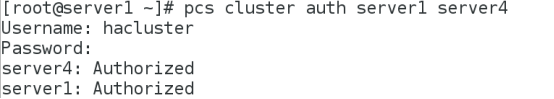

pcs cluster auth server1 server4

Create and start the cluster

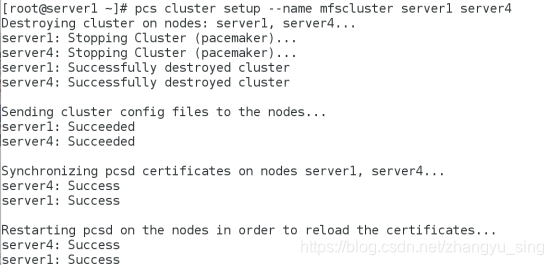

pcs cluster setup --name mfscluster server1 server4

pcs cluster start --all

pcs property set stonith-enabled=false

crm_verify -L -V

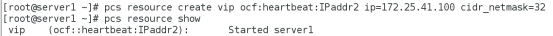

Create the VIP

pcs resource create vip ocf:heartbeat:IPaddr2 ip=172.25.41.100 cidr_netmask=32 op monitor interval=30s

Check the status

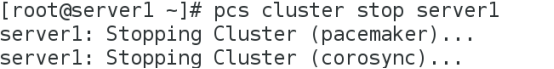

Now stop the cluster on server1

pcs cluster stop server1

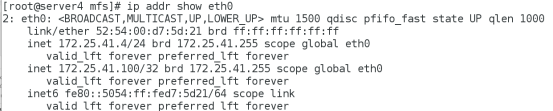

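The VIP moves to server4 while server1 is offline. When server1 is started again, the VIP does not move back.

Set up the MFS file system and share its data over iSCSI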
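To confirm where the VIP is running without reading the full status screen, the output of `pcs status` can be filtered. The sketch below assumes the resource line has the form `vip (ocf::heartbeat:IPaddr2): Started server4`, which may differ between pcs versions; `resource_node` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: read `pcs status` output on stdin and print the node on which
# the given resource is started.
# Assumption: resource lines look like
#   "<name> (<agent>): Started <node>"
# $1: resource name
resource_node() {
    awk -v res="$1" '$1 == res && $3 == "Started" { print $4 }'
}

# Usage on a cluster node: pcs status | resource_node vip
```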

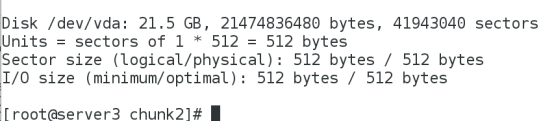

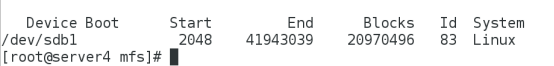

Add a disk to server3

Share it over iSCSI

yum install -y targetcli

Configure the target

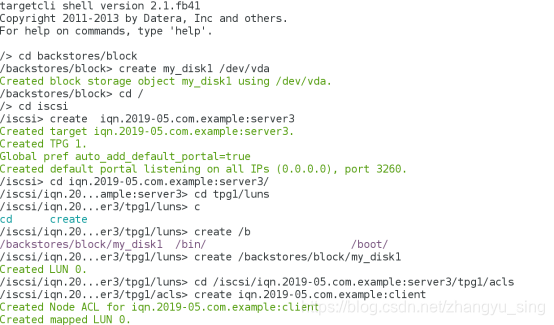

targetcli

cd backstores/block

create my_disk1 /dev/vda

cd /

cd iscsi

create iqn.2019-05.com.example:server3

cd tpg1/luns

create /backstores/block/my_disk1

cd /iscsi/iqn.2019-05.com.example:server3/tpg1/acls

create iqn.2019-05.com.example:client

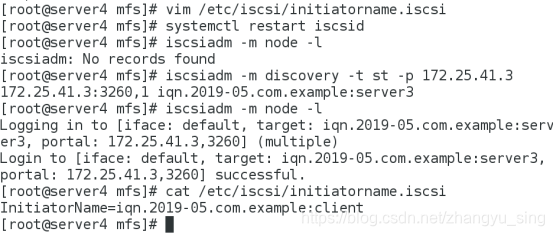

server1 and server4 act as the iSCSI clients (initiators)

systemctl restart iscsid

iscsiadm -m node -l

iscsiadm -m discovery -t st -p 172.25.41.3

iscsiadm -m node -l

cat /etc/iscsi/initiatorname.iscsi
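Because the target only has an ACL for iqn.2019-05.com.example:client, the login succeeds only if the InitiatorName in /etc/iscsi/initiatorname.iscsi matches that IQN. A minimal sketch for setting it, assuming both masters reuse the single IQN from the ACL as the post implies; `set_initiator_name` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: write the initiator name that the target's ACL expects.
# $1: initiator IQN
# $2: path to the initiator name file (defaults to the standard location)
set_initiator_name() {
    local iqn="$1"
    local file="${2:-/etc/iscsi/initiatorname.iscsi}"
    printf 'InitiatorName=%s\n' "$iqn" > "$file"
}

# Usage (as root, before discovery and login):
#   set_initiator_name iqn.2019-05.com.example:client
#   systemctl restart iscsid
```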

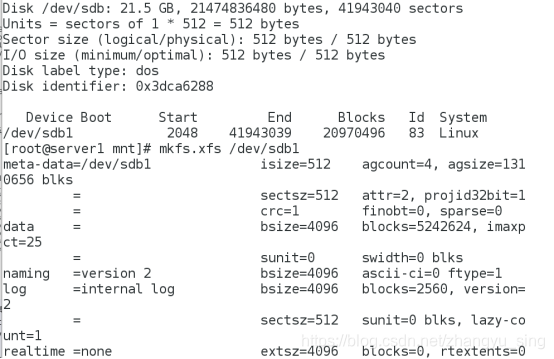

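On server1, create a partition on the shared disk and format it (the cluster resource later mounts it as xfs)

Copy the MFS data to /dev/sdb1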

mount /dev/sdb1 /mnt

cd /var/lib/mfs

cp -p * /mnt

chown mfs:mfs /mnt

umount /mnt

mount /dev/sdb1 /var/lib/mfs/

systemctl start moosefs-master

Apply the same configuration on server4

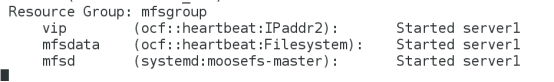

Start the cluster and add the MFS resources

pcs resource create mfsdata ocf:heartbeat:Filesystem device=/dev/sdb1 directory=/var/lib/mfs/ fstype=xfs op monitor interval=30s

Group the services so that they all run on the same host

pcs resource create mfsd systemd:moosefs-master op monitor interval=1min

pcs resource group add mfsgroup vip mfsdata mfsd

Add fencing

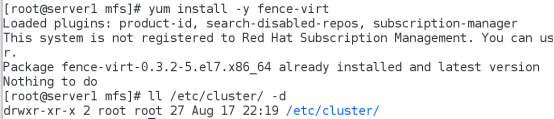

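On server1 and server4, install the fence agent and create the key directory (/etc/cluster, the destination of the scp commands in this section)

On the physical host, install fence-virtd and its components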

yum install fence-virtd.x86_64 fence-virtd-libvirt.x86_64 fence-virtd-multicast.x86_64 fence-virtd-serial.x86_64 -y

Create the directory, generate the fence configuration (make sure to select br0 as the network interface), and generate the key

mkdir /etc/cluster/

cd /etc/cluster/

fence_virtd -c

dd if=/dev/urandom of=/etc/cluster/fence_xvm.key bs=128 count=1

scp fence_xvm.key root@172.25.41.1:/etc/cluster/

scp fence_xvm.key root@172.25.41.4:/etc/cluster/
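The key generation step can be wrapped so that the result is checked before it is copied to the cluster nodes. In this sketch the `chmod 600` and the size check are additions that are not in the original steps, and `make_fence_key` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: create the key directory, generate a 128-byte random key,
# and print the size of the resulting file in bytes.
# $1: key directory (defaults to /etc/cluster, as used in this article)
make_fence_key() {
    local dir="${1:-/etc/cluster}"
    mkdir -p "$dir"
    dd if=/dev/urandom of="$dir/fence_xvm.key" bs=128 count=1 2>/dev/null
    # Addition: restrict access, since the key is a shared secret.
    chmod 600 "$dir/fence_xvm.key"
    wc -c < "$dir/fence_xvm.key"
}

# Usage (as root, on the physical host): make_fence_key
```

The same key file has to be present on the physical host and on both cluster nodes, so the key should be generated once and then copied, not regenerated per node.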

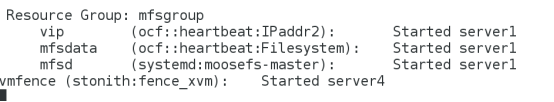

Add the fence resource

pcs stonith create vmfence fence_xvm pcmk_host_map="server1:server1;server4:server4" op monitor interval=1min

Enable fencing

pcs property set stonith-enabled=true

crm_verify -L -V

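Check the status

Now crash the kernel on server1. server1 is fenced and rebooted, and the services migrate to the other node.

Troubleshooting

vmfence_start_0 on server1 'unknown error'

Make sure the configuration file is written to the configuration directory before the service is started. When configuring a second time, the following commands can be used

Check the firewall

Use the following command to clear the failure record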

pcs resource cleanup vmfence
