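Tools are an important component of an agent: they extend what a large language model (LLM) can do. Strictly speaking, however, the LLM never calls a tool itself. It only decides, based on the context, which tool to call and produces the call arguments in structured form; the tool is actually executed by the agent.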
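To make this division of labor concrete, here is a library-free sketch (not LangChain's API). The "model output" is only data: a tool name plus a JSON string of arguments, in the style of OpenAI-compatible function calling. The agent-side code is what parses the arguments and runs the function. `TOOLS` and `execute_tool_call` are hypothetical names used for illustration.

```python
import json


def add(a: int, b: int) -> int:
    return a + b


# Hypothetical agent-side registry: tool name -> plain Python function.
TOOLS = {"add": add}

# A model's tool call is only a structured request; nothing has run yet.
# The shape follows OpenAI-compatible function calling (arguments as a JSON string).
model_output = {
    "id": "call_1",
    "type": "function",
    "function": {"name": "add", "arguments": '{"a": 42, "b": 7}'},
}


def execute_tool_call(call: dict):
    """Agent-side step: look up the requested tool and run it with the parsed arguments."""
    name = call["function"]["name"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    args = json.loads(call["function"]["arguments"])
    return TOOLS[name](**args)


print(execute_tool_call(model_output))  # 49
```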

1. Overview

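In the LLM context, a tool is essentially an executable function. A tool can be created with the @tool decorator. The following defines a simple addition tool: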

```python
from langchain_core.tools import tool


@tool
def add(a: int, b: int) -> int:
    """Add two numbers."""
    return a + b
```
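Roughly speaking, the decorator extracts three things from the function: a name (the function name), a description (the docstring), and an argument schema built from the type hints. This is why the docstring matters, since the model reads it to decide when to use the tool. The sketch below illustrates the idea using only the standard `inspect` module. `describe_tool` is a hypothetical helper, not part of LangChain, whose real implementation builds a pydantic model and differs in detail.

```python
import inspect

# Map a few common Python annotations to JSON Schema type names.
_JSON_TYPES = {int: "integer", float: "number", str: "string", bool: "boolean"}


def describe_tool(func) -> dict:
    """Build a name/description/parameters record from a function's signature and docstring."""
    sig = inspect.signature(func)
    properties = {}
    required = []
    for name, param in sig.parameters.items():
        properties[name] = {"type": _JSON_TYPES.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:  # no default value -> required argument
            required.append(name)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def add(a: int, b: int) -> int:
    """Add two numbers."""
    return a + b


print(describe_tool(add))
```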

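The decorator turns the function into a tool object. That object can be called directly with a dict of arguments, although langchain_core marks this call style as deprecated in favor of invoke:

```python
add({"a": 2, "b": 5})
```

The recommended way is to call invoke:

```python
add.invoke({"a": 2, "b": 5})
```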

If the tool is invoked with a tool call, it returns a LangChain ToolMessage:

```python
tool_call = {
    "type": "tool_call",
    "id": "1",
    "args": {"a": 2, "b": 5},
}

add.invoke(tool_call)
```

Output:

ToolMessage(content='7', name='add', tool_call_id='1')
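Note two things about this result. The content is the string '7', not the integer 7. Also, tool_call_id echoes the id of the request, which is how the model later matches each result to the call that produced it. Below is a library-free sketch of that wrapping step. The `ToolResult` dataclass is a hypothetical stand-in for ToolMessage, with fields mirroring the output above.

```python
from dataclasses import dataclass


@dataclass
class ToolResult:
    """Hypothetical stand-in for a ToolMessage; fields mirror the output shown above."""
    content: str
    name: str
    tool_call_id: str


def run_tool_call(func, tool_call: dict) -> ToolResult:
    # Execute with the structured arguments, then stringify the result,
    # because message content sent back to the model is text.
    output = func(**tool_call["args"])
    return ToolResult(content=str(output), name=func.__name__, tool_call_id=tool_call["id"])


def add(a: int, b: int) -> int:
    return a + b


msg = run_tool_call(add, {"type": "tool_call", "id": "1", "args": {"a": 2, "b": 5}})
print(msg)  # ToolResult(content='7', name='add', tool_call_id='1')
```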

2. Using Tools in an Agent

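When tools are used in an agent, the agent relies on the model to decide, from the context, which tool to call. The following agent uses two tools: a search engine and the addition tool defined above.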

```python
import os

from langchain.chat_models import init_chat_model
from langchain_core.tools import tool
from langchain_tavily import TavilySearch
from langgraph.prebuilt import create_react_agent


@tool
def add(a: int, b: int) -> int:
    """Add two numbers."""
    return a + b


# Create the search engine tool
os.environ["TAVILY_API_KEY"] = "tvly-*"
search_tool = TavilySearch(max_results=2)

# Create the LLM (qwen-plus via DashScope's OpenAI-compatible endpoint)
os.environ["OPENAI_API_KEY"] = "sk-*"
model = init_chat_model(
    model="qwen-plus",
    model_provider="openai",
    base_url="https://dashscope.aliyuncs.com/compatible-mode/v1",
)

tools = [search_tool, add]
agent = create_react_agent(model, tools=tools)

result = agent.invoke({"messages": [{"role": "user", "content": "what's 42 + 7?"}]})
print(result)
```

Result:

{'messages': [HumanMessage(content="what's 42 + 7?", additional_kwargs={}, response_metadata={}, id='18c4964c-f9c8-4f6f-9429-3badc2a792a9'), AIMessage(content='', additional_kwargs={'tool_calls': [{'id': 'call_a771141e7a284414a7f8ce', 'function': {'arguments': '{"a": 42, "b": 7}', 'name': 'add'}, 'type': 'function', 'index': 0}], 'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 25, 'prompt_tokens': 1871, 'total_tokens': 1896, 'completion_tokens_details': None, 'prompt_tokens_details': {'audio_tokens': None, 'cached_tokens': 0}}, 'model_name': 'qwen-plus', 'system_fingerprint': None, 'id': 'chatcmpl-e7581940-9449-49cf-9c6f-d0a850d17a4c', 'service_tier': None, 'finish_reason': 'tool_calls', 'logprobs': None}, id='run--7dc40639-52d3-458b-8668-fbf6a2a75f1f-0', tool_calls=[{'name': 'add', 'args': {'a': 42, 'b': 7}, 'id': 'call_a771141e7a284414a7f8ce', 'type': 'tool_call'}], usage_metadata={'input_tokens': 1871, 'output_tokens': 25, 'total_tokens': 1896, 'input_token_details': {'cache_read': 0}, 'output_token_details': {}}), ToolMessage(content='49', name='add', id='a8d5e314-f183-4165-9ea2-cbce014a3d71', tool_call_id='call_a771141e7a284414a7f8ce'), AIMessage(content='The sum of 42 and 7 is 49.', additional_kwargs={'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 14, 'prompt_tokens': 1912, 'total_tokens': 1926, 'completion_tokens_details': None, 'prompt_tokens_details': {'audio_tokens': None, 'cached_tokens': 0}}, 'model_name': 'qwen-plus', 'system_fingerprint': None, 'id': 'chatcmpl-2d0df4af-704c-44a8-84b0-877a09797921', 'service_tier': None, 'finish_reason': 'stop', 'logprobs': None}, id='run--eb926e5e-6f91-4efa-bb9d-99b02f4cceb6-0', usage_metadata={'input_tokens': 1912, 'output_tokens': 14, 'total_tokens': 1926, 'input_token_details': {'cache_read': 0}, 'output_token_details': {}})]}
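The message list above shows the ReAct cycle:

1. The model first returns an AIMessage with tool_calls and empty content.
2. The agent runs the tool and appends a ToolMessage.
3. The model is called again and this time answers in plain text.

Below is a minimal library-free sketch of that loop. The scripted `fake_model` stands in for the LLM. It and the dict-based messages are hypothetical simplifications of LangChain's message classes.

```python
def add(a: int, b: int) -> int:
    return a + b


TOOLS = {"add": add}


def fake_model(messages: list[dict]) -> dict:
    """Scripted stand-in for an LLM: request a tool first, answer once a tool result is present."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"role": "ai", "content": f"The sum of 42 and 7 is {last['content']}.", "tool_calls": []}
    return {
        "role": "ai",
        "content": "",
        "tool_calls": [{"id": "call_1", "name": "add", "args": {"a": 42, "b": 7}}],
    }


def react_loop(user_input: str, max_steps: int = 5) -> list[dict]:
    messages = [{"role": "user", "content": user_input}]
    for _ in range(max_steps):
        ai = fake_model(messages)
        messages.append(ai)
        if not ai["tool_calls"]:  # no tool requested: the model has answered
            return messages
        for call in ai["tool_calls"]:  # the agent, not the model, executes the tools
            result = TOOLS[call["name"]](**call["args"])
            messages.append({"role": "tool", "content": str(result), "tool_call_id": call["id"]})
    raise RuntimeError("agent did not finish within max_steps")


history = react_loop("what's 42 + 7?")
print([m["role"] for m in history])  # ['user', 'ai', 'tool', 'ai']
print(history[-1]["content"])  # The sum of 42 and 7 is 49.
```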

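Many LLMs perform poorly once the number of available tools exceeds a certain threshold. It therefore helps to keep the model focused by letting it use only a subset of all tools, chosen at runtime from the context configuration. In the following code the tool set contains two tools, add and search_tool. When the agent is created, a function (configure_model) is passed instead of a model instance; on each run it binds only the tools named in the runtime context: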

```python
import os
from dataclasses import dataclass
from typing import Literal

from langchain.chat_models import init_chat_model
from langchain_core.tools import tool
from langchain_tavily import TavilySearch
from langgraph.prebuilt import create_react_agent
from langgraph.prebuilt.chat_agent_executor import AgentState
from langgraph.runtime import Runtime


# First create the two tools, add and search_tool
@tool
def add(a: int, b: int) -> int:
    """Add two numbers."""
    return a + b


os.environ["TAVILY_API_KEY"] = "tvly-*"
search_tool = TavilySearch(max_results=2, name="search_tool")

# The full tool set
tools = [search_tool, add]

# Create the LLM instance
os.environ["OPENAI_API_KEY"] = "sk-*"
model = init_chat_model(
    model="qwen-plus",
    model_provider="openai",
    base_url="https://dashscope.aliyuncs.com/compatible-mode/v1",
)


# Runtime context: only valid for a single invocation (one conversation turn)
@dataclass
class CustomContext:
    tools: list[Literal["search_tool", "add"]]


# Pick, based on the runtime context, the subset of `tools` the model may use
def configure_model(state: AgentState, runtime: Runtime[CustomContext]):
    """Configure the model with tools based on runtime context."""
    selected_tools = [
        tool
        for tool in tools
        if tool.name in runtime.context.tools
    ]
    return model.bind_tools(selected_tools)


# Create the agent: `tools` registers every tool the agent can execute,
# while configure_model decides which of them the model actually sees
agent = create_react_agent(
    configure_model,
    tools=tools,
)

# Check which tools the model can see
result = agent.invoke(
    {
        "messages": [
            {
                "role": "user",
                "content": "What tools do you have access to?",
            }
        ]
    },
    context=CustomContext(tools=["search_tool"]),
)

result["messages"][-1].text()
```

Result:

"I have access to one main tool:\n\n**search_tool** - A comprehensive search engine that provides:\n- Web search with accurate, trusted results\n- Advanced search capabilities including domain filtering, time range filters, and search depth control\n- Image search functionality when needed\n- Support for different topics (general, news, finance)\n- Date range filtering with start and end dates\n- Favicon inclusion for UI purposes\n\nThis tool is particularly useful for answering questions about current events, finding specific information, and providing citation-backed results. It can be configured for basic or advanced search depth depending on the complexity of the query.\n\nIs there something specific you'd like me to search for?"

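At its core, configure_model is a filter over the full tool list by name. Below is a library-free sketch of that selection step. `Tool`, `CustomContext`, and `select_tools` are hypothetical stand-ins. Unlike the original, this sketch also rejects names in the context that match no registered tool. That is one way to catch typos in the runtime configuration; it is an assumption, not LangGraph behavior.

```python
from dataclasses import dataclass


@dataclass
class Tool:
    """Minimal stand-in for a tool object; only the name matters for selection."""
    name: str


@dataclass
class CustomContext:
    tools: list[str]


def select_tools(all_tools: list[Tool], context: CustomContext) -> list[Tool]:
    """Keep only the tools whose names are listed in the runtime context, in registry order."""
    known = {t.name for t in all_tools}
    unknown = set(context.tools) - known
    if unknown:
        raise ValueError(f"unknown tool names in context: {sorted(unknown)}")
    return [t for t in all_tools if t.name in context.tools]


all_tools = [Tool("search_tool"), Tool("add")]
selected = select_tools(all_tools, CustomContext(tools=["search_tool"]))
print([t.name for t in selected])  # ['search_tool']
```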
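3. Using Tools in a Workflow

3.1 Binding Tools

When tools are used in a workflow, you must call bind_tools to explicitly bind the intended tool set to the LLM. The search tool is used below to illustrate binding: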

```python
import os

from langchain.chat_models import init_chat_model
from langchain_tavily import TavilySearch

os.environ["TAVILY_API_KEY"] = "tvly-*"
search_tool = TavilySearch(max_results=2, name="search_tool")

os.environ["OPENAI_API_KEY"] = "sk-*"
model = init_chat_model(
    model="qwen-plus",
    model_provider="openai",
    base_url="https://dashscope.aliyuncs.com/compatible-mode/v1",
)

# Bind the tool set to the model; the model can now request calls to search_tool
model_with_tools = model.bind_tools(tools=[search_tool])

result = model_with_tools.invoke("who is current president of American? please look up internet")

# The model only returns the call request; executing it is up to our code
tool_call = result.tool_calls[0]
search_tool.invoke(tool_call)
```

Result:

ToolMessage(content='{"query": "current president of the United States", "follow_up_questions": null, "answer": null, "images": [], "results": [{"url": "https://www.usa.gov/presidents", "title": "Presidents, vice presidents, and first ladies | USAGov", "content": "The 47th and current president of the United States is Donald John Trump. He was sworn into office on January 20, 2025.", "score": 0.8952393, "raw_content": null}, {"url": "https://en.wikipedia.org/wiki/President_of_the_United_States", "title": "President of the United States - Wikipedia", "content": "Donald Trump is the 47th and current president since January 20, 2025. Contents. 1 History and development. 1.1 Origins; 1.2 1789–1933; 1.3 Imperial presidency", "score": 0.86052185, "raw_content": null}], "response_time": 1.11, "request_id": "3b8772b6-18ea-41a7-9892-ffdfa7166cb0"}', name='search_tool', tool_call_id='call_965d0da4a4884fa7b8eb93')
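bind_tools does not execute anything. It attaches the tools' schemas to each request so that the model knows what it may call. With an OpenAI-compatible endpoint such as the DashScope one used here, each tool ends up in the request's `tools` array in the function-calling format sketched below. The single `query` parameter is an illustrative simplification; TavilySearch's real schema has more fields. `to_openai_tool` is a hypothetical helper.

```python
import json


def to_openai_tool(name: str, description: str, parameters: dict) -> dict:
    """Wrap a tool spec in the OpenAI-style function-calling format used in request bodies."""
    return {
        "type": "function",
        "function": {"name": name, "description": description, "parameters": parameters},
    }


# Illustrative, simplified schema for the search tool (the real one has more fields).
search_spec = to_openai_tool(
    "search_tool",
    "Search the web and return relevant results.",
    {
        "type": "object",
        "properties": {"query": {"type": "string", "description": "Search query"}},
        "required": ["query"],
    },
)

# Sketch of the request body a bound model sends: the messages plus the tool list.
request_body = {
    "model": "qwen-plus",
    "messages": [{"role": "user", "content": "who is current president of American? please look up internet"}],
    "tools": [search_spec],
}
print(json.dumps(request_body, indent=2))
```

The model answers with a tool call whose arguments follow this schema. That tool call is what result.tool_calls[0] holds in the code above before it is passed to search_tool.invoke.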

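3.2 Creating a Tool Node

The LLM only decides which tool to call, so a workflow needs a dedicated node that actually calls the tools. You can use ToolNode directly to wrap the tools in such a node:

```python
from langgraph.prebuilt import ToolNode

tools = [search_tool]
tool_node = ToolNode(tools=tools)  # create the tool node
```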
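Conceptually, a tool node does three things:

1. It reads the tool_calls of the last AI message.
2. It runs each requested tool.
3. It returns one tool message per call, in order, each carrying the matching tool_call_id.

The library-free sketch below illustrates this with hypothetical names. Reporting a failed call back to the model as message content, instead of raising, is one design choice. ToolNode's own behavior here is configurable through its handle_tool_errors parameter, and the error text below is made up.

```python
def add(a: int, b: int) -> int:
    return a + b


TOOLS = {"add": add}


def tool_node(state: dict) -> dict:
    """Run every tool call requested by the last AI message and return the results as messages."""
    last = state["messages"][-1]
    results = []
    for call in last.get("tool_calls", []):
        try:
            content = str(TOOLS[call["name"]](**call["args"]))
        except Exception as exc:  # report the failure to the model instead of crashing the graph
            content = f"Error: {exc!r}"
        results.append({"role": "tool", "content": content, "name": call["name"], "tool_call_id": call["id"]})
    return {"messages": results}


state = {
    "messages": [
        {
            "role": "ai",
            "content": "",
            "tool_calls": [
                {"id": "c1", "name": "add", "args": {"a": 1, "b": 2}},
                {"id": "c2", "name": "multiply", "args": {"a": 3, "b": 4}},  # not registered
            ],
        }
    ]
}
out = tool_node(state)
print([(m["tool_call_id"], m["content"]) for m in out["messages"]])
```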

3.3 Using Tools

The following code builds a simple chatbot with an integrated search engine:

import os

from langchain_tavily import TavilySearch

from langchain_openai import ChatOpenAI

from typing import Annotated

from typing_extensions import TypedDict

from langgraph.graph import StateGraph, START, END

from langgraph.graph.message import add_messages

import jsonfrom langchain_core.messages import ToolMessage

from langgraph.prebuilt import ToolNode, tools_conditionos.environ["TAVILY_API_KEY"] = "tvly-*"

tool = TavilySearch(max_results=2) #创建工具

tools = [tool]

llm = ChatOpenAI(

model = 'qwen-plus',

api_key = "sk-*",

base_url = "https://dashscope.aliyuncs.com/compatible-mode/v1")

llm_with_tools = llm.bind_tools(tools) #大模型绑定工具集"""

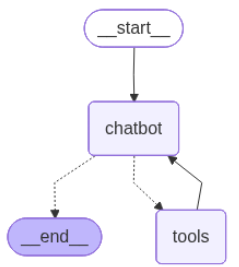

工作流如下图所示:

"""

class State(TypedDict):

messages: Annotated[list, add_messages]graph_builder = StateGraph(State)

def chatbot(state: State): #聊天节点

return {"messages": [llm_with_tools.invoke(state["messages"])]}graph_builder.add_node("chatbot", chatbot)

tool_node = ToolNode(tools=[tool,]) #工具节点

graph_builder.add_node("tools", tool_node)

graph_builder.add_conditional_edges(

"chatbot",

tools_condition,

)

graph_builder.add_edge("tools", "chatbot")

graph_builder.add_edge(START, "chatbot")

graph = graph_builder.compile()
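The conditional edge uses tools_condition. It routes to the node named "tools" when the last AI message contains tool calls and to END otherwise. The tools → chatbot edge then sends the tool results back to the model. Below is a library-free sketch of that routing rule. `route_after_chatbot` is a hypothetical name, and END is modeled by its string value.

```python
END = "__end__"  # same value as LangGraph's END constant


def route_after_chatbot(state: dict) -> str:
    """Go to the tool node if the model asked for tools, otherwise finish the run."""
    last = state["messages"][-1]
    if last.get("tool_calls"):
        return "tools"
    return END


needs_tool = {
    "messages": [
        {"role": "ai", "content": "", "tool_calls": [{"id": "1", "name": "search", "args": {"query": "LangGraph"}}]}
    ]
}
final = {"messages": [{"role": "ai", "content": "Here is what I found.", "tool_calls": []}]}
print(route_after_chatbot(needs_tool))  # tools
print(route_after_chatbot(final))  # __end__
```

With the real graph, a run starts with graph.invoke({"messages": [{"role": "user", "content": "your question"}]}). It alternates between chatbot and tools until this condition routes to END.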

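4. Customizing Tools

The @tool decorator also lets you customize a tool, for example by giving it a name or by explicitly specifying its input schema.

4.1 Specifying a Name

When @tool turns a function into a tool, the function name is used as the tool name by default. You can override it as needed, for example: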

```python
from langchain_core.tools import tool


@tool("add_tool")
def add(a: int, b: int) -> int:
    """add two numbers."""
    return a + b


print(add)
```

Result:

name='add_tool' description='add two numbers.' args_schema=<class 'langchain_core.utils.pydantic.add_tool'> func=<function add at 0x7fb9230b2f20>
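The same decorator works both bare (@tool) and with an argument (@tool("add_tool")). The sketch below shows the usual pure-Python pattern behind such dual-use decorators. `simple_tool` and `ToolSpec` are hypothetical and far simpler than LangChain's implementation.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Union


@dataclass
class ToolSpec:
    name: str
    description: str
    func: Callable


def simple_tool(name_or_func: Union[str, Callable, None] = None):
    """Usable as @simple_tool or @simple_tool("custom_name")."""

    def wrap(func: Callable, name: Optional[str]) -> ToolSpec:
        # Default to the function name; the docstring becomes the description.
        return ToolSpec(name=name or func.__name__, description=(func.__doc__ or "").strip(), func=func)

    if callable(name_or_func):  # bare @simple_tool: we received the function itself
        return wrap(name_or_func, None)
    return lambda func: wrap(func, name_or_func)  # @simple_tool("name"): return the real decorator


@simple_tool
def add(a: int, b: int) -> int:
    """add two numbers."""
    return a + b


@simple_tool("add_tool")
def add_named(a: int, b: int) -> int:
    """add two numbers."""
    return a + b


print(add.name, add_named.name)  # add add_tool
```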

4.2 Explicitly Specifying the Input Schema

The following code defines AddInputSchema as the input schema and passes it to the decorator through args_schema:

```python
from langchain_core.tools import tool
from pydantic import BaseModel, Field


class AddInputSchema(BaseModel):
    """Add two numbers"""

    a: int = Field(description="First operand")
    b: int = Field(description="Second operand")


@tool(args_schema=AddInputSchema)
def add(a: int, b: int) -> int:
    """add two numbers."""
    return a + b


print(add)
```

Output:

name='add' description='add two numbers.' args_schema=<class '__main__.AddInputSchema'> func=<function add at 0x7fb92380b600>
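An explicit args_schema gives each argument a description the model can read. The arguments the model produces are also validated against the schema before the function runs. In the original, pydantic performs that validation. The library-free sketch below uses a dataclass with field metadata instead, so `AddInput`, `schema_descriptions`, and `validate_args` are hypothetical names. The checks are deliberately minimal (exact field set and exact types only), which makes them stricter than pydantic's default lax mode; pydantic would, for example, coerce the string "2" to 2.

```python
from dataclasses import dataclass, field, fields


@dataclass
class AddInput:
    """Add two numbers"""
    a: int = field(metadata={"description": "First operand"})
    b: int = field(metadata={"description": "Second operand"})


def schema_descriptions(schema) -> dict:
    """Collect the per-argument descriptions that would be shown to the model."""
    return {f.name: f.metadata.get("description", "") for f in fields(schema)}


def validate_args(schema, args: dict):
    """Raise on missing, unexpected, or wrongly typed arguments; otherwise build the schema object."""
    expected = {f.name: f.type for f in fields(schema)}
    missing = expected.keys() - args.keys()
    extra = args.keys() - expected.keys()
    if missing or extra:
        raise ValueError(f"missing={sorted(missing)}, unexpected={sorted(extra)}")
    for name, typ in expected.items():
        if not isinstance(args[name], typ):
            raise TypeError(f"{name} should be {typ.__name__}, got {type(args[name]).__name__}")
    return schema(**args)


print(schema_descriptions(AddInput))  # {'a': 'First operand', 'b': 'Second operand'}
print(validate_args(AddInput, {"a": 2, "b": 5}))  # AddInput(a=2, b=5)
```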
