揭秘!如何在Docker和K8S中高效调用GPU资源

参考:

安装Docker插件

https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/latest/install-guide.html

Unbntu使用Docker调用GPU

https://blog.youkuaiyun.com/dw14132124/article/details/140534628

https://www.cnblogs.com/li508q/p/18444582

- 环境查看

系统环境

# lsb_release -a

NoLSBmodulesareavailable.

Distributor ID:Ubuntu

Description:Ubuntu22.04.4LTS

Release:22.04

Codename:jammy

# cat /etc/redhat-release

RockyLinuxrelease9.3(BlueOnyx)

软件环境

# kubectl version

Client Version:v1.30.2

Kustomize Version:v5.0.4-0.20230601165947-6ce0bf390ce3

Server Version:v1.25.16

WARNING:versiondifferencebetweenclient(1.30)andserver(1.25)exceedsthesupportedminorversionskewof+/-1

- 安装Nvidia的Docker插件

在有GPU资源的主机安装,改主机作为K8S集群的Node

设置源

# curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg \

&& curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | \

sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | \

sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

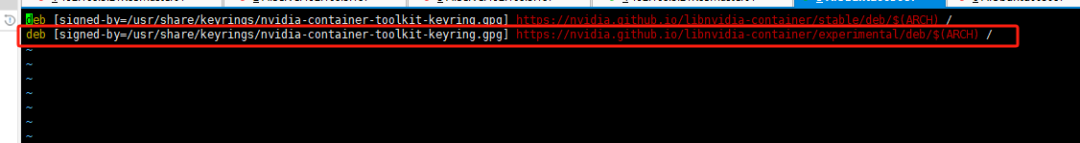

配置存储库以使用实验性软件包

# sed -i -e '/experimental/ s/^#//g' /etc/apt/sources.list.d/nvidia-container-toolkit.list

修改后把以下注释取消

更新

# sudo apt-getupdate

安装Toolkit

# sudo apt-get install -y nvidia-container-toolkit

配置Docker以使用Nvidia

# sudo nvidia-ctk runtime configure --runtime=docker

INFO[0000] Loading config from /etc/docker/daemon.json

INFO[0000] Wrote updated config to /etc/docker/daemon.json

INFO[0000] It is recommended that docker daemon be restarted.

这条命令会修改配置文件/etc/docker/daemon.json添加runtimes配置

# cat /etc/docker/daemon.json

{

"insecure-registries": [

"192.168.3.61"

],

"registry-mirrors": [

"https://7sl94zzz.mirror.aliyuncs.com",

"https://hub.atomgit.com",

"https://docker.awsl9527.cn"

],

"runtimes": {

"nvidia": {

"args": [],

"path": "nvidia-container-runtime"

}

}

重启docker

# systemctl daemon-reload

# systemctl restart docker

- 使用Docker调用GPU

验证配置

启动一个镜像查看GPU信息

~# docker run --rm --runtime=nvidia --gpus all ubuntu nvidia-smi

Sat Oct 1201:33:332024

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 555.42.06 Driver Version: 555.42.06 CUDA Version: 12.5 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA GeForce RTX 4090 Off | 00000000:01:00.0 Off | Off |

| 0% 53C P2 59W / 450W | 4795MiB / 24564MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID TypeProcess name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| No running processes found |

+-----------------------------------------------------------------------------------------+

该输出结果显示了 GPU 的详细信息,包括型号、温度、功率使用情况和内存使用情况等。这表明 Docker 容器成功地访问到了 NVIDIA GPU,并且 NVIDIA Container Toolkit 安装和配置成功。

- 使用K8S集群Pod调用GPU

以下操作在K8S机器的Master节点操作

安装K8S插件

下载最新版本

$ kubectl create -f https://raw.githubusercontent.com/NVIDIA/k8s-device-plugin/v0.16.1/deployments/static/nvidia-device-plugin.yml

yml文件内容如下

# cat nvidia-device-plugin.yml

apiVersion:apps/v1

kind:DaemonSet

metadata:

name:nvidia-device-plugin-daemonset

namespace:kube-system

spec:

selector:

matchLabels:

name:nvidia-device-plugin-ds

updateStrategy:

type:RollingUpdate

template:

metadata:

labels:

name:nvidia-device-plugin-ds

spec:

tolerations:

-key:nvidia.com/gpu

operator:Exists

effect:NoSchedule

# Mark this pod as a critical add-on; when enabled, the critical add-on

# scheduler reserves resources for critical add-on pods so that they can

# be rescheduled after a failure.

# See https://kubernetes.io/docs/tasks/administer-cluster/guaranteed-scheduling-critical-addon-pods/

priorityClassName:"system-node-critical"

containers:

-image:nvcr.io/nvidia/k8s-device-plugin:v0.16.1

name:nvidia-device-plugin-ctr

env:

-name:FAIL_ON_INIT_ERROR

value:"false"

securityContext:

allowPrivilegeEscalation:false

capabilities:

drop: ["ALL"]

volumeMounts:

-name:device-plugin

mountPath:/var/lib/kubelet/device-plugins

volumes:

-name:device-plugin

hostPath:

path:/var/lib/kubelet/device-plugins

使用DaemonSet方式部署在每一台node服务器部署

查看Pod日志

# kubectl logs -f nvidia-device-plugin-daemonset-8bltf -n kube-system

I1012 02:15:37.1710561 main.go:199] Starting FS watcher.

I1012 02:15:37.1712391 main.go:206] Starting OS watcher.

I1012 02:15:37.1721771 main.go:221] Starting Plugins.

I1012 02:15:37.1722361 main.go:278] Loading configuration.

I1012 02:15:37.1732241 main.go:303] Updating config withdefault resource matching patterns.

I1012 02:15:37.1737171 main.go:314]

Running with config:

{

"version": "v1",

"flags": {

"migStrategy": "none",

"failOnInitError": false,

"mpsRoot": "",

"nvidiaDriverRoot": "/",

"nvidiaDevRoot": "/",

"gdsEnabled": false,

"mofedEnabled": false,

"useNodeFeatureAPI": null,

"deviceDiscoveryStrategy": "auto",

"plugin": {

"passDeviceSpecs": false,

"deviceListStrategy": [

"envvar"

],

"deviceIDStrategy": "uuid",

"cdiAnnotationPrefix": "cdi.k8s.io/",

"nvidiaCTKPath": "/usr/bin/nvidia-ctk",

"containerDriverRoot": "/driver-root"

}

},

"resources": {

"gpus": [

{

"pattern": "*",

"name": "nvidia.com/gpu"

}

]

},

"sharing": {

"timeSlicing": {}

}

}

I1012 02:15:37.1737601 main.go:317] Retrieving plugins.

E1012 02:15:37.1740521factory.go:87] Incompatible strategy detected auto

E1012 02:15:37.1740861factory.go:88] If thisis a GPU node, did you configure the NVIDIA Container Toolkit?

E1012 02:15:37.1740961factory.go:89] You can check the prerequisites at: https://github.com/NVIDIA/k8s-device-plugin#prerequisites

E1012 02:15:37.1741041factory.go:90] You can learn how to set the runtime at: https://github.com/NVIDIA/k8s-device-plugin#quick-start

E1012 02:15:37.1741131factory.go:91] If thisis not a GPU node, you should set up a toleration or nodeSelector to only deploy this plugin on GPU nodes

I1012 02:15:37.1741231 main.go:346] No devices found. Waiting indefinitely.

驱动失败,错误提示已经清楚说明了失败原因

- 该Node部署GPU节点即该Node没有GPU资源

- 该Node有GPU资源,没有安装Docker驱动

没有GPU资源的节点肯定无法使用,但是已经有GPU资源的Node节点也会报这个错误

有GPU节点的修复方法,修改配置文件添加配置

# cat /etc/docker/daemon.json

{

"insecure-registries": [

"192.168.3.61"

],

"registry-mirrors": [

"https://7sl94zzz.mirror.aliyuncs.com",

"https://hub.atomgit.com",

"https://docker.awsl9527.cn"

],

"default-runtime": "nvidia",

"runtimes": {

"nvidia": {

"args": [],

"path": "/usr/bin/nvidia-container-runtime"

}

}

}

关键配置是以下行

再次查看Pod日志

# kubectl logs -f nvidia-device-plugin-daemonset-mp5ql -n kube-system

I1012 02:22:00.9902461 main.go:199] Starting FS watcher.

I1012 02:22:00.9902781 main.go:206] Starting OS watcher.

I1012 02:22:00.9903731 main.go:221] Starting Plugins.

I1012 02:22:00.9903821 main.go:278] Loading configuration.

I1012 02:22:00.9906921 main.go:303] Updating config with default resource matching patterns.

I1012 02:22:00.9907761 main.go:314]

Running with config:

{

"version": "v1",

"flags": {

"migStrategy": "none",

"failOnInitError": false,

"mpsRoot": "",

"nvidiaDriverRoot": "/",

"nvidiaDevRoot": "/",

"gdsEnabled": false,

"mofedEnabled": false,

"useNodeFeatureAPI": null,

"deviceDiscoveryStrategy": "auto",

"plugin": {

"passDeviceSpecs": false,

"deviceListStrategy": [

"envvar"

],

"deviceIDStrategy": "uuid",

"cdiAnnotationPrefix": "cdi.k8s.io/",

"nvidiaCTKPath": "/usr/bin/nvidia-ctk",

"containerDriverRoot": "/driver-root"

}

},

"resources": {

"gpus": [

{

"pattern": "*",

"name": "nvidia.com/gpu"

}

]

},

"sharing": {

"timeSlicing": {}

}

}

I1012 02:22:00.9907801 main.go:317] Retrieving plugins.

I1012 02:22:01.0109501 server.go:216] Starting GRPC server for'nvidia.com/gpu'

I1012 02:22:01.0112811 server.go:147] Starting to serve 'nvidia.com/gpu' on /var/lib/kubelet/device-plugins/nvidia-gpu.sock

I1012 02:22:01.0123761 server.go:154] Registered device plugin for'nvidia.com/gpu' with Kubelet

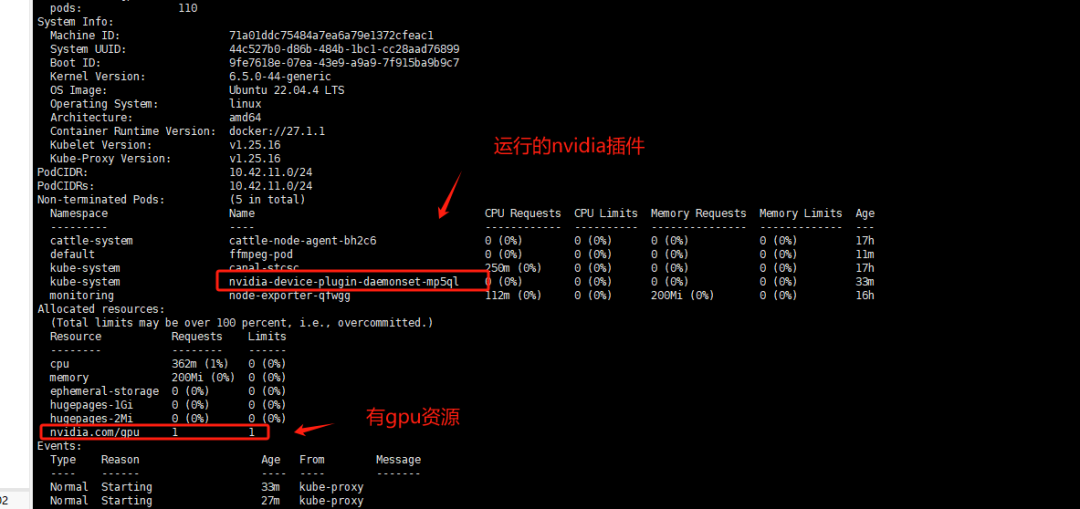

查看GPU节点信息

# kubectl describe node aiserver003087

在k8s中测试GPU资源调用

测试Pod

# cat gpu_test.yaml

apiVersion:v1

kind:Pod

metadata:

name:ffmpeg-pod

spec:

nodeName:aiserver003087#指定有gpu的节点

containers:

-name:ffmpeg-container

image:nightseas/ffmpeg:latest#k8s中配置阿里的私有仓库遇到一些问题,暂时用公共镜像

command: [ "/bin/bash", "-ce", "tail -f /dev/null" ]

resources:

limits:

nvidia.com/gpu:1# 请求分配 1个 GPU

创建Pod

# kubectl apply -f gpu_test.yaml

pod/ffmpeg-pod configured

往Pod内倒入一个视频进行转换测试

# kubectl cp test.mp4 ffmpeg-pod:/root

进入Pod

# kubectl exec -it ffmpeg-pod bash

转换测试视频

# ffmpeg -hwaccel cuvid -c:v h264_cuvid -i test.mp4 -vf scale_npp=1280:720 -vcodec h264_nvenc out.mp4

成功转换并且输出out.mp4则代表调用GPU资源成功

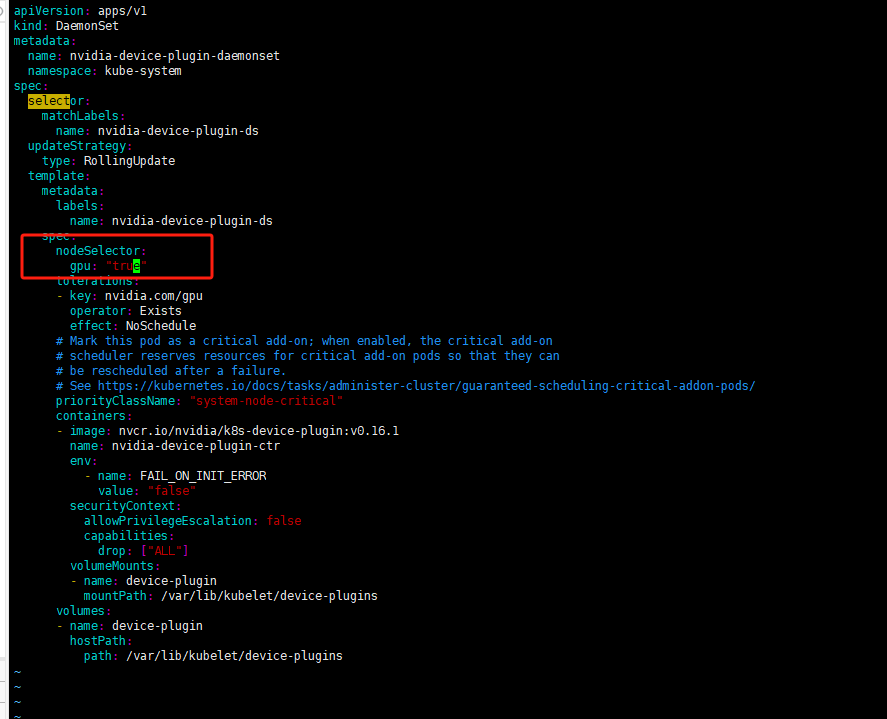

为保证DaemonSet至部署至带GPU资源的服务器可以做一个node标签选择器

设置给节点标签

# kubectl label nodes aiserver003087 gpu=true

修改DaemonSet配置文件添加标签选择保证DaemonSet至部署至带gpu=true标签的Node上

deployment配置文件修改位置是一致的

修改gpu测试Pod的yaml文件使用标签选择器

# cat gpu_test.yaml

apiVersion:v1

kind:Pod

metadata:

name:ffmpeg-pod

spec:

#nodeName: aiserver003087 #指定有gpu的节点

containers:

-name:ffmpeg-container

image:nightseas/ffmpeg:latest#k8s中配置阿里的私有仓库遇到一些问题,暂时用公共镜像

command: [ "/bin/bash", "-ce", "tail -f /dev/null" ]

resources:

limits:

nvidia.com/gpu:1

nodeSelector:

gpu:"true"

#kubernetes.io/os: linux

注意: 标签选择器需要值需要添加双引号"true"否则apply会报错,不能把bool值作为对应的值应用至标签选择器

网络安全学习资源分享:

给大家分享一份全套的网络安全学习资料,给那些想学习 网络安全的小伙伴们一点帮助!

对于从来没有接触过网络安全的同学,我们帮你准备了详细的学习成长路线图。可以说是最科学最系统的学习路线,大家跟着这个大的方向学习准没问题。

因篇幅有限,仅展示部分资料,朋友们如果有需要全套《网络安全入门+进阶学习资源包》,需要点击下方链接即可前往获取

读者福利 | 优快云大礼包:《网络安全入门&进阶学习资源包》免费分享 (安全链接,放心点击)

👉1.成长路线图&学习规划👈

要学习一门新的技术,作为新手一定要先学习成长路线图,方向不对,努力白费。

对于从来没有接触过网络安全的同学,我们帮你准备了详细的学习成长路线图&学习规划。可以说是最科学最系统的学习路线,大家跟着这个大的方向学习准没问题。

👉2.网安入门到进阶视频教程👈

很多朋友都不喜欢晦涩的文字,我也为大家准备了视频教程,其中一共有21个章节,每个章节都是当前板块的精华浓缩。(全套教程文末领取哈)

👉3.SRC&黑客文档👈

大家最喜欢也是最关心的SRC技术文籍&黑客技术也有收录

SRC技术文籍:

黑客资料由于是敏感资源,这里不能直接展示哦!(全套教程文末领取哈)

👉4.护网行动资料👈

其中关于HW护网行动,也准备了对应的资料,这些内容可相当于比赛的金手指!

👉5.黑客必读书单👈

👉6.网络安全岗面试题合集👈

当你自学到这里,你就要开始思考找工作的事情了,而工作绕不开的就是真题和面试题。

所有资料共282G,朋友们如果有需要全套《网络安全入门+进阶学习资源包》,可以扫描下方二维码或链接免费领取~

读者福利 | 优快云大礼包:《网络安全入门&进阶学习资源包》免费分享 (安全链接,放心点击)

3454

3454

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?