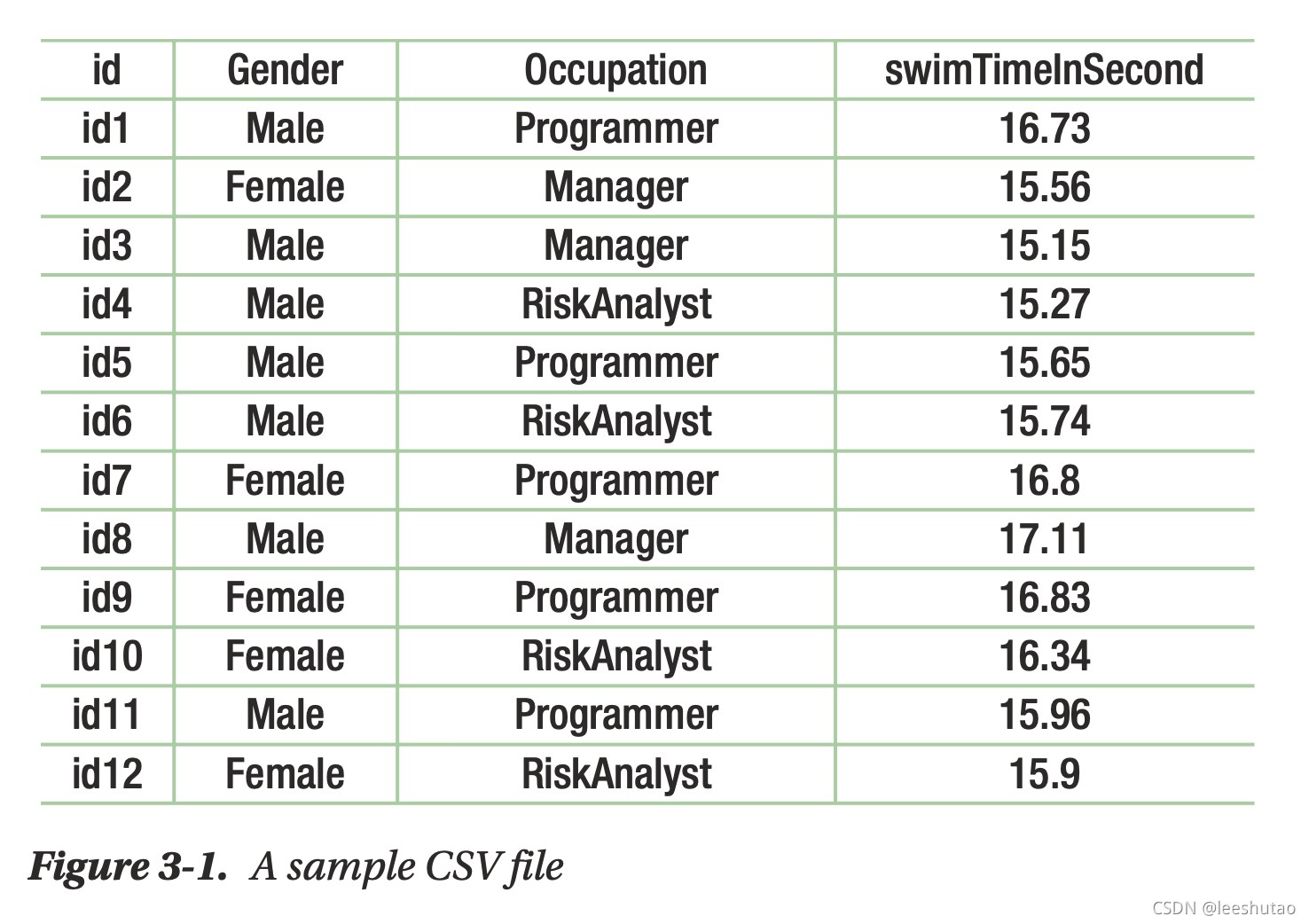

数据格式如下:

1. 读取csv数据

from pyspark.sql.types import *

#All datatypes for PySpark SQL have been defined in the submodule named pyspark.sql.types.

idColumn = StructField("id",StringType(),True)

#Let’s look at the arguments of StructField(). The first argument is the column name. We provide the column name as id. The second argument is the datatype of the elements of the column. The datatype of the first column is StringType(). If some ID is missing then some element of a column might be null. The last argument, whose value is True, tells you that this column might have null values or missing data.

genderColumn = StructField("Gender",StringType(),True)

OccupationColumn = StructField("Occupation",StringType(), True)

swimTimeInSecondColumn = StructField("swimTimeInSecond", DoubleType(),True)

columnList = [idColumn, genderColumn, OccupationColumn,swimTimeInSecondColumn]

swimmerDfSchema = StructType(columnList)

swimmerDfSchema

#output:

#StructType(List(StructField(uid,StringType,false),StructField(Mcn,IntegerType,true),StructField(Usertagblk,IntegerType,true),StructField(Usertagwt,IntegerType,true)))

swimmerDf = spark.read.csv('data/swimmerData.csv',header=True,schema=swimmerDfSchema)

swimmerDf.show(4)

swimmerDf.printSchema()2. 读取orc数据

duplicateDataDf = spark.read.orc(path='duplicateData')

duplicateDataDf.show(6)3. 保存DataFrame为csv数据

We can access DataFrameWriter using DataFrame.write. So, if we want to save our DataFrame as a CSV file, we have to use the DataFrame.write.csv() function.

Note you can read more about the DataFrameWriter class from the following link

#pyspark

corrData.write.csv(path='csvFileDir', header=True,sep=',')

#shell

csvFileDir$ ls

#shell

$ head -5 part-00000-eb3df2e6-8098-488d-be22-5e9db4a5cb08-c000.csv

4. 保存DataFrame为orc数据

#python

swimmerDf.write.orc(path='orcData')

http://DataFrameWriter

http://DataFrameWriter

274

274

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?