
1. The Problem

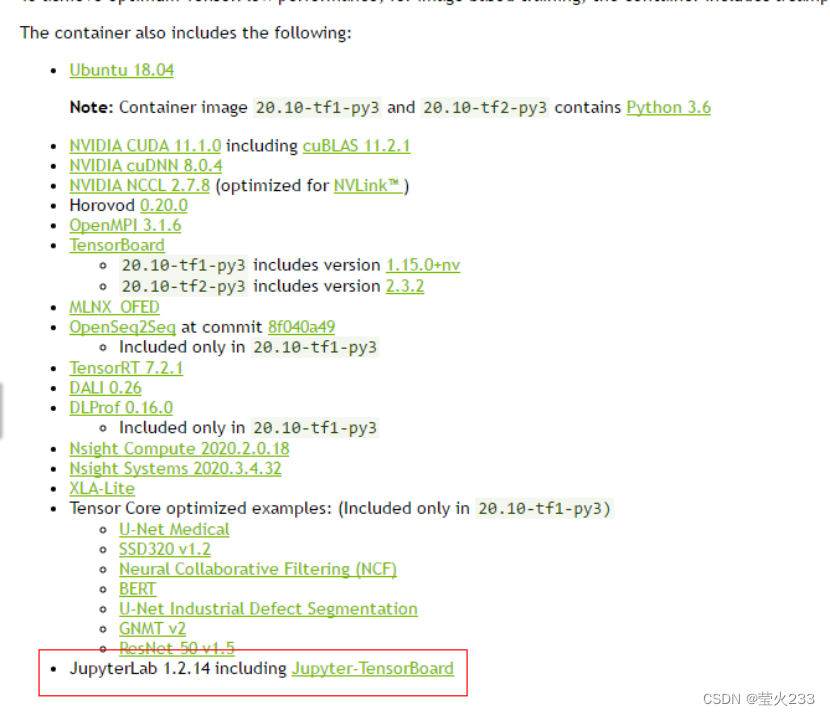

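When building images for new GPUs such as the A100, frameworks such as PyTorch and TensorFlow may not yet support the card, so an image you build yourself may be unable to use the A100. If you use the framework images from NVIDIA's official NGC registry instead, Jupyter and other components are already configured inside the image, and that configuration differs from ours. As a result, Jupyter cannot be used once the image is imported into the AI platform, and reconfiguring it ourselves has no effect because the configuration approach is different. To save time, this article offers a reference approach for problems of this kind.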

Taking the official NGC TensorFlow image as an example:

docker pull nvcr.io/nvidia/tensorflow:20.09-tf2-py3

Official release notes for this image:

TensorFlow Release Notes :: NVIDIA Deep Learning Frameworks Documentation

2. Recovering the Dockerfile from the Image

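For an image that was built from a Dockerfile, an approximation of that Dockerfile can be recovered from the image itself. This does not work for every image, only for those built from a Dockerfile, and the recovered file is for reference only: it differs from the original Dockerfile. It is still useful for seeing which components the Dockerfile installed and which operations and configuration were applied to the image, so that targeted changes can be made to resolve compatibility problems with the AIStation platform.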
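As a rough illustration of where this information comes from: an image records the command that created each of its layers, and `docker history` prints those records. The sketch below is not part of the original workflow, and the function name `layer_commands` is hypothetical. It assumes that a recovery tool works from essentially the same history data, which is why the result can only approximate the original file.

```shell
#!/usr/bin/env bash
# Print the creating command of every layer, oldest layer first.
# `docker history` lists the newest layer first, so the output is reversed with tac.
layer_commands() {
    local image="$1"
    docker history --no-trunc --format '{{.CreatedBy}}' "$image" | tac
}

# Usage (requires a running Docker daemon and a locally available image):
#   layer_commands nvcr.io/nvidia/tensorflow:20.09-tf2-py3
```

Files added with `ADD` or `COPY` appear only as checksums in this history, which is one reason the recovered Dockerfile cannot be rebuilt as-is.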

2.1 Download the parsing tool from GitHub

$ cd /home/wjy

$ git clone https://github.com/lukapeschke/dockerfile-from-image.git

$ cd dockerfile-from-image

$ docker build --rm -t lukapeschke/dockerfile-from-image .

After the build completes, check that the image exists, for example by running docker images.

2.2 Extract the Dockerfile

Note: <IMAGE_ID> must be the image's ID; an image name and tag cannot be used.
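Since only an image ID is accepted, it can help to look the ID up from the name and tag first. The following is a minimal sketch, assuming the image has already been pulled locally; the helper name `image_id_of` is hypothetical and not part of the tool.

```shell
#!/usr/bin/env bash
# Resolve an image reference (name:tag) to its image ID.
# `docker images -q` prints only the ID column for matching local images.
image_id_of() {
    local ref="$1"
    local id
    id="$(docker images -q "$ref" | head -n 1)"
    if [ -z "$id" ]; then
        echo "image not found locally: $ref" >&2
        return 1
    fi
    printf '%s\n' "$id"
}

# Usage:
#   IMAGE_ID="$(image_id_of nvcr.io/nvidia/tensorflow:20.09-tf2-py3)"
#   docker run --rm -v '/var/run/docker.sock:/var/run/docker.sock' \
#       lukapeschke/dockerfile-from-image "$IMAGE_ID"
```

Failing early when the lookup returns nothing avoids passing an empty argument to the tool.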

docker run --rm -v '/var/run/docker.sock:/var/run/docker.sock' lukapeschke/dockerfile-from-image <IMAGE_ID>

Example:


docker run --rm -v '/var/run/docker.sock:/var/run/docker.sock' lukapeschke/dockerfile-from-image <IMAGE_ID> > /home/wjy/cuda11-dockerfile/Dockerfile

View the extracted Dockerfile:

cat /home/wjy/cuda11-dockerfile/Dockerfile

FROM nvcr.io/nvidia/tensorflow:20.09-tf2-py3

ADD file:5c125b7f411566e9daa738d8cb851098f36197810f06488c2609074296f294b2 in /

RUN /bin/sh -c [ -z "$(apt-get indextargets)" ]

RUN /bin/sh -c set -xe \

&& echo '#!/bin/sh' > /usr/sbin/policy-rc.d \

&& echo 'exit 101' >> /usr/sbin/policy-rc.d \

&& chmod +x /usr/sbin/policy-rc.d \

&& dpkg-divert --local --rename --add /sbin/initctl \

&& cp -a /usr/sbin/policy-rc.d /sbin/initctl \

&& sed -i 's/^exit.*/exit 0/' /sbin/initctl \

&& echo 'force-unsafe-io' > /etc/dpkg/dpkg.cfg.d/docker-apt-speedup \

&& echo 'DPkg::Post-Invoke { "rm -f /var/cache/apt/archives/*.deb /var/cache/apt/archives/partial/*.deb /var/cache/apt/*.bin || true"; };' > /etc/apt/apt.conf.d/docker-clean \

&& echo 'APT::Update::Post-Invoke { "rm -f /var/cache/apt/archives/*.deb /var/cache/apt/archives/partial/*.deb /var/cache/apt/*.bin || true"; };' >> /etc/apt/apt.conf.d/docker-clean \

&& echo 'Dir::Cache::pkgcache ""; Dir::Cache::srcpkgcache "";' >> /etc/apt/apt.conf.d/docker-clean \

&& echo 'Acquire::Languages "none";' > /etc/apt/apt.conf.d/docker-no-languages \

&& echo 'Acquire::GzipIndexes "true"; Acquire::CompressionTypes::Order:: "gz";' > /etc/apt/apt.conf.d/docker-gzip-indexes \

&& echo 'Apt::AutoRemove::SuggestsImportant "false";' > /etc/apt/apt.conf.d/docker-autoremove-suggests

RUN /bin/sh -c mkdir -p /run/systemd \

&& echo 'docker' > /run/systemd/container

CMD ["/bin/bash"]

RUN RUN /bin/sh -c export DEBIAN_FRONTEND=noninteractive \

&& apt-get update \

&& apt-get install -y --no-install-recommends apt-utils build-essential ca-certificates curl patch wget jq gnupg libtcmalloc-minimal4 \

&& curl -fsSL https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1804/x86_64/7fa2af80.pub | apt-key add - \

&& echo "deb https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1804/x86_64 /" > /etc/apt/sources.list.d/cuda.list \

&& rm -rf /var/lib/apt/lists/* # buildkit

RUN ARG CUDA_VERSION

RUN ARG CUDA_DRIVER_VERSION

RUN ENV CUDA_VERSION=11.0.221 CUDA_DRIVER_VERSION=450.51.06 CUDA_CACHE_DISABLE=1

RUN RUN |2 CUDA_VERSION=11.0.221 CUDA_DRIVER_VERSION=450.51.06 /bin/sh -c /nvidia/build-scripts/installCUDA.sh # buildkit

RUN COPY cudaCheck /tmp/cudaCheck # buildkit

RUN RUN |2 CUDA_VERSION=11.0.221 CUDA_DRIVER_VERSION=450.51.06 /bin/sh -c cp /tmp/cudaCheck/$(uname -m)/sh-wrap /bin/sh-wrap # buildkit

RUN RUN |2 CUDA_VERSION=11.0.221 CUDA_DRIVER_VERSION=450.51.06 /bin/sh -c cp /tmp/cudaCheck/$(uname -m)/cudaCheck /usr/local/bin/ # buildkit

RUN RUN |2 CUDA_VERSION=11.0.221 CUDA_DRIVER_VERSION=450.51.06 /bin/sh -c rm -rf /tmp/cudaCheck # buildkit

RUN COPY cudaCheck/shinit_v2 /etc/shinit_v2 # buildkit

RUN COPY cudaCheck/startup_scripts.patch /tmp # buildkit

RUN COPY singularity /.singularity.d # buildkit

RUN RUN |2 CUDA_VERSION=11.0.221 CUDA_DRIVER_VERSION=450.51.06 /bin/sh -c patch -p0 < /tmp/startup_scripts.patch \

&& rm -f /tmp/startup_scripts.patch \

&& ln -sf /.singularity.d/libs /usr/local/lib/singularity \

&& ln -sf sh-wrap /bin/sh # buildkit

RUN ENV _CUDA_COMPAT_PATH=/usr/local/cuda/compat ENV=/etc/shinit_v2 BASH_ENV=/etc/bash.bashrc NVIDIA_REQUIRE_CUDA=cuda>=9.0

RUN LABEL com.nvidia.volumes.needed=nvi
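The extracted output carries some tool artifacts, such as a doubled `RUN RUN` prefix, a `RUN` in front of `ARG`, `ENV`, `COPY` and `LABEL`, and trailing `# buildkit` markers. The sketch below tidies these for easier reading. It is an illustration only: the function name `tidy_extracted_dockerfile` is hypothetical, the patterns are assumed from the listing above, and the result is meant for inspection, not for rebuilding the image.

```shell
#!/usr/bin/env bash
# Tidy tool artifacts in an extracted Dockerfile read from stdin:
#   "RUN RUN ..."               -> "RUN ..."
#   "RUN ARG|ENV|COPY|LABEL ..." -> the bare instruction
#   trailing " # buildkit"      -> removed
tidy_extracted_dockerfile() {
    sed -E \
        -e 's/^RUN (RUN|ARG|ENV|COPY|LABEL) /\1 /' \
        -e 's/ # buildkit$//'
}

# Usage: tidy the file, then search for the steps of interest, e.g. Jupyter setup:
#   tidy_extracted_dockerfile < /home/wjy/cuda11-dockerfile/Dockerfile | grep -n -i jupyter
```

Searching the tidied output this way is one possible route to finding how a component was installed and configured before deciding what to change for the target platform.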
