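LRU (Least Recently Used) is a cache eviction algorithm: when the cache is full, it evicts the entry that has gone unused for the longest time. It is one of the most widely used cache eviction policies.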

We implement the LRU cache with two data structures:

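- A queue implemented as a doubly linked list. The maximum size of the queue equals the total number of available frames (the cache size). The most recently used pages sit near the front of the queue, and the least recently used pages near the rear.

- A hash table whose key is the page number and whose value is the address of the corresponding queue node.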

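When a page is referenced, it may already be in memory. If it is, we detach its node from the list and move it to the front of the queue.

If the page is not in memory, we bring it into memory: add a new node at the front of the queue and store that node's address in the hash table. If the queue is full, i.e. all frames are occupied, we first remove the node at the rear of the queue (together with its hash table entry) and then add the new node at the front.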
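The steps above can be sketched in Python with an explicit doubly linked list and a `dict` as the hash table. This is a minimal sketch under our own assumptions, not the article's code: the names `Node`, `LRUPageCache`, `refer` and `pages` are hypothetical, and sentinel head/tail nodes are added so that unlinking and inserting never need special cases at the ends of the list.

```python
class Node:
    """A node of the doubly linked list; holds one page number."""
    __slots__ = ("page", "prev", "next")

    def __init__(self, page=None):
        self.page = page
        self.prev = None
        self.next = None


class LRUPageCache:
    """Hypothetical sketch: queue (doubly linked list) + hash table."""

    def __init__(self, frames: int):
        self.frames = frames      # maximum queue size = number of frames
        self.table = {}           # page number -> its node in the list
        # Sentinels: head.next is the most recently used page,
        # tail.prev is the least recently used page.
        self.head = Node()
        self.tail = Node()
        self.head.next = self.tail
        self.tail.prev = self.head

    def _unlink(self, node: Node) -> None:
        # O(1): detach a node given a direct reference to it.
        node.prev.next = node.next
        node.next.prev = node.prev

    def _push_front(self, node: Node) -> None:
        # O(1): insert a node right after the head sentinel.
        node.prev = self.head
        node.next = self.head.next
        self.head.next.prev = node
        self.head.next = node

    def refer(self, page: int) -> bool:
        """Reference a page; return True on a hit, False on a miss."""
        node = self.table.get(page)
        if node is not None:
            # Hit: detach the node and move it to the front of the queue.
            self._unlink(node)
            self._push_front(node)
            return True
        if self.frames <= 0:
            return False          # a zero-frame cache stores nothing
        if len(self.table) == self.frames:
            # Queue full: evict the rear node (least recently used).
            lru = self.tail.prev
            self._unlink(lru)
            del self.table[lru.page]
        node = Node(page)
        self._push_front(node)
        self.table[page] = node
        return False

    def pages(self) -> list:
        """Pages ordered from most to least recently used."""
        out, cur = [], self.head.next
        while cur is not self.tail:
            out.append(cur.page)
            cur = cur.next
        return out


cache = LRUPageCache(4)
for p in [1, 2, 3, 1, 4, 5]:
    cache.refer(p)
print(cache.pages())  # [5, 4, 1, 3]
```

Because the hash table stores node references rather than positions, both the hit path and the eviction path touch only a constant number of pointers.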

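The LRU policy is easy to understand, but implementing it well, especially so that every operation runs in O(1) time, is less straightforward.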
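For contrast, here is a naive sketch (the helper `refer_naive` is hypothetical) that keeps pages in a plain Python list. It yields the same order, but the membership test, `list.remove` and `list.insert(0, ...)` each take O(n) time, which is the cost the linked-list-plus-hash-table design avoids.

```python
def refer_naive(pages: list, page: int, capacity: int) -> None:
    """Reference `page`; index 0 of `pages` is the most recently used page."""
    if capacity <= 0:
        return                  # nothing can be cached
    if page in pages:           # O(n) linear scan
        pages.remove(page)      # O(n): later elements shift left
    elif len(pages) == capacity:
        pages.pop()             # evict the least recently used page (last item)
    pages.insert(0, page)       # O(n): every element shifts right


pages = []
for p in [1, 2, 3, 1, 4, 5]:
    refer_naive(pages, p, 4)
print(pages)  # [5, 4, 1, 3]
```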

Let's start with the classic implementation, in C++:

```cpp
#include <iostream>
#include <list>
#include <unordered_map>

using namespace std;

class LRUCache {
    // Keys in the cache, most recently used at the front
    list<int> dq;

    // Maps each key to its position (iterator) in dq
    unordered_map<int, list<int>::iterator> ma;

    size_t csize; // maximum capacity of the cache

public:
    LRUCache(size_t);
    void refer(int);
    void display();
};

// Set the capacity
LRUCache::LRUCache(size_t n) : csize(n) {}

// Reference key x within the LRU cache
void LRUCache::refer(int x)
{
    if (csize == 0)
        return; // a zero-capacity cache stores nothing

    auto found = ma.find(x);

    // Not present in the cache
    if (found == ma.end()) {
        // Cache is full: evict the least recently used key
        if (dq.size() == csize) {
            int last = dq.back(); // key at the rear of the list
            dq.pop_back();        // remove it from the list
            ma.erase(last);       // and from the map
        }
    }
    // Present in the cache: unlink it from its current position
    else
        dq.erase(found->second);

    // Put x at the front and record its new position
    dq.push_front(x);
    ma[x] = dq.begin();
}

// Display the contents of the cache, most recently used first
void LRUCache::display()
{
    for (auto it = dq.begin(); it != dq.end(); it++)
        cout << (*it) << " ";
    cout << endl;
}

// Driver code
int main()
{
    LRUCache ca(4);

    ca.refer(1);
    ca.refer(2);
    ca.refer(3);
    ca.refer(1);
    ca.refer(4);
    ca.refer(5);
    ca.display();

    return 0;
}
```

Output: 5 4 1 3

With Java's LinkedHashSet, which iterates in insertion order, the code above becomes shorter and easier to follow:

```java
import java.util.*;

class LRUCache {
    // A LinkedHashSet iterates in insertion order,
    // so its first element is the least recently used key
    Set<Integer> cache;
    int capacity;

    public LRUCache(int capacity)
    {
        this.cache = new LinkedHashSet<Integer>(capacity);
        this.capacity = capacity;
    }

    // Returns false if key is not present in the cache. Otherwise it
    // moves the key to the most recently used end (the end of the
    // insertion order) by removing and re-adding it, and returns true.
    public boolean get(int key)
    {
        if (!cache.contains(key))
            return false;
        cache.remove(key);
        cache.add(key);
        return true;
    }

    /* Refers key x within the LRU cache */
    public void refer(int key)
    {
        if (get(key) == false)
            put(key);
    }

    // Displays the contents of the cache in reverse order
    // (most recently used first)
    public void display()
    {
        LinkedList<Integer> list = new LinkedList<>(cache);
        // descendingIterator() of java.util.LinkedList returns an iterator
        // over the elements of the list in reverse sequential order
        Iterator<Integer> itr = list.descendingIterator();
        while (itr.hasNext())
            System.out.print(itr.next() + " ");
        System.out.println();
    }

    // Adds a new key, first evicting the least recently used key
    // (the first in insertion order) if the cache is full
    public void put(int key)
    {
        if (cache.size() == capacity) {
            int firstKey = cache.iterator().next();
            cache.remove(firstKey);
        }
        cache.add(key);
    }

    public static void main(String[] args)
    {
        LRUCache ca = new LRUCache(4);
        ca.refer(1);
        ca.refer(2);
        ca.refer(3);
        ca.refer(1);
        ca.refer(4);
        ca.refer(5);
        ca.display();
    }
}
```

Output: 5 4 1 3
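The same remove-and-re-add idea carries over to Python, assuming Python 3.8 or later: a plain `dict` preserves insertion order (guaranteed since 3.7) and supports `reversed()` (since 3.8). The class `DictLRU` below is a hypothetical illustration that mirrors the Java `refer`/`display` logic; its first key is always the least recently used one.

```python
class DictLRU:
    """Hypothetical LRU set of keys built on an insertion-ordered dict."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.cache = {}  # key -> None; only the key order matters

    def refer(self, key: int) -> None:
        if self.capacity <= 0:
            return  # a zero-capacity cache stores nothing
        if key in self.cache:
            # Remove and re-insert so the key moves to the most recent end.
            del self.cache[key]
        elif len(self.cache) >= self.capacity:
            # Evict the first key in insertion order (least recently used).
            oldest = next(iter(self.cache))
            del self.cache[oldest]
        self.cache[key] = None

    def display(self) -> list:
        # Most recently used first, like the Java display() output.
        return list(reversed(self.cache))


ca = DictLRU(4)
for k in [1, 2, 3, 1, 4, 5]:
    ca.refer(k)
print(*ca.display())  # 5 4 1 3
```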

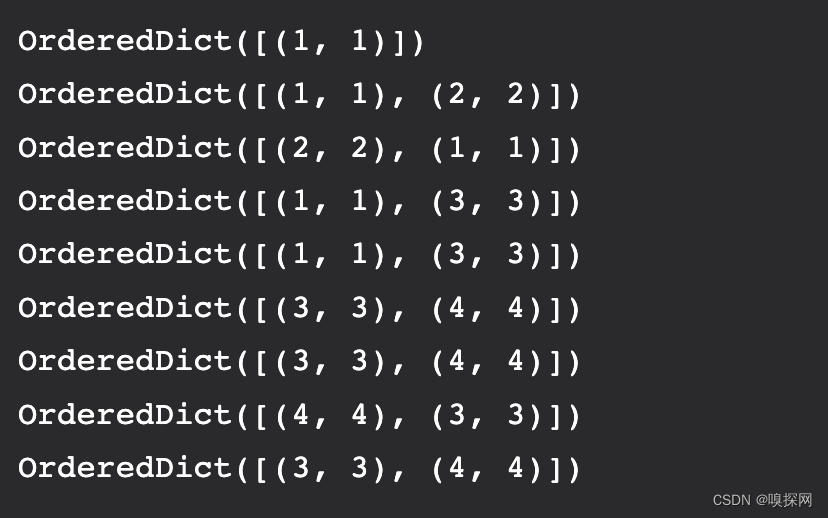

Alternatively, Python's OrderedDict can be used:

```python
from collections import OrderedDict


class LRUCache:
    # initialising capacity
    def __init__(self, capacity: int):
        self.cache = OrderedDict()
        self.capacity = capacity

    # Return the value of the queried key in O(1), or -1 if the key
    # is not in our dict / cache. Also move the key to the end
    # to mark it as recently used.
    def get(self, key: int) -> int:
        if key not in self.cache:
            return -1
        else:
            self.cache.move_to_end(key)
            return self.cache[key]

    # First add / update the key as usual, and move it to the end to
    # mark it as recently used. Then check whether the ordered
    # dictionary has exceeded its capacity; if so, remove the first
    # key (the least recently used).
    def put(self, key: int, value: int) -> None:
        self.cache[key] = value
        self.cache.move_to_end(key)
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)


# RUNNER
# initializing our cache with the capacity of 2
cache = LRUCache(2)

cache.put(1, 1)
print(cache.cache)
cache.put(2, 2)
print(cache.cache)
cache.get(1)
print(cache.cache)
cache.put(3, 3)
print(cache.cache)
cache.get(2)
print(cache.cache)
cache.put(4, 4)
print(cache.cache)
cache.get(1)
print(cache.cache)
cache.get(3)
print(cache.cache)
cache.get(4)
print(cache.cache)
```

Output:
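The exact printed form of an `OrderedDict` depends on the Python version, so the sketch below replays the same calls and records each return value together with the cache contents as a plain list of `(key, value)` pairs, ordered from least to most recently used. The `ops` and `trace` names are illustrative only.

```python
from collections import OrderedDict


class LRUCache:
    """Condensed copy of the OrderedDict-based cache above."""

    def __init__(self, capacity: int):
        self.cache = OrderedDict()
        self.capacity = capacity

    def get(self, key: int) -> int:
        if key not in self.cache:
            return -1
        self.cache.move_to_end(key)  # mark as most recently used
        return self.cache[key]

    def put(self, key: int, value: int) -> None:
        self.cache[key] = value
        self.cache.move_to_end(key)
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict least recently used


cache = LRUCache(2)
ops = [("put", 1, 1), ("put", 2, 2), ("get", 1), ("put", 3, 3), ("get", 2),
       ("put", 4, 4), ("get", 1), ("get", 3), ("get", 4)]
trace = []
for name, *args in ops:
    result = getattr(cache, name)(*args)
    trace.append((name, args, result, list(cache.cache.items())))

for name, args, result, contents in trace:
    print(name, args, "->", result, contents)
```

In this trace `put(3, 3)` evicts key 2 and `put(4, 4)` evicts key 1, so the later `get(2)` and `get(1)` both return -1, and the cache ends as `[(3, 3), (4, 4)]`.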


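This article explains how the LRU (Least Recently Used) cache eviction algorithm works and walks through common implementations: a C++ version built on a doubly linked list and a hash table, a simpler Java version using LinkedHashSet, and a Python version using OrderedDict. Each implementation evicts the least recently used item when the cache is full. Working through these examples should help readers understand the details of implementing LRU.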
