How does Kafka manage groups, and why can't the command ./kafka-consumer-groups.sh --new-consumer --bootstrap-server 127.0.0.1:9092 --describe --group my_test10 find a group that is not online?

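What is the key for when sending a message? Is it used for hash mapping? Yes: messages with the same key are hashed to the same partition, which keeps them in order relative to each other. So after a rebalance, are they still in order?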

https://blog.youkuaiyun.com/mdj67887500/article/details/50404979?utm_source=blogkpcl11

https://blog.youkuaiyun.com/luanpeng825485697/article/details/81036028 (message consumption offsets)

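What is the real difference between OffsetNewest (start consuming from the server's newest offset) and OffsetOldest?

Before a group is created and starts consuming a topic, the topic may already exist and already contain data. Because the group has just been created, it has no committed offset yet (its offset shows up as unknown). In that case OffsetNewest only fetches messages written after the group starts consuming, while OffsetOldest consumes everything still stored in the partition, from the oldest retained message up to now. When the group has several members, each member only consumes the partitions assigned to it. Once the group has committed an offset, both settings simply resume from that committed offset. See the comment in sarama: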

```go
// The initial offset to use if no offset was previously committed.
// Should be OffsetNewest or OffsetOldest. Defaults to OffsetNewest.
config.Consumer.Offsets.Initial = sarama.OffsetNewest
```
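To make the difference concrete, here is a minimal sketch of the decision a group member makes for each partition. It does not talk to a broker: `OffsetNewest` and `OffsetOldest` are redefined locally with the same values as sarama's constants (-1 and -2), and `startOffset` is a hypothetical helper, not a sarama API.

```go
package main

import "fmt"

// Same numeric values as sarama.OffsetNewest and sarama.OffsetOldest,
// redefined here so the sketch runs without a broker or the sarama module.
const (
	OffsetNewest int64 = -1
	OffsetOldest int64 = -2
)

// startOffset (hypothetical) models where a group member starts reading a
// partition. A committed offset always wins; the Initial setting is only
// consulted when nothing has been committed yet ("unknown" in the
// kafka-consumer-groups.sh output).
func startOffset(committed int64, hasCommit bool, initial, logStart, logEnd int64) int64 {
	if hasCommit {
		return committed
	}
	if initial == OffsetOldest {
		return logStart // earliest offset still retained on the broker
	}
	return logEnd // only messages produced from now on
}

func main() {
	// A partition holding offsets 0..12 (log end offset 13), nothing committed.
	fmt.Println("newest:", startOffset(0, false, OffsetNewest, 0, 13))
	fmt.Println("oldest:", startOffset(0, false, OffsetOldest, 0, 13))
	// After the group has committed offset 7, Initial no longer matters.
	fmt.Println("committed:", startOffset(7, true, OffsetNewest, 0, 13))
}
```

With these numbers it prints 13, 0 and 7: on a fresh group Newest skips the 13 existing messages and Oldest reads all of them, while an existing commit overrides both.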

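If messages are consumed but their offsets are not committed, then when a new member joins the group and a rebalance happens, those uncommitted messages are automatically consumed again (bad luck, but they were never marked as consumed). So in production, with automatic offset commits, will the messages right at the boundary be consumed twice during a rebalance?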
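In short, yes, that can happen: a consumer group delivers at least once, so whatever was processed after the last commit may be handed again to the member that owns the partition after the rebalance. The sketch below models that window and a simple idempotency guard. Both `redelivered` and `dedup` are hypothetical helpers; in a real deployment the guard's state would have to be persisted together with the processing results (for example in the same database transaction), because after a rebalance the partition may move to a different process.

```go
package main

import "fmt"

// redelivered (hypothetical) lists what the next owner of a partition
// re-reads after a rebalance: everything from the last committed offset
// (the next offset to read) up to the last offset the old owner processed.
func redelivered(committed, lastProcessed int64) []int64 {
	var dup []int64
	for off := committed; off <= lastProcessed; off++ {
		dup = append(dup, off)
	}
	return dup
}

// dedup (hypothetical) remembers the highest offset already applied per
// partition and skips anything at or below it.
type dedup struct {
	applied map[int32]int64
}

func newDedup() *dedup { return &dedup{applied: make(map[int32]int64)} }

// shouldProcess reports whether the message at (partition, offset) is new,
// and records it as applied if so.
func (d *dedup) shouldProcess(partition int32, offset int64) bool {
	if last, ok := d.applied[partition]; ok && offset <= last {
		return false
	}
	d.applied[partition] = offset
	return true
}

func main() {
	// The old owner processed up to offset 9, but only offset 7 was committed.
	dups := redelivered(7, 9)
	fmt.Println("re-read after rebalance:", dups)

	guard := newDedup()
	guard.applied[0] = 9 // assumption: state persisted by the previous owner
	for _, off := range dups {
		fmt.Println(off, "process again?", guard.shouldProcess(0, off))
	}
	fmt.Println(10, "process again?", guard.shouldProcess(0, 10))
}
```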

---------------------

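A handy Kafka management tool: kafka-manager

To try: write data in order to several partitions of a cluster, read it back after a delay, and check whether it is still in order.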
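The experiment can be simulated without a cluster first. This is a simplified, hypothetical model: messages are spread round-robin for simplicity, and the consumer reads whole partitions one after another, whereas a real consumer interleaves fetched batches. It shows what to look for: order is kept within each partition but not across partitions.

```go
package main

import "fmt"

// distribute writes messages 0..n-1 round-robin across numPartitions
// (round-robin is an assumption made for simplicity).
func distribute(n, numPartitions int) [][]int {
	parts := make([][]int, numPartitions)
	for msg := 0; msg < n; msg++ {
		p := msg % numPartitions
		parts[p] = append(parts[p], msg)
	}
	return parts
}

// drain models a consumer that fetches whole partitions in the given order.
func drain(parts [][]int, order []int) []int {
	var out []int
	for _, p := range order {
		out = append(out, parts[p]...)
	}
	return out
}

// increasing reports whether xs is strictly increasing.
func increasing(xs []int) bool {
	for i := 1; i < len(xs); i++ {
		if xs[i] <= xs[i-1] {
			return false
		}
	}
	return true
}

func main() {
	parts := distribute(9, 3)
	consumed := drain(parts, []int{2, 0, 1}) // partition 2 happens to arrive first
	fmt.Println("per partition:", parts)
	fmt.Println("consumed:", consumed)
	for p, msgs := range parts {
		fmt.Printf("partition %d ordered: %v\n", p, increasing(msgs))
	}
	fmt.Println("global order kept:", increasing(consumed))
}
```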

-------------------------------------

1. Set up a cluster

https://www.cnblogs.com/RUReady/p/6479464.html

Every node must have a different broker.id.

2. Common commands

Start the broker

./kafka-server-start.sh -daemon ../config/server.properties

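Runtime logs: by default in $base_dir/logs, i.e. the logs directory next to bin. This can be changed via LOG_DIR in kafka-run-class.sh (the script only falls back to $base_dir/logs when the LOG_DIR environment variable is not set).

Data logs (the message data itself): log.dirs=/tmp/kafka-logs in server.properties

Create a topic; this option takes the ZooKeeper port (replication-factor is the number of replicas)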

./kafka-topics.sh --create --zookeeper 192.168.160.128:2181,192.168.160.129:2181,192.168.160.130:2181 --replication-factor 1 --partitions 1 --topic hello

Describe a topic, again via the ZooKeeper port

./kafka-topics.sh --describe --zookeeper 192.168.160.128:2181,192.168.160.129:2181,192.168.160.130:2181 --topic ruready

Start a console consumer; this takes the Kafka broker port

./kafka-console-consumer.sh --bootstrap-server 192.168.160.128:9092,192.168.160.129:9092,192.168.160.130:9092 --topic ruready --from-beginning

Start a console producer; this takes the Kafka broker port

./kafka-console-producer.sh --broker-list 192.168.160.128:9092,192.168.160.129:9092,192.168.160.130:9092 --topic ruready

List topics

./kafka-topics.sh --list --zookeeper localhost:2181

Delete a topic

./kafka-topics.sh --delete --zookeeper 127.0.0.1:2181 --topic KafkaMsgsTopic

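3. Connecting to Kafka from Go with sarama-cluster

If you get Error: dial tcp: lookup VM_0_13_centos: no such host, add an explicit entry to the hosts file on the client machine that maps the Kafka server's IP to VM_0_13_centos. The broker returns its own hostname to clients in the cluster metadata, so the client must be able to resolve it; setting advertised.listeners on the broker to a reachable IP also avoids the problem.

4. Group basics

About groups: sigh, Kafka's security is weak out of the box. Without authentication configured, anyone can make up a group name and start consuming. Newer versions store group information in the brokers (the internal __consumer_offsets topic); older versions store it in ZooKeeper, where deleting the node is enough to remove a group.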

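5. List consumer groups

bin/kafka-consumer-groups.sh --new-consumer --bootstrap-server 127.0.0.1:9092 --list

--list takes no topic argument: it lists every group known to the cluster, not the groups of one topic. To see which topics a group consumes, use --describe --group as in the next section.

--new-consumer: "Use new consumer. This is the default." Newer versions no longer need this flag. Groups named like console-consumer-17964 come from kafka-console-consumer, which creates such a group every time it runs without an explicit group.

Groups that have consumed in the past still show up in the list. Are they ever cleaned up? How can they be deleted by hand? To look into next time.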

6. Show the offsets of group my_test10, including what has not been consumed yet

./kafka-consumer-groups.sh --bootstrap-server 127.0.0.1:9092 --describe --group my_test10

For 127.0.0.1:9092, it is best to use the IP address the broker actually listens on, e.g. 10.10.1.100 when the config file contains listeners=PLAINTEXT://10.10.1.100:9092

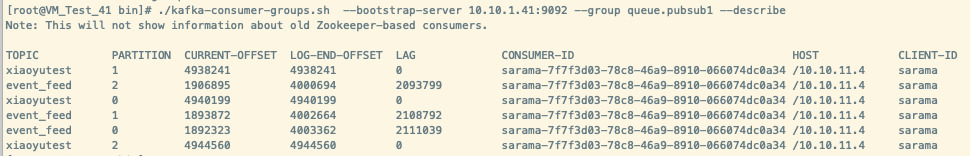

One group consuming data from several topics at once

./kafka-consumer-groups.sh --bootstrap-server 10.10.1.41:9092 --group queue.pubsub1 --describe

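Connecting to Kafka from Go (sarama-cluster can subscribe to messages from several topics at the same time)

Specifying the group name and member ID

Don't overthink it: every client that joins with the same group name is automatically assigned a different member ID, so the Kafka consumer does not have to manage it itself.

For example, with groupId kafkasub, start several processes: every new process (a new client joining with the same group name) triggers a rebalance, and the members then consume messages of the same topic without overlap. Since a partition is consumed by at most one member of a group, members beyond the number of partitions stay idle.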

```go
consumer, err := cluster.NewConsumer(brokers, groupId, topics, config)
```
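How "consume without overlap" works can be sketched with a simplified, single-topic version of the range assignment strategy, which I believe is the default in sarama-cluster (`Group.PartitionStrategy`). `rangeAssign` and the member names are hypothetical; the real assignment is computed by the group leader during each rebalance and covers every subscribed topic.

```go
package main

import (
	"fmt"
	"sort"
)

// rangeAssign (hypothetical) is a single-topic version of the "range"
// strategy: members are sorted by ID, each gets a contiguous run of
// partitions, and the first numPartitions%len(members) members get one extra.
func rangeAssign(members []string, numPartitions int) map[string][]int32 {
	out := make(map[string][]int32, len(members))
	if len(members) == 0 {
		return out
	}
	sorted := append([]string(nil), members...)
	sort.Strings(sorted)
	per, extra := numPartitions/len(sorted), numPartitions%len(sorted)
	next := 0
	for i, m := range sorted {
		n := per
		if i < extra {
			n++
		}
		out[m] = make([]int32, 0, n) // members with n == 0 are idle
		for j := 0; j < n; j++ {
			out[m] = append(out[m], int32(next))
			next++
		}
	}
	return out
}

func main() {
	var members []string
	for _, m := range []string{"proc-1", "proc-2", "proc-3", "proc-4"} {
		members = append(members, m) // each new process triggers a rebalance
		fmt.Printf("%d member(s): %v\n", len(members), rangeAssign(members, 3))
	}
}
```

With 3 partitions, the fourth process gets an empty assignment, which matches the observation that extra members sit idle.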

Data loss with manual offset commits

https://blog.youkuaiyun.com/weixin_38399962/article/details/90057102

```
GROUP      TOPIC     PARTITION  CURRENT-OFFSET  LOG-END-OFFSET  LAG      OWNER
my_test10  newstest  0          unknown         13              unknown  sarama_/172.16.14.133
my_test10  newstest  1          unknown         16              unknown  sarama_/172.16.14.133
my_test10  newstest  2          unknown         21              unknown  sarama_/172.16.14.133
```

CURRENT-OFFSET is unknown, which means this group has never committed an offset for these partitions, so its offset has not been initialized yet.
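The LAG column is derived from the other two, which is why it is unknown here as well. A tiny sketch of that relationship; the `lag` helper is hypothetical and not part of any Kafka tool:

```go
package main

import "fmt"

// lag (hypothetical) reproduces how the LAG column relates to the others:
// LAG = LOG-END-OFFSET - CURRENT-OFFSET, and it can only be computed once
// the group has committed an offset.
func lag(currentOffset int64, hasCommit bool, logEndOffset int64) (int64, bool) {
	if !hasCommit {
		return 0, false // shown as "unknown"
	}
	return logEndOffset - currentOffset, true
}

func main() {
	// Partition 2 of newstest above: LOG-END-OFFSET 21, nothing committed yet.
	if _, ok := lag(0, false, 21); !ok {
		fmt.Println("partition 2 lag: unknown")
	}
	// If the group later commits offset 18, three messages remain.
	if l, ok := lag(18, true, 21); ok {
		fmt.Println("partition 2 lag:", l)
	}
}
```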

sub

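There is no automatic commit of consumed messages: an offset is committed only after consumer.MarkOffset has been called for the message, and then only when the config.Consumer.Offsets.CommitInterval interval elapses.

consumer.CommitOffsets() immediately commits the offsets marked as consumed. Note that consumer.MarkOffset(msg, "") must still be called before CommitOffsets, otherwise nothing is committed.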
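The interplay of marking and committing can be modeled as a small in-memory stash per partition. This is a simplified, hypothetical model of the behavior described above, not sarama-cluster's actual code: marking records the next offset to read (the message offset plus 1, which is what Kafka stores as the committed offset), and a commit only sends something if an offset was marked since the last commit.

```go
package main

import "fmt"

// offsetStash (hypothetical) tracks one partition's marked offset.
type offsetStash struct {
	next  int64 // offset that would be committed (last marked + 1)
	dirty bool  // true if something was marked since the last commit
}

// markOffset records that the message at offset has been processed.
// Marking an older offset never moves the position backwards.
func (s *offsetStash) markOffset(offset int64) {
	if n := offset + 1; n > s.next {
		s.next, s.dirty = n, true
	}
}

// commit returns the offset to send to the broker, or false when nothing new
// was marked, which is why committing without marking has no effect.
func (s *offsetStash) commit() (int64, bool) {
	if !s.dirty {
		return 0, false
	}
	s.dirty = false
	return s.next, true
}

func main() {
	var s offsetStash
	if _, ok := s.commit(); !ok {
		fmt.Println("commit before any MarkOffset: nothing to send")
	}
	s.markOffset(12) // processed the message at offset 12
	if off, ok := s.commit(); ok {
		fmt.Println("committed offset:", off) // the next message to read
	}
}
```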

```go
package main

import (
	"os"
	"os/signal"
	"sync"
	"time"

	"github.com/Shopify/sarama"
	cluster "github.com/bsm/sarama-cluster"
	log "github.com/thinkboy/log4go"
)

type KafkaMsgHandler func(byteMsg []byte) bool

type IKafkaMsgHandler interface {
	// HandleMsg returns true if the message was processed correctly;
	// only then does the Kafka client mark (and later commit) its offset.
	HandleMsg(byteMsg []byte) bool
}

// Perform measures how long it takes to handle 10000 messages.
type Perform struct {
	iTimeTotal int64
	iTimes     int64
	iBeginTime int64
}

func (per *Perform) Count() {
	if per.iTimes == 0 {
		per.iBeginTime = time.Now().UnixNano()
		log.Info("begin count")
	}
	per.iTimes++
	if per.iTimes == 10001 {
		iEndTime := time.Now().UnixNano()
		per.iTimeTotal = iEndTime - per.iBeginTime
		log.Info("time per 10000 msgs: %d ns", per.iTimeTotal)
		per.iTimeTotal = 0
		per.iTimes = 0
		per.iBeginTime = iEndTime
	}
}

// SubKafkaTopic consumes topics from a cluster of brokers as a member of groupId.
func SubKafkaTopic(pWg *sync.WaitGroup, brokers, topics []string, groupId string, msgHandle IKafkaMsgHandler) {
	defer pWg.Done()
	log.Info("brokers: %s, topic:%s, groupid:%s, will start ", brokers, topics, groupId)

	config := cluster.NewConfig()
	config.Consumer.Return.Errors = true
	config.Group.Return.Notifications = true
	// Marked offsets are committed to the broker once per second.
	config.Consumer.Offsets.CommitInterval = 1 * time.Second
	// Only used while the group has no committed offset yet.
	config.Consumer.Offsets.Initial = sarama.OffsetNewest
	//config.Consumer.Offsets.Initial = sarama.OffsetOldest

	// Init consumer. There is no retry on connection failure for now:
	// the process exits and a supervisor restarts it.
	consumer, err := cluster.NewConsumer(brokers, groupId, topics, config)
	if err != nil {
		log.Error("cluster.NewConsumer err: %s", err)
		return
	}
	defer consumer.Close()

	// Trap SIGINT to trigger a shutdown.
	signals := make(chan os.Signal, 1)
	signal.Notify(signals, os.Interrupt)

	//var perform Perform

	// Consume errors.
	go func() {
		for err := range consumer.Errors() {
			log.Error("%s : Error %s", groupId, err.Error())
		}
	}()

	// Consume rebalance notifications (e.g. Type: RebalanceError (3)).
	go func() {
		for ntf := range consumer.Notifications() {
			log.Info("group %s:Rebalanced: %+v ", groupId, ntf)
		}
	}()

	// Consume messages, watch signals.
	for {
		select {
		case msg, ok := <-consumer.Messages():
			if !ok {
				return // channel closed: stop instead of spinning on it
			}
			//perform.Count()
			// Mark the message as processed only if the handler succeeded.
			// For a retry mechanism, check the message type first.
			if msgHandle.HandleMsg(msg.Value) {
				consumer.MarkOffset(msg, "")
			}
		case <-signals:
			return
		}
	}
}

type FriendInti struct{}

func (friendInti *FriendInti) HandleMsg(byteMsg []byte) bool {
	log.Info("recv msg %s!", string(byteMsg))
	//time.Sleep(time.Second * 2)
	return true
}

func main() {
	var pWg = &sync.WaitGroup{}
	friendInti := FriendInti{}
	pWg.Add(1)
	//sAddrs := []string{"118.89.177.83:9092"}
	sAddrs := []string{"127.0.0.1:9092"}
	go SubKafkaTopic(pWg, sAddrs, []string{"newstest"}, "my_test10", &friendInti)
	pWg.Wait()
}
```

pub

```go
package main

import (
	"strconv"
	"time"

	"github.com/Shopify/sarama"
	log "github.com/thinkboy/log4go"
)

var (
	producer        sarama.AsyncProducer
	storageProducer sarama.AsyncProducer
)

func InitKafkaProducer(kafkaAddrs []string) (err error) {
	config := sarama.NewConfig()
	// Fire and forget: the broker sends no acknowledgement (fast, but writes can be lost).
	config.Producer.RequiredAcks = sarama.NoResponse
	// Messages with the same non-nil key go to the same partition.
	config.Producer.Partitioner = sarama.NewHashPartitioner
	// Both channels are enabled, so both must be drained or the producer blocks.
	config.Producer.Return.Successes = true
	config.Producer.Return.Errors = true

	producer, err = sarama.NewAsyncProducer(kafkaAddrs, config)
	if err != nil {
		log.Error(err)
		return // producer is nil: do not start the handlers
	}
	go handleSuccess()
	go handleError()
	return
}

// handleSuccess drains the Successes channel.
func handleSuccess() {
	for range producer.Successes() {
	}
}

// handleError logs every message the producer failed to deliver.
func handleError() {
	for perr := range producer.Errors() {
		log.Error("producer message error, partition:%d offset:%d key:%v value:%s error(%v)",
			perr.Msg.Partition, perr.Msg.Offset, perr.Msg.Key, perr.Msg.Value, perr.Err)
	}
}

// PushKafka sends a message asynchronously. strKey is currently unused, so
// messages carry a nil key and the HashPartitioner picks a random partition.
func PushKafka(strTopic string, byteDate []byte, strKey string) (err error) {
	//producer.Input() <- &sarama.ProducerMessage{Topic: strTopic, Value: sarama.ByteEncoder(byteDate), Key: sarama.StringEncoder(strKey)}
	producer.Input() <- &sarama.ProducerMessage{Topic: strTopic, Value: sarama.ByteEncoder(byteDate)}
	return
}

func main() {
	//sAddrs := []string{"10.10.1.41:9092"}
	//sAddrs := []string{"118.89.177.83:9092"}
	sAddrs := []string{"127.0.0.1:9092"}
	if err := InitKafkaProducer(sAddrs); err != nil {
		return
	}
	for i := int64(50); i < 60; i++ {
		strMsg := "this is my new msg: " + strconv.FormatInt(i, 10)
		PushKafka("newstest", []byte(strMsg), "")
		log.Info(strMsg)
	}
	// Give the async producer time to deliver before the process exits.
	time.Sleep(30 * time.Second)
}
```

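7. When sending Kafka messages from Go, is there a difference between setting the key to "" and not setting it?

When a producer writes keyed messages, the producer's partitioner computes the target partition from the key, so messages with the same key are written to the same partition and stay in order relative to each other. And yes, with sarama's HashPartitioner there is a difference: a message without a key (Key left nil) goes to a random partition, while Key: sarama.StringEncoder("") is a real, non-nil key, so every message with that empty key hashes to the same value and lands on one single partition.

If the number of partitions changes, ordering is no longer guaranteed, because the same key may then map to a different partition. It is best to settle on the partition count when creating the topic, which also saves the extra work that adding partitions later brings.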
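A minimal sketch of the key-to-partition mapping, assuming it mirrors sarama's default `NewHashPartitioner` for non-nil keys (FNV-1a 32-bit hash, modulo the partition count, negative results flipped). `hashPartition` is a hypothetical stand-alone function, not sarama's API, and it does not model the random choice made for nil keys. It shows that an empty-string key always lands on the same partition, and that a key can move to another partition when the partition count changes.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// hashPartition (hypothetical) maps a non-nil key to a partition the way
// sarama's default HashPartitioner is assumed to: FNV-1a 32-bit hash of the
// key bytes, converted to int32, modulo numPartitions, made non-negative.
func hashPartition(key []byte, numPartitions int32) int32 {
	h := fnv.New32a()
	h.Write(key) // hash.Hash.Write never returns an error
	p := int32(h.Sum32()) % numPartitions
	if p < 0 {
		p = -p
	}
	return p
}

func main() {
	for _, key := range []string{"", "user-1", "user-2"} {
		fmt.Printf("key %q -> partition %d of 3, partition %d of 4\n",
			key, hashPartition([]byte(key), 3), hashPartition([]byte(key), 4))
	}
}
```

For the empty key, the hash is the FNV-1a offset basis, which maps to partition 0 out of 3 but to partition 3 out of 4: every ""-keyed message piles onto one partition, and that partition changes if the topic is expanded.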
