(这部分代码如果对你有帮助,下面这个最新的链接,说不定你更需要:【深度学习数据增强处理】imgaug Augment Polygons 对标注图片和polygons的数据增强)

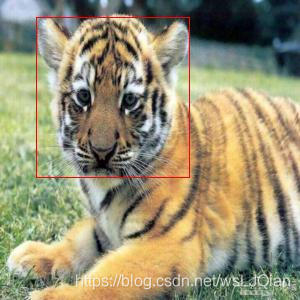

后面的所有变换,均以此为原图:

一、改变尺寸resize

import math

import random

import torch

from PIL import Image, ImageDraw

import torchvision.transforms.functional as F

def resize(img, boxes, size, max_size=1000):

w, h = img.size #(800, 600)

print('wwww',w)

print('hhhh',h)

if isinstance(size, int): #这里是按照高和宽等比例缩放,短边缩放

print('is============')

size_min = min(w, h) #这里我以输入300为例

size_max = max(w, h) #首先找到最小额一边,缩放的边是600

# 等比例缩放

sw = sh = float(size) / size_min #计算出高缩放的比例,将宽缩放到同等比例:300.0/600=0.5

print('sw', sw)

print('sh', sh)

if sw * size_max > max_size: #放置缩放过大:0.5*800=400>1000 false

sw = sh = float(max_size) / size_max

print('ifl====',sw)

ow = int(w * sw + 0.5) # 取整,800*0.5+0.5=400

print('ow',ow)

oh = int(h * sh + 0.5) # 取整,600*0.5+0.5=300

print('oh',oh)

else:

print('else============') #这里输入的就是(300,300)

ow, oh = size

print('ow',ow)

print('oh',oh)

sw = float(ow) / w # 300.0/800=0.375

print('sw', sw)

sh = float(oh) / h # 300.0/600=0.5 计算高和框的缩放比例,boxes乘以这个比例即可

print('sh', sh)

print(boxes, [sw, sh, sw, sh])

print('boxes', boxes*torch.Tensor([sw, sh, sw, sh]))

# return img.resize((ow, oh), Image.BILINEAR), boxes*torch.Tensor([sw,sh,sw,sh]) # 方式一:Image.resize

return F.resize(img, (ow, oh)), boxes*torch.Tensor([sw,sh,sw,sh]) # 方式二:torchvision

def draw(img, boxes):

draw = ImageDraw.Draw(img)

for box in boxes:

draw.rectangle(list(box), outline='red')

img.show()

def test():

img = Image.open('F:\lingjun2019\Body_Recog\Data-Augment-master\data\image/01.jpg')

boxes = torch.Tensor([[96, 34, 504, 355]])

img_resized, boxes = resize(img, boxes, (300, 300))

print(img_resized.size)

draw(img_resized, boxes)

if __name__=='__main__':

test()

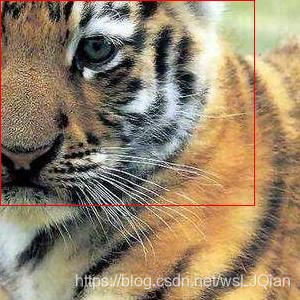

resize后的图像显示:

此外,上述简单代码参考了DETR官方案例:

1.这块参考这里:Deformable-DETR/transforms.py at main · fundamentalvision/Deformable-DETR · GitHub

2.https://github.com/facebookresearch/detectron2/blob/master/detectron2/data/transforms/transform.py

后续的内容也会以此作为参考,进行附录,希望能够对你的理解有帮助。当你有进一步的修改,比如分割时候,均能够有很好的借鉴。

import random

import torch

import torchvision

import torchvision.transforms.functional as F

def interpolate(input, size=None, scale_factor=None, mode="nearest", align_corners=None):

# type: (Tensor, Optional[List[int]], Optional[float], str, Optional[bool]) -> Tensor

"""

Equivalent to nn.functional.interpolate, but with support for empty batch sizes.

This will eventually be supported natively by PyTorch, and this

class can go away.

"""

if float(torchvision.__version__[:3]) < 0.7:

if input.numel() > 0:

return torch.nn.functional.interpolate(

input, size, scale_factor, mode, align_corners

)

output_shape = _output_size(2, input, size, scale_factor)

output_shape = list(input.shape[:-2]) + list(output_shape)

if float(torchvision.__version__[:3]) < 0.5:

return _NewEmptyTensorOp.apply(input, output_shape)

return _new_empty_tensor(input, output_shape)

else:

return torchvision.ops.misc.interpolate(input, size, scale_factor, mode, align_corners)

def resize(image, target, size, max_size=None):

# size can be min_size (scalar) or (w, h) tuple

def get_size_with_aspect_ratio(image_size, size, max_size=None):

w, h = image_size

if max_size is not None:

min_original_size = float(min((w, h)))

max_original_size = float(max((w, h)))

if max_original_size / min_original_size * size > max_size:

size = int(round(max_size * min_original_size / max_original_size))

# 有一个边,与resize后的边相同,直接返回原图大小

if (w <= h and w == size) or (h <= w and h == size):

return (h, w)

if w < h:

ow = size

oh = int(size * h / w)

else:

oh = size

ow = int(size * w / h)

return (oh, ow)

def get_size(image_size, size, max_size=None):

if isinstance(size, (list, tuple)):

return size[::-1]

else:

return get_size_with_aspect_ratio(image_size, size, max_size)

size = get_size(image.size, size, max_size)

rescaled_image = F.resize(image, size)

if target is None:

return rescaled_image, None

ratios = tuple(float(s) / float(s_orig) for s, s_orig in zip(rescaled_image.size, image.size))

ratio_width, ratio_height = ratios

target = target.copy()

if "boxes" in target:

boxes = target["boxes"]

scaled_boxes = boxes * torch.as_tensor([ratio_width, ratio_height, ratio_width, ratio_height])

target["boxes"] = scaled_boxes

if "area" in target:

area = target["area"]

scaled_area = area * (ratio_width * ratio_height)

target["area"] = scaled_area

h, w = size

target["size"] = torch.tensor([h, w])

if "masks" in target:

# 对target['masks']进行插值处理

target['masks'] = interpolate(

target['masks'][:, None].float(), size, mode="nearest")[:, 0] > 0.5

return rescaled_image, target

class RandomResize(object):

'''

T.RandomResize([600,800,1000], max_size=1333),

'''

def __init__(self, sizes, max_size=None):

assert isinstance(sizes, (list, tuple))

self.sizes = sizes

self.max_size = max_size

def __call__(self, img, target=None):

size = random.choice(self.sizes)

return resize(img, target, size, self.max_size)二、裁剪crop

裁剪这里主要就是看如何裁剪?也就是按什么方式进行裁剪。比如:

- 中心裁剪,以图像中心为基准,裁剪固定大小图像

- 随机图像大小裁剪,给定图像大小变换的范围,这样根据这个范围,去原图里面裁剪

- 随机裁剪,前两种尽管随机,但还都是有限制的,这种就是毫无约束

2.1 中心裁剪center_crop

import math

import random

import torch

from PIL import Image, ImageDraw

import torchvision.transforms.functional as F

def center_crop(img, boxes, size):

"""

1.计算定位到裁剪的区域坐标top, left, height, width

2.img.crop裁剪图像

3.原boxes-裁剪区域box,去除掉超出边界的像素块

:param img: 原图

:param boxes: 原图boxes坐标

:param size: 中心裁剪后的大小

:return:

"""

w, h = img.size

ow, oh = size

i = int(round((h - oh) / 2.)) #同样我们只需要将照片二边需要减掉的高和宽计算出来

print('i',i)

j = int(round((w - ow) / 2.))

print('j',j)

img = img.crop((j, i, j+ow, i+oh)) #利用自带的图像处理,选取图像固定位置:top, left, height, width

print(img.size)

print('bo', boxes)

boxes = boxes - torch.Tensor([j,i,j,i]) #将boxes减去就可以需要的boxes位置信息

print('boxes', boxes) # (x1,y1,x2,y2)

print(boxes[:, ::2])

boxes[:, ::2].clamp_(min=0, max=ow-1) # clamp函数是用来防止超出边界, boxes[:, ::2]=tensor([[-154., 254.]])

print('bo1', boxes)

boxes[:, 1::2].clamp_(min=0, max=oh-1)

print('bo2', boxes)

return img, boxes

def draw(img, boxes):

draw = ImageDraw.Draw(img)

for box in boxes:

draw.rectangle(list(box), outline='red')

img.show()

img.save('center_crop_300.jpg')

def test():

img = Image.open('F:\lingjun2019\Body_Recog\Data-Augment-master\data\image/01.jpg')

boxes = torch.Tensor([[96, 34, 504, 355]])

# img_resized, boxes = resize(img, boxes, (300, 300))

img_resized, boxes = center_crop(img, boxes, (300, 300))

print(img_resized.size)

draw(img_resized, boxes)

if __name__=='__main__':

test()

展示结果:

附录参考:

#######################CenterCrop###################################

import torch

import torchvision.transforms.functional as F

def crop(image, target, region):

cropped_image = F.crop(image, *region)

target = target.copy()

i, j, h, w = region

# should we do something wrt the original size?

target["size"] = torch.tensor([h, w])

fields = ["labels", "area", "iscrowd"]

if "boxes" in target:

boxes = target["boxes"]

max_size = torch.as_tensor([w, h], dtype=torch.float32)

cropped_boxes = boxes - torch.as_tensor([j, i, j, i])

cropped_boxes = torch.min(cropped_boxes.reshape(-1, 2, 2), max_size)

cropped_boxes = cropped_boxes.clamp(min=0)

area = (cropped_boxes[:, 1, :] - cropped_boxes[:, 0, :]).prod(dim=1)

target["boxes"] = cropped_boxes.reshape(-1, 4)

target["area"] = area

fields.append("boxes")

if "masks" in target:

# FIXME should we update the area here if there are no boxes?

target['masks'] = target['masks'][:, i:i + h, j:j + w] # masks截取这个区域作为新masks

fields.append("masks")

# remove elements for which the boxes or masks that have zero area

# 上面就crop修改完了,下面就是对修改后的结果,更新到coco数据库中

if "boxes" in target or "masks" in target:

# favor boxes selection when defining which elements to keep

# this is compatible with previous implementation

if "boxes" in target:

cropped_boxes = target['boxes'].reshape(-1, 2, 2)

keep = torch.all(cropped_boxes[:, 1, :] > cropped_boxes[:, 0, :], dim=1)

else:

keep = target['masks'].flatten(1).any(1)

for field in fields:

target[field] = target[field][keep]

return cropped_image, target

class CenterCrop(object):

def __init__(self, size):

self.size = size

def __call__(self, img, target):

image_width, image_height = img.size

crop_height, crop_width = self.size

crop_top = int(round((image_height - crop_height) / 2.))

crop_left = int(round((image_width - crop_width) / 2.))

return crop(img, target, (crop_top, crop_left, crop_height, crop_width))2.2 随机图像大小裁剪RandomSize_crop

########################RandomSize_crop############################

import math

import random

import torch

from PIL import Image, ImageDraw

import torchvision.transforms.functional as F

import torchvision.transforms as T

def RandomSize_crop(img, boxes, min_size: int, max_size: int):

"""

1.计算定位到裁剪的区域坐标top, left, height, width

2.img.crop裁剪图像

3.原boxes-裁剪区域box,去除掉超出边界的像素块

:param img: 原图

:param boxes: 原图boxes坐标

:param size: 随机裁剪的最小、最大尺寸

:return:

"""

w, h = img.size

w = random.randint(min_size, min(w, max_size))

h = random.randint(min_size, min(h, max_size))

print('w,h:', w, h)

# 使用torchvision.transforms.RandomCrop,获取随机尺寸

region = T.RandomCrop.get_params(img, [h, w])

i, j, oh, ow = region

print('i,j,h,w:', i,j,oh,ow)

img = img.crop((j, i, j+ow, i+oh)) #利用自带的图像处理,选取图像固定位置:top, left, height, width

print(img.size)

print('bo', boxes)

boxes = boxes - torch.Tensor([j,i,j,i]) #将boxes减去就可以需要的boxes位置信息

print('boxes', boxes) # (x1,y1,x2,y2)

print(boxes[:, ::2])

boxes[:, ::2].clamp_(min=0, max=ow-1) # clamp函数是用来防止超出边界, boxes[:, ::2]=tensor([[-154., 254.]])

print('bo1', boxes)

boxes[:, 1::2].clamp_(min=0, max=oh-1)

print('bo2', boxes)

return img, boxes

def draw(img, boxes, i):

draw = ImageDraw.Draw(img)

for box in boxes:

draw.rectangle(list(box), outline='red')

# img.show()

img.save('crop_'+str(i)+'.jpg')

def test():

img = Image.open('F:\lingjun2019\Body_Recog\Data-Augment-master\data\image/01.jpg')

boxes = torch.Tensor([[96, 34, 504, 355]])

# img_resized, boxes = resize(img, boxes, (300, 300))

# img_resized, boxes = center_crop(img, boxes, (300, 300))

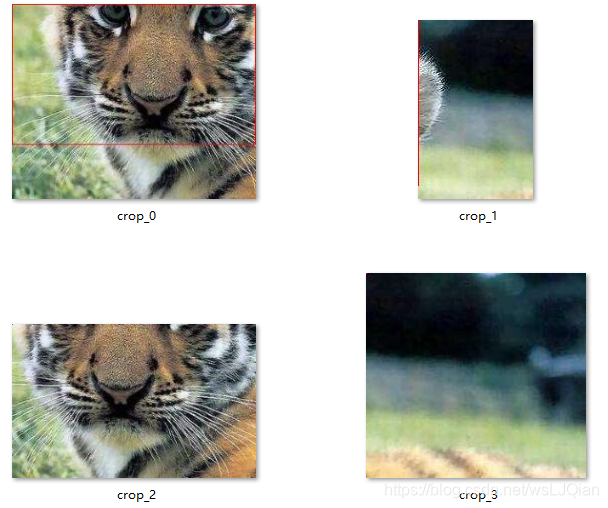

for i in range(4):

img_resized, boxes = RandomSize_crop(img, boxes, 100, 350)

print(img_resized.size)

draw(img_resized, boxes, i)

if __name__=='__main__':

test()展示结果:

附录参考:

# T.RandomSizeCrop(384, 600),

class RandomSizeCrop(object):

def __init__(self, min_size: int, max_size: int):

self.min_size = min_size

self.max_size = max_size

def __call__(self, img: PIL.Image.Image, target: dict):

w = random.randint(self.min_size, min(img.width, self.max_size))

h = random.randint(self.min_size, min(img.height, self.max_size))

region = T.RandomCrop.get_params(img, [h, w])

return crop(img, target, region)2.3 随机裁剪Random_crop

def Random_crop(img, boxes, size):

"""

1.计算定位到裁剪的区域坐标top, left, height, width

2.img.crop裁剪图像

3.原boxes-裁剪区域box,去除掉超出边界的像素块

:param img: 原图

:param boxes: 原图boxes坐标

:param size: 固定大小裁剪的size

:return:

"""

w, h = img.size

print('w,h:', w, h)

# 使用torchvision.transforms.RandomCrop,获取随机尺寸

region = T.RandomCrop.get_params(img, size)

i, j, oh, ow = region

print('i,j,h,w:', i,j,oh,ow)

img = img.crop((j, i, j+ow, i+oh)) #利用自带的图像处理,选取图像固定位置:top, left, height, width

print(img.size)

print('bo', boxes)

boxes = boxes - torch.Tensor([j,i,j,i]) #将boxes减去就可以需要的boxes位置信息

print('boxes', boxes) # (x1,y1,x2,y2)

print(boxes[:, ::2])

boxes[:, ::2].clamp_(min=0, max=ow-1) # clamp函数是用来防止超出边界, boxes[:, ::2]=tensor([[-154., 254.]])

print('bo1', boxes)

boxes[:, 1::2].clamp_(min=0, max=oh-1)

print('bo2', boxes)

return img, boxes附录参考:

class RandomCrop(object):

def __init__(self, size):

self.size = size

def __call__(self, img, target):

region = T.RandomCrop.get_params(img, self.size)

return crop(img, target, region)三、随机翻转random_flip

import math

import random

import torch

from PIL import Image, ImageDraw

import torchvision.transforms.functional as F

def random_flip(img, boxes):

if random.random() < 0.5:

# transpose有这么几种模式FLIP_LEFT_RIGHT ,FLIP_TOP_BOTTOM ,ROTATE_90 ,

# ROTATE_180 ,ROTATE_270,TRANSPOSE ,TRANSVERSE

img = img.transpose(Image.FLIP_LEFT_RIGHT) #0.5的概率随机翻转

w = img.width

# method 1

print(boxes, boxes[:, 2])

# boxes[:, 2] 取所有行,取第3列

xmin = w - boxes[:, 2] #计算左顶角的位置 高的位置都不变

xmax = w - boxes[:, 0] #计算右低角的位置

boxes[:, 0] = xmin

boxes[:, 2] = xmax

# method 2

# boxes = boxes[:, [2, 1, 0, 3]] * torch.as_tensor([-1, 1, -1, 1]) + torch.as_tensor([w, 0, w, 0])

return img, boxes

def draw(img, boxes):

draw = ImageDraw.Draw(img)

for box in boxes:

draw.rectangle(list(box), outline='red')

img.show()

img.save('flip.jpg')

def test():

img = Image.open('F:\lingjun2019\Body_Recog\Data-Augment-master\data\image/01.jpg')

boxes = torch.Tensor([[96, 34, 504, 355]])

img_resized, boxes = random_flip(img, boxes)

print(img_resized.size)

draw(img_resized, boxes)

if __name__=='__main__':

test()

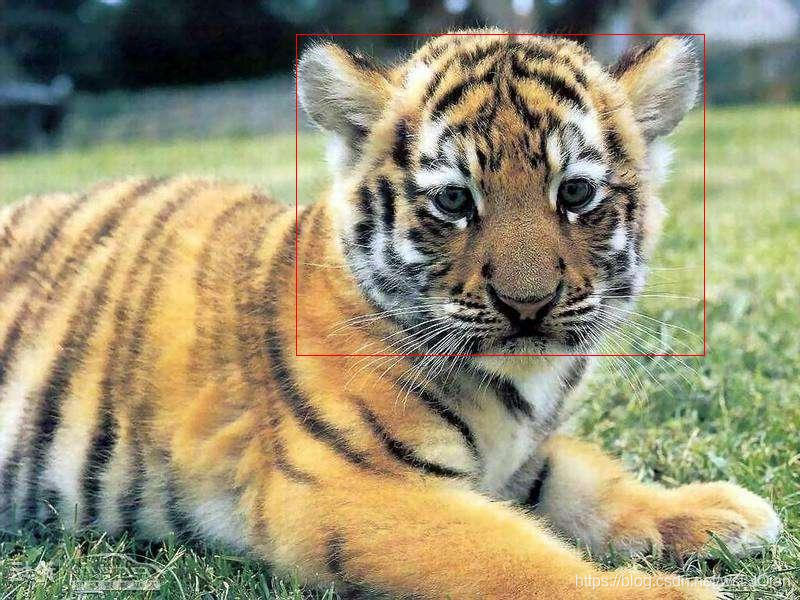

展示结果:

附录参考:

##################RandomHorizontalFlip#####################

def hflip(image, target):

flipped_image = F.hflip(image)

w, h = image.size

target = target.copy()

if "boxes" in target:

boxes = target["boxes"]

boxes = boxes[:, [2, 1, 0, 3]] * torch.as_tensor([-1, 1, -1, 1]) + torch.as_tensor([w, 0, w, 0])

target["boxes"] = boxes

if "masks" in target:

target['masks'] = target['masks'].flip(-1)

return flipped_image, target

class RandomHorizontalFlip(object):

def __init__(self, p=0.5):

self.p = p

def __call__(self, img, target):

if random.random() < self.p:

return hflip(img, target)

return img, target说明几点:

1.target['masks'] = target['masks'].flip(-1),意思是直接按列,水平对调

2.boxes[:, [2, 1, 0, 3]],所有行,按列调换

In [15]: c

Out[15]:

tensor([[1, 2, 3],

[4, 5, 6],

[7, 8, 9]], dtype=torch.int32)

In [16]: d=c.flip(-1)

In [17]: d

Out[17]:

tensor([[3, 2, 1],

[6, 5, 4],

[9, 8, 7]], dtype=torch.int32)

In [18]: d=c.flip(0)

In [19]: d

Out[19]:

tensor([[7, 8, 9],

[4, 5, 6],

[1, 2, 3]], dtype=torch.int32)

In [20]: d=c.flip(1)

In [21]: d

Out[21]:

tensor([[3, 2, 1],

[6, 5, 4],

[9, 8, 7]], dtype=torch.int32)

In [22]: f=c[:,[0,1,2]]

In [23]: f

Out[23]:

tensor([[1, 2, 3],

[4, 5, 6],

[7, 8, 9]], dtype=torch.int32)

In [24]: c

Out[24]:

tensor([[1, 2, 3],

[4, 5, 6],

[7, 8, 9]], dtype=torch.int32)

In [25]: f1=c[:,[1,0,2]]

In [26]: f1

Out[26]:

tensor([[2, 1, 3],

[5, 4, 6],

[8, 7, 9]], dtype=torch.int32)四、随机旋转Rotation

import math

import random

import torch

from PIL import Image, ImageDraw

import cv2

import numpy as np

import torchvision.transforms.functional as F

def create_rotation_matrix(angle, center, image_center, bound_w, bound_h, expand, offset=0):

center = (center[0] + offset, center[1] + offset)

rm = cv2.getRotationMatrix2D(tuple(center), angle, 1)

if expand:

# Find the coordinates of the center of rotation in the new image

# The only point for which we know the future coordinates is the center of the image

rot_im_center = cv2.transform(image_center[None, None, :] + offset, rm)[0, 0, :]

new_center = np.array([bound_w / 2, bound_h / 2]) + offset - rot_im_center

# shift the rotation center to the new coordinates

rm[:, 2] += new_center

return rm

def Rotation(img, boxes, angle, expand=True, center=None, interp=None):

w, h = img.size #(800, 600)

print('wwww',w)

print('hhhh',h)

img = np.asarray(img)

if len(img) == 0 or angle % 360 == 0:

return img, boxes

image_center = np.array((w / 2, h / 2))

if center is None:

center = image_center

if interp is None:

interp = cv2.INTER_LINEAR

abs_cos, abs_sin = (abs(np.cos(np.deg2rad(angle))), abs(np.sin(np.deg2rad(angle))))

if expand:

# find the new width and height bounds

bound_w, bound_h = np.rint(

[h * abs_sin + w * abs_cos, h * abs_cos + w * abs_sin]

).astype(int)

else:

bound_w, bound_h = w, h

rm_coords = create_rotation_matrix(angle, center, image_center, bound_w, bound_h, expand)

rm_image = create_rotation_matrix(angle, center, image_center, bound_w, bound_h, expand, offset=-0.5)

coords = np.asarray(boxes, dtype=float)

print('coords:', coords)

return cv2.warpAffine(img, rm_image, (bound_w, bound_h), flags=interp), cv2.transform(coords[:, np.newaxis, :], rm_coords)[:, 0, :]

def draw(img, boxes):

draw = ImageDraw.Draw(img)

for box in boxes:

draw.rectangle(list(box), outline='red')

# img.show()

img.save('rotate_180.jpg')

def test():

img = Image.open('F:\Data-Augment-master\data\image/01.jpg')

boxes = [[96, 34], [504, 355]]

img_rotated, coor = Rotation(img, boxes, 180)

img_rotated = Image.fromarray(np.uint8(img_rotated))

print(coor.shape)

coor_list = []

print(coor)

for i in range(coor.shape[0]):

for j in range(coor.shape[1]):

coor_list.append(coor[i][j])

print(coor_list)

draw(img_rotated, torch.Tensor(coor_list).unsqueeze_(0))

# img_rotated.show()

if __name__=='__main__':

test()

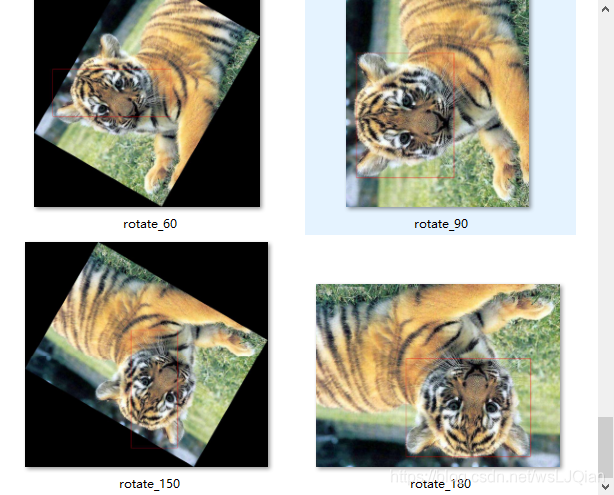

展示结果:(目前发现90,180,270,360的旋转还挺好,其他数字的旋转不太行,待改进)

附录参考:

class RotationTransform(Transform):

"""

This method returns a copy of this image, rotated the given

number of degrees counter clockwise around its center.

"""

def __init__(self, h, w, angle, expand=True, center=None, interp=None):

"""

Args:

h, w (int): original image size

angle (float): degrees for rotation

expand (bool): choose if the image should be resized to fit the whole

rotated image (default), or simply cropped

center (tuple (width, height)): coordinates of the rotation center

if left to None, the center will be fit to the center of each image

center has no effect if expand=True because it only affects shifting

interp: cv2 interpolation method, default cv2.INTER_LINEAR

"""

super().__init__()

image_center = np.array((w / 2, h / 2))

if center is None:

center = image_center

if interp is None:

interp = cv2.INTER_LINEAR

abs_cos, abs_sin = (abs(np.cos(np.deg2rad(angle))), abs(np.sin(np.deg2rad(angle))))

if expand:

# find the new width and height bounds

bound_w, bound_h = np.rint(

[h * abs_sin + w * abs_cos, h * abs_cos + w * abs_sin]

).astype(int)

else:

bound_w, bound_h = w, h

self._set_attributes(locals())

self.rm_coords = self.create_rotation_matrix()

# Needed because of this problem https://github.com/opencv/opencv/issues/11784

self.rm_image = self.create_rotation_matrix(offset=-0.5)

def apply_image(self, img, interp=None):

"""

img should be a numpy array, formatted as Height * Width * Nchannels

"""

if len(img) == 0 or self.angle % 360 == 0:

return img

assert img.shape[:2] == (self.h, self.w)

interp = interp if interp is not None else self.interp

return cv2.warpAffine(img, self.rm_image, (self.bound_w, self.bound_h), flags=interp)

def apply_coords(self, coords):

"""

coords should be a N * 2 array-like, containing N couples of (x, y) points

"""

coords = np.asarray(coords, dtype=float)

if len(coords) == 0 or self.angle % 360 == 0:

return coords

return cv2.transform(coords[:, np.newaxis, :], self.rm_coords)[:, 0, :]

def apply_segmentation(self, segmentation):

segmentation = self.apply_image(segmentation, interp=cv2.INTER_NEAREST)

return segmentation

def create_rotation_matrix(self, offset=0):

center = (self.center[0] + offset, self.center[1] + offset)

rm = cv2.getRotationMatrix2D(tuple(center), self.angle, 1)

if self.expand:

# Find the coordinates of the center of rotation in the new image

# The only point for which we know the future coordinates is the center of the image

rot_im_center = cv2.transform(self.image_center[None, None, :] + offset, rm)[0, 0, :]

new_center = np.array([self.bound_w / 2, self.bound_h / 2]) + offset - rot_im_center

# shift the rotation center to the new coordinates

rm[:, 2] += new_center

return rm

def inverse(self):

"""

The inverse is to rotate it back with expand, and crop to get the original shape.

"""

if not self.expand: # Not possible to inverse if a part of the image is lost

raise NotImplementedError()

rotation = RotationTransform(

self.bound_h, self.bound_w, -self.angle, True, None, self.interp

)

crop = CropTransform(

(rotation.bound_w - self.w) // 2, (rotation.bound_h - self.h) // 2, self.w, self.h

)

return TransformList([rotation, crop])

五、总结:具体使用

上述都是一个个具体的增强模型,究竟具体到加入数据变换里面,可以参考下面的案例。仅做参考哦

def __getitem__(self, idx):

boxes = self.boxes[idx].clone()

labels = self.labels[idx]

size = self.input_size

# Data augmentation.

if self.train:

img, boxes = random_flip(img, boxes)

img, boxes = random_crop(img, boxes)

img, boxes = resize(img, boxes, (size,size))

else:

img, boxes = resize(img, boxes, size)

img, boxes = center_crop(img, boxes, (size,size))

img = self.transform(img)

return img, boxes, labels

最后,如果您觉得本篇文章对你有帮助,欢迎点赞,让更多人看到,这是对我继续写下去的鼓励。如果能再点击下方的红包打赏,给博主来一杯咖啡,那就太好了。

本文深入探讨了深度学习中用于图像和目标检测的数据增强技术,包括等比例缩放、中心裁剪、随机大小裁剪和随机翻转。通过这些方法,可以增加模型的泛化能力,防止过拟合。文中还提供了详细的代码示例,如resize函数考虑了保持图像比例并限制最大尺寸,center_crop函数用于中心裁剪,RandomSize_crop实现了随机大小裁剪,random_flip函数则实现了随机水平翻转。此外,还介绍了旋转操作,包括旋转矩阵的创建和应用。这些技术对于构建高性能的深度学习模型至关重要。

本文深入探讨了深度学习中用于图像和目标检测的数据增强技术,包括等比例缩放、中心裁剪、随机大小裁剪和随机翻转。通过这些方法,可以增加模型的泛化能力,防止过拟合。文中还提供了详细的代码示例,如resize函数考虑了保持图像比例并限制最大尺寸,center_crop函数用于中心裁剪,RandomSize_crop实现了随机大小裁剪,random_flip函数则实现了随机水平翻转。此外,还介绍了旋转操作,包括旋转矩阵的创建和应用。这些技术对于构建高性能的深度学习模型至关重要。

2069

2069

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?