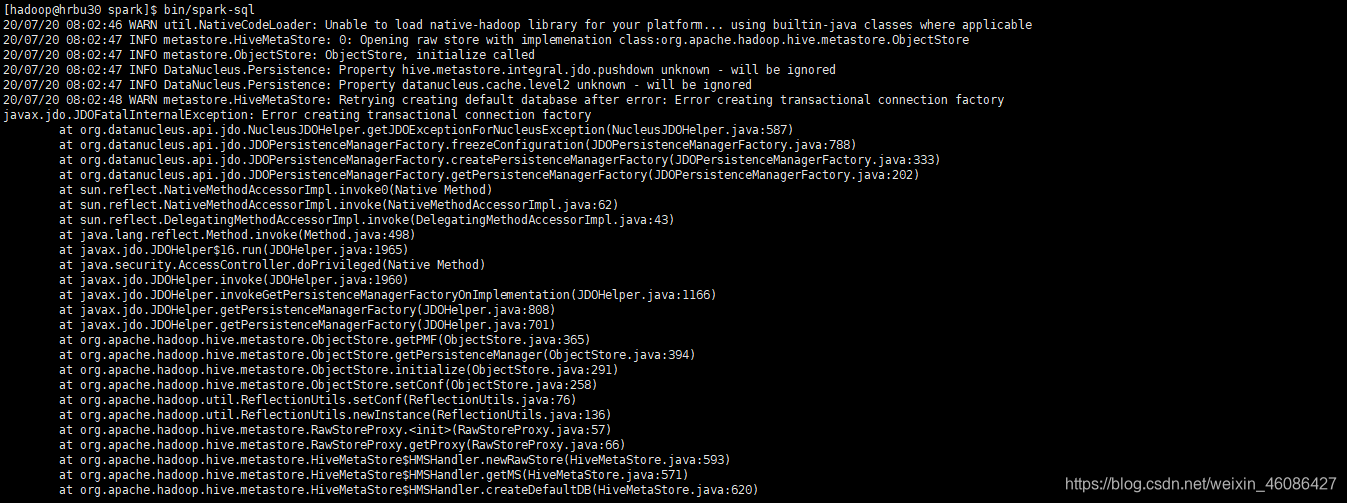

Starting Spark SQL fails with the following error:

20/07/20 08:02:48 WARN metastore.HiveMetaStore: Retrying creating default database after error: Error creating transactional connection factory

javax.jdo.JDOFatalInternalException: Error creating transactional connection factory

NestedThrowablesStackTrace:

java.lang.reflect.InvocationTargetException

Caused by: org.datanucleus.exceptions.NucleusException: Attempt to invoke the "BONECP" plugin to create a ConnectionPool gave an error : The specified datastore driver ("com.mysql.jdbc.Driver") was not found in the CLASSPATH. Please check your CLASSPATH specification, and the name of the driver.

Caused by: org.datanucleus.store.rdbms.connectionpool.DatastoreDriverNotFoundException: The specified datastore driver ("com.mysql.jdbc.Driver") was not found in the CLASSPATH. Please check your CLASSPATH specification, and the name of the driver.

Cause:

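Spark and Hive are configured to use MySQL as the metastore database, so Spark needs the MySQL JDBC driver on its classpath. The mysql-connector jar was missing from Spark, so the driver class com.mysql.jdbc.Driver could not be loaded.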

Solution:

Copy mysql-connector-java-5.1.46-bin.jar from Hive's lib directory into Spark's jars directory, and the problem is solved:

[hadoop@hrbu30 lib]$ cp /opt/wdp/hive/lib/mysql-connector-java-5.1.46-bin.jar /opt/wdp/spark/jars/
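If the same fix has to be applied on several nodes, the copy can be wrapped in a small script. The sketch below is a hypothetical helper (the function name `copy_mysql_connector` is not from the original post); it assumes the connector jar in Hive's lib directory matches the pattern `mysql-connector-java-*.jar`, copies the first match, and fails loudly if no such jar exists instead of silently doing nothing.

```shell
#!/bin/sh
# Hypothetical helper (not from the original post): copy the first
# mysql-connector-java-*.jar found in Hive's lib dir into Spark's jars dir.
copy_mysql_connector() {
  hive_lib_dir="$1"
  spark_jars_dir="$2"
  for connector_jar in "$hive_lib_dir"/mysql-connector-java-*.jar; do
    # If the glob matched nothing, the loop sees the literal pattern; skip it.
    [ -e "$connector_jar" ] || continue
    cp "$connector_jar" "$spark_jars_dir"/ || return 1
    echo "copied $connector_jar -> $spark_jars_dir/"
    return 0
  done
  echo "no mysql-connector-java-*.jar found in $hive_lib_dir" >&2
  return 1
}

# Usage with the paths from this post:
# copy_mysql_connector /opt/wdp/hive/lib /opt/wdp/spark/jars
```

Spark loads the jars in its jars directory when the JVM starts, so restart spark-sql after copying for the driver to be picked up.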
