I. Set Up the Virtual Machines

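Create three virtual machines and name them master1, slave1, and slave2. The package mirrors used below are for Ubuntu 18.04 (bionic).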

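II. Map Host Names to IP Addresses

Do the following on each of the three machines.

ifconfig   # look up this machine's IP address (use ip addr if ifconfig is not installed)

sudo vi /etc/hosts   # add each host name and its IP

# Add: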

192.168.43.176 master1

192.168.43.133 slave1

192.168.43.68 slave2
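If you would rather not edit /etc/hosts by hand on every machine, the entries above can be appended with a small helper. This is a minimal sketch: add_host_entry is a hypothetical name, and it assumes plain host names without regex special characters. It skips a name that is already mapped, so running it twice does not create duplicate lines.

```bash
#!/usr/bin/env bash
# Hypothetical helper: add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless NAME already appears as a host name
# on a non-comment line.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  # Whole-word match after whitespace, ignoring commented-out lines
  if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Writing /etc/hosts needs root, so on each machine run it from a root shell, for example add_host_entry /etc/hosts 192.168.43.176 master1, and likewise for slave1 and slave2.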

# Verify (repeat for master1 and slave2):

ping slave1

III. Passwordless SSH

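# Run all of the following from the home directory (~) on master1:

ssh-keygen   # press Enter at every prompt to accept the defaults

# Copy the public key to every VM (master1 included):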

ssh-copy-id master1

ssh-copy-id slave1

ssh-copy-id slave2
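To confirm that key-based login works for all three hosts at once, a loop like the following can help. It is a sketch; check_passwordless is a hypothetical name. It relies on the standard ssh options BatchMode=yes, which makes ssh fail instead of prompting for a password, and ConnectTimeout.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check_passwordless HOST...
# Runs `hostname` on each host over SSH without allowing password prompts.
# Prints "OK host" or "FAIL host"; returns non-zero if any host failed.
check_passwordless() {
  local host rc=0
  for host in "$@"; do
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" hostname >/dev/null 2>&1; then
      echo "OK $host"
    else
      echo "FAIL $host"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage on master1: check_passwordless master1 slave1 slave2. A FAIL line usually means ssh-copy-id has not been run for that host yet.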

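# Verify:

ssh slave1   # no password prompt; the shell prompt should now show slave1

exit   # log out and return to master1

IV. Set Up the JDK Environment

Do this part on all three machines: every Spark node needs its own JDK.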

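1. Switch the APT mirror

# Back up the original sources list

sudo mv /etc/apt/sources.list /etc/apt/sources.list.backup

# Create a new sources list

sudo vi /etc/apt/sources.list

# Add one of the two mirror sets below (one is enough):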

# Aliyun mirror

deb http://mirrors.aliyun.com/ubuntu/ bionic main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ bionic-security main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ bionic-updates main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ bionic-proposed main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ bionic-backports main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ bionic main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ bionic-security main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ bionic-updates main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ bionic-proposed main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ bionic-backports main restricted universe multiverse

# Tsinghua University (TUNA) mirror

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-updates main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-backports main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-security main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-proposed main restricted universe multiverse

deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic main restricted universe multiverse

deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-updates main restricted universe multiverse

deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-backports main restricted universe multiverse

deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-security main restricted universe multiverse

deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-proposed main restricted universe multiverse

# Save and quit, then refresh the package index

sudo apt-get update
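Both mirror sets follow the same pattern: deb and deb-src lines for the release and its -security, -updates, -proposed and -backports pockets. The file can therefore also be generated. A minimal sketch; gen_sources is a hypothetical helper that emits the pockets in the same order as the Aliyun list above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: gen_sources MIRROR_URL CODENAME
# Prints deb and deb-src lines for the release and its update pockets.
gen_sources() {
  local mirror=$1 codename=$2 type suffix
  for type in deb deb-src; do
    # "" is the release pocket itself (e.g. plain "bionic")
    for suffix in "" -security -updates -proposed -backports; do
      echo "$type $mirror $codename$suffix main restricted universe multiverse"
    done
  done
}
```

Usage: gen_sources http://mirrors.aliyun.com/ubuntu/ "$(lsb_release -cs)" | sudo tee /etc/apt/sources.list. The -proposed pocket carries pre-release updates; remove it from the loop if you do not want them.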

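2. Check the available JDK versions

If version 8 is listed here, you can install it directly with apt. If it is not, you will need to upload a JDK archive from outside; in that case skip to step 6.

# Run from any directory (e.g. /etc/apt):

apt-cache search jdk | grep openjdk   # the "| grep openjdk" filter is optional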
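The decision in this step (apt install in step 3, or an uploaded archive in step 6) can be scripted by looking for the openjdk-8-jdk line in the search output. A sketch; next_jdk_step is a hypothetical name, and it assumes apt-cache search prints one "package - description" line per package.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads `apt-cache search` output on stdin and
# says which step of this guide to follow next.
next_jdk_step() {
  # Exact package name followed by " - ", so openjdk-8-jdk-headless does not count
  if grep -q '^openjdk-8-jdk - '; then
    echo "openjdk-8-jdk found: install it with apt (step 3)"
  else
    echo "openjdk-8-jdk not found: upload a JDK archive (step 6)"
  fi
}
```

Usage: apt-cache search openjdk | next_jdk_step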

3. Install the JDK

# Install JDK 8 and its source package

sudo apt-get install openjdk-8-jdk openjdk-8-source

4. Verify

Return to the home directory (~) and check the version:

java -version
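Spark 2.4.x runs on Java 8, so it is worth checking the major version rather than only that java starts. java -version writes to stderr, and Java 8 reports itself as 1.8.0_xxx while later releases use 11.0.x and so on. A minimal sketch; java_major is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: java_major "OUTPUT OF java -version"
# Prints the Java major version (8 for "1.8.0_252", 11 for "11.0.7").
java_major() {
  local v
  # Take the quoted version string from the first matching line
  v=$(printf '%s\n' "$1" | sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
  case $v in
    1.*) v=${v#1.}; echo "${v%%.*}" ;;   # legacy scheme: 1.8.0_x -> 8
    *)   echo "${v%%[.+-]*}" ;;          # modern scheme: 11.0.7 -> 11
  esac
}
```

Usage: java_major "$(java -version 2>&1)" should print 8.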

# Locate java (which and whereis print the path; man java opens its manual page)

which java

whereis java

man java

5. Configure the Java environment

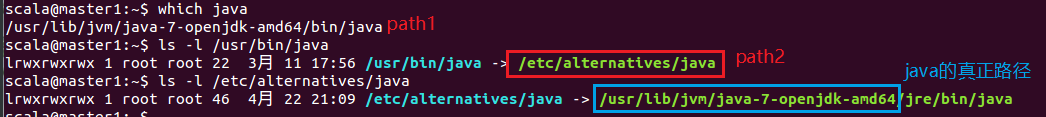

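Run which java to find where the java symlink lives; call that path path1.

ls -l path1   # shows the target it links to; call that path2

ls -l path2   # repeat until the target is no longer a symlink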
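The ls -l chain above can be collapsed with readlink -f (GNU coreutils, as shipped with Ubuntu), which follows every symlink to the final file. The helper below is a sketch; java_home_from is a hypothetical name, and it assumes the usual JDK layout where java lives at JAVA_HOME/bin/java or JAVA_HOME/jre/bin/java.

```bash
#!/usr/bin/env bash
# Hypothetical helper: java_home_from PATH_TO_JAVA
# Resolves all symlinks, then strips /bin/java and an optional trailing /jre.
java_home_from() {
  local real
  real=$(readlink -f "$1") || return 1
  real=${real%/bin/java}
  real=${real%/jre}
  echo "$real"
}
```

Usage: java_home_from "$(which java)". For the apt-installed OpenJDK 8 on amd64 this typically prints /usr/lib/jvm/java-8-openjdk-amd64.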

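Once you reach the real binary, set the environment variables. JAVA_HOME is the JDK directory above bin/java (and above jre/ if present): for example, if the final target is /usr/lib/jvm/java-8-openjdk-amd64/jre/bin/java, JAVA_HOME is /usr/lib/jvm/java-8-openjdk-amd64.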

vi ~/.bashrc   # this is your own file, so sudo is not needed

# Add:

export JAVA_HOME="/usr/lib/jvm/java-8-openjdk-amd64"

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=${JAVA_HOME}/bin:$PATH

# Save and quit

# Load the new variables into the current shell

source ~/.bashrc

# Verify: the following command should print a non-empty path

echo $JAVA_HOME
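Appending the block by hand is easy to do twice, or to mistype (the CLASSPATH line needs ${JAVA_HOME}, with the $). The sketch below writes the same four export lines once; add_java_env and java_env_lines are hypothetical names, and any existing "export JAVA_HOME=" line is treated as already configured.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the export lines used in this tutorial.
java_env_lines() {
  # Unquoted heredoc: $1 is expanded now; \${...} and \$PATH stay literal
  # so they are expanded later, when the shell reads ~/.bashrc.
  cat <<EOF
export JAVA_HOME="$1"
export JRE_HOME=\${JAVA_HOME}/jre
export CLASSPATH=.:\${JAVA_HOME}/lib:\${JRE_HOME}/lib
export PATH=\${JAVA_HOME}/bin:\$PATH
EOF
}

# Hypothetical helper: add_java_env RCFILE JAVA_HOME
# Appends the block only if RCFILE does not already export JAVA_HOME.
add_java_env() {
  local rc=$1 home=$2
  if grep -q '^export JAVA_HOME=' "$rc" 2>/dev/null; then
    return 0
  fi
  java_env_lines "$home" >> "$rc"
}
```

Usage: add_java_env ~/.bashrc /usr/lib/jvm/java-8-openjdk-amd64 && source ~/.bashrc. For an uploaded JDK, pass the directory printed by pwd instead.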

Once this is done, skip straight to Part V, Configure Spark.

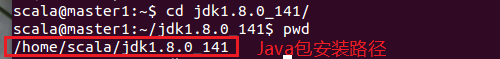

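6. Extract the uploaded JDK

tar zxvf <JDK archive name>

# Enter the extracted JDK directory

cd <extracted JDK directory>

# Print its full path; use it as JAVA_HOME below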

pwd
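If you do not know the name of the extracted directory in advance, it can be read from the archive listing before extracting. A sketch; archive_topdir is a hypothetical name, and it assumes the archive has a single top-level directory, as JDK tarballs typically do.

```bash
#!/usr/bin/env bash
# Hypothetical helper: archive_topdir ARCHIVE.tar.gz
# Prints the first path component of the first entry in the archive.
archive_topdir() {
  # awk reads the whole listing (no broken pipe for tar) and prints only line 1
  tar tzf "$1" | awk -F/ 'NR == 1 { print $1 }'
}
```

Usage: with a set to the archive name, tar zxvf "$a" && cd "$(archive_topdir "$a")" && pwd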

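7. Configure the Java environment

vi ~/.bashrc   # this is your own file, so sudo is not needed

# Add (replace the JAVA_HOME value with the path printed by pwd):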

export JAVA_HOME="/home/scala/jdk1.8.0_141"

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=${JAVA_HOME}/bin:$PATH

# Save and quit

# Load the new variables into the current shell

source ~/.bashrc

# Verify: the following command should print a non-empty path

echo $JAVA_HOME

V. Configure Spark

1. Download Spark

URL: https://spark.apache.org/downloads.html (older releases such as 2.4.5 are kept at https://archive.apache.org/dist/spark/)
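On a server without a browser, the same archive can be fetched with wget. The sketch below only builds the download URL, assuming the Apache archive layout https://archive.apache.org/dist/spark/spark-VERSION/spark-VERSION-bin-hadoopHADOOP.tgz used for past releases; spark_url is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: spark_url SPARK_VERSION HADOOP_VERSION
# Builds the Apache archive URL for a prebuilt Spark binary package.
spark_url() {
  local ver=$1 hadoop=$2
  echo "https://archive.apache.org/dist/spark/spark-${ver}/spark-${ver}-bin-hadoop${hadoop}.tgz"
}
```

Usage: wget "$(spark_url 2.4.5 2.7)"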

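2. Extract

tar zxvf spark-2.4.5-bin-hadoop2.7.tgz

3. Copy the archive to slave1 and slave2 and extract it there

scp spark-2.4.5-bin-hadoop2.7.tgz slave1:~/

scp spark-2.4.5-bin-hadoop2.7.tgz slave2:~/

On each slave, extract it the same way as on master1, so that Spark sits at the same path on every node.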
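Since passwordless SSH is already set up, copying and extracting can be driven from master1 in one loop. A minimal sketch; distribute_archive is a hypothetical name. "scp FILE HOST:" with an empty remote path copies into the remote home directory, and the remote tar runs there too, so Spark ends up at ~/spark-2.4.5-bin-hadoop2.7 on each slave, matching master1.

```bash
#!/usr/bin/env bash
# Hypothetical helper: distribute_archive ARCHIVE HOST...
# Copies ARCHIVE to each host's home directory and extracts it there.
distribute_archive() {
  local archive=$1 host
  shift
  for host in "$@"; do
    # Empty path after ":" means the remote user's home directory
    scp "$archive" "$host:" || return 1
    # Remote commands start in the home directory, where the archive now is
    ssh "$host" tar zxf "$(basename "$archive")" || return 1
  done
}
```

Usage: distribute_archive spark-2.4.5-bin-hadoop2.7.tgz slave1 slave2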

4. Configure Spark

cd spark-2.4.5-bin-hadoop2.7/conf/

# Copy spark-env.sh.template to spark-env.sh and edit the copy

cp spark-env.sh.template spark-env.sh

vi spark-env.sh

# Add:

SPARK_MASTER_HOST="192.168.43.176"

SPARK_MASTER_PORT="7077"

SPARK_WORKER_CORES="1"
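The same settings can be written non-interactively, which makes it easy to produce an identical file for the slaves. This is a sketch; write_spark_env is a hypothetical name, and it overwrites conf/spark-env.sh. The optional JAVA_HOME line is an addition not in the original steps: daemons started over non-interactive SSH may not pick up the exports in ~/.bashrc (Ubuntu's default ~/.bashrc returns early for non-interactive shells), so pinning JAVA_HOME in spark-env.sh can avoid JAVA_HOME errors on the workers.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write_spark_env CONF_DIR MASTER_IP [JAVA_HOME]
# Writes CONF_DIR/spark-env.sh with the settings from this step.
write_spark_env() {
  local conf_dir=$1 master_ip=$2 java_home=$3
  {
    echo "SPARK_MASTER_HOST=\"$master_ip\""
    echo 'SPARK_MASTER_PORT="7077"'
    echo 'SPARK_WORKER_CORES="1"'
    # Assumption, not part of the original steps: pin JAVA_HOME for the daemons
    if [ -n "$java_home" ]; then
      echo "JAVA_HOME=\"$java_home\""
    fi
  } > "$conf_dir/spark-env.sh"
}
```

Usage: write_spark_env ~/spark-2.4.5-bin-hadoop2.7/conf 192.168.43.176 /usr/lib/jvm/java-8-openjdk-amd64, then scp the resulting file into the same conf directory on slave1 and slave2.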

# Copy slaves.template to slaves and edit the copy

cp slaves.template slaves

vi slaves

# Add one worker host per line:

master1

slave1

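slave2

Copy conf/spark-env.sh to the same directory on slave1 and slave2 as well, because each worker reads its own local copy (for example SPARK_WORKER_CORES).

5. Start Spark

Return to the spark-2.4.5-bin-hadoop2.7 directory:

cd ~/spark-2.4.5-bin-hadoop2.7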

# Start the master and all workers

./sbin/start-all.sh

# Check that the daemons are running

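jps   # lists running Java processes: master1 should show Master and Worker, each slave a Worker

ps aux | grep java   # alternative if jps is not available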
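A small helper can turn the jps listing into a pass/fail result on each node. A sketch; has_daemons is a hypothetical name, and it assumes jps prints "PID ClassName" lines, which is its default output.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads `jps` output on stdin and checks that every
# class name given as an argument is present. Prints the first missing one.
has_daemons() {
  local out name
  out=$(cat)   # read the whole jps listing from stdin
  for name in "$@"; do
    # -w: match the class name as a whole word
    if ! printf '%s\n' "$out" | grep -qw "$name"; then
      echo "missing: $name"
      return 1
    fi
  done
}
```

Usage: jps | has_daemons Master Worker on master1, and jps | has_daemons Worker on each slave.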

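6. Try the web UI

Open http://192.168.43.176:8080 in a browser (8080 is the standalone master's default web UI port); master1, slave1 and slave2 should be listed as workers in the ALIVE state.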

VI. Run an Example

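From the spark-2.4.5-bin-hadoop2.7 directory, run:

./bin/run-example --master spark://192.168.43.176:7077 SparkPi

The output should contain a line beginning with "Pi is roughly", followed by an estimate close to 3.14.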
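To check the result from a script, note that Spark writes its log messages to stderr by default, while SparkPi prints its answer on stdout. The sketch below extracts the estimate; pi_estimate is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads SparkPi's stdout and prints the number from
# its "Pi is roughly <value>" line.
pi_estimate() {
  sed -n 's/^Pi is roughly \([0-9.]*\).*/\1/p'
}
```

Usage: ./bin/run-example --master spark://192.168.43.176:7077 SparkPi 2>/dev/null | pi_estimate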

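This article walks through setting up a Spark cluster on three virtual machines: configuring the VMs, setting up passwordless SSH login, installing the JDK, downloading and configuring Spark, and running an example program on the cluster.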
