【DataX】使用案例和原理分析

【一】DataX概述

DataX是阿里巴巴开源的一个异构数据源(多种不同数据源)离线同步工具,用于实现包括关系型数据库(MySQL、Oracle等)、HDFS、Hive、ODPS、HBase、FTP等各种异构数据源之间稳定高效的数据同步功能。

支持的数据源如下:

【二】DataX架构原理

【1】设计理念

为了解决异构数据源同步问题,DataX把复杂的网状的同步链路变成了星型数据链路,DataX作为重甲传输载体负责连接各种数据源。当需要接入一个新的数据源的时候,只需要把此数据源对接到DataX,就能跟已有的数据源做到无缝数据同步。

【2】框架设计

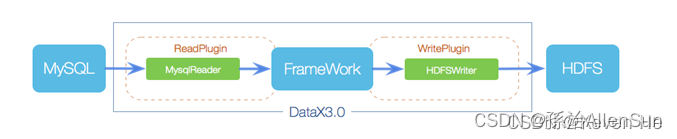

ataX 采用了框架和插件分离的架构设计,整体上由核心框架(DataX Core)和各种数据源插件(Reader 和 Writer)组成,把数据源读取和写入抽象成Reader/Writer插件,纳入到整个同步框架中。

(1)Reader:数据采集模块,负责采集数据源的数据,把数据发给Framework

(2)Writer:数据写入模块,负责不断地向Framework取数据,并把数据写入到目的端

(3)Framework:用于连接Reader和Writer,作为两者的数据传输通道,并处理缓存、流控、并发、数据转换等核心问题

其架构主要分为三层:

(1)调度层:负责任务的调度和资源分配,根据配置文件将同步任务拆分成多个子任务,并分配到合适的执行节点上。

(2)核心框架层:实现了数据同步的核心逻辑,包括任务切分、数据传输、状态监控等。

(3)插件层:包含各种数据源的 Reader 和 Writer 插件,负责从源数据源读取数据和将数据写入目标数据源。

【3】核心组件及功能

(1)DataX Core

DataX Core 是 DataX 的核心框架,主要负责以下几个方面的工作:

(1)任务调度:

根据配置文件中的任务信息,将大的同步任务拆分成多个小的子任务,并分配到不同的线程或进程中执行。

(2)数据传输:

负责在 Reader 和 Writer 之间进行数据的传输,采用了流式处理的方式,避免了大量数据在内存中的缓存,减少了内存压力。

(3)状态监控:

实时监控任务的执行状态,包括任务的开始时间、结束时间、处理数据量等,并将这些信息反馈给用户。

(4)异常处理:

在任务执行过程中,如果出现异常情况,能够及时捕获并进行相应的处理,保证任务的可靠性。

(2)Reader 插件

Reader 插件负责从源数据源读取数据,不同的数据源有不同的 Reader 插件实现。例如,对于 MySQL 数据源,有 MySQLReader 插件;对于 HDFS 数据源,有 HdfsReader 插件。Reader 插件的主要工作流程如下:

(1)初始化:根据配置文件中的信息,初始化与源数据源的连接。

(2)数据读取:从源数据源中读取数据,并将数据封装成 DataX 内部的数据格式(Record)。

(3)数据发送:将读取到的数据发送给 DataX Core,由 Core 负责将数据传输到 Writer 插件。

(3)Writer 插件

Writer 插件负责将数据写入目标数据源,同样,不同的数据源有不同的 Writer 插件实现。例如,对于 MySQL 数据源,有 MySQLWriter 插件;对于 HDFS 数据源,有 HdfsWriter 插件。Writer 插件的主要工作流程如下:

(1)初始化:根据配置文件中的信息,初始化与目标数据源的连接。

(2)数据接收:从 DataX Core 接收数据(Record)。

(3)数据写入:将接收到的数据写入目标数据源。

【4】运行流程

用一个DataX作业生命周期的时序图说明DataX的运行流程、核心概念和每个概念之间的关系

(1)组件介绍

(1)Job:单个作业同步的作业,称为一个job,一个job启动一个进程

(2)Task:根据不同数据源切分策略,一个job会切分为多个task,task是DataX作业的最小单元,每个Task负责一部分数据的同步工作。

(3)TaskGroup:Scheduler调度模块对Task进行分组,每个Task组叫做一个Task Group,每个Task Group负责以一定的并发度运行其分得得Task,单个Task Group的并发度为5。

(4)Reader->Channel->Writer:每个Task启动后,都会固定启动Reader->Channel->Writer的线程来完成同步工作。

(2)数据传输流程

DataX 采用了流式处理的方式进行数据传输,具体过程如下:

(1)任务切分

DataX Core 根据配置文件中的信息,将大的同步任务拆分成多个小的子任务。例如,对于一个大的表数据同步任务,可以按照表的主键范围进行切分,每个子任务负责同步一部分数据。

(2)内存队列

DataX 核心框架在 Reader 插件和 Writer 插件之间使用内存队列(RecordChannel)来实现数据的传输。RecordChannel 本质上是一个基于内存的数据缓冲区,它的主要作用是解耦 Reader 和 Writer,使得它们可以独立地进行数据的读取和写入操作。

在这个过程中,核心框架会监控 RecordChannel 的状态,确保数据的传输稳定和高效。

(3)Reader 读取数据:

每个子任务对应的 Reader 插件从源数据源中读取数据,并将读取到的数据封装成 Record 对象,然后将 Record 对象放入 RecordChannel 中。这一过程就像是一个生产者不断地将数据生产出来并放入缓冲区。

(4)数据传输:

Reader 插件将 Record 对象发送给 DataX Core,Core 通过内存队列将数据传输给 Writer 插件。

(5)Writer 写入数据:

Writer 插件则从 RecordChannel 中获取 Record 对象,并将这些数据写入目标数据源。这类似于一个消费者从缓冲区中取出数据进行消费。

(6)任务合并:

当所有子任务都执行完毕后,DataX Core 将子任务的执行结果进行合并,并将最终的执行结果反馈给用户。

【5】调度决策思路

例子:用户提交了一个DataX作业,并且配置了总的并发度为20,目的是对一个有100张分表的mysql数据源进行同步。DataX的调度决策思路是:

(1)DataX Job根据分库分表切分策略,将同步工作分成100个Task。

(2)根据配置的总的并发度20,以及每个Task Group的并发度5,DataX计算共需要分配4个TaskGroup。

(3)4个TaskGroup平分100个Task,每一个TaskGroup负责运行25个Task。

【6】DataX和Sqoop对比

【7】总结:datax为什么快

DataX 通过框架和插件分离的架构设计,实现了多种不同数据源之间的数据同步。其核心框架负责任务调度、数据传输和状态监控等工作,而各种数据源的 Reader 和 Writer 插件则负责具体的数据读取和写入操作。通过流式处理的方式,DataX 能够高效地处理大量数据的同步任务。

(1)架构设计层面

(1)框架与插件分离:DataX 采用了框架和插件分离的架构。核心框架专注于数据同步的通用逻辑,如任务调度、数据传输和状态监控等;而各种数据源的 Reader 和 Writer 插件则负责具体的数据读写操作。这种设计使得 DataX 能够方便地扩展支持新的数据源,同时也提高了代码的可维护性和复用性。不同数据源的读写逻辑可以独立开发和优化,不会相互影响,从而提升了整体的同步效率。

(2)分布式架构支持:DataX 可以在分布式环境下运行,通过将大的同步任务拆分成多个小的子任务,并分配到不同的节点上并行执行,充分利用了集群的计算资源,大大缩短了数据同步的时间。例如,对于一个大数据量的表同步任务,可以按照数据的范围或分区将任务切分成多个子任务,每个子任务在不同的节点上同时进行数据读取和写入操作,从而实现高效的并行处理。

(1)数据处理层面

(1)流式处理:DataX 采用流式处理的方式进行数据传输,即读取一条数据就传输一条数据,而不是将所有数据都缓存到内存中再进行处理。这种方式避免了大量数据在内存中的缓存,减少了内存压力,同时也降低了数据处理的延迟。例如,在从一个大文件中读取数据并写入到数据库的过程中,DataX 可以一边读取文件中的数据,一边将数据写入到数据库,而不需要将整个文件加载到内存中。

(2)数据批量处理:在数据写入目标数据源时,DataX 支持批量写入操作。通过将多条数据合并成一个批次进行写入,可以减少与目标数据源的交互次数,提高写入效率。例如,在向 MySQL 数据库写入数据时,DataX 可以将多条记录合并成一个 SQL 语句进行批量插入,从而减少了数据库的事务开销和网络传输开销。

(1)资源管理层面

(1)资源合理分配:DataX 核心框架可以根据任务的配置和系统资源的使用情况,合理分配资源给各个子任务。例如,可以根据数据量的大小和数据源的性能,动态调整每个子任务的并发度,确保系统资源得到充分利用,同时避免资源的过度竞争。

(2)状态监控与错误处理:DataX 实时监控任务的执行状态,包括任务的开始时间、结束时间、处理数据量等,并在出现异常情况时能够及时捕获并进行相应的处理。这种机制可以保证任务的可靠性,避免因局部错误导致整个任务失败,从而提高了数据同步的效率。

(1)数据传输层面

(1)高效的数据传输协议:DataX 在数据传输过程中采用了高效的数据传输协议,减少了数据传输的开销。同时,它还支持数据压缩和加密等功能,可以进一步提高数据传输的效率和安全性。

(2)内存队列优化:DataX 内部使用内存队列来缓冲数据,通过优化内存队列的设计和管理,确保数据在 Reader 和 Writer 之间能够快速、稳定地传输,避免了数据传输过程中的瓶颈。

【三】DataX部署

1)下载DataX安装包并上传到hadoop102的/opt/software

下载地址:http://datax-opensource.oss-cn-hangzhou.aliyuncs.com/datax.tar.gz

2)解压datax.tar.gz到/opt/module tar -zxvf datax.tar.gz -C /opt/module

3)自检,执行如下命令python /opt/module/datax/bin/datax.py /opt/module/datax/job/job.json

出现如下内容,则表明安装成功

……

2021-10-12 21:51:12.335 [job-0] INFO JobContainer -

任务启动时刻 : 2021-10-12 21:51:02

任务结束时刻 : 2021-10-12 21:51:12

任务总计耗时 : 10s

任务平均流量 : 253.91KB/s

记录写入速度 : 10000rec/s

读出记录总数 : 100000

读写失败总数 : 0

【四】DataX上手

【1】使用概述

任务提交命令:用户需要根据同步数据的数据源和目的地选择相应的Reader和Writer,并将Reader和Writer的信息配置在一个json文件中,然后执行命令提交数据同步任务即可。python bin/datax.py path/to/your/job.json

【2】配置文件格式

查看DataX配置文件模板:python bin/datax.py -r mysqlreader -w hdfswriter

json最外层是一个job,job包含setting和content两部分,其中setting用于对整个job进行配置,content用户配置数据源和目的地。

Reader和Writer的具体参数参考官方文档:

https://github.com/alibaba/DataX/blob/master/README.md

https://gitee.com/mirrors/DataX/blob/master/README.md

【3】同步Mysql数据到HDFS

使用一个栗子来完成同步MySQL数据->HDFS的应用

要求:同步gmall数据库中base_province表数据到HDFS的/base_province目录

分析:需选用MySQLReader和HDFSWriter,MySQLReader具有两种模式分别是TableMode和QuerySQLMode,前者使用table,column,where等属性声明需要同步的数据;后者使用一条SQL查询语句声明需要同步的数据。

【五】Linux系统安装和使用datax

【1】Linux系统准备

安装虚拟机和centos的过程不谈

先安装linux的常用工具

yum install iproute ftp bind-utils net-tools wget -y

iproute 用来执行 ip address 查看本机地址

ftp 用来测试ftp 服务器

bind_utils 用来运行 nslookup

net-tools 用来执行 netstate

wget 既是执行 wget的

这些工具都是比较常见的,尤其在检验某个服务是否正常工作的时候,会起到很好的作用

【2】安装jdk、python和mysql

(1)安装jdk

yum -y install java-1.8.0-openjdk.x86_64

查看jdk版本,命令:java -version

JDK(1.6以上,1.8即可)

(2)安装python

centos是自带python2的,所以不需要单独安装

查看python的版本,命令(大写V):python -V

Python(一般2.7都可以)一定要为python2,因为后面执行datax.py的时候,里面的python的print会执行不了,导致运行不成功,会提示你print语法要加括号,python2中加不加都行 python3中必须要加,否则报语法错

(3)安装mysql

yum上mysql的资源有问题,所以不能仅仅之用yum。在使用yum之前还需要用其他命令获取mysql社区版

cd /tmp

wget http://repo.mysql.com/mysql-community-release-el7-5.noarch.rpm

rpm -ivh mysql-community-release-el7-5.noarch.rpm

通过yum进行安装

yum install mysql mysql-server mysql-devel -y

执行如下命令启动mysql服务器

systemctl start mysql.service

安装后会自动启动,启动后会占用3306端口。 使用如下命令查看3306端口是否启动,如果启动了则表示mysql处于运行状态。

netstat -anp|grep 3306

(4)本地连接mysql

关闭所有防火墙

service firewalld status

service firewalld stop

(5)mysql准备数据

新建两个mysql库:datax1、datax2,分别使用运行以下建表sql:

CREATE TABLE `datax_test` (

`id` int(10) NOT NULL COMMENT '主键ID',

`name` varchar(255) DEFAULT NULL COMMENT '名称',

PRIMARY KEY (`id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

运行成功后在datax2中新增几条测试数据,datax1中不新增。

INSERT INTO datax2.datax_test (id,name) VALUES

(1,'张三'),

(2,'李四'),

(3,'王五');

【3】安装datax

(1)下载datax

下载地址:https://github.com/alibaba/DataX?tab=readme-ov-file

下载后使用文件传输工具传输到linux上的opt目录下

解压命令

cd /opt

tar -zxvf datax.tar.gz -C /opt/

(2)DataX自检

解压成功后,进入bin目录,开始自检,命令:

cd datax/bin

python datax.py /opt/datax/job/job.json

查看日志,完成自检!

【4】datax简单使用案例

(1)建立新的job作业json文件,如下

job_mysql2mysql.json:

{

"job": {

"setting": {

"speed": {

"channel": 1

},

"errorLimit": {

"record": 0,

"percentage": 0.02

}

},

"content": [

{

"reader": {

"name": "mysqlreader",

"parameter": {

"column": [

"`id`",

"`name`"

],

"connection": [

{

"jdbcUrl": ["jdbc:mysql://localhost:3306/datax2"],

"table": ["datax_test"]

}

],

"username": "root",

"password": "******"

}

},

"writer": {

"name": "mysqlwriter",

"parameter": {

"writeMode": "insert",

"username": "root",

"password": "******",

"column": [

"`id`",

"`name`"

],

"session": [

"set session sql_mode='ANSI'"

],

"preSql": [

"delete from datax_test"

],

"connection": [

{

"jdbcUrl": "jdbc:mysql://localhost:3306/datax1",

"table": ["datax_test"]

}

]

}

}

}

]

}

}

“writeMode”: “insert”:表示数据是新增,可改为update,但是表中要有唯一主键

preSql:预先执行的sql,在其他操作之前执行

以上的json是新增时使用,有个preSql(预执行sql),看情况使用

(2)将job_mysql2mysql.json文件放入/opt/datax/目录下

执行以下命令运行,即可将读库中的表数据写入写库的表中:

python datax.py /opt/datax/job/job_mysql2mysql.json

(3)注意点

大家可以看到JSON文件中的column中写了详细的参数,而不用*号,以及字段用`符号包起来。

先说第一点,详细的字段方便在以后的定时增量同步起到很大的避免错误的作用,如果读库和写库的表结构一样,在读库(reader)中的表增加了一个字段,而写库(writer)并没有这个字段,执行脚本时会报错,所有不用*号,并且新增和修改字段都要双方同步。

第二点,为什么用`符号将字段包起来。因为日常开发中一个数据库是很多开发人员维护的,难免会出现MySQL关键字为字段名的情况,DataX执行时发现某个字段是MySQL关键字则会直接报错,所以每个字段最好包起来。

【六】Springboot整合datax案例

【1】下载

下载地址:http://datax-opensource.oss-cn-hangzhou.aliyuncs.com/datax.tar.gz

或者git获取:git clone git@github.com:alibaba/DataX.git

将核心包安装到本地的maven仓库

mvn install:install-file -DgroupId=com.datax -DartifactId=datax-core -Dversion=1.0.0 -Dpackaging=jar -Dfile=datax-core-0.0.1-SNAPSHOT.jar

mvn install:install-file -DgroupId=com.datax -DartifactId=datax-common -Dversion=1.0.0 -Dpackaging=jar -Dfile=datax-common-0.0.1-SNAPSHOT.jar

【2】引入依赖

在springboot项目里引入datax的依赖

<!--datax-->

<dependency>

<groupId>com.datax</groupId>

<artifactId>datax-core</artifactId>

<version>1.0.0</version>

</dependency>

<dependency>

<groupId>com.datax</groupId>

<artifactId>datax-common</artifactId>

<version>1.0.0</version>

</dependency>

【3】准备环境

(1)python环境

mac电脑自带python3环境

验证python3版本

python3 --version

测试执行datax.py脚本,验证python环境和datax环境是否可用

(2)mysql密码

datax不允许数据库的密码password为空,所以要设置密码

【4】编写工具类

封装数据源的参数类

package com.allen.study.test_common.utils.datax;

import lombok.Data;

/**

* @ClassName: DataSourceConfig

* @Author: AllenSun

* @Date: 2025/3/5 00:50

*/

@Data

public class DataSourceConfig {

// 用户名

private String user;

// 密码

private String password;

// JDBC连接地址

private String jdbcUrl;

// 表名

private String table;

}

方法工具类

package com.allen.study.test_common.utils.datax;

import cn.hutool.core.collection.ListUtil;

import cn.hutool.core.date.StopWatch;

import cn.hutool.core.util.ObjectUtil;

import com.alibaba.fastjson.JSONArray;

import com.alibaba.fastjson.JSONObject;

import com.allen.study.test_common.exception.CustomRuntimeException;

import lombok.extern.slf4j.Slf4j;

import org.apache.commons.io.FileUtils;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.cloud.context.config.annotation.RefreshScope;

import org.springframework.stereotype.Component;

import java.io.File;

import java.io.IOException;

import java.sql.*;

import java.util.ArrayList;

import java.util.List;

import java.util.concurrent.TimeUnit;

import java.util.stream.Collectors;

/**

* @ClassName: DataXUtils

* @Author: AllenSun

* @Date: 2025/3/4 23:49

*/

@Slf4j

@Component

@RefreshScope

public class DataXUtils {

@Value("${datax.home}")

private String dataxHomePath ;

@Value("${datax.jsonFilePath}")

private String jsonFilePath ;

// TODO 支持动态传参mysql模板执行

// TODO 多写几个模板案例

// TODO 加入线程池

public void syncDataxMysql2MysqlByCommand (DataSourceConfig source,

DataSourceConfig target) {

String jobCommand = syncMysqlToMysqlJobCommand(source, target);

try {

// 执行数据同步任务

String result = this.executeDataXCommand(jobCommand);

log.info("数据同步结果:" + result);

} catch (Exception e) {

e.printStackTrace();

log.info("数据同步失败:" + e.getMessage());

throw new CustomRuntimeException("数据同步失败:" + e.getMessage());

}

}

public void syncDataxMysql2MysqlByJsonFile (DataSourceConfig source,

DataSourceConfig target,String jobFileName) {

// String jobFileName = "testCreateMysqlJob.json";

StopWatch stopWatch = new StopWatch("datax同步数据耗时");

stopWatch.start("开始");

try {

// 生成 Job 文件

JSONObject job = generateMysqlToMysqlJobJson(source, target);

this.generateJobFile(job, jobFileName);

// 执行数据同步任务

this.executeDataXJob(jobFileName);

} catch (IOException e) {

e.printStackTrace();

log.info("数据同步失败:" + e.getMessage());

throw new CustomRuntimeException("数据同步失败:" + e.getMessage());

}

stopWatch.stop();

log.info(stopWatch.prettyPrint(TimeUnit.SECONDS));

}

/**

* 生成 MySQL 到 MySQL 的 DataX Job JSON 配置

* @return Job 的 JSON 配置

*/

public JSONObject generateMysqlToMysqlJobJson(DataSourceConfig source,

DataSourceConfig target) {

String sourceUrl=source.getJdbcUrl();

String sourceUsername=source.getUser();

String sourcePassword=source.getPassword();

String sourceTable=source.getTable();

String targetUrl=target.getJdbcUrl();

String targetUsername=target.getUser();

String targetPassword=target.getPassword();

String targetTable=target.getTable();

List<String> preSql = ListUtil.of("TRUNCATE TABLE "+targetTable);

// 查询表字段

List<String> sourceColumns = getTableColumns(sourceUrl, sourceUsername, sourcePassword, sourceTable);

List<String> targetColumns = getTableColumns(targetUrl, targetUsername, targetPassword, targetTable);

List<String> combineColumns = sourceColumns.stream().filter(it->targetColumns.contains(it)).collect(Collectors.toList());

if(ObjectUtil.isEmpty(combineColumns)){

throw new CustomRuntimeException("源表和目标表不存在匹配字段");

}

JSONObject job = new JSONObject();

JSONObject setting = new JSONObject();

JSONObject speed = new JSONObject();

speed.put("channel", "3");

setting.put("speed", speed);

job.put("setting", setting);

JSONObject content = new JSONObject();

JSONObject reader = new JSONObject();

reader.put("name", "mysqlreader");

JSONObject readerParameter = new JSONObject();

readerParameter.put("username", sourceUsername);

readerParameter.put("password", sourcePassword);

JSONArray connection = new JSONArray();

JSONObject conn = new JSONObject();

JSONArray jdbcUrl = new JSONArray();

jdbcUrl.add(sourceUrl);

conn.put("jdbcUrl", jdbcUrl);

conn.put("table", new String[]{sourceTable});

connection.add(conn);

readerParameter.put("connection", connection);

readerParameter.put("column", combineColumns);

reader.put("parameter", readerParameter);

JSONObject writer = new JSONObject();

writer.put("name", "mysqlwriter");

JSONObject writerParameter = new JSONObject();

writerParameter.put("username", targetUsername);

writerParameter.put("password", targetPassword);

writerParameter.put("connection", new JSONArray() {{

add(new JSONObject() {{

put("jdbcUrl", targetUrl);

put("table", new String[]{targetTable});

}});

}});

writerParameter.put("column", combineColumns);

writerParameter.put("preSql", preSql);// 前置执行sql,先清空表再执行同步数据逻辑

writer.put("parameter", writerParameter);

content.put("reader", reader);

content.put("writer", writer);

JSONArray contentArray = new JSONArray();

contentArray.add(content);

job.put("content", contentArray);

JSONObject allJobContent = new JSONObject();

allJobContent.put("job",job);

return allJobContent;

}

/**

* 生成 MySQL 到 MySQL 的 DataX Job JSON 配置

* @return Job 的 JSON 配置

*/

public String syncMysqlToMysqlJobCommand(DataSourceConfig source,

DataSourceConfig target) {

String sourceUrl=source.getJdbcUrl();

String sourceUsername=source.getUser();

String sourcePassword=source.getPassword();

String sourceTable=source.getTable();

String targetUrl=target.getJdbcUrl();

String targetUsername=target.getUser();

String targetPassword=target.getPassword();

String targetTable=target.getTable();

// 查询表字段

List<String> sourceColumns = getTableColumns(sourceUrl, sourceUsername, sourcePassword, sourceTable);

List<String> targetColumns = getTableColumns(targetUrl, targetUsername, targetPassword, targetTable);

List<String> combineColumns = sourceColumns.stream().filter(it->targetColumns.contains(it)).collect(Collectors.toList());

if(ObjectUtil.isEmpty(combineColumns)){

log.error("源表和目标表不存在匹配字段");

throw new CustomRuntimeException("源表和目标表不存在匹配字段");

}

StringBuilder columnStr = new StringBuilder();

for (int i = 0; i < combineColumns.size(); i++) {

if (i > 0) {

columnStr.append(",");

}

columnStr.append("\"").append(combineColumns.get(i)).append("\"");

}

String readerJson = "{\"name\":\"mysqlreader\",\"parameter\":{\"username\":\"" + sourceUsername + "\",\"password\":\"" + sourcePassword + "\",\"connection\":[{\"jdbcUrl\":[\"" + sourceUrl + "\"],\"table\":[\"" + sourceTable + "\"]}],\"column\":[" + columnStr + "]}}";

String writerJson = "{\"name\":\"mysqlwriter\",\"parameter\":{\"username\":\"" + targetUsername + "\",\"password\":\"" + targetPassword + "\",\"connection\":[{\"jdbcUrl\":[\"" + targetUrl + "\"],\"table\":[\"" + targetTable + "\"]}],\"column\":[" + columnStr + "]}}";

String contentJson = "{\"reader\":" + readerJson + ",\"writer\":" + writerJson + "}";

String jobJson = "{\"job\":{\"setting\":{\"speed\":{\"channel\":\"3\"}},\"content\":[" + contentJson + "]}}";

// TODO mac电脑自带python3环境

// String finalCommand = "python3 " + dataxHomePath + "/bin/datax.py '" + jsonFilePath + jobJson + "'";

String finalCommand =

"python3 " + dataxHomePath + "/bin/datax.py -r streamreader -w streamwriter '" + jobJson + "'";

log.info("DataX完整命令:{}",finalCommand);

return finalCommand;

}

/**

* 查询 MySQL 表的字段

* @param url 数据库连接 URL

* @param username 数据库用户名

* @param password 数据库密码

* @param tableName 表名

* @return 字段列表

* @throws SQLException SQL 异常

*/

public List<String> getTableColumns(String url, String username, String password, String tableName) {

List<String> columns = new ArrayList<>();

try (Connection connection = DriverManager.getConnection(url, username, password);

Statement statement = connection.createStatement();

ResultSet resultSet = statement.executeQuery("SHOW COLUMNS FROM " + tableName)) {

while (resultSet.next()) {

columns.add(resultSet.getString("Field"));

}

} catch (Exception e) {

log.error("查询表字段失败:{}",e.getMessage());

throw new CustomRuntimeException("查询表字段失败");

}

return columns;

}

/**

* 生成 DataX Job 文件

* @param jobJson Job 的 JSON 配置

* @param filePath Job 文件保存路径

* @throws IOException 文件操作异常

*/

public void generateJobFile(JSONObject jobJson, String filePath) throws IOException {

String fullFilePath = jsonFilePath+filePath;

File jobFile = new File(fullFilePath);

FileUtils.writeStringToFile(jobFile, jobJson.toJSONString(), "UTF-8");

}

/**

* 执行 DataX 数据同步任务

* @param jobFileName Job 文件的名称

* @return 执行结果

* @throws IOException 执行命令异常

* @throws InterruptedException 线程中断异常

*/

public String executeDataXJob(String jobFileName) {

String command =

"python3 " + dataxHomePath + "/bin/datax.py " + jsonFilePath + jobFileName;

log.info("执行Datax命令:{}",command);

StringBuilder result = new StringBuilder();

try {

Process process = Runtime.getRuntime().exec(command);

java.io.InputStream is = process.getInputStream();

java.io.BufferedReader reader = new java.io.BufferedReader(new java.io.InputStreamReader(is));

String line;

while ((line = reader.readLine()) != null) {

result.append(line).append("\n");

}

process.waitFor();

} catch (Exception e) {

log.info("datax命令执行失败:{}",e.getMessage());

throw new CustomRuntimeException("datax命令执行失败:"+e.getMessage());

}

log.info("datax命令执行结果:{}",result);

return result.toString();

}

/**

* 执行 DataX 数据同步任务

* @param command DataX 执行命令

* @return 执行结果

* @throws Exception 执行命令异常

*/

public String executeDataXCommand(String command) throws Exception {

Process process = Runtime.getRuntime().exec(command);

java.io.InputStream is = process.getInputStream();

java.io.BufferedReader reader = new java.io.BufferedReader(new java.io.InputStreamReader(is));

StringBuilder result = new StringBuilder();

String line;

while ((line = reader.readLine()) != null) {

result.append(line).append("\n");

}

process.waitFor(10, TimeUnit.MINUTES);

return result.toString();

}

}

【5】controller实现调用案例

@Autowired

private DataXUtils dataXUtils;

@GetMapping("/syncDataxByJobJson")

@Operation(summary = "测试datax同步用户信息-固定模板", description = "测试datax同步用户信息-固定模板")

public ApiResponse<String> syncDataxByJobJson() {

// 生成 mysql表同步的命令并执行

dataXUtils.executeDataXJob("test.json");

return ApiResponse.ok();

}

@GetMapping("/syncDataxByCommand")

@Operation(summary = "测试datax同步用户信息-动态传参给固定模板", description = "测试datax同步用户信息-动态传参给固定模板")

public ApiResponse<String> syncDataxByCommand() {

DataSourceConfig souce = new DataSourceConfig();

souce.setJdbcUrl("jdbc:mysql://localhost:3306/allen_camunda?useSSL=false&useUnicode=true&characterEncoding=utf-8&serverTimezone=GMT%2B8&nullCatalogMeansCurrent=true&allowPublicKeyRetrieval=true");

souce.setUser("root");

souce.setPassword("P@ssw0rd");

souce.setTable("employee_info");

DataSourceConfig target = new DataSourceConfig();

target.setJdbcUrl("jdbc:mysql://localhost:3306/allen_camunda?useSSL=false&useUnicode=true&characterEncoding=utf-8&serverTimezone=GMT%2B8&nullCatalogMeansCurrent=true&allowPublicKeyRetrieval=true");

target.setUser("root");

target.setPassword("P@ssw0rd");

target.setTable("employee_info_copy1");

// 生成 mysql表同步的命令并执行

dataXUtils.syncDataxMysql2MysqlByCommand(souce,target);

return ApiResponse.ok();

}

@GetMapping("/generateAndSyncDataxByJobJson")

@Operation(summary = "测试datax同步用户信息-生成JSON文件并执行", description = "测试datax同步用户信息-生成JSON文件并执行")

public ApiResponse<String> generateAndSyncDataxByJobJson() {

DataSourceConfig souce = new DataSourceConfig();

souce.setJdbcUrl("jdbc:mysql://localhost:3306/allen_camunda?useSSL=false&useUnicode=true&characterEncoding=utf-8&serverTimezone=GMT%2B8&nullCatalogMeansCurrent=true&allowPublicKeyRetrieval=true");

souce.setUser("root");

souce.setPassword("P@ssw0rd");

souce.setTable("employee_info");

DataSourceConfig target = new DataSourceConfig();

target.setJdbcUrl("jdbc:mysql://localhost:3306/allen_camunda?useSSL=false&useUnicode=true&characterEncoding=utf-8&serverTimezone=GMT%2B8&nullCatalogMeansCurrent=true&allowPublicKeyRetrieval=true");

target.setUser("root");

target.setPassword("P@ssw0rd");

target.setTable("employee_info_copy1");

String jobFileName = "testCreateMysqlJob.json";

// 生成 mysql表同步的命令并执行

threadPoolConfig.customThreadPool().execute(()->{

dataXUtils.syncDataxMysql2MysqlByJsonFile(souce,target,jobFileName);

});

return ApiResponse.ok();

}

【6】datax命令分析

(1)JSON配置文件(job_mysql2mysql.json)

{"job": {

"setting": {"speed": {"channel": 3}},

"content": [{

"reader": {

"name": "mysqlreader",

"parameter": {

"username": "root",

"password": "password",

"column": ["*"],

"splitPk": "id",

"connection": [{

"table": ["user"],

"jdbcUrl": ["jdbc:mysql://source_host:3306/source_db?useSSL=false"]

}]

}

},

"writer": {

"name": "mysqlwriter",

"parameter": {

"username": "root",

"password": "password",

"writeMode": "insert",

"column": ["*"],

"connection": [{

"table": "user",

"jdbcUrl": "jdbc:mysql://target_host:3306/target_db?useSSL=false"

}]

}

}

}]

}}

(1)splitPk:指定分片字段(如主键id),用于多线程并行读取

(2)channel:并发线程数(默认3,根据服务器性能调整)

(3)writeMode:写入模式(insert/replace/update)

(4)jdbcUrl:需替换为实际数据库的IP、端口、库名

(5)column: [“*”] 表示同步所有字段,也可指定具体字段如 [“id”, “name”]

执行同步的命令

python /path/to/datax/bin/datax.py /path/to/job_mysql2mysql.json

(1)/path/to/datax/bin/datax.py:DataX主程序路径(需替换为实际安装路径)

(2)/path/to/job_mysql2mysql.json:配置文件路径

(2)动态参数传递

【7】datax命令执行结果

输出日志:任务启动后会打印进度、同步行数、耗时及错误信息

【8】注意事项

(1)权限检查:确保MySQL账户对源表有SELECT权限,目标表有INSERT权限

(2)字段映射:源表和目标表字段需名称、类型、顺序一致

(3)性能优化:

增大 channel 参数提升并发(需配合 splitPk 使用)

目标库开启批量提交(如MySQL的 rewriteBatchedStatements=true)

(4)错误处理:监控日志中的 errorLimit 参数,设置错误记录阈值

【9】功能优化

【七】Springboot整合DataX的Module

【1】封装datax的jar包

(1)下载安装包

(1)下载DataX安装包并上传到hadoop102的/opt/software

下载地址:http://datax-opensource.oss-cn-hangzhou.aliyuncs.com/datax.tar.gz

(2)解压datax.tar.gz到/opt/module tar -zxvf datax.tar.gz -C /opt/module

(3)自检,执行如下命令python /opt/module/datax/bin/datax.py /opt/module/datax/job/job.json

出现如下内容,则表明安装成功

……

2021-10-12 21:51:12.335 [job-0] INFO JobContainer -

任务启动时刻 : 2021-10-12 21:51:02

任务结束时刻 : 2021-10-12 21:51:12

任务总计耗时 : 10s

任务平均流量 : 253.91KB/s

记录写入速度 : 10000rec/s

读出记录总数 : 100000

读写失败总数 : 0

(2)补充自定义的reader和writer

(3)datax项目打成jar包供引用

datax部署单独的一个项目,供其他服务直接调用

(4)配置yml配置

配置datax的信息,包括host、登录信息等等

## datax相关配置

datax:

server:

host: 172.17.0.46

port: 22

user_name: root

passwd: aaaaaa

## 同步指标目录

sync_index_dir: /tmp/damp_index

## 同步表目录

sync_table_dir: /tmp/damp_table

## datax home目录

datax_home: /home/datax/datax

## 删除临时文件,项目上线之初建议配置为false,方便问题排查,后期稳定可配置为true

delete_tmp_file: true

## 表分割符

table:

separator: ^

batch_size: 20000

## datax中votldb客户端

voltdb:

path: /home/voltdb/voltdb-ent-11.3.1

添加datax配置信息的类

@Data

@Configuration

@ToString

public class DataxServerConfig {

@Value("${datax.server.host}")

private String host;

@Value("${datax.server.port}")

private Integer port;

@Value("${datax.server.user_name}")

private String userName;

@Value("${datax.server.passwd}")

private String passWd;

@Value("${datax.server.sync_index_dir}")

private String syncIndexDir;

@Value("${datax.server.sync_table_dir}")

private String syncTableDir;

@Value("${datax.server.datax_home}")

private String dataxHome;

@Value("${datax.server.delete_tmp_file}")

private Boolean deleteTmpFile;

@Value("${datax.table.separator}")

private String separator;

@Value("${datax.table.batch_size}")

private String batchSize;

}

(5)在具体使用的服务里配置datax的实体类

(1)Parameter类型

@Data

public class Csv2dbParameter {

private String dataxHome;

private String cmdJsonFile = "csv2db.json";

private String filePath;

private String fileName;

private String fieldDelimiter = "^";

private int record = 50000;

private String writer;

private String username;

private String password;

private int batchSize = 50000;

private String preSql;

private String jdbcUrl;

private String tableName;

public String generateCmd(){

StringBuilder shellBuilder = baseCmd();

shellBuilder.append("-p \"");

shellBuilder.append("-Drecord=");

shellBuilder.append(record + " ");

shellBuilder.append("-DfullFilePath=");

String fullPath = (filePath + "/" + fileName).replace("\\","/");

shellBuilder.append(fullPath + " ");

shellBuilder.append("-DfieldDelimiter=");

shellBuilder.append(fieldDelimiter + " ");

shellBuilder.append("-DbatchSize=");

shellBuilder.append(batchSize + " ");

shellBuilder.append("-Dwriter=");

shellBuilder.append(writer + " ");

shellBuilder.append("-Dusername=");

shellBuilder.append(username + " ");

shellBuilder.append("-Dpassword=");

shellBuilder.append(password + " ");

shellBuilder.append("-DjdbcUrl=");

shellBuilder.append(jdbcUrl + " ");

shellBuilder.append("-DpreSql=");

shellBuilder.append("\\\"" + preSql+ "\\\"" + " ");

shellBuilder.append("-DtableName=");

shellBuilder.append(tableName + " ");

shellBuilder.append("\"");

return shellBuilder.toString();

}

private StringBuilder baseCmd(){

StringBuilder stringBuilder = new StringBuilder("python " + dataxHome + "/bin/datax.py ");

stringBuilder.append(dataxHome);

stringBuilder.append("/script/");

stringBuilder.append(cmdJsonFile + " ");

return stringBuilder;

}

public static void main(String[] args) {

Csv2dbParameter csv2dbParameter = new Csv2dbParameter();

csv2dbParameter.setDataxHome("/home/datax/datax");

csv2dbParameter.setCmdJsonFile("csv2db.json");

csv2dbParameter.setFilePath("/tmp/damp_table");

csv2dbParameter.setFileName("damp_asset_model_x_attr.csv");

csv2dbParameter.setWriter("oraclewriter");

csv2dbParameter.setUsername("dap_damp_inst");

csv2dbParameter.setPassword("dap_damp_inst");

csv2dbParameter.setJdbcUrl("jdbc:oracle:thin:@172.17.0.144:1521:orcl171");

csv2dbParameter.setPreSql("delete from index_origin");

csv2dbParameter.setTableName("damp_asset_model_x_attr");

String cmd = csv2dbParameter.generateCmd();

System.out.println(cmd);

}

}

@Data

public class Db2csvParameter {

private String dataxHome;

@Setter(AccessLevel.PRIVATE)

private String cmdJsonFile = "db2csv.json";

private int record = 50000;

private String reader;

private String username;

private String password;

private String querySql;

private String jdbcUrl;

private String fileName;

private String filePath;

private String fieldDelimiter = "^";

public String generateCmd() {

StringBuilder shellBuilder = baseCmd();

shellBuilder.append("-p \"");

shellBuilder.append("-Drecord=");

shellBuilder.append(record + " ");

shellBuilder.append("-Dreader=");

shellBuilder.append(reader + " ");

shellBuilder.append("-Dusername=");

shellBuilder.append(username + " ");

shellBuilder.append("-Dpassword=");

shellBuilder.append(password + " ");

shellBuilder.append("-DquerySql=");

shellBuilder.append("\\\"" + querySql + "\\\"" + " ");

shellBuilder.append("-DjdbcUrl=");

shellBuilder.append(jdbcUrl + " ");

shellBuilder.append("-DfileName=");

shellBuilder.append(fileName + " ");

shellBuilder.append("-DfilePath=");

shellBuilder.append(filePath + " ");

shellBuilder.append("-DfieldDelimiter=");

shellBuilder.append(fieldDelimiter + " ");

shellBuilder.append("\"");

return shellBuilder.toString();

}

private StringBuilder baseCmd() {

StringBuilder stringBuilder = new StringBuilder("python " + dataxHome + "/bin/datax.py ");

stringBuilder.append(dataxHome);

stringBuilder.append("/script/");

stringBuilder.append(cmdJsonFile + " ");

return stringBuilder;

}

public static void main(String[] args) {

Db2csvParameter db2csvParameter = new Db2csvParameter();

db2csvParameter.setDataxHome("/home/datax/datax");

db2csvParameter.setCmdJsonFile("db2csv.json");

db2csvParameter.setReader("mysqlreader");

db2csvParameter.setUsername("root");

db2csvParameter.setPassword("Yatop@2022");

db2csvParameter.setQuerySql("select '123' as yt_id from test");

db2csvParameter.setJdbcUrl("jdbc:mysql://172.17.0.117:9010/damp_ind_product");

db2csvParameter.setFileName("voltdb-test.csv");

db2csvParameter.setFilePath("/tmp/damp_table");

String cmd = db2csvParameter.generateCmd();

System.out.println(cmd);

}

}

@Data

public class Db2dbParameter {

private String dataxHome;

private String cmdJsonFile = "db2db.json";

private int record = 50000;

private String reader;

private String readerUsername;

private String readerPassword;

private String querySql;

private String readerJdbcUrl;

private String writer;

private String writerLoadUrl;

private String writerJdbcUrl;

private String writerSelectedDatabase;

private String writerUsername;

private String writerPassword;

private String writerTable;

private String preSql;

private String xms = "512m";

private String xmx = "512m";

private String logFileName;

public String generateCmd(){

DataxServerConfig dataxServerConfig = SpringUtil.getBean(DataxServerConfig.class);

StringBuilder shellBuilder = baseCmd();

shellBuilder.append("-p \"");

shellBuilder.append("-Drecord=");

shellBuilder.append(record + " ");

shellBuilder.append("-Dreader=");

shellBuilder.append(reader+ " ");

shellBuilder.append("-DreaderJdbcUrl=");

shellBuilder.append("\\\"" +readerJdbcUrl+ "\\\""+ " ");

shellBuilder.append("-DreaderUsername=");

shellBuilder.append(readerUsername+ " ");

shellBuilder.append("-DreaderPassword=");

shellBuilder.append(readerPassword+ " ");

shellBuilder.append("-DquerySql=");

shellBuilder.append("\\\"" + querySql+ "\\\"" + " ");

shellBuilder.append("-Dwriter=");

shellBuilder.append(writer+ " ");

shellBuilder.append("-DwriterLoadUrl=");

shellBuilder.append("\\\"" +writerLoadUrl+ "\\\""+ " ");

shellBuilder.append("-DwriterJdbcUrl=");

shellBuilder.append("\\\"" +writerJdbcUrl+ "\\\""+ " ");

shellBuilder.append("-DwriterUsername=");

shellBuilder.append(writerUsername+ " ");

shellBuilder.append("-DwriterPassword=");

shellBuilder.append(writerPassword+ " ");

shellBuilder.append("-DbatchSize=");

shellBuilder.append(dataxServerConfig.getBatchSize() + " ");

shellBuilder.append("-DwriterTable=");

shellBuilder.append(writerTable+ " ");

shellBuilder.append("-DwriterSelectedDatabase=");

shellBuilder.append(writerSelectedDatabase+ " ");

shellBuilder.append("-DwriterTable=");

shellBuilder.append(writerTable+ " ");

shellBuilder.append("-DpreSql=");

shellBuilder.append("\\\"" + preSql + "\\\""+ " ");

shellBuilder.append("\"");

return shellBuilder.toString();

}

private StringBuilder baseCmd(){

StringBuilder stringBuilder = new StringBuilder("python " + dataxHome + "/bin/datax.py ");

stringBuilder.append(dataxHome);

stringBuilder.append("/script/");

stringBuilder.append(cmdJsonFile + " ");

return stringBuilder;

}

private void runCmd(StringBuilder shellBuilder){

shellBuilder.append("com.alibaba.datax.core.Engine \\\n");

shellBuilder.append("-job " + this.dataxHome + "/script/db2db.json \\\n");

shellBuilder.append("-mode standalone \\\n");

shellBuilder.append("-jobid " + " -1");

}

public static void main(String[] args) {

Db2dbParameter db2dbParameter = new Db2dbParameter();

db2dbParameter.setDataxHome("/home/datax/datax");

db2dbParameter.setCmdJsonFile("db2csv.json");

db2dbParameter.setReader(DbTypeEnum.DORIS.getReader());

db2dbParameter.setReaderJdbcUrl("jdbc:hive2://66.5.150.1:30100/default;principal=hive/tos_m6xxw7y@M6XXW7Y.TDH");

db2dbParameter.setReaderUsername("appinst");

db2dbParameter.setReaderPassword("appinst");

db2dbParameter.setQuerySql("select rawtohex(sys_guid()) as yt_id from dap_damp_inst.damp_asset_model_x_attr");

db2dbParameter.setWriter(DbTypeEnum.DORIS.getWriter());

db2dbParameter.setWriterUsername("jdbc:oracle:thin:@172.17.0.144:1521:orcl171");

String s = db2dbParameter.generateCmd();

System.out.println(s);

}

}

(2)Param类型

@Data

public class DataxCsvParam {

/**

* CSV文件名称

*/

private String fileName;

/**

* 需要保存文件目录

*/

private String dir;

/**

* CSV分隔符

*/

private String delimiter = "^";

}

@Data

public class DataxReaderParam {

private String reader;

private String readerUsername;

private String readerPassword;

private String querySql;

private String readerJdbcUrl;

}

@Data

public class DataxTransferParam {

private String dataxHome;

private String cmdJsonFile = "db2db.json";

private int record = 50000;

/**

* 读取源

*/

private DataxReaderParam readerParam;

/**

* 写入源

*/

private DataxWriterParam writerParam;

private String xms = "512m";

private String xmx = "512m";

/**

* java -server \

* -Xms1g \

* -Xmx1g \

* -XX:+HeapDumpOnOutOfMemoryError \

* -XX:HeapDumpPath=/home/datax/datax/log \

* -Dloglevel=info \

* -Dfile.encoding=UTF-8 \

* -Dlogback.statusListenerClass=ch.qos.logback.core.status.NopStatusListener \

* -Djava.security.egd=file:///dev/urandom \

* -Ddatax.home=/home/datax/datax \

* -Dlogback.configurationFile=/home/datax/datax/conf/logback.xml \

* -Dlog.file.name=x_script_db2csv_json \

* -Dreader=mysqlreader \

* -DquerySql="select 'test' from ppp" \

* -classpath /home/datax/datax/lib/*:. \

* com.alibaba.datax.core.Engine \

* -job /home/datax/datax/script/db2csv.json \

* -mode standalone \

* -jobid -1

*

* @return

*/

public String generateCmd() {

DataxServerConfig dataxServerConfig = SpringUtil.getBean(DataxServerConfig.class);

StringBuilder shellBuilder = baseCmd();

shellBuilder.append("-p \"");

shellBuilder.append("-Drecord=");

shellBuilder.append(record + " ");

shellBuilder.append("-Dreader=");

shellBuilder.append(readerParam.getReader() + " ");

shellBuilder.append("-DreaderJdbcUrl=");

//shellBuilder.append(readerParam.getReaderJdbcUrl() + " ");

shellBuilder.append("\\\"" +readerParam.getReaderJdbcUrl()+ "\\\""+ " ");

shellBuilder.append("-DreaderUsername=");

shellBuilder.append(readerParam.getReaderUsername() + " ");

shellBuilder.append("-DreaderPassword=");

shellBuilder.append(readerParam.getReaderPassword() + " ");

shellBuilder.append("-DquerySql=");

shellBuilder.append("\\\"" + readerParam.getQuerySql() + "\\\"" + " ");

shellBuilder.append("-Dwriter=");

shellBuilder.append(writerParam.getWriter() + " ");

if (StrUtil.isNotBlank(writerParam.getWriterLoadUrl())) {

shellBuilder.append("-DwriterLoadUrl=");

shellBuilder.append("\\\"" + writerParam.getWriterLoadUrl() + "\\\"" + " ");

} else {

shellBuilder.append("-DwriterLoadUrl=");

shellBuilder.append("\\\"" + writerParam.getWriterJdbcUrl() + "\\\"" + " ");

}

shellBuilder.append("-DwriterJdbcUrl=");

shellBuilder.append("\\\"" + writerParam.getWriterJdbcUrl() + "\\\"" + " ");

shellBuilder.append("-DwriterUsername=");

shellBuilder.append(writerParam.getWriterUsername() + " ");

shellBuilder.append("-DwriterPassword=");

shellBuilder.append(writerParam.getWriterPassword() + " ");

shellBuilder.append("-DbatchSize=");

shellBuilder.append(dataxServerConfig.getBatchSize() + " ");

shellBuilder.append("-DwriterTable=");

shellBuilder.append(writerParam.getWriterTable() + " ");

shellBuilder.append("-DwriterSelectedDatabase=");

shellBuilder.append(writerParam.getWriterSelectedDatabase() + " ");

shellBuilder.append("-DwriterTable=");

shellBuilder.append(writerParam.getWriterTable() + " ");

shellBuilder.append("-DpreSql=");

shellBuilder.append("\\\"" + writerParam.getPreSql() + "\\\"" + " ");

if(StringUtils.isNotBlank(writerParam.getLogFileName())){

shellBuilder.append("-Dlog.file.name=");

shellBuilder.append(writerParam.getLogFileName() + " ");

}

shellBuilder.append("\"");

return shellBuilder.toString();

}

private StringBuilder baseCmd() {

StringBuilder stringBuilder = new StringBuilder("python " + dataxHome + "/bin/datax.py ");

stringBuilder.append(dataxHome);

stringBuilder.append("/script/");

stringBuilder.append(cmdJsonFile + " ");

return stringBuilder;

}

private void runCmd(StringBuilder shellBuilder) {

shellBuilder.append("com.alibaba.datax.core.Engine \\\n");

shellBuilder.append("-job " + this.dataxHome + "/script/db2db.json \\\n");

shellBuilder.append("-mode standalone \\\n");

shellBuilder.append("-jobid " + " -1");

}

}

@Data

public class DataxWriterParam {

private String writer;

private String writerLoadUrl;

private String writerJdbcUrl;

private String writerSelectedDatabase;

private String writerUsername;

private String writerPassword;

private String writerTable;

private String preSql;

private String logFileName;

}

(3)Context类型参数

private String writerSelectedDatabase;

private String writerUsername;

private String writerPassword;

private String writerTable;

private String preSql;

private String logFileName;

}

@Data

@Slf4j

public class DataxReaderContext {

private String querySql;

private DataSourceResponse dataSourceResponse;

private DbTypeEnum dbType;

}

@Data

@Slf4j

public class DataxWriterContext extends DataxBaseWriterContext {

public DataxWriterContext() {

}

public DataxWriterContext(DataxBaseWriterContext baseWriterContext) {

this.setDataSrcId(baseWriterContext.getDataSrcId());

this.setRequireUpper(baseWriterContext.getRequireUpper());

this.setSchemaNa(baseWriterContext.getSchemaNa());

this.setTableNm(baseWriterContext.getTableNm());

this.setPreSql(baseWriterContext.getPreSql());

this.setInstanceCode(baseWriterContext.getInstanceCode());

}

private DataSourceResponse dataSourceResponse;

private DbTypeEnum dbType;

}

(6)同步流程

(1)指标固化时同步数据案例

// 来源数据源参数

TransferReqContext srcContext = new TransferReqContext();

srcContext.setEngineAssist(srcEngineAssist);

srcContext.setDataSrcId(CalcEngineUtil.getNonCalcDataSrcId());

srcContext.setSchemaNm(CalcEngineUtil.getCalcSchemaNm());

srcContext.setTableNm(indIndex.getCalcRsTableNm());

srcContext.addEqCondition(IndexConstant.INDEX_ID_COLUMN, indIndex.getIndexCode());

srcContext.addEqCondition(IndexConstant.INDEX_VERSION_COLUMN, taskInstance.getIndVersion());

srcContext.addEqCondition(IndexConstant.STANDARD_BUSINESS_DATE_COLUMN, taskInstance.getStandardBusinessDate());

// 目标数据源参数

TransferReqContext targetContext = new TransferReqContext();

targetContext.setSchemaNm(indIndex.getPhysicalSchemaNm());

targetContext.setTableNm(indIndex.getPhysicalTableName());

targetContext.addEqCondition(IndexConstant.INDEX_ID_COLUMN, indIndex.getIndexCode());

targetContext.addEqCondition(IndexConstant.INDEX_VERSION_COLUMN, taskInstance.getIndVersion());

targetContext.addEqCondition(IndexConstant.STANDARD_BUSINESS_DATE_COLUMN, taskInstance.getStandardBusinessDate());

targetContext.addPartitionCondition(IndexConstant.INDEX_ID_COLUMN, indIndex.getIndexCode());

targetContext.addPartitionCondition(IndexConstant.INDEX_VERSION_COLUMN, taskInstance.getIndVersion());

targetContext.addPartitionCondition(IndexConstant.STANDARD_BUSINESS_DATE_COLUMN, taskInstance.getStandardBusinessDate());

targetContext.setInstanceCode(storageContext.getTaskInstance().getInstanceCode());

DbTypeEnum dbType = storageContext.getDbTypeEnum();

AbstractEngineAssist targetEngineAssist = EngineUtil.getEngineAssistByDbType(dbType);

targetContext.setEngineAssist(targetEngineAssist);

targetContext.setDataSrcId(dataSrcId);

TransferResult transferResult = DataTransferUtil.dataSync(srcContext, targetContext, CalcEngineUtil.listEngineDataSrcIds().contains(dataSrcId));

(2)参数对象

@Data

@Slf4j

public class TransferReqContext {

private AbstractEngineAssist engineAssist;

private Long dataSrcId;

/**

* 优先使用SQL,且只有当做转移的源头的时候才被使用

*/

private String querySql;

/**

* 实例code

*/

private String instanceCode;

private final DataSqlReqContext dataSqlReqContext = new DataSqlReqContext();

public String getSchemaNm() {

return dataSqlReqContext.getSchemaNm();

}

public void setSchemaNm(String schemaNm) {

dataSqlReqContext.setSchemaNm(schemaNm);

}

public String getTableNm() {

return dataSqlReqContext.getTableNm();

}

public void setTableNm(String tableNm) {

dataSqlReqContext.setTableNm(tableNm);

}

public void addEqCondition(String colNm, Object val) {

dataSqlReqContext.addEqCondition(colNm, val);

}

public void addPartitionCondition(String colNm, Object val) {

dataSqlReqContext.addPartColCondition(colNm, val);

}

public void addExtTarget2SrcColNm(Map<String, String> extendTargetMappingSrcColNms) {

dataSqlReqContext.addExtTarget2SrcColNm(extendTargetMappingSrcColNms);

}

public void addExtTarget2SrcColNm(String targetColNm, String srcColNm) {

dataSqlReqContext.addExtTarget2SrcColNm(targetColNm, srcColNm);

}

/**

* 如果设置 querySql, 则优先使用querySql

*

* @param queryColNms

* @return

*/

public String getQueryDataSql(List<String> queryColNms) {

return dataSqlReqContext.getQueryDataSql(queryColNms);

}

public String getInsertSelectDataSql(String targetSchemaNm, String targetTableNm, List<String> queryColNms) {

return dataSqlReqContext.getInsertDataSql(targetSchemaNm, targetTableNm, queryColNms);

}

public DataSqlReqContext getNewDataSqlReqContext() {

return ObjectUtil.clone(this.dataSqlReqContext);

}

public void setDataSrcId(Long dataSrcId) {

this.dataSrcId = dataSrcId;

this.dataSqlReqContext.setDataSrcId(dataSrcId);

}

}

@Data

public class DataSqlReqContext implements Serializable {

private static final long serialVersionUID = 1247119788382272621L;

private String schemaNm;

private String tableNm;

private Long dataSrcId;

/**

* 等于部分的条件

*/

@Getter(AccessLevel.PRIVATE)

private final Map<String, List<Object>> eqConditions = new HashMap<>();

/**

* 不等于的条件部分

*/

@Getter(AccessLevel.PRIVATE)

private final Map<String, List<Object>> neConditions = new HashMap<>();

/**

* 分区列,特殊场景使用,适用数据库区分分区字段适用

*/

@Setter(AccessLevel.PRIVATE)

private final Map<String, List<Object>> partColConditions = new HashMap<>();

/**

* 扩展映射字段

* KEY 目标表字段名

* VALUE 源表字段名称

*/

@Getter(AccessLevel.PRIVATE)

private final Map<String, String> extendTarget2SrcColNms = new HashMap<>();

@Getter(AccessLevel.PRIVATE)

private final List<String> extSrcColNms = new ArrayList<>();

@Getter(AccessLevel.PRIVATE)

private final List<String> extTargetColNms = new ArrayList<>();

@Getter(AccessLevel.PRIVATE)

private final List<Class> clazz = Arrays.asList(

String.class, Character.class,

Integer.class, Short.class, Long.class,

Float.class, Double.class,

BigDecimal.class

);

public void addPartColCondition(String colNm, Object val) {

Assert.isTrue(clazz.contains(val.getClass()), "不支持的数据类型:{}", val.getClass());

List<Object> vals = partColConditions.getOrDefault(colNm, new ArrayList<>());

if (CollectionUtil.isNotEmpty(vals)) {

Assert.isTrue(vals.get(0).getClass() == val.getClass(), "colNm:{},数据类型不匹配,{},", colNm, vals.get(0).getClass());

}

vals.add(val);

partColConditions.put(colNm, vals);

}

/**

* 设置等于条件项

*

* @param colNm

* @param val

*/

public void addEqCondition(String colNm, Object val) {

Assert.isTrue(clazz.contains(val.getClass()), "不支持的数据类型:{}", val.getClass());

List<Object> vals = eqConditions.getOrDefault(colNm, new ArrayList<>());

if (CollectionUtil.isNotEmpty(vals)) {

Assert.isTrue(vals.get(0).getClass() == val.getClass(), "colNm:{},数据类型不匹配,{},", colNm, vals.get(0).getClass());

}

vals.add(val);

eqConditions.put(colNm, vals);

}

/**

* 设置不等于条件项

*

* @param colNm

* @param val

*/

public void addNeCondition(String colNm, Object val) {

Assert.isTrue(clazz.contains(val.getClass()), "不支持的数据类型:{}", val.getClass());

List<Object> vals = neConditions.getOrDefault(colNm, new ArrayList<>());

if (CollectionUtil.isNotEmpty(vals)) {

Assert.isTrue(vals.get(0).getClass() == val.getClass(), "colNm:{},数据类型不匹配,{},", colNm, vals.get(0).getClass());

}

vals.add(val);

neConditions.put(colNm, vals);

}

public void addExtTarget2SrcColNm(Map<String, String> extTarget2SrcColNms) {

if (CollectionUtil.isNotEmpty(extTarget2SrcColNms)) {

extendTarget2SrcColNms.putAll(extTarget2SrcColNms);

for (Map.Entry<String, String> entry : extTarget2SrcColNms.entrySet()) {

Assert.isFalse(extTargetColNms.stream().anyMatch(s -> s.equalsIgnoreCase(entry.getKey())), "字段:{} 已存在", entry.getKey());

extTargetColNms.add(entry.getValue());

extSrcColNms.add(entry.getValue());

}

}

}

public void addExtTarget2SrcColNm(String targetColNm, String srcColNm) {

Assert.isFalse(extTargetColNms.stream().anyMatch(s -> s.equalsIgnoreCase(targetColNm)), "字段:{} 已存在", targetColNm);

extendTarget2SrcColNms.put(targetColNm, srcColNm);

extTargetColNms.add(targetColNm);

extSrcColNms.add(srcColNm);

}

/**

* 获取查询数据sql

*

* @param queryColNms 查询col-nms

* @return {@link String}

*/

public String getQueryDataSql(List<String> queryColNms) {

String queryPartSql = null;

if (CollectionUtil.isEmpty(this.extSrcColNms)) {

queryPartSql = StrUtil.format("SELECT {} FROM {}.{}", String.join(",", queryColNms), this.getSchemaNm(), this.getTableNm());

} else {

List<String> tempColNms = new ArrayList<>(queryColNms);

tempColNms.addAll(queryColNms);

tempColNms.addAll(this.extSrcColNms);

queryPartSql = StrUtil.format("SELECT {} FROM {}.{}", String.join(",", tempColNms), this.getSchemaNm(), this.getTableNm());

}

List<String> whereConditionStrs = getWherePartSql();

String result = null;

if (CollectionUtil.isEmpty(eqConditions)) {

result = queryPartSql;

} else {

result = queryPartSql + " WHERE " + String.join(" AND ", whereConditionStrs);

}

return result;

}

/**

* 获取Sql where部分条件语句

*

* @return {@link List}<{@link String}>

*/

public List<String> getWherePartSql() {

List<String> whereConditionStrs = new ArrayList<>();

if (CollectionUtil.isNotEmpty(eqConditions)) {

for (Map.Entry<String, List<Object>> entry : eqConditions.entrySet()) {

if (entry.getValue().size() == 0) {

continue;

}

Object firstVal = entry.getValue().get(0);

if (entry.getValue().size() == 1) {

if (firstVal.getClass() == String.class || firstVal.getClass() == Character.class) {

whereConditionStrs.add(StrUtil.format("{} = '{}'", entry.getKey(), firstVal));

} else {

whereConditionStrs.add(StrUtil.format("{} = {}", entry.getKey(), firstVal));

}

} else {

if (firstVal.getClass() == String.class || firstVal.getClass() == Character.class) {

String colValStr = entry.getValue().stream().map(v -> {

return StrUtil.format("'{}'", v);

}).collect(Collectors.joining(","));

whereConditionStrs.add(StrUtil.format("{} IN ({})", entry.getKey(), colValStr));

} else {

String colValStr = entry.getValue().stream().map(v -> {

return StrUtil.format("{}", v);

}).collect(Collectors.joining(","));

whereConditionStrs.add(StrUtil.format("{} IN ({})", entry.getKey(), colValStr));

}

}

}

}

if (CollectionUtil.isNotEmpty(neConditions)) {

for (Map.Entry<String, List<Object>> entry : neConditions.entrySet()) {

if (entry.getValue().size() == 0) {

continue;

}

Object firstVal = entry.getValue().get(0);

if (entry.getValue().size() == 1) {

if (firstVal.getClass() == String.class || firstVal.getClass() == Character.class) {

whereConditionStrs.add(StrUtil.format("{} != '{}'", entry.getKey(), firstVal));

} else {

whereConditionStrs.add(StrUtil.format("{} != {}", entry.getKey(), firstVal));

}

} else {

if (firstVal.getClass() == String.class || firstVal.getClass() == Character.class) {

String colValStr = entry.getValue().stream().map(v -> {

return StrUtil.format("'{}'", v);

}).collect(Collectors.joining(","));

whereConditionStrs.add(StrUtil.format("{} NOT IN ({})", entry.getKey(), colValStr));

} else {

String colValStr = entry.getValue().stream().map(v -> {

return StrUtil.format("{}", v);

}).collect(Collectors.joining(","));

whereConditionStrs.add(StrUtil.format("{} NOT IN ({})", entry.getKey(), colValStr));

}

}

}

}

return whereConditionStrs;

}

public String getInsertDataSql(String targetSchemaNm, String targetTableNm, List<String> queryColNms) {

List<String> insertColNms = new ArrayList<>();

insertColNms.addAll(queryColNms);

if (CollectionUtil.isNotEmpty(this.extTargetColNms)) {

insertColNms.addAll(this.extTargetColNms);

}

String result = StrUtil.format("INSERT INTO {}.{} ({}) {}", targetSchemaNm, targetTableNm, String.join(",", insertColNms), getQueryDataSql(queryColNms));

return result;

}

public String getDelDataSql() {

List<String> whereConditionStrs = getWherePartSql();

String result = null;

if (CollectionUtil.isEmpty(whereConditionStrs)) {

result = StrUtil.format("TRUNCATE TABLE {}.{}", this.getSchemaNm(), this.getTableNm());

} else {

result = StrUtil.format("DELETE FROM {}.{}", this.getSchemaNm(), this.getTableNm()) + " WHERE " + String.join(" AND ", whereConditionStrs);

}

return result;

}

@Override

public String toString() {

return StrUtil.format("{}.{}", schemaNm, tableNm);

}

public String getFullTableNm() {

return StrUtil.format("{}.{}", schemaNm, tableNm);

}

}

@Data

public class TransferResult {

/**

* 源数量

*/

private Integer srcNum;

/**

* 目标数量

*/

private Integer targetNum;

/**

* 错误信息

*/

private String errorMsg;

}

(3)传输工具类DataTransferUtil

@Slf4j

public class DataTransferUtil {

public static TransferResult dataSync(TransferReqContext srcContext, TransferReqContext targetContext, boolean isSameDataSrc) {

TransferResult result = new TransferResult();

if (isSameDataSrc) {

result = DataTransferUtil.sameDataSrcDataSync(srcContext, targetContext);

} else {

if ((srcContext.getEngineAssist() instanceof DataxReaderDataSrc) && (targetContext.getEngineAssist() instanceof DataXWriterDataSrc)) {

result = DataTransferUtil.diffDataSrcDataSyncByDataX(srcContext, targetContext);

} else {

result = new TransferResult();

result.setErrorMsg("双方不支持DataX互传数据");

}

}

return result;

}

/**

* 同一个数据源数据同步

*

* @param srcContext

* @param targetContext

*/

public static TransferResult sameDataSrcDataSync(TransferReqContext srcContext, TransferReqContext targetContext) {

TransferResult result = new TransferResult();

log.info("开始执行同源数据转移操作");

// 自定义指标 计算结果在数仓的窄表中、

AbstractEngineAssist engineAssist = srcContext.getEngineAssist();

// 指标计算中间结果表

List<EngineColumn> srcCols = engineAssist.listColumnsByTable(srcContext.getDataSrcId(), srcContext.getSchemaNm(), srcContext.getTableNm(), true);

List<String> srcColNms = srcCols.stream().map(EngineColumn::getColName).map(String::toUpperCase).collect(Collectors.toList());

// 指标计算存储结果表

List<EngineColumn> destCols = engineAssist.listColumnsByTable(srcContext.getDataSrcId(), targetContext.getSchemaNm(), targetContext.getTableNm(), true);

List<String> destColNms = destCols.stream().map(EngineColumn::getColName).map(String::toUpperCase).collect(Collectors.toList());

// 相同的列名称

ArrayList<String> sameColNms = new ArrayList<>(CollectionUtil.intersection(destColNms, srcColNms));

String storageSql = srcContext.getInsertSelectDataSql(targetContext.getSchemaNm(), targetContext.getTableNm(), sameColNms);

String delHistorySql = targetContext.getEngineAssist().getDelTableDataSql(targetContext.getDataSqlReqContext());

log.info("同源数据转移Sql:{}", storageSql);

log.info("开始执行同源数据转移操作=====");

log.info("同源数据转移,清理历史数据SQL:{}", delHistorySql);

engineAssist.executeSql(srcContext.getDataSrcId(), delHistorySql);

log.info("同源数据转移,清理历史数据结果完毕=====");

engineAssist.executeSql(srcContext.getDataSrcId(), storageSql);

log.info("同源数据转移,数据转移完毕=====");

return result;

}

/**

* 通过Datax的形式将不同数据源的数据进行同步

*

* @param srcContext

* @param targetContext

*/

public static TransferResult diffDataSrcDataSyncByDataX(TransferReqContext srcContext,

TransferReqContext targetContext) {

TransferResult result = new TransferResult();

log.info("通过Datax,开始执行不同源间转移操作");

List<EngineColumn> srcCols = srcContext.getEngineAssist().listColumnsByTable(

srcContext.getDataSrcId(), srcContext.getSchemaNm(), srcContext.getTableNm(), true);

List<EngineColumn> destCols = targetContext.getEngineAssist().listColumnsByTable(

targetContext.getDataSrcId(), targetContext.getSchemaNm(), targetContext.getTableNm(), true);

List<String> insertSelectColNms = listInsertSelectColNms(srcCols, destCols);

Assert.notEmpty(insertSelectColNms, "异源数据转移,两张表不存在相同列名的字段,无法执行转移操作");

DataxReaderContext readerContext = new DataxReaderContext();

Tuple3<String, IDatabaseTemplate, DataSourceResponse> tuple3 = DatabaseUtil.queryIDatabaseTemplate3(srcContext.getDataSrcId());

readerContext.setDataSourceResponse(tuple3.getV3());

if (StrUtil.isNotBlank(srcContext.getQuerySql())) {

readerContext.setQuerySql(srcContext.getQuerySql());

} else {

readerContext.setQuerySql(srcContext.getQueryDataSql(insertSelectColNms));

}

DataxReaderParam dataxReaderParam = ((DataxReaderDataSrc) srcContext.getEngineAssist()).getDataxReaderParam(readerContext);

String delTableDataSql = targetContext.getEngineAssist().getDelTableDataSql(targetContext.getDataSqlReqContext());

DataxBaseWriterContext baseWriterContext = new DataxBaseWriterContext();

baseWriterContext.setDataSrcId(targetContext.getDataSrcId());

baseWriterContext.setSchemaNa(targetContext.getSchemaNm());

baseWriterContext.setTableNm(targetContext.getTableNm());

baseWriterContext.setPreSql(delTableDataSql);

baseWriterContext.setInstanceCode(targetContext.getInstanceCode());

DataxWriterParam writerParam = ((DataXWriterDataSrc) targetContext.getEngineAssist()).getDataxWriterParam(baseWriterContext);

DataxTransferParam transferParam = new DataxTransferParam();

transferParam.setReaderParam(dataxReaderParam);

transferParam.setWriterParam(writerParam);

log.info("开始进行DataX数据同步");

Tuple3<Integer, Integer, String> execDb2db = DataXServerSshUtil.execDb2db(transferParam);

log.info("DataX数据同步完毕,执行结果:{},{}", execDb2db.getV1(), execDb2db.getV2());

result.setSrcNum(execDb2db.getV1());

result.setTargetNum(execDb2db.getV2());

result.setErrorMsg(execDb2db.getV3());

return result;

}

/**

* @param srcCols src列

* @param destCols Dest Cols

* @return {@link List}<{@link String}>

*/

private static List<String> listInsertSelectColNms(List<EngineColumn> srcCols, List<EngineColumn> destCols) {

List<String> srcColNms = srcCols.stream().map(EngineColumn::getColName).map(String::toUpperCase).collect(Collectors.toList());

List<String> destColNms = destCols.stream().map(EngineColumn::getColName).map(String::toUpperCase).collect(Collectors.toList());

List<String> result = new ArrayList<>();

// 处理 结果表存在7个字段,但是查询SQL只有5个字段的情况

destColNms.forEach(colNm -> {

if (srcColNms.contains(colNm)) {

result.add(colNm);

}

});

return result;

}

}

(4)ssh执行工具DataXServerSshUtil

@Slf4j

@Component

public class DataXServerSshUtil implements InitializingBean {

private static DataxServerConfig config;

private final static Pattern totalPattern = Pattern.compile("读出记录总数.*.*");

private final static Pattern errorPattern = Pattern.compile("读写失败总数.*.*");

@Override

public void afterPropertiesSet() throws Exception {

init();

}

private void init() {

config = SpringUtil.getBean(DataxServerConfig.class);

Assert.notEmpty(config.getHost(), "Datax 服务器列表为空");

log.info("获取Datax的SSH配置完成:{}", config.getHost());

}

public static SshExecResult execCommand(String command) {

Session session = openSession();

SshExecResult result;

try {

FastByteArrayOutputStream outputStream = new FastByteArrayOutputStream();

String ipResult = JschUtil.exec(session, "hostname -I | awk '{print $1}'", StandardCharsets.UTF_8, new FastByteArrayOutputStream());

log.info(StrUtil.format("datax服务ip:{}",ipResult));

String execResult = JschUtil.exec(session, command, StandardCharsets.UTF_8, outputStream);

String errorMsg = outputStream.toString();

result = new SshExecResult();

result.setNomarlMsg(execResult);

result.setErrorMsg(errorMsg);

} finally {

if (session != null) {

session.disconnect();

}

}

return result;

}

public static SshExecResult execCommand(Session session, String command) {

FastByteArrayOutputStream outputStream = new FastByteArrayOutputStream();

String execResult = JschUtil.exec(session, command, StandardCharsets.UTF_8, outputStream);

String errorMsg = outputStream.toString();

SshExecResult result = new SshExecResult();

result.setNomarlMsg(execResult);

result.setErrorMsg(errorMsg);

return result;

}

public static SshExecResult execCommands(Session session, List<String> commands) {

String command = String.join(" && ", commands);

return execCommand(session, command);

}

/**

* 上传文件

*

* @param targetDir

* @param localFile

* @return 远程文件地址

*/

public static File uploadFile(String targetDir, File localFile) {

String remoteFileName = "";

Session session = openSession();

try {

Sftp sftp = JschUtil.createSftp(session);

sftp.syncUpload(localFile, targetDir);

remoteFileName = targetDir + "/" + localFile.getName();

sftp.close();

} finally {

if (session != null) {

session.disconnect();

}

}

return new File(remoteFileName);

}

public static void download(String remoteFile, File localFile) {

Session session = openSession();

try {

Sftp sftp = JschUtil.createSftp(session);

sftp.download(remoteFile, localFile);

sftp.close();

} finally {

if (session != null) {

session.disconnect();

}

}

}

public static void delDir(String path) {

Session session = openSession();

try {

Sftp sftp = JschUtil.createSftp(session);

sftp.delDir(path);

sftp.close();

} finally {

if (session != null) {

session.disconnect();

}

}

}

public static void delFile(String filePath) {

Session session = openSession();

try {

Sftp sftp = JschUtil.createSftp(session);

sftp.delFile(filePath);

sftp.close();

} finally {

if (session != null) {

session.disconnect();

}

}

}

/**

* 获取同步父目录

*

* @param date 日期

* @return {@link String}

*/

public static String getSyncParentDir(Date date, String dirName) {

return StrUtil.format("{}/{}/{}/{}", config.getSyncIndexDir(), IndexSystemConstant.DAMP_INDEX_PARENT_DIR_NAME,

DateUtil.format(date, DatePattern.PURE_DATE_FORMAT), dirName);

}

/**

* 获取指标计算父目录

*

* @param dateStr 日期str

* @param dirName

* @return {@link String}

*/

public static String getIndexSyncParentDir(String dateStr, String dirName) {

return StrUtil.format("{}/{}/{}/{}", config.getSyncIndexDir(), IndexSystemConstant.DAMP_INDEX_PARENT_DIR_NAME,

StrUtil.replace(dateStr, "-", ""), dirName);

}

/**

* 获取表同步父目录

*

* @param dateStr 日期str

* @return {@link String}

*/

public static String getTableSyncParentDir(String dateStr) {

return StrUtil.format("{}/{}", config.getSyncTableDir(), StrUtil.replace(dateStr, "-", ""));

}

private static Session openSession() {

Session session = JschUtil.openSession(config.getHost(), config.getPort(), config.getUserName(), config.getPassWd(), 60 * 60 * 1000);

return session;

}

private static Pattern LOAD_CSV_LOAD_PATTERN = Pattern.compile("\\d+");

/**

* 数据源 -> csv

*

* @param db2csvParameter 同步参数配置

* @return {@link Tuple2}<{@link Integer}, {@link Integer}>

* 第一个: 读取的数据总量

* 第二个: 入库成功的数量

* 第三个: 失败原因

*/

public static Tuple3<Integer, Integer, String> execDb2csv(Db2csvParameter db2csvParameter) {

/*

db2csvParameter.setFilePath(config.getSyncTableDir());

*/

db2csvParameter.setDataxHome(config.getDataxHome());

String cmd = db2csvParameter.generateCmd();

log.info("同步命令: {}", cmd);

SshExecResult execResult = execCommand(cmd);

if (!execResult.execDataxSuccess()) {

log.error("datax同步异常: 标准日志输出:{},错误日志输出:{}", execResult.getNomarlMsg(), execResult.getErrorMsg());

throw new YTRuntimeException("datax同步失败");

}

return analysisDataxExecResultStr(execResult);

}

/**

* csv -> db

*

* @param csv2dbParameter 同步参数配置

* @return {@link Tuple2}<{@link Integer}, {@link Integer}>

* 第一个: 读取的数据总量

* 第二个: 入库成功的数量

*/

public static Tuple3<Integer, Integer, String> execCsv2db(Csv2dbParameter csv2dbParameter) {

/*

csv2dbParameter.setFilePath(config.getVoltdbPath());

*/

csv2dbParameter.setDataxHome(config.getDataxHome());

String cmd = csv2dbParameter.generateCmd();

log.info("同步命令: {}", cmd);

SshExecResult execResult = execCommand(cmd);

if (!execResult.execDataxSuccess()) {

log.error("同步异常: 标准日志输出:{},错误日志输出:{}", execResult.getNomarlMsg(), execResult.getErrorMsg());

throw new YTRuntimeException("datax同步失败");

}

return analysisDataxExecResultStr(execResult);

}

/**

* db -> db

*

* @param db2dbParameter 同步参数配置

* @return {@link Tuple2}<{@link Integer}, {@link Integer}>

* 第一个: 读取的数据总量

* 第二个: 入库成功的数量

*/

public static Tuple3<Integer, Integer, String> execDb2db(Db2dbParameter db2dbParameter) {

/*

csv2dbParameter.setFilePath(config.getVoltdbPath());

*/

db2dbParameter.setDataxHome(config.getDataxHome());

String cmd = db2dbParameter.generateCmd();

log.info("同步命令: {}", cmd);

SshExecResult execResult = execCommand(cmd);

if (!execResult.execDataxSuccess()) {

log.error("同步异常: 标准日志输出:{},错误日志输出:{}", execResult.getNomarlMsg(), execResult.getErrorMsg());

throw new YTRuntimeException("datax同步失败");

}

return analysisDataxExecResultStr(execResult);

}

public static Tuple3<Integer, Integer, String> execDb2csv(DataxReaderParam readerParam, DataxCsvParam dataxCsvParam) {

Db2csvParameter db2csvParameter = new Db2csvParameter();

//db2csvParameter.setRecord();

db2csvParameter.setReader(readerParam.getReader());

db2csvParameter.setUsername(readerParam.getReaderUsername());

db2csvParameter.setPassword(readerParam.getReaderPassword());

db2csvParameter.setQuerySql(readerParam.getQuerySql());

db2csvParameter.setJdbcUrl(readerParam.getReaderJdbcUrl());

db2csvParameter.setFileName(dataxCsvParam.getFileName());

db2csvParameter.setFilePath(dataxCsvParam.getDir());

db2csvParameter.setFieldDelimiter(dataxCsvParam.getDelimiter());

db2csvParameter.setDataxHome(config.getDataxHome());

String cmd = db2csvParameter.generateCmd();

log.info("同步命令: {}", cmd);

SshExecResult execResult = execCommand(cmd);

if (!execResult.execDataxSuccess()) {

log.error("datax同步异常: 标准日志输出:{},错误日志输出:{}", execResult.getNomarlMsg(), execResult.getErrorMsg());

throw new YTRuntimeException("datax同步失败");

}

return analysisDataxExecResultStr(execResult);

}

public static Tuple3<Integer, Integer, String> execDb2db(DataxTransferParam transferParam) {

transferParam.setDataxHome(config.getDataxHome());

String cmd = transferParam.generateCmd();

log.info("DataX 查询SQL:{}",transferParam.getReaderParam().getQuerySql());

log.info("DataX 数据预清理SQL:{}", transferParam.getWriterParam().getPreSql());

log.info("DataX数据同步命令: {}", cmd);

SshExecResult execResult = execCommand(cmd);

if (!execResult.execDataxSuccess()) {

log.error("同步异常: 标准日志输出:{},错误日志输出:{}", execResult.getNomarlMsg(), execResult.getErrorMsg());

throw new YTRuntimeException("datax同步失败");

}

return analysisDataxExecResultStr(execResult);

}

public static Tuple3<Integer, Integer, String> execCmd(String cmd) {

Assert.isTrue(ObjectUtil.isNotEmpty(cmd), "同步数据命令为空");

log.info("DataX数据同步命令: {}", cmd);

SshExecResult execResult = execCommand(cmd);

if (!execResult.execDataxSuccess()) {

log.error("同步异常: 标准日志输出:{},错误日志输出:{}", execResult.getNomarlMsg(), execResult.getErrorMsg());

throw new YTRuntimeException("datax同步失败");

}

return analysisDataxExecResultStr(execResult);

}

private static Tuple3<Integer, Integer, String> analysisDataxExecResultStr(SshExecResult sshExecResult) {

int total = 0;

int insertNum = 0;

Matcher total1Mather = totalPattern.matcher(sshExecResult.getNomarlMsg());

Matcher errorMather = errorPattern.matcher(sshExecResult.getNomarlMsg());

if (total1Mather.find()) {

String group = total1Mather.group(0);

String[] split = group.split(":");

total = Integer.valueOf(StringUtils.trim(split[1]));

}

if (errorMather.find()) {

String group = errorMather.group(0);

String[] split = group.split(":");

insertNum = total - Integer.valueOf(StringUtils.trim(split[1]));

}

Tuple3<Integer, Integer, String> result = new Tuple3<>(total, insertNum, sshExecResult.getErrorMsg());

return result;

}

}

4万+

4万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?