Running `scrapy crawl zhihu_com` in the terminal fails with the following error:

    2018-08-15 16:55:23 [scrapy.utils.log] INFO: Scrapy 1.5.1 started (bot: zhihuCrawl)
    2018-08-15 16:55:23 [scrapy.utils.log] INFO: Versions: lxml 4.1.1.0, libxml2 2.9.7, cssselect 1.0.3, parsel 1.5.0, w3lib 1.19.0, Twisted 18.7.0, Python 3.6.4 |Anaconda custom (64-bit)| (default, Jan 16 2018, 18:10:19) - [GCC 7.2.0], pyOpenSSL 17.5.0 (OpenSSL 1.0.2o 27 Mar 2018), cryptography 2.1.4, Platform Linux-4.14.47-1-MANJARO-x86_64-with-arch-Manjaro-Linux
    Traceback (most recent call last):
      File "/home/as/anaconda3/lib/python3.6/site-packages/scrapy/spiderloader.py", line 69, in load
        return self._spiders[spider_name]
    KeyError: 'zhihu_com'

    During handling of the above exception, another exception occurred:

    Traceback (most recent call last):
      File "/home/as/anaconda3/bin/scrapy", line 11, in <module>
        sys.exit(execute())
      File "/home/as/anaconda3/lib/python3.6/site-packages/scrapy/cmdline.py", line 150, in execute
        _run_print_help(parser, _run_command, cmd, args, opts)
      File "/home/as/anaconda3/lib/python3.6/site-packages/scrapy/cmdline.py", line 90, in _run_print_help
        func(*a, **kw)
      File "/home/as/anaconda3/lib/python3.6/site-packages/scrapy/cmdline.py", line 157, in _run_command
        cmd.run(args, opts)
      File "/home/as/anaconda3/lib/python3.6/site-packages/scrapy/commands/crawl.py", line 57, in run
        self.crawler_process.crawl(spname, **opts.spargs)
      File "/home/as/anaconda3/lib/python3.6/site-packages/scrapy/crawler.py", line 170, in crawl
        crawler = self.create_crawler(crawler_or_spidercls)
      File "/home/as/anaconda3/lib/python3.6/site-packages/scrapy/crawler.py", line 198, in create_crawler
        return self._create_crawler(crawler_or_spidercls)
      File "/home/as/anaconda3/lib/python3.6/site-packages/scrapy/crawler.py", line 202, in _create_crawler
        spidercls = self.spider_loader.load(spidercls)
      File "/home/as/anaconda3/lib/python3.6/site-packages/scrapy/spiderloader.py", line 71, in load
        raise KeyError("Spider not found: {}".format(spider_name))
    KeyError: 'Spider not found: zhihu_com'

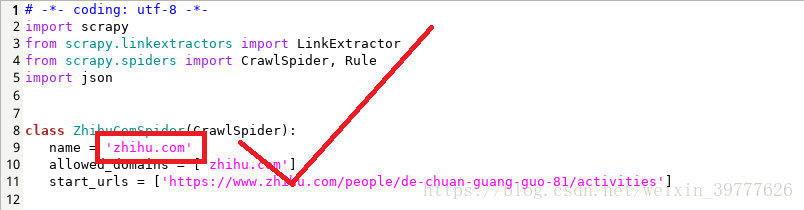
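Both `KeyError`s come from the spider loader: according to the traceback, `spiderloader.py` evaluates `self._spiders[spider_name]` and, when that lookup fails, re-raises `KeyError("Spider not found: {}".format(spider_name))`. The stdlib-only sketch below mimics that behaviour with a toy registry keyed by each spider class's `name` attribute. It is a simplified illustration, not Scrapy's actual source; `ToySpiderLoader` and `ZhihuSpider` are hypothetical names.

```python
# Simplified illustration of a name-keyed spider registry.
# NOT Scrapy's source code: it only mimics the lookup seen in the traceback.


class ToySpiderLoader:
    def __init__(self):
        # Maps the spider's `name` attribute to the spider class.
        self._spiders = {}

    def register(self, spidercls):
        # The key is the class attribute `name`, never the module/file name.
        self._spiders[spidercls.name] = spidercls

    def load(self, spider_name):
        try:
            return self._spiders[spider_name]
        except KeyError:
            # Raised while handling the first KeyError, so Python chains them
            # ("During handling of the above exception, another exception occurred").
            raise KeyError("Spider not found: {}".format(spider_name))

    def list(self):
        return sorted(self._spiders)


# Hypothetical spider class, imagined to live in a file called zhihu_com.py.
class ZhihuSpider:
    name = "zhihu.com"


loader = ToySpiderLoader()
loader.register(ZhihuSpider)

print(loader.list())  # ['zhihu.com']

try:
    loader.load("zhihu_com")  # the file name, not the spider name
except KeyError as exc:
    print(exc)  # 'Spider not found: zhihu_com'
```

Looking up `"zhihu.com"` succeeds, while `"zhihu_com"` fails because the file name was never used as a registry key.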

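Solution: run `scrapy crawl zhihu.com` instead.

Note: the name passed to `scrapy crawl` must be the value of the `name` attribute defined in the Spider class, not the name of the spider's file.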

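This post records a startup error encountered while using the Scrapy framework to crawl Zhihu, together with the full error log and the fix. It highlights the importance of specifying the correct spider name when launching a crawl.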
