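1. The distributed transaction problem:

When a monolithic application is split into microservices, its three original modules become three independent applications, each with its own data source, and a single business operation has to call all three services. Each service's local transaction still guarantees consistency of that service's own data, but nothing guarantees consistency across the whole operation.

In short: whenever one business operation spans multiple data sources, or needs remote calls across multiple systems, you have a distributed transaction problem.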
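To make the problem concrete, here is a minimal sketch in plain Java. The three Store objects are hypothetical stand-ins for the order, storage, and account databases; they are not part of Seata or of the project built later in this post. Each write commits immediately, the way a local transaction would, and nothing coordinates the three.

```java
import java.util.HashMap;
import java.util.Map;

/** Three independent "data sources", each committing on its own (illustrative only). */
final class LocalOnlyDemo {

    /** Stand-in for one service's private database: every write is committed at once. */
    static final class Store {
        final Map<String, Long> rows = new HashMap<>();

        void commit(String key, long value) {
            rows.put(key, value);
        }
    }

    static final Store orderDb = new Store();
    static final Store storageDb = new Store();
    static final Store accountDb = new Store();

    /** Places an order; the account step fails when the balance is too low. */
    static void placeOrder(long count, long price) {
        orderDb.commit("order-1.status", 0L);                 // local transaction 1 commits
        long stock = storageDb.rows.get("product-1.residue") - count;
        storageDb.commit("product-1.residue", stock);         // local transaction 2 commits
        long balance = accountDb.rows.get("user-1.residue") - count * price;
        if (balance < 0) {
            // Local transaction 3 is rejected, but 1 and 2 are already durable.
            throw new IllegalStateException("insufficient balance");
        }
        accountDb.commit("user-1.residue", balance);
        orderDb.commit("order-1.status", 1L);
    }

    public static void main(String[] args) {
        storageDb.commit("product-1.residue", 100L);
        accountDb.commit("user-1.residue", 1000L);
        try {
            placeOrder(10, 500);   // needs 5000, only 1000 is available
        } catch (IllegalStateException e) {
            System.out.println("order failed: " + e.getMessage());
        }
        System.out.println("storage residue = " + storageDb.rows.get("product-1.residue"));
        System.out.println("account residue = " + accountDb.rows.get("user-1.residue"));
        System.out.println("order status    = " + orderDb.rows.get("order-1.status"));
    }
}
```

After the failed call the stock has dropped to 90 and an order sits at status 0, yet the account balance is still 1000. Each store is consistent on its own terms, but the three together are not.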

2. Seata:

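Seata is an open-source distributed transaction solution that aims to provide high-performance, easy-to-use distributed transaction services for microservice architectures.

2.1 A typical distributed transaction flow:

2.1.1 The "one ID + three components" model of distributed transaction processing:

- One ID: the transaction ID (XID), a globally unique transaction identifier

- Three components: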

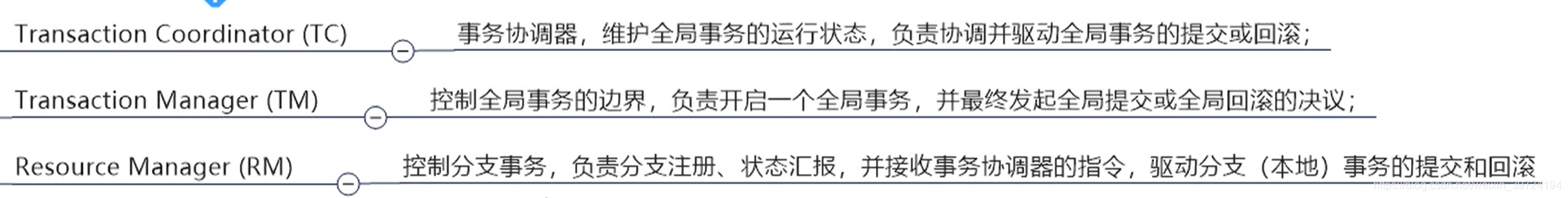

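- TC (Transaction Coordinator): maintains the state of global and branch transactions and drives the global commit or rollback.

- TM (Transaction Manager): defines the scope of a global transaction. It begins the global transaction, then commits it or rolls it back.

- RM (Resource Manager): manages the resources that branch transactions operate on, talks to the TC to register branch transactions and report their status, and drives branch commit or rollback.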
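The division of labour between the three roles can be sketched with a toy in-memory model. Everything here (Coordinator, Branch, RecordingBranch, runGlobal) is a hypothetical name invented for illustration; none of it is Seata's API, and real Seata does this over the network with persistent state.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.UUID;

/** Toy model of the XID + TC/TM/RM roles (illustrative, not Seata code). */
final class ThreeRolesDemo {

    /** RM side: one branch transaction that can be committed or rolled back. */
    interface Branch {
        void commit();
        void rollback();
    }

    /** TC: issues XIDs, tracks the branches of each global transaction, drives the outcome. */
    static final class Coordinator {
        private final Map<String, List<Branch>> branches = new HashMap<>();

        String begin() {
            String xid = UUID.randomUUID().toString();
            branches.put(xid, new ArrayList<>());
            return xid;
        }

        void registerBranch(String xid, Branch branch) {
            branches.get(xid).add(branch);
        }

        void commit(String xid) {
            for (Branch b : branches.remove(xid)) b.commit();
        }

        void rollback(String xid) {
            for (Branch b : branches.remove(xid)) b.rollback();
        }
    }

    /** RM stand-in that only records what the TC told it to do. */
    static final class RecordingBranch implements Branch {
        private final String name;
        private final List<String> log;

        RecordingBranch(String name, List<String> log) {
            this.name = name;
            this.log = log;
        }

        @Override public void commit()   { log.add(name + ":commit"); }
        @Override public void rollback() { log.add(name + ":rollback"); }
    }

    /** TM: opens the global transaction, runs the business steps, then commits or rolls back. */
    static List<String> runGlobal(Coordinator tc, boolean failAtAccount) {
        List<String> log = new ArrayList<>();
        String xid = tc.begin();
        try {
            tc.registerBranch(xid, new RecordingBranch("order", log));
            tc.registerBranch(xid, new RecordingBranch("storage", log));
            if (failAtAccount) {
                throw new IllegalStateException("account service failed");
            }
            tc.registerBranch(xid, new RecordingBranch("account", log));
            tc.commit(xid);
        } catch (RuntimeException e) {
            tc.rollback(xid);
        }
        return log;
    }

    public static void main(String[] args) {
        Coordinator tc = new Coordinator();
        System.out.println(runGlobal(tc, false));
        System.out.println(runGlobal(tc, true));
    }
}
```

In the success case every registered branch receives a commit. In the failure case the two branches that had already registered receive a rollback, which is exactly the guarantee that was missing when each service only had its local transaction.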

2.1.2 Processing flow:

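2.2 Installing Seata:

- Download the release from the official site and unpack it. I used version 1.2.

- Edit conf/file.conf, mainly the service and store sections.

- Create a database named seata in MySQL. The SQL is in seata/conf.

- Open the README in conf. It links to the table definitions on GitHub; create the tables from them.

- In registry.conf, set the registry type to nacos and set serverAddr.

- Start the server.

Note: if the server exits immediately on startup, see the separate write-up on fixing Seata crash-on-startup problems.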

3. Business simulation:

3.1 Preparing the order, storage, and account databases:

3.1.1 Business scenario:

3.1.2 Creating the databases:

3.1.3 Order table:

CREATE TABLE t_order(

`id` BIGINT(11) NOT NULL AUTO_INCREMENT PRIMARY KEY,

`user_id` BIGINT(11) DEFAULT NULL COMMENT 'user id',

`product_id` BIGINT(11) DEFAULT NULL COMMENT 'product id',

`count` BIGINT(11) DEFAULT NULL COMMENT 'quantity',

`money` BIGINT(11) DEFAULT NULL COMMENT 'amount',

`status` BIGINT(11) DEFAULT NULL COMMENT 'order status: 0 = creating, 1 = completed'

) ENGINE = INNODB AUTO_INCREMENT=7 DEFAULT CHARSET=utf8;

3.1.4 Storage table:

CREATE TABLE t_storage(

`id` BIGINT(11) NOT NULL AUTO_INCREMENT PRIMARY KEY,

`product_id` BIGINT(11) DEFAULT NULL COMMENT 'product id',

`total` BIGINT(11) DEFAULT NULL COMMENT 'total stock',

`used` BIGINT(11) DEFAULT NULL COMMENT 'used stock',

`residue` BIGINT(11) DEFAULT NULL COMMENT 'remaining stock'

) ENGINE = INNODB AUTO_INCREMENT=2 DEFAULT CHARSET=utf8;

INSERT INTO t_storage( `id`,`product_id`, `total`,`used`,`residue`) values(1,1,100,0,100);

SELECT * FROM t_storage;

3.1.5 Account table:

CREATE TABLE t_account(

`id` BIGINT(11) NOT NULL AUTO_INCREMENT PRIMARY KEY,

`user_id` BIGINT(11) DEFAULT NULL COMMENT 'user id',

`total` BIGINT(11) DEFAULT NULL COMMENT 'total credit',

`used` BIGINT(11) DEFAULT NULL COMMENT 'used credit',

`residue` BIGINT(11) DEFAULT NULL COMMENT 'remaining credit'

) ENGINE = INNODB AUTO_INCREMENT=2 DEFAULT CHARSET=utf8;

INSERT INTO t_account( `id`,`user_id`, `total`,`used`,`residue`) values(1,1,1000,0,1000);

SELECT * FROM t_account;

3.1.6 Undo log table (must be created in every business database):

DROP TABLE IF EXISTS `undo_log`;

CREATE TABLE `undo_log` (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`branch_id` bigint(20) NOT NULL,

`xid` varchar(100) NOT NULL,

`context` varchar(128) NOT NULL,

`rollback_info` longblob NOT NULL,

`log_status` int(11) NOT NULL,

`log_created` datetime NOT NULL,

`log_modified` datetime NOT NULL,

`ext` varchar(100) DEFAULT NULL,

PRIMARY KEY (`id`),

UNIQUE KEY `ux_undo_log` (`xid`,`branch_id`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

3.2 Module setup:

3.2.1 Order service

The business code needs little explanation, so let's focus on the configuration:

- pom

<!-- nacos -->

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-starter-alibaba-nacos-discovery</artifactId>

</dependency>

<!-- seata-->

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-starter-alibaba-seata</artifactId>

<exclusions>

<exclusion>

<groupId>io.seata</groupId>

<artifactId>seata-all</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>io.seata</groupId>

<artifactId>seata-all</artifactId>

<version>1.2.0</version>

</dependency>

- yml

server:

  port: 2001

spring:

  application:

    name: seata-order-service

  cloud:

    alibaba:

      seata:

        # The custom transaction group name must match the one configured on seata-server

        tx-service-group: my_test_tx_group # seata's file.conf has no service section, so the group name defaults to my_test_tx_group

        # service sits at the same level as tx-service-group; vgroupMapping and grouplist are one level below service, and my_test_tx_group is one level below that

        service:

          vgroupMapping:

            # must equal the value of tx-service-group

            my_test_tx_group: default

          grouplist:

            # Seata server address; several addresses can be listed here for a cluster

            default: 10.211.55.26:8091

    nacos:

      discovery:

        server-addr: 10.211.55.26:8848 #nacos

  datasource:

    # data source type

    type: com.alibaba.druid.pool.DruidDataSource

    # MySQL driver class

    driver-class-name: com.mysql.cj.jdbc.Driver

    url: jdbc:mysql://10.211.55.26:3305/seata_order?useUnicode=true&characterEncoding=UTF-8&useSSL=false&serverTimezone=GMT%2B8

    username: root

    password: 123456

feign:

  hystrix:

    enabled: false

logging:

  level:

    io:

      seata: info

mybatis:

  mapperLocations: classpath*:mapper/*.xml

- file.conf

transport {

# tcp udt unix-domain-socket

type = "TCP"

#NIO NATIVE

server = "NIO"

#enable heartbeat

heartbeat = true

# the client batch send request enable

enableClientBatchSendRequest = true

#thread factory for netty

threadFactory {

bossThreadPrefix = "NettyBoss"

workerThreadPrefix = "NettyServerNIOWorker"

serverExecutorThread-prefix = "NettyServerBizHandler"

shareBossWorker = false

clientSelectorThreadPrefix = "NettyClientSelector"

clientSelectorThreadSize = 1

clientWorkerThreadPrefix = "NettyClientWorkerThread"

# netty boss thread size,will not be used for UDT

bossThreadSize = 1

#auto default pin or 8

workerThreadSize = "default"

}

shutdown {

# when destroy server, wait seconds

wait = 3

}

serialization = "seata"

compressor = "none"

}

service {

vgroupMapping.my_test_tx_group = "default"

default.grouplist = "10.211.55.26:8091"

enableDegrade = false

disableGlobalTransaction = false

}

client {

rm {

asyncCommitBufferLimit = 10000

lock {

retryInterval = 10

retryTimes = 30

retryPolicyBranchRollbackOnConflict = true

}

reportRetryCount = 5

tableMetaCheckEnable = false

reportSuccessEnable = false

sagaBranchRegisterEnable = false

}

tm {

commitRetryCount = 5

rollbackRetryCount = 5

degradeCheck = false

degradeCheckPeriod = 2000

degradeCheckAllowTimes = 10

}

undo {

dataValidation = true

onlyCareUpdateColumns = true

logSerialization = "jackson"

logTable = "undo_log"

}

log {

exceptionRate = 100

}

}

- registry.conf

registry {

# file 、nacos 、eureka、redis、zk、consul、etcd3、sofa

type = "nacos"

nacos {

application = "seata-server"

serverAddr = "10.211.55.26:8848" #nacos

namespace = ""

username = ""

password = ""

}

eureka {

serviceUrl = "http://localhost:8761/eureka"

weight = "1"

}

redis {

serverAddr = "localhost:6379"

db = "0"

password = ""

timeout = "0"

}

zk {

serverAddr = "127.0.0.1:2181"

sessionTimeout = 6000

connectTimeout = 2000

username = ""

password = ""

}

consul {

serverAddr = "127.0.0.1:8500"

}

etcd3 {

serverAddr = "http://localhost:2379"

}

sofa {

serverAddr = "127.0.0.1:9603"

region = "DEFAULT_ZONE"

datacenter = "DefaultDataCenter"

group = "SEATA_GROUP"

addressWaitTime = "3000"

}

file {

name = "file.conf"

}

}

config {

# file、nacos 、apollo、zk、consul、etcd3、springCloudConfig

type = "file"

nacos {

serverAddr = "127.0.0.1:8848"

namespace = ""

group = "SEATA_GROUP"

username = ""

password = ""

}

consul {

serverAddr = "127.0.0.1:8500"

}

apollo {

appId = "seata-server"

apolloMeta = "http://192.168.1.204:8801"

namespace = "application"

}

zk {

serverAddr = "127.0.0.1:2181"

sessionTimeout = 6000

connectTimeout = 2000

username = ""

password = ""

}

etcd3 {

serverAddr = "http://localhost:2379"

}

file {

name = "file.conf"

}

}

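3.2.2 Storage service

3.2.3 Account service

3.3 Testing

Add a 20-second wait to the business flow so that the remote call times out, then check the result.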
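What this test exposes can be reproduced in miniature without any framework. The sketch below is plain Java with made-up names (decreaseAccount, createOrder) and millisecond delays standing in for the 20-second wait. It assumes the slow downstream call still finishes its own work after the caller has given up, which is the usual behaviour of a read timeout on a remote call.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.concurrent.atomic.AtomicLong;

/** A caller that times out while the callee still commits (illustrative only). */
final class TimeoutDemo {
    static final AtomicLong accountResidue = new AtomicLong(1000);
    static final AtomicLong orderStatus = new AtomicLong(0);

    /** Downstream "account service": slow, but it does commit its deduction. */
    static void decreaseAccount(long money, long delayMillis) throws InterruptedException {
        Thread.sleep(delayMillis);
        accountResidue.addAndGet(-money);
    }

    /** Upstream "order service": gives up after timeoutMillis, like a remote-call read timeout. */
    static boolean createOrder(ExecutorService remote, long money,
                               long delayMillis, long timeoutMillis) throws Exception {
        Future<Object> call = remote.submit(() -> {
            decreaseAccount(money, delayMillis);
            return null;
        });
        try {
            call.get(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (TimeoutException e) {
            return false;          // the caller sees a failure; the order stays at status 0
        }
        orderStatus.set(1);
        return true;
    }

    /** Runs one order with a 300 ms callee and a 50 ms caller timeout. */
    static boolean demo() {
        accountResidue.set(1000);
        orderStatus.set(0);
        ExecutorService remote = Executors.newSingleThreadExecutor();
        try {
            boolean ok = createOrder(remote, 100, 300, 50);
            remote.shutdown();
            remote.awaitTermination(5, TimeUnit.SECONDS);   // let the slow callee finish
            return ok;
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            remote.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println("order succeeded = " + demo());
        System.out.println("account residue = " + accountResidue.get());
        System.out.println("order status    = " + orderStatus.get());
    }
}
```

The order is reported as failed and stays at status 0, but the money has been deducted anyway. A global transaction is meant to undo that kind of leftover.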

Add @GlobalTransactional, run the test again, and check the result.

rollbackFor: specifies which exception types trigger a rollback.

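4. How Seata works:

4.1 TC, TM, RM

- TC: effectively the Seata server

- TM: the method annotated with @GlobalTransactional, that is, the initiator of the transaction

- RM: the participants in the transaction

Execution flow:

4.2 AT mode:

4.2.1 Overall mechanism:

- Phase one: the business data and the rollback log record are committed in the same local transaction, after which local locks and connection resources are released.

- Phase two:

  - Commit is asynchronous and completes very quickly

  - Rollback applies reverse compensation using the rollback log written in phase one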
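The two phases can be modelled in a few lines of plain Java. This is a deliberately reduced sketch: BranchDb and UndoLog are hypothetical names, the "table" is a single number, and global locks, the TC, and asynchronous execution are all left out.

```java
import java.util.HashMap;
import java.util.Map;

/** Reduced model of AT mode on one branch database (illustrative, not Seata code). */
final class AtModeDemo {

    /** One undo log entry: the value before and after the business update. */
    static final class UndoLog {
        final long before;
        final long after;

        UndoLog(long before, long after) {
            this.before = before;
            this.after = after;
        }
    }

    /** A single-value "table" plus its undo log, keyed by XID. */
    static final class BranchDb {
        long residue;
        final Map<String, UndoLog> undoLog = new HashMap<>();

        BranchDb(long residue) {
            this.residue = residue;
        }

        /** Phase one: the business update and the undo log are written together. */
        void phaseOne(String xid, long delta) {
            long before = residue;
            residue = before + delta;
            undoLog.put(xid, new UndoLog(before, residue));
        }

        /** Phase two, commit: the data is already in place, so only cleanup remains. */
        void phaseTwoCommit(String xid) {
            undoLog.remove(xid);
        }

        /** Phase two, rollback: restore the saved "before" value, then drop the log. */
        void phaseTwoRollback(String xid) {
            UndoLog log = undoLog.remove(xid);
            if (log != null) {
                residue = log.before;
            }
        }
    }

    public static void main(String[] args) {
        BranchDb storage = new BranchDb(100);

        storage.phaseOne("xid-1", -10);
        storage.phaseTwoCommit("xid-1");
        System.out.println("after commit:   residue=" + storage.residue
                + ", undo entries=" + storage.undoLog.size());

        storage.phaseOne("xid-2", -10);
        storage.phaseTwoRollback("xid-2");
        System.out.println("after rollback: residue=" + storage.residue
                + ", undo entries=" + storage.undoLog.size());
    }
}
```

Commit has almost nothing to do because phase one already changed the data, which is why it can be handed off asynchronously. Rollback is the only path that touches business data again.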

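4.2.2 Phase one (loading):

4.2.3 Phase two (commit):

If phase two is a successful commit, the business SQL was already committed to the database in phase one, so the Seata framework only has to delete the snapshot data and row locks saved in phase one to finish the cleanup.

4.2.4 Phase two (rollback):

While a distributed transaction is in progress, you can look at the rollback_info column of the undo_log table in the seata_order database to see the before image and the after image.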
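The two images are also what make a safe rollback possible. Before restoring a row, AT mode compares the row's current state with the after image; this is the check that the dataValidation switch in the client file.conf above controls. The sketch below models that decision with plain maps. The names (rollbackRow, Outcome, row) are hypothetical, and the real rollback_info is a serialized blob rather than a Java map.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Simplified model of validating a row against its images before undoing it. */
final class UndoValidationDemo {

    enum Outcome { RESTORED, ALREADY_ROLLED_BACK, DIRTY }

    /** Decides what a rollback does with one row, given its images and its current state. */
    static Outcome rollbackRow(Map<String, Object> current,
                               Map<String, Object> beforeImage,
                               Map<String, Object> afterImage) {
        if (current.equals(afterImage)) {
            // Nobody touched the row since phase one: safe to put the old values back.
            current.clear();
            current.putAll(beforeImage);
            return Outcome.RESTORED;
        }
        if (current.equals(beforeImage)) {
            // The row already looks like it did before the transaction: nothing to undo.
            return Outcome.ALREADY_ROLLED_BACK;
        }
        // Something outside this global transaction changed the row in between.
        return Outcome.DIRTY;
    }

    /** Builds a t_storage-like row with just the columns needed for the demo. */
    static Map<String, Object> row(long id, long residue) {
        Map<String, Object> r = new LinkedHashMap<>();
        r.put("id", id);
        r.put("residue", residue);
        return r;
    }

    public static void main(String[] args) {
        Map<String, Object> before = row(1, 100);
        Map<String, Object> after = row(1, 90);

        System.out.println(rollbackRow(row(1, 90), before, after));   // untouched since phase one
        System.out.println(rollbackRow(row(1, 100), before, after));  // already back to the old state
        System.out.println(rollbackRow(row(1, 85), before, after));   // modified by someone else
    }
}
```

The third case is the one to watch for in practice: if other code writes to the same rows outside a global transaction, the undo log can no longer be applied automatically.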
