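This blog series walks through the book 实战Google深度学习框架 (a hands-on guide to TensorFlow, Google's deep learning framework) in a code + markdown format. (Working through it in Jupyter is recommended.)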

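[实战Google深度学习框架] TensorFlow (1): Setting Up the TF Environment + Getting Started

[实战Google深度学习框架] TensorFlow (2): Deep Neural Networks

[实战Google深度学习框架] TensorFlow (3): The MNIST Digit Recognition Problem

[实战Google深度学习框架] TensorFlow (4): Image Recognition and Convolutional Neural Networks

[实战Google深度学习框架] TensorFlow (5): Image Data Processing

[实战Google深度学习框架] TensorFlow (6): Recurrent Neural Networks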

第八章 循环神经网络

8.1 循环神经网络简介

8.2 长短时记忆网络LSTM结构

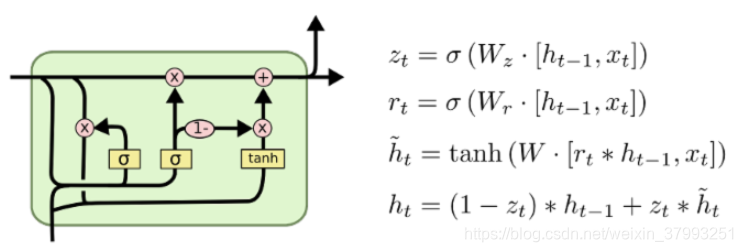

8.3 循环神经网络的变种

8.4 循环神经网络样例应用

8.1 Introduction to Recurrent Neural Networks

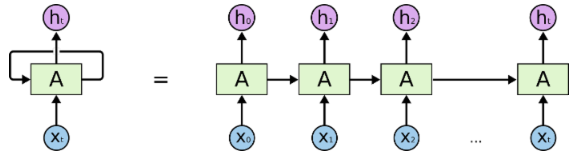

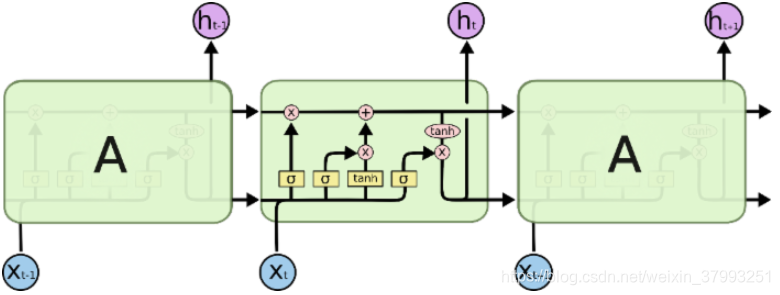

Recurrent neural network

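In the figure, a chunk of neural network, A, reads an input x_i and outputs a value h_i. The loop lets information pass from one step to the next. These loops make RNNs look mysterious. On closer inspection, however, an RNN is not much harder to understand than an ordinary neural network. It can be viewed as multiple copies of the same network, each passing a message to its successor.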

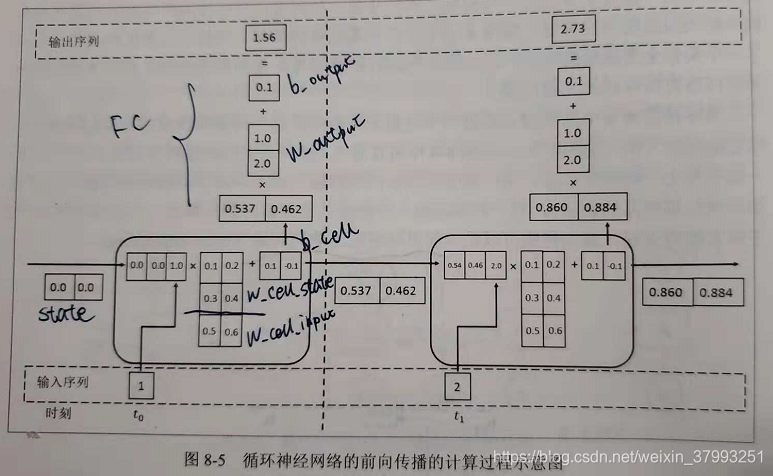

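At each time step t, the RNN combines the input at that step with the current model state to produce an output, and then updates its state. RNNs are widely used in speech recognition, language modeling, machine translation, and time-series analysis.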
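Written as equations, the recurrent cell in the NumPy example of this section computes the following at each step t. The notation is chosen here for illustration. $h_t$ is the state (`state`) and $x_t$ the scalar input (`X[i]`). $W_{\text{state}}, w_{\text{input}}, b_{\text{cell}}, W_{\text{output}}, b_{\text{output}}$ correspond to `w_cell_state`, `w_cell_input`, `b_cell`, `w_output` and `b_output`:

$$
\begin{aligned}
a_t &= h_{t-1} W_{\text{state}} + x_t\, w_{\text{input}} + b_{\text{cell}} \\
h_t &= \tanh(a_t) \\
o_t &= h_t W_{\text{output}} + b_{\text{output}}
\end{aligned}
$$

With that example's parameters, $h_0 = [0, 0]$ and $x_1 = 1$, the first step works out as follows:

$$
a_1 = [0, 0]\,W_{\text{state}} + 1 \cdot [0.5, 0.6] + [0.1, -0.1] = [0.6, 0.5], \quad
h_1 = \tanh(a_1) \approx [0.537, 0.462], \quad
o_1 \approx 0.537 \cdot 1 + 0.462 \cdot 2 + 0.1 \approx 1.561
$$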

import numpy as np

# Input sequence and initial state
X = [1, 2]
state = [0.0, 0.0]
# Weights and bias of the fully connected layer inside the recurrent cell
w_cell_state = np.asarray([[0.1, 0.2], [0.3, 0.4]])
w_cell_input = np.asarray([0.5, 0.6])
b_cell = np.asarray([0.1, -0.1])
# Parameters of the fully connected output layer
w_output = np.asarray([[1.0], [2.0]])
b_output = 0.1
# Forward propagation
for i in range(len(X)):
    # Compute the fully connected layer inside the recurrent cell
    before_activation = np.dot(state, w_cell_state) + X[i] * w_cell_input + b_cell
    state = np.tanh(before_activation)
    # Compute the output at the current time step from the current state
    final_output = np.dot(state, w_output) + b_output
    print("before activation: ", before_activation)
    print("state: ", state)
    print("output: ", final_output)

before activation: [0.6 0.5]
state: [0.53704957 0.46211716]
output: [1.56128388]
before activation: [1.2923401 1.39225678]
state: [0.85973818 0.88366641]
output: [2.72707101]
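The loop above can be wrapped in a reusable function. This makes the "same network applied at every step" view explicit: one set of weights is reused for every element of the sequence. This is a minimal NumPy sketch. The function name `rnn_forward` and its parameter names are illustrative and not from the book. Called with the example's parameters, it reproduces the two outputs printed above.

```python
import numpy as np

def rnn_forward(xs, h0, w_state, w_input, b_cell, w_out, b_out):
    """Run a single-layer tanh RNN over a sequence (hypothetical helper).

    The same weights are reused at every time step (the "unrolled" view).
    Returns the list of per-step outputs and the final state.
    """
    h = np.asarray(h0, dtype=float)
    outputs = []
    for x in xs:
        # New state from the previous state and the current input
        h = np.tanh(h @ w_state + x * w_input + b_cell)
        # Linear output layer applied to the current state
        outputs.append((h @ w_out + b_out).item())
    return outputs, h

outputs, final_state = rnn_forward(
    xs=[1, 2],
    h0=[0.0, 0.0],
    w_state=np.array([[0.1, 0.2], [0.3, 0.4]]),
    w_input=np.array([0.5, 0.6]),
    b_cell=np.array([0.1, -0.1]),
    w_out=np.array([[1.0], [2.0]]),
    b_out=0.1,
)
print(outputs)
```

Because the sequence is just iterated over, the same function handles inputs of any length without changing the parameters.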

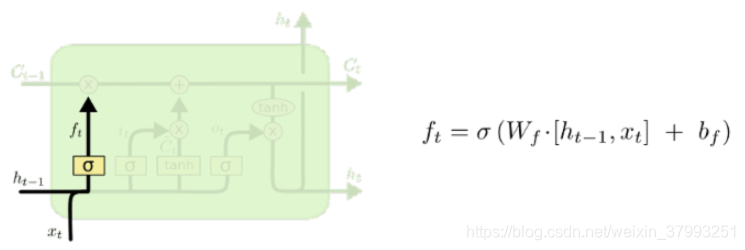

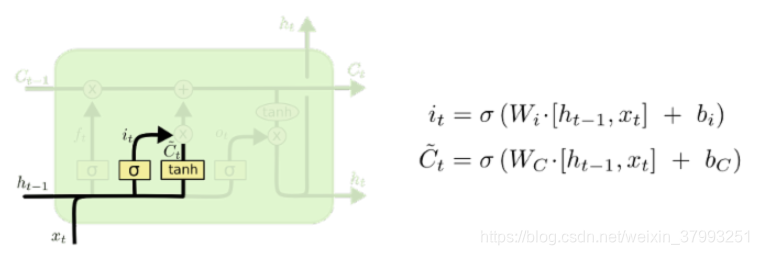

8.2 The Long Short-Term Memory (LSTM) Network Structure

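An RNN can learn to use earlier information when the gap between that information and the point where it is needed is small. As the gap grows large, however, a plain RNN has difficulty connecting the two. LSTM was designed to solve this problem: it can learn long-term dependencies.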

The basic unit of an LSTM is called a cell. Each cell can be viewed as four layers, which carry out the following steps:

- Forget layer: decide what information to discard from the cell state

- Determine what new information to store

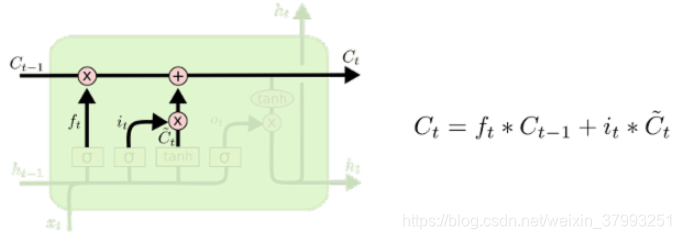

- Update the cell state


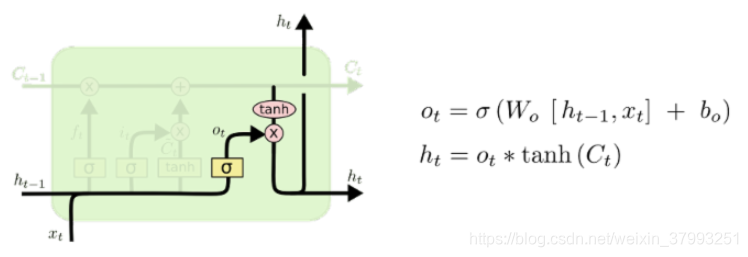

- Output information
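The four steps above can be sketched in NumPy as a single LSTM time step. This is a minimal illustration, not TensorFlow's implementation. The packed weight layout is an assumption made for this sketch: one matrix `W` whose four column blocks hold the forget gate, the input gate, the candidate values and the output gate. The names `lstm_step`, `W` and `b` are hypothetical as well.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM time step (hypothetical helper).

    W has shape (input_size + hidden_size, 4 * hidden_size) and b has shape
    (4 * hidden_size,). The column-block order [forget, input, candidate, output]
    is an assumption of this sketch.
    """
    hidden = h_prev.shape[-1]
    z = np.concatenate([x, h_prev], axis=-1) @ W + b
    f = sigmoid(z[..., 0 * hidden:1 * hidden])        # forget layer: what to drop from c
    i = sigmoid(z[..., 1 * hidden:2 * hidden])        # which values to update
    c_tilde = np.tanh(z[..., 2 * hidden:3 * hidden])  # candidate new information
    o = sigmoid(z[..., 3 * hidden:4 * hidden])        # which parts of the state to output
    c = f * c_prev + i * c_tilde                      # update the cell state
    h = o * np.tanh(c)                                # output information
    return h, c

rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4
W = rng.normal(scale=0.1, size=(input_size + hidden_size, 4 * hidden_size))
b = np.zeros(4 * hidden_size)
h = np.zeros(hidden_size)
c = np.zeros(hidden_size)
for x in rng.normal(size=(5, input_size)):  # a toy sequence of 5 steps
    h, c = lstm_step(x, h, c, W, b)
print(h.shape, c.shape)
```

The cell state `c` is updated only by element-wise scaling and addition. This additive path is what lets an LSTM carry information across many steps more easily than the plain tanh RNN in 8.1.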

Putting it all together:

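# LSTM (TensorFlow 1.x)
import tensorflow as tf
from tensorflow.contrib import rnn

# Example sizes for illustration; replace with your own
lstm_hidden_size, batch_size, num_steps, input_size = 64, 32, 10, 8
inputs = tf.placeholder(tf.float32, [batch_size, num_steps, input_size])
expected_outputs = tf.placeholder(tf.float32, [batch_size, num_steps, 1])

# Define an LSTM cell with lstm_hidden_size hidden units
lstm = rnn.BasicLSTMCell(lstm_hidden_size)
# Initialize the LSTM state to all zeros
state = lstm.zero_state(batch_size, tf.float32)
# Loss accumulated over all time steps
loss = 0.0
with tf.variable_scope("lstm_example"):
    # num_steps is the sequence length
    for i in range(num_steps):
        # Variables are created at the first step and reused at later steps
        if i > 0: tf.get_variable_scope().reuse_variables()
        # Each iteration processes one time step
        current_input = inputs[:, i, :]
        lstm_output, state = lstm(current_input, state)
        # tf.layers.dense stands in for the book's fully_connected output layer
        final_output = tf.layers.dense(lstm_output, 1, name="output")
        # Mean squared error stands in for the book's calc_loss
        loss += tf.losses.mean_squared_error(expected_outputs[:, i, :], final_output)

8.3 Variants of Recurrent Neural Networks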

An LSTM variant: GRU
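The GRU merges the LSTM's forget and input gates into a single update gate and adds a reset gate. It also folds the cell state into the hidden state. Below is a minimal NumPy sketch of one GRU step. The weight names are hypothetical. The interpolation convention is assumed: h_t = z * h_{t-1} + (1 - z) * candidate, which is the form used by TensorFlow's `GRUCell`. Some texts swap z and 1 - z.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_step(x, h_prev, Wz, Wr, Wh, bz, br, bh):
    """One GRU time step (hypothetical helper); each W acts on [x, h]."""
    xh = np.concatenate([x, h_prev])
    z = sigmoid(xh @ Wz + bz)  # update gate
    r = sigmoid(xh @ Wr + br)  # reset gate: how much of h_prev feeds the candidate
    h_tilde = np.tanh(np.concatenate([x, r * h_prev]) @ Wh + bh)  # candidate state
    # Assumed convention: keep z of the old state, take (1 - z) of the candidate
    return z * h_prev + (1.0 - z) * h_tilde

input_size, hidden_size = 2, 2
zeros_w = np.zeros((input_size + hidden_size, hidden_size))
zeros_b = np.zeros(hidden_size)
# With all-zero weights both gates equal 0.5 and the candidate is 0, so h = 0.5 * h_prev
h = gru_step(np.array([0.3, -0.7]), np.array([1.0, -2.0]),
             zeros_w, zeros_w, zeros_w, zeros_b, zeros_b, zeros_b)
print(h)
```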

8.3.1 Bidirectional RNNs and Deep RNNs

- Bidirectional RNN

- Deep RNN
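The two structures in the list can be sketched in NumPy with a plain tanh RNN layer. A bidirectional RNN runs one RNN left to right and another right to left over the same sequence, then concatenates their states at each time step. A deep RNN stacks layers so that each layer's state sequence becomes the next layer's input. Function names, sizes and the random initialization are illustrative assumptions, not the book's code.

```python
import numpy as np

def rnn_layer(xs, W_x, W_h, b):
    """Run a tanh RNN over xs (shape [T, input_size]); return all states [T, hidden]."""
    h = np.zeros(W_h.shape[0])
    states = []
    for x in xs:
        h = np.tanh(x @ W_x + h @ W_h + b)
        states.append(h)
    return np.stack(states)

def bidirectional_rnn(xs, fw_params, bw_params):
    """Forward pass left to right, backward pass right to left, concatenated per step."""
    fw = rnn_layer(xs, *fw_params)
    bw = rnn_layer(xs[::-1], *bw_params)[::-1]  # re-reverse so bw[t] aligns with step t
    return np.concatenate([fw, bw], axis=-1)

def deep_rnn(xs, layer_params):
    """Stack RNN layers: each layer's state sequence is the next layer's input."""
    out = xs
    for params in layer_params:
        out = rnn_layer(out, *params)
    return out

def make_params(rng, in_size, hidden):
    return (rng.normal(scale=0.5, size=(in_size, hidden)),
            rng.normal(scale=0.5, size=(hidden, hidden)),
            np.zeros(hidden))

rng = np.random.default_rng(0)
T, input_size, hidden = 6, 3, 4
xs = rng.normal(size=(T, input_size))
fw_params = make_params(rng, input_size, hidden)
bw_params = make_params(rng, input_size, hidden)
bi = bidirectional_rnn(xs, fw_params, bw_params)
deep = deep_rnn(xs, [make_params(rng, input_size, hidden),
                     make_params(rng, hidden, hidden),
                     make_params(rng, hidden, hidden)])
print(bi.shape, deep.shape)  # (6, 8) (6, 4)
```

The bidirectional output has twice the hidden size because each step carries both a forward and a backward state.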

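# Deep RNN: stacked LSTM layers (TensorFlow 1.x)
import tensorflow as tf

# Example sizes for illustration; replace with your own
lstm_size, number_of_layers, batch_size, num_steps, input_size = 64, 3, 32, 10, 8
inputs = tf.placeholder(tf.float32, [batch_size, num_steps, input_size])
expected_outputs = tf.placeholder(tf.float32, [batch_size, num_steps, 1])

# Use a basic LSTM cell as the building block of each layer
lstm_cell = tf.nn.rnn_cell.BasicLSTMCell
# number_of_layers is the number of stacked layers; each layer gets its own cell instance
stacked_lstm = tf.nn.rnn_cell.MultiRNNCell(
    [lstm_cell(lstm_size) for _ in range(number_of_layers)])
state = stacked_lstm.zero_state(batch_size, tf.float32)
loss = 0.0
with tf.variable_scope("deep_rnn_example"):
    for i in range(num_steps):
        if i > 0: tf.get_variable_scope().reuse_variables()
        stacked_lstm_output, state = stacked_lstm(inputs[:, i, :], state)
        final_output = tf.layers.dense(stacked_lstm_output, 1, name="output")
        loss += tf.losses.mean_squared_error(expected_outputs[:, i, :], final_output)

8.3.2 Dropout in Recurrent Neural Networks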

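Dropout is not applied when the state passes from time t-1 to time t. It is applied only between the stacked layers of the recurrent cell at the same time step t.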

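# Dropout (TensorFlow 1.x)
import tensorflow as tf
lstm_size, number_of_layers = 64, 3  # example sizes
lstm_cell = tf.nn.rnn_cell.BasicLSTMCell
# Keep probabilities default to 1.0 (no dropout); 0.5 here is only an example value
stacked_lstm = tf.nn.rnn_cell.MultiRNNCell(
    [tf.nn.rnn_cell.DropoutWrapper(lstm_cell(lstm_size), output_keep_prob=0.5)
     for _ in range(number_of_layers)])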
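The dropout rule above (drop units on layer-to-layer connections, never on the time-to-time recurrent path) can be sketched in NumPy with a stacked tanh RNN. This is an illustration of the rule, not TensorFlow's `DropoutWrapper` code. The helper names, sizes and the choice of inverted dropout (rescaling kept units by 1 / keep_prob) are assumptions of this sketch.

```python
import numpy as np

def dropout(v, keep_prob, rng):
    """Inverted dropout: zero each unit with probability 1 - keep_prob, rescale the rest."""
    mask = rng.random(v.shape) < keep_prob
    return v * mask / keep_prob

def stacked_rnn_with_dropout(xs, layer_params, keep_prob, rng):
    """Stacked tanh RNN with dropout on layer-to-layer connections only (hypothetical helper).

    Each layer's recurrent state hs[k] (time t-1 -> t) is kept intact; only the
    copy handed to the layer above at the same time step is dropped.
    """
    hs = [np.zeros(W_h.shape[0]) for (_, W_h, _) in layer_params]
    top_outputs = []
    for x in xs:
        inp = x
        for k, (W_x, W_h, b) in enumerate(layer_params):
            hs[k] = np.tanh(inp @ W_x + hs[k] @ W_h + b)  # recurrent path: no dropout
            inp = dropout(hs[k], keep_prob, rng)           # vertical path: dropout
        top_outputs.append(inp)
    return np.stack(top_outputs)

rng = np.random.default_rng(0)
params = [(rng.normal(size=(3, 4)), rng.normal(size=(4, 4)), np.zeros(4)),
          (rng.normal(size=(4, 4)), rng.normal(size=(4, 4)), np.zeros(4))]
xs = rng.normal(size=(5, 3))
out = stacked_rnn_with_dropout(xs, params, keep_prob=0.5, rng=rng)
print(out.shape)
```

With `keep_prob=1.0` the masks keep every unit, so the result is identical to a stacked RNN without dropout.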

8.4 Example Application of Recurrent Neural Networks

import numpy as np
import tensorflow as tf
from tensorflow.contrib.learn.python.learn.estimators.estimator import SKCompat
from tensorflow.python.ops import array_ops as array_ops_
import matplotlib.pyplot as plt

learn = tf.contrib.learn

HIDDEN_SIZE = 30            # number of hidden units per LSTM layer
NUM_LAYERS = 2              # number of stacked LSTM layers
TIMESTEPS = 10              # length of each input window
TRAINING_STEPS = 3000       # number of training steps
BATCH_SIZE = 32             # batch size
TRAINING_EXAMPLES = 10000   # number of training samples
TESTING_EXAMPLES = 1000     # number of test samples
SAMPLE_GAP = 0.01           # sampling interval

def generate_data(seq):
    X = []
    y = []
    # Each sample uses TIMESTEPS consecutive values as input and the next value as its label.
    # X has shape [N, 1, TIMESTEPS], so dynamic_rnn sees one time step with TIMESTEPS features.
    for i in range(len(seq) - TIMESTEPS - 1):
        X.append([seq[i: i + TIMESTEPS]])
        y.append([seq[i + TIMESTEPS]])
    return np.array(X, dtype=np.float32), np.array(y, dtype=np.float32)

def lstm_model(X, y):
    # Use a multi-layer LSTM structure
    # lstm_cell = tf.contrib.rnn.BasicLSTMCell(HIDDEN_SIZE, state_is_tuple=True)
    stack_rnn = []
    for i in range(NUM_LAYERS):
        # Build a separate cell object for each layer
        stack_rnn.append(tf.contrib.rnn.BasicLSTMCell(HIDDEN_SIZE, state_is_tuple=True))
    cell = tf.contrib.rnn.MultiRNNCell(stack_rnn, state_is_tuple=True)
    # cell = tf.contrib.rnn.MultiRNNCell([lstm_cell] * NUM_LAYERS)  # shares one cell object across all layers
    output, _ = tf.nn.dynamic_rnn(cell, X, dtype=tf.float32)
    output = tf.reshape(output, [-1, HIDDEN_SIZE])
    # A fully connected layer with no activation function computes the linear regression
    predictions = tf.contrib.layers.fully_connected(output, 1, None)
    # Flatten predictions and labels to the same 1-D shape
    labels = tf.reshape(y, [-1])
    predictions = tf.reshape(predictions, [-1])
    loss = tf.losses.mean_squared_error(labels, predictions)
    train_op = tf.contrib.layers.optimize_loss(
        loss, tf.contrib.framework.get_global_step(),
        optimizer="Adagrad", learning_rate=0.1)
    return predictions, loss, train_op

# Wrap the LSTM model defined above in an Estimator.
regressor = SKCompat(learn.Estimator(model_fn=lstm_model, model_dir="Models/model_2"))

# Generate data: training and test sets come from consecutive, non-overlapping ranges of sin(x).
test_start = TRAINING_EXAMPLES * SAMPLE_GAP
test_end = (TRAINING_EXAMPLES + TESTING_EXAMPLES) * SAMPLE_GAP
train_X, train_y = generate_data(np.sin(np.linspace(
    0, test_start, TRAINING_EXAMPLES, dtype=np.float32)))
test_X, test_y = generate_data(np.sin(np.linspace(
    test_start, test_end, TESTING_EXAMPLES, dtype=np.float32)))

# Fit the model.
regressor.fit(train_X, train_y, batch_size=BATCH_SIZE, steps=TRAINING_STEPS)

# Compute predictions.
predicted = [[pred] for pred in regressor.predict(test_X)]

# Compute the RMSE (square root of the MSE); the message printed below labels it "Mean Square Error".
rmse = np.sqrt(((predicted - test_y) ** 2).mean(axis=0))
print("Mean Square Error is: %f" % rmse[0])

INFO:tensorflow:Using default config.

INFO:tensorflow:Using config: {'_task_type': None, '_tf_random_seed': None, '_save_summary_steps': 100, '_num_worker_replicas': 0, '_task_id': 0, '_master': '', '_model_dir': 'Models/model_2', '_environment': 'local', '_cluster_spec': <tensorflow.python.training.server_lib.ClusterSpec object at 0x00000235C9C464E0>, '_evaluation_master': '', '_keep_checkpoint_max': 5, '_keep_checkpoint_every_n_hours': 10000, '_save_checkpoints_secs': 600, '_save_checkpoints_steps': None, '_session_config': None, '_tf_config': gpu_options {
  per_process_gpu_memory_fraction: 1
}
, '_is_chief': True, '_num_ps_replicas': 0}
INFO:tensorflow:Create CheckpointSaverHook.
INFO:tensorflow:Saving checkpoints for 1 into Models/model_2\model.ckpt.
INFO:tensorflow:step = 1, loss = 0.47839618
INFO:tensorflow:global_step/sec: 430.922
INFO:tensorflow:step = 101, loss = 0.004381972 (0.240 sec)
INFO:tensorflow:global_step/sec: 409.73
INFO:tensorflow:step = 201, loss = 0.0045765224 (0.240 sec)
INFO:tensorflow:global_step/sec: 414.83
INFO:tensorflow:step = 301, loss = 0.0027877581 (0.239 sec)
INFO:tensorflow:global_step/sec: 436.57
INFO:tensorflow:step = 401, loss = 0.003011153 (0.230 sec)
INFO:tensorflow:global_step/sec: 558.501
INFO:tensorflow:step = 501, loss = 0.0025163838 (0.179 sec)
INFO:tensorflow:global_step/sec: 549.323
INFO:tensorflow:step = 601, loss = 0.0021143623 (0.184 sec)
INFO:tensorflow:global_step/sec: 520.698
INFO:tensorflow:step = 701, loss = 0.0013500093 (0.190 sec)
INFO:tensorflow:global_step/sec: 531.772
INFO:tensorflow:step = 801, loss = 0.0014220935 (0.189 sec)
INFO:tensorflow:global_step/sec: 520.701
INFO:tensorflow:step = 901, loss = 0.0011332377 (0.191 sec)
INFO:tensorflow:global_step/sec: 504.923
INFO:tensorflow:step = 1001, loss = 0.00088214123 (0.197 sec)
INFO:tensorflow:global_step/sec: 471.575
INFO:tensorflow:step = 1101, loss = 0.0007954753 (0.212 sec)
INFO:tensorflow:global_step/sec: 492.485
INFO:tensorflow:step = 1201, loss = 0.0008214351 (0.204 sec)
INFO:tensorflow:global_step/sec: 473.81
INFO:tensorflow:step = 1301, loss = 0.0005900164 (0.211 sec)
INFO:tensorflow:global_step/sec: 492.774
INFO:tensorflow:step = 1401, loss = 0.00063968275 (0.203 sec)
INFO:tensorflow:global_step/sec: 469.362
INFO:tensorflow:step = 1501, loss = 0.00068045617 (0.214 sec)
INFO:tensorflow:global_step/sec: 440.416
INFO:tensorflow:step = 1601, loss = 0.0005436362 (0.226 sec)
INFO:tensorflow:global_step/sec: 420.056
INFO:tensorflow:step = 1701, loss = 0.00047062963 (0.239 sec)
INFO:tensorflow:global_step/sec: 452.373
INFO:tensorflow:step = 1801, loss = 0.00049600465 (0.223 sec)
INFO:tensorflow:global_step/sec: 389.006
INFO:tensorflow:step = 1901, loss = 0.00036307215 (0.254 sec)
INFO:tensorflow:global_step/sec: 442.364
INFO:tensorflow:step = 2001, loss = 0.00025714404 (0.226 sec)
INFO:tensorflow:global_step/sec: 403.119
INFO:tensorflow:step = 2101, loss = 0.00022472427 (0.250 sec)
INFO:tensorflow:global_step/sec: 444.333
INFO:tensorflow:step = 2201, loss = 0.00029125522 (0.224 sec)
INFO:tensorflow:global_step/sec: 476.066
INFO:tensorflow:step = 2301, loss = 0.00015195832 (0.211 sec)
INFO:tensorflow:global_step/sec: 446.315
INFO:tensorflow:step = 2401, loss = 0.0001383292 (0.222 sec)
INFO:tensorflow:global_step/sec: 462.844
INFO:tensorflow:step = 2501, loss = 0.000150413 (0.218 sec)
INFO:tensorflow:global_step/sec: 444.329
INFO:tensorflow:step = 2601, loss = 0.00012988682 (0.224 sec)
INFO:tensorflow:global_step/sec: 456.503
INFO:tensorflow:step = 2701, loss = 0.00012928667 (0.218 sec)
INFO:tensorflow:global_step/sec: 450.334
INFO:tensorflow:step = 2801, loss = 0.00010170178 (0.223 sec)
INFO:tensorflow:global_step/sec: 458.596
INFO:tensorflow:step = 2901, loss = 6.4593165e-05 (0.217 sec)
INFO:tensorflow:Saving checkpoints for 3000 into Models/model_2\model.ckpt.
INFO:tensorflow:Loss for final step: 7.6830984e-05.
INFO:tensorflow:Restoring parameters from Models/model_2\model.ckpt-3000

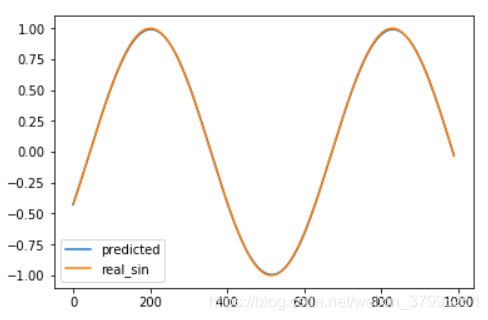

Mean Square Error is: 0.008494plot_predicted, = plt.plot(predicted, label='predicted')

plot_test, = plt.plot(test_y, label='real_sin')

plt.legend([plot_predicted, plot_test],['predicted', 'real_sin'])

plt.show()
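To make the data layout concrete, the sketch below reruns the same sliding-window logic as `generate_data` on a toy sequence. The toy sequence `np.arange(15)` is an illustrative assumption. Each sample is wrapped in an extra list, so X has shape [N, 1, TIMESTEPS]. `tf.nn.dynamic_rnn` defaults to a batch-major layout ([batch, time, features]), so the LSTM above sees a single time step carrying TIMESTEPS features. The `- 1` in the loop bound also leaves the last possible window unused.

```python
import numpy as np

TIMESTEPS = 10

def generate_data(seq):
    """Same sliding-window logic as generate_data in the example above."""
    X, y = [], []
    for i in range(len(seq) - TIMESTEPS - 1):
        X.append([seq[i: i + TIMESTEPS]])  # a window of TIMESTEPS values, wrapped in an extra list
        y.append([seq[i + TIMESTEPS]])     # the value right after the window
    return np.array(X, dtype=np.float32), np.array(y, dtype=np.float32)

seq = np.arange(15, dtype=np.float32)  # toy sequence 0, 1, ..., 14
X, y = generate_data(seq)
print(X.shape, y.shape)  # (4, 1, 10) (4, 1)
print(X[0, 0], y[0])     # inputs 0..9, label 10
```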

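This post covers the principles and implementation of recurrent neural networks (RNNs) and their variant, the long short-term memory network (LSTM). It uses concrete examples to show how to build RNN models with TensorFlow for sequence prediction. Topics include basic RNN concepts, the LSTM structure, bidirectional and deep RNNs, dropout, and an application to time-series prediction.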

