1. 集群的规划

描述:hadoop HA机制的搭建依赖与zookeeper,所以选取三台当作zookeeper集群,总共准备了4台主机,分别是hadoop01,hadoop02,hadoop03,hadoop04,其中hadoop01和hadoop02做namenode主备的切换,hadoop03和hadoop04作为resourcemanager的切换。

| hadoop01 | hadoop02 | hadoop03 | hadoop04 | |

| namenode | ✓ | ✓ | ||

| datanode | ✓ | ✓ | ✓ | ✓ |

| resourcemanager | ✓ | ✓ | ||

| nodemanager | ✓ | ✓ | ✓ | ✓ |

| zookeeper | ✓ | ✓ | ✓ | |

| journalnode | ✓ | ✓ | ✓ | |

| zkfc | ✓ | ✓ |

2. 集群服务器的准备

- 修改主机名和主机IP

- 添加主机名和IP映射

- 添加普通用户到sudoers权限

- 设置服务启动级别

- 同步Linux时间

- 关闭防火墙

- 配置SSH免密登陆

- 安装JDK

- 修改主机名和IP

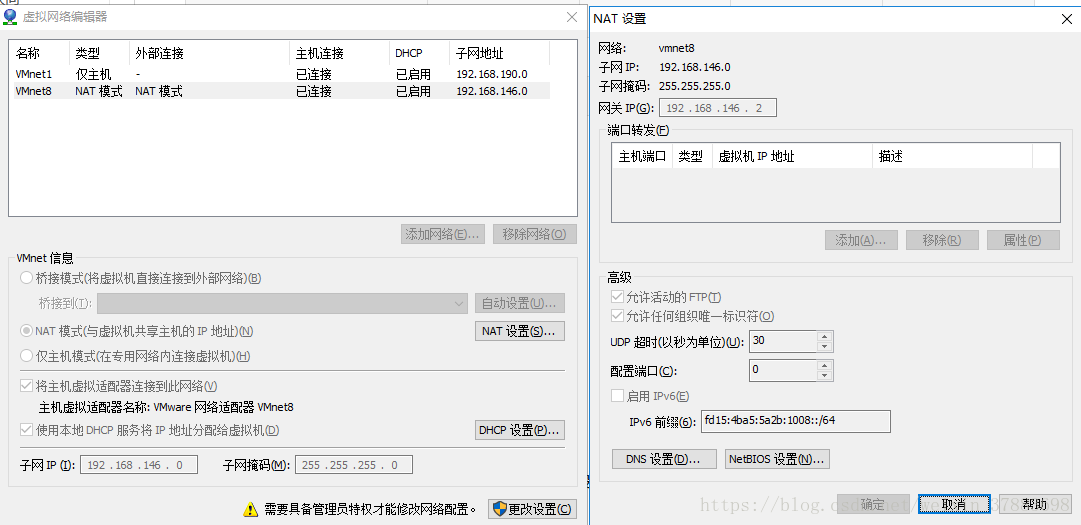

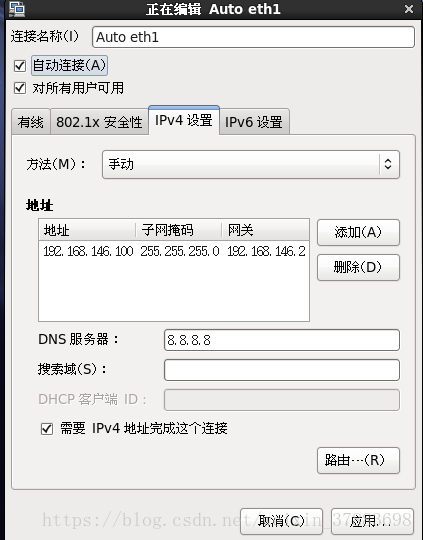

修改虚拟机中的Linux IP,首先查看虚拟机Vmnet8网络设置,其中我的网关是192.168.146.2

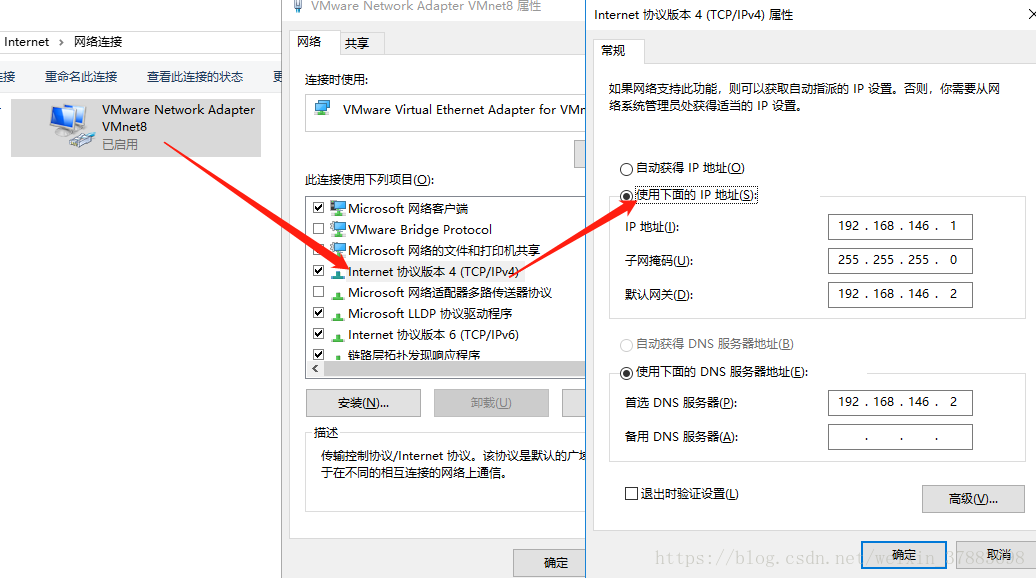

然后修改本地VMnet8网络设置:

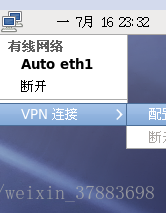

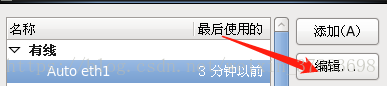

接着修改Linux网络设置(Redhat6.5):

修改好IP后修改主机名:

1. 修改hosts配置:[root@hadoop01 hyotei]# vi /etc/hosts 127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4 ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6 192.168.146.100 hadoop01 192.168.146.101 hadoop02 192.168.146.102 hadoop03 192.168.146.103 hadoop04 ##因为我使用了4台主机,所以将4台主机名及IP都加入##2.修改network设置:

[root@hadoop01 hyotei]# vi /etc/sysconfig/network NETWORKING=yes HOSTNAME=hadoop01之后重启即可

-

添加windows主机名和映射:

我是用的是sublime text 打开windows 下的hosts文件,首先右击sublime使用管理员身份打开,然后从sublime中找到windows下的hosts文件(C:\Windows\System32\drivers\etc\hosts)192.168.146.100 hadoop01 192.168.146.101 hadoop02 192.168.146.102 hadoop03 192.168.146.103 hadoop04将以下内容添加到文件中,然后重启电脑即可

-

添加用户到sudoers 参考:https://blog.youkuaiyun.com/eldn__/article/details/26767997

-

Linux 同步时间:https://blog.youkuaiyun.com/W77_20/article/details/79125859

-

关闭防火墙(不同的操作系统关闭指令有些差别):https://blog.youkuaiyun.com/qq_34989708/article/details/73603638

-

设置SSH免密登陆:

[root@hadoop01 hyotei]# ssh-keygen -t rsa (这个是用来生成密钥对的) [root@hadoop01 hyotei]# ssh-copy-id hadoop02 这样hadoop01就可以利用ssh 远程登陆hadoop02

3. 集群的安装

- 安装zookeeper,下载地址:http://www-eu.apache.org/dist/zookeeper/

1.1 首先解压zookeeper文件[hyotei@hadoop01 ~]$ tar zookeeper-3.4.6.tar.gz -C /usr/app 解压到/usr/app文件夹下1.2 配置zoo.cfg文件:

[hyotei@hadoop01 ~]$cd zookeeper-3.4.6/conf/ [hyotei@hadoop01 ~]$mv zoo-sample.cfg zoo.cfg [hyotei@hadoop01 ~]$vi zoo.cfg # The number of milliseconds of each tick tickTime=2000 # The number of ticks that the initial # synchronization phase can take initLimit=10 # The number of ticks that can pass between # sending a request and getting an acknowledgement syncLimit=5 # the directory where the snapshot is stored. # do not use /tmp for storage, /tmp here is just # example sakes. dataDir=/home/hyotei/opt/zookeeper (制定zookeeper产生文件的目录) # the port at which the clients will connect clientPort=2181 # the maximum number of client connections. # increase this if you need to handle more clients #maxClientCnxns=60 # # Be sure to read the maintenance section of the # administrator guide before turning on autopurge. # # http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance # # The number of snapshots to retain in dataDir #autopurge.snapRetainCount=3 # Purge task interval in hours # Set to "0" to disable auto purge feature #autopurge.purgeInterval=1 server.1=hadoop01:2888:3888 server.2=hadoop02:2888:3888 server.3=hadoop03:2888:3888将zookeeper文件拷贝到hadoop02与hadoop03上去

[hyotei@hadoop01 ~]$scp -r zookeeper-3.4.6 hadoop02:/home/hyotei/zookeeper-3.4.6 [hyotei@hadoop01 ~]$scp -r zookeeper-3.4.6 hadoop03:/home/hyotei/zookeeper-3.4.61.3配置myid,在hadoop01中:

在该文件夹下执行:

在该文件夹下执行:[hyotei@hadoop01 zookeeper]$mkdir data [hyotei@hadoop01 zookeeper]$echo 1 > myid在hadoop02,hadoop03下类似的执行

[hyotei@hadoop02 zookeeper]$echo 2 > myid [hyotei@hadoop03 zookeeper]$echo 3 > myid1.4为方便zookeeper启动,将zookeeper添加大环境变量中:

[hyotei@hadoop01 zookeeper]$sudo vi /etc/profile 添加 export ZK_HOME=/home/hyotei/hadoop/zookeeper-3.4.6/ export PATH==${JAVA_HOME}/bin:${ZK_HOME}/bin:$PATH [hyotei@hadoop01 zookeeper]$source /etc.profile [hyotei@hadoop01 zookeeper]$zkServer.sh start (启动zk) [hyotei@hadoop01 zookeeper]$zkServer.sh status (查看zk状态) [hyotei@hadoop01 zookeeper]$zkServer.sh stop (停止zk) -

安装hadoop集群(下载地址:http://www-us.apache.org/dist/hadoop/common/)

2.1解压[hyotei@hadoop01 ~]$tar -zxvf hadoop-2.7.4.tar.gz -C /usr/app/hadoop-2.7.42.2修改配置文件:配置文件在/hadoop-2.7.4/etc/hadoop下

2.2.1修改hadoop-env.sh:[hyotei@hadoop01 hadoop]$ vi hadoop-env.sh export JAVA_HOME=/usr/lib/jvm/jdk1.8.0_171/ (将自己JAVA_HOME位置手动添加) JAVA_HOME位置可使用查到: echo $JAVA_HOME2.2.2修改core-site.xml:

<configuration> <!-- 指定 hdfs 的 nameservice 为 myha01 --> <property> <name>fs.defaultFS</name> <value>hdfs://myhadoop/</value> </property> <!-- 指定 hadoop 工作目录 --> <property> <name>hadoop.tmp.dir</name> <value>/home/hadoop/data/hadoopdata/</value> </property> <!-- 指定 zookeeper 集群访问地址 --> <property> <name>ha.zookeeper.quorum</name> <value>hadoop01:2181,hadoop02:2181,hadoop03:2181</value> </property> </configuration>2.2.3修改hdfs-site.xml:

<configuration> <!-- 指定副本数 --> <property> <name>dfs.replication</name> <value>2</value> </property> <!--指定 hdfs 的 nameservice 为 myhadoop,需要和 core-site.xml 中保持一致--> <property> <name>dfs.nameservices</name> <value>myhadoop</value> </property> <!-- myhadoop 下面有两个 NameNode,分别是 nn1,nn2 --> <property> <name>dfs.ha.namenodes.myhadoop</name> <value>nn1,nn2</value> </property> <!-- nn1 的 RPC 通信地址 --> <property> <name>dfs.namenode.rpc-address.myhadoop.nn1</name> <value>hadoop01:9000</value> </property> <!-- nn1 的 http 通信地址 --> <property> <name>dfs.namenode.http-address.myhadoop.nn1</name> <value>hadoop01:50070</value> </property> <!-- nn2 的 RPC 通信地址 --> <property> <name>dfs.namenode.rpc-address.myhadoop.nn2</name> <value>hadoop02:9000</value> </property> <!-- nn2 的 http 通信地址 --> <property> <name>dfs.namenode.http-address.myhadoop.nn2</name> <value>hadoop02:50070</value> </property> <!-- 指定 NameNode 的 edits 元数据在 JournalNode 上的存放位置 --> <property> <name>dfs.namenode.shared.edits.dir</name> <value>qjournal://hadoop01:8485;hadoop02:8485;hadoop03:8485/myhadoop</value> </property> <!-- 指定 JournalNode 在本地磁盘存放数据的位置 --> <property> <name>dfs.journalnode.edits.dir</name> <value>/home/hadoop/data/journaldata</value> </property> <!-- 开启 NameNode 失败自动切换 --> <property> <name>dfs.ha.automatic-failover.enabled</name> <value>true</value> </property> <!-- 配置失败自动切换实现方式 --> <!-- 此处配置在安装的时候切记检查不要换行--> <property> <name>dfs.client.failover.proxy.provider.myhadoop</name> <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value> </property> <!-- 配置隔离机制方法,多个机制用换行分割,即每个机制暂用一行--> <property> <name>dfs.ha.fencing.methods</name> <value> sshfence shell(/bin/true) </value> </property> <!-- 使用 sshfence 隔离机制时需要 ssh 免登陆 --> <property> <name>dfs.ha.fencing.ssh.private-key-files</name> <value>/home/hyotei/.ssh/id_rsa</value> </property> <!-- 配置 sshfence 隔离机制超时时间 --> <property> <name>dfs.ha.fencing.ssh.connect-timeout</name> <value>30000</value> </property> </configuration>2.2.4修改mapred-site.xml:(需要先将mapred-site.xml.template改名mapred-site.xml)

<configuration> <!-- 指定 mr 框架为 yarn 方式 --> <property> <name>mapreduce.framework.name</name> <value>yarn</value> </property> <!-- 设置 mapreduce 的历史服务器地址和端口号 --> <property> <name>mapreduce.jobhistory.address</name> <value>hadoop01:10020</value> </property> <!-- mapreduce 历史服务器的 web 访问地址 --> <property> <name>mapreduce.jobhistory.webapp.address</name> <value>hadoop01:19888</value> </property> </configuration>2.2.5修改yarn-site.xml:

<configuration> <!-- 开启 RM 高可用 --> <property> <name>yarn.resourcemanager.ha.enabled</name> <value>true</value> </property> <!-- 指定 RM 的 cluster id --> <property> <name>yarn.resourcemanager.cluster-id</name> <value>yrc</value> </property> <!-- 指定 RM 的名字 --> <property> <name>yarn.resourcemanager.ha.rm-ids</name> <value>rm1,rm2</value> </property> <!-- 分别指定 RM 的地址 --> <property> <name>yarn.resourcemanager.hostname.rm1</name> <value>hadoop03</value> </property> <property> <name>yarn.resourcemanager.hostname.rm2</name> <value>hadoop04</value> </property> <!-- 指定 zk 集群地址 --> <property> <name>yarn.resourcemanager.zk-address</name> <value>hadoop01:2181,hadoop02:2181,hadoop03:2181</value> </property> <!-- 要运行 MapReduce 程序必须配置的附属服务 --> <property> <name>yarn.nodemanager.aux-services</name> <value>mapreduce_shuffle</value> </property> <!-- 开启 YARN 集群的日志聚合功能 --> <property> <name>yarn.log-aggregation-enable</name> <value>true</value> </property> <!-- YARN 集群的聚合日志最长保留时长 --> <property> <name>yarn.log-aggregation.retain-seconds</name> <value>86400</value> </property> <!-- 启用自动恢复 --> <property> <name>yarn.resourcemanager.recovery.enabled</name> <value>true</value> </property> <!-- 制定 resourcemanager 的状态信息存储在 zookeeper 集群上--> <property> <name>yarn.resourcemanager.store.class</name> <value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value> </property> <property> <name>yarn.nodemanager.resource.memory-mb</name> <value>1536</value> </property> <property> <name>yarn.nodemanager.resource.cpu-vcores</name> <value>1</value> </property> </configuration>2.2.6修改slaves:

[hyotei@hadoop01 hadoop]$ vi slaves 添加datanode结点地址: hadoop01 hadoop02 hadoop03 hadoop042.2.7分发安装包到其他机器:

scp -r hadoop-2.7.4 hadoop02:/home/hyotei/hadoop-2.7.4 scp -r hadoop-2.7.4 hadoop03:/home/hyotei/hadoop-2.7.4 scp -r hadoop-2.7.4 hadoop04:/home/hyotei/hadoop-2.7.42.2.8每台机器上分别配置环境变量:

[hyotei@hadoop01 ~]$ sudo vi /etc/profile 添加 export HADOOP_HOME=/home/hyotei/hadoop-2.7.4 export PATH=${JAVA_HOME}/bin:${HADOOP_HOME}/bin:${HADOOP_HOME}/sbin:${ZK_HOME}/bin:$PATH 保存退出 [hyotei@hadoop01 ~]$ source /etc/profile -

集群初始化(严格要求):

3.1先启动zookeeper(hadoop01,hadoop02,hadoop03)都需要启动[hyotei@hadoop01 ~]$zkServer.sh start [hyotei@hadoop01 ~]$zkServer.sh status (检查是否启动成功)3.2分别启动journalnode:

[hyotei@hadoop01 ~]$hadoop-daemon.sh start journalnode [hyotei@hadoop02 ~]$hadoop-daemon.sh start journalnode [hyotei@hadoop03 ~]$hadoop-daemon.sh start journalnodejps检查是否有jurnalnode 进程

3.3第一个namenode节点进行初始化操作:[hyotei@hadoop01 ~]$hadoop namenode -format然后会在 core-site.xml 中配置的临时目录中生成一些集群的信息,把他拷贝的第二个 namenode 的相同目录下

<name>hadoop.tmp.dir</name> <value>/home/hyotei/hadoop-2.7.4/data/hadoopdata/</value>[hyotei@hadoop01 hadoop-2.7.4]$scp -r ./data/hadoopdata/ hadoop02:/home/hyotei/hadoop-2.7.4/data 或者第二个节点 [hyotei@hadoop02 ~]$hadoop namenode -bootstrapStandby3.4格式化zkfc(第一台机器上即可):

[hyotei@hadoop01 hadoop-2.7.4]$hdfs zkfc -formatZK3.5启动HDFS:

[hyotei@hadoop01 hadoop-2.7.4]$start-dfs.shJPS查看各节点进程是否启动正常:依次为 1234 四台机器的进程

web访问页面:第一个namenode http://hadoop01:50070

第二个namenode http://hadoop02:50070

3.6 hadoop03上启动YARN:[hyotei@hadoop03 hadoop-2.7.4]$start-yarn.sh若备用节点的 resourcemanager 没有启动起来,则手动启动起来:

[hyotei@hadoop04 hadoop-2.7.4]$yarn-daemon.sh start resourcemanagerresourcemanager的web页面:http://hadoop03:8088

hadoop04 是 standby resourcemanager,会自动跳转到 hadoop03 -

查看各个namenode和resourcemanager节点状态:

[hyotei@hadoop01 hadoop-2.7.4]$hdfs haadmin -getServiceState nn1 active [hyotei@hadoop01 hadoop-2.7.4]$hdfs haadmin -getServiceState nn2 standby [hyotei@hadoop01 hadoop-2.7.4]$yarn rmadmin -getServiceState rm1 active [hyotei@hadoop01 hadoop-2.7.4]$yarn rmadmin -getServiceState rm2 standby -

启动 mapreduce 任务历史服务器:

[hyotei@hadoop01 hadoop-2.7.4]$ mr-jobhistory-daemon.sh start historyserver按照配置文件配置的历史服务器的 web 访问地址去访问:http://hadoop01:19888

-

初始化后再次启动hadoop集群顺序:

zkServer.sh start (三台) => start-dfs.sh (第一个namenode节点)=> start-yarn.sh (hadoop03上) => yarn-daemon.sh start resourcemanager (一般备用的resourcemanger 总是启动不了需要手动启动) -

如果存在有的机器namenode 或则datanode节点没有启动的了,可以尝试 :

[hyotei@hadoop01 hadoop-2.7.4]$hadoop-daemon.sh start namenode [hyotei@hadoop01 hadoop-2.7.4]$hadoop-daemon.sh start datanode -

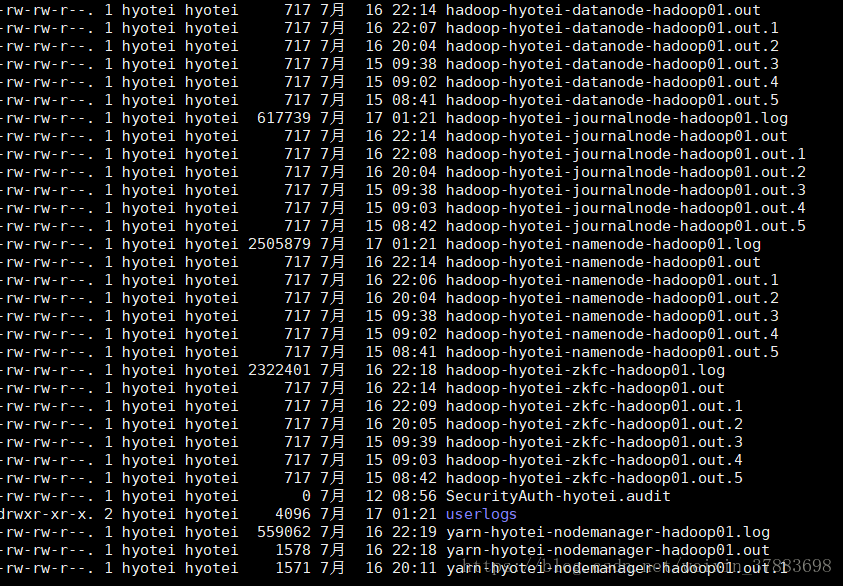

如果尝试启动的节点仍然会消失或者在初始化或启动不成功,可以查看对应机器的hadoop日志:

日志文件存在与/hadoop-2.7.4/logs文件夹下:

假设hadoop01下的namenode没有启动成功,那就执行:[hyotei@hadoop01 logs]$ less hadoop-hyotei-namenode-hadoop01.log查看error

本文详细介绍了如何构建Hadoop HA高可用集群,包括四台主机的配置、zookeeper集群搭建、Hadoop集群安装与初始化步骤等关键技术环节。

本文详细介绍了如何构建Hadoop HA高可用集群,包括四台主机的配置、zookeeper集群搭建、Hadoop集群安装与初始化步骤等关键技术环节。

1628

1628

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?