[root@hadoop01 apache-hive-3.1.3-bin]# hive

which: no hbase in (/export/servers/hadoop-3.3.5/bin::/export/servers/apache-hive-3.1.3-bin/bin:/export/servers/flume-1.9.0/bin::/export/servers/apache-hive-3.1.3-bin/bin:/export/servers/flume-1.9.0/bin:/export/servers/flume-1.9.0/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/export/servers/jdk1.8.0_161/bin:/export/servers/hadoop-3.3.5/bin:/export/servers/hadoop-3.3.5/sbin:/export/servers/scala-2.12.10/bin:/root/bin:/export/servers/jdk1.8.0_161/bin:/export/servers/hadoop-3.3.5/bin:/export/servers/hadoop-3.3.5/sbin:/export/servers/scala-2.12.10/bin:/export/servers/jdk1.8.0_161/bin:/export/servers/hadoop-3.3.5/bin:/export/servers/hadoop-3.3.5/sbin:/export/servers/scala-2.12.10/bin:/root/bin)

2025-06-17 20:45:39,133 INFO conf.HiveConf: Found configuration file file:/export/servers/apache-hive-3.1.3-bin/conf/hive-site.xml

Hive Session ID = 7a465677-eec4-40ee-b6a2-5c7b638725a7

2025-06-17 20:45:43,011 INFO SessionState: Hive Session ID = 7a465677-eec4-40ee-b6a2-5c7b638725a7

Logging initialized using configuration in jar:file:/export/servers/apache-hive-3.1.3-bin/lib/hive-common-3.1.3.jar!/hive-log4j2.properties Async: true

2025-06-17 20:45:43,144 INFO SessionState:

Logging initialized using configuration in jar:file:/export/servers/apache-hive-3.1.3-bin/lib/hive-common-3.1.3.jar!/hive-log4j2.properties Async: true

2025-06-17 20:45:45,757 INFO session.SessionState: Created HDFS directory: /tmp/hive/root/7a465677-eec4-40ee-b6a2-5c7b638725a7

2025-06-17 20:45:45,806 INFO session.SessionState: Created local directory: /tmp/root/7a465677-eec4-40ee-b6a2-5c7b638725a7

2025-06-17 20:45:45,820 INFO session.SessionState: Created HDFS directory: /tmp/hive/root/7a465677-eec4-40ee-b6a2-5c7b638725a7/_tmp_space.db

2025-06-17 20:45:45,850 INFO conf.HiveConf: Using the default value passed in for log id: 7a465677-eec4-40ee-b6a2-5c7b638725a7

2025-06-17 20:45:45,850 INFO session.SessionState: Updating thread name to 7a465677-eec4-40ee-b6a2-5c7b638725a7 main

2025-06-17 20:45:47,956 INFO metastore.HiveMetaStore: 0: Opening raw store with implementation class:org.apache.hadoop.hive.metastore.ObjectStore

2025-06-17 20:45:48,005 WARN metastore.ObjectStore: datanucleus.autoStartMechanismMode is set to unsupported value null . Setting it to value: ignored

2025-06-17 20:45:48,017 INFO metastore.ObjectStore: ObjectStore, initialize called

2025-06-17 20:45:48,020 INFO conf.MetastoreConf: Found configuration file file:/export/servers/apache-hive-3.1.3-bin/conf/hive-site.xml

2025-06-17 20:45:48,023 INFO conf.MetastoreConf: Unable to find config file hivemetastore-site.xml

2025-06-17 20:45:48,023 INFO conf.MetastoreConf: Found configuration file null

2025-06-17 20:45:48,024 INFO conf.MetastoreConf: Unable to find config file metastore-site.xml

2025-06-17 20:45:48,024 INFO conf.MetastoreConf: Found configuration file null

2025-06-17 20:45:48,400 INFO DataNucleus.Persistence: Property datanucleus.cache.level2 unknown - will be ignored

2025-06-17 20:45:48,953 INFO hikari.HikariDataSource: HikariPool-1 - Starting...

2025-06-17 20:45:49,485 INFO hikari.HikariDataSource: HikariPool-1 - Start completed.

2025-06-17 20:45:49,573 INFO hikari.HikariDataSource: HikariPool-2 - Starting...

2025-06-17 20:45:49,644 INFO hikari.HikariDataSource: HikariPool-2 - Start completed.

2025-06-17 20:45:50,533 INFO metastore.ObjectStore: Setting MetaStore object pin classes with hive.metastore.cache.pinobjtypes="Table,StorageDescriptor,SerDeInfo,Partition,Database,Type,FieldSchema,Order"

2025-06-17 20:45:50,824 INFO metastore.MetaStoreDirectSql: Using direct SQL, underlying DB is MYSQL

2025-06-17 20:45:50,827 INFO metastore.ObjectStore: Initialized ObjectStore

2025-06-17 20:45:51,223 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

2025-06-17 20:45:51,224 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

2025-06-17 20:45:51,225 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

2025-06-17 20:45:51,225 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

2025-06-17 20:45:51,225 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

2025-06-17 20:45:51,225 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

2025-06-17 20:45:54,606 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

2025-06-17 20:45:54,607 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

2025-06-17 20:45:54,608 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

2025-06-17 20:45:54,608 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

2025-06-17 20:45:54,609 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

2025-06-17 20:45:54,609 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

2025-06-17 20:45:59,277 WARN metastore.ObjectStore: Version information not found in metastore. metastore.schema.verification is not enabled so recording the schema version 3.1.0

2025-06-17 20:45:59,278 WARN metastore.ObjectStore: setMetaStoreSchemaVersion called but recording version is disabled: version = 3.1.0, comment = Set by MetaStore root@192.168.245.131

2025-06-17 20:45:59,555 INFO metastore.HiveMetaStore: Added admin role in metastore

2025-06-17 20:45:59,559 INFO metastore.HiveMetaStore: Added public role in metastore

2025-06-17 20:45:59,663 INFO metastore.HiveMetaStore: No user is added in admin role, since config is empty

2025-06-17 20:46:00,035 INFO metastore.RetryingMetaStoreClient: RetryingMetaStoreClient proxy=class org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient ugi=root (auth:SIMPLE) retries=1 delay=1 lifetime=0

2025-06-17 20:46:00,086 INFO metastore.HiveMetaStore: 0: get_all_functions

2025-06-17 20:46:00,097 INFO HiveMetaStore.audit: ugi=root ip=unknown-ip-addr cmd=get_all_functions

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.Hive Session ID = b7eae93e-640d-4628-b883-4e088aafa6e6

2025-06-17 20:46:00,287 INFO CliDriver: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

2025-06-17 20:46:00,287 INFO SessionState: Hive Session ID = b7eae93e-640d-4628-b883-4e088aafa6e6

2025-06-17 20:46:00,331 INFO session.SessionState: Created HDFS directory: /tmp/hive/root/b7eae93e-640d-4628-b883-4e088aafa6e6

2025-06-17 20:46:00,336 INFO session.SessionState: Created local directory: /tmp/root/b7eae93e-640d-4628-b883-4e088aafa6e6

2025-06-17 20:46:00,346 INFO session.SessionState: Created HDFS directory: /tmp/hive/root/b7eae93e-640d-4628-b883-4e088aafa6e6/_tmp_space.db

2025-06-17 20:46:00,354 INFO metastore.HiveMetaStore: 1: get_databases: @hive#

2025-06-17 20:46:00,355 INFO HiveMetaStore.audit: ugi=root ip=unknown-ip-addr cmd=get_databases: @hive#

2025-06-17 20:46:00,360 INFO metastore.HiveMetaStore: 1: Opening raw store with implementation class:org.apache.hadoop.hive.metastore.ObjectStore

2025-06-17 20:46:00,364 INFO metastore.ObjectStore: ObjectStore, initialize called

2025-06-17 20:46:00,404 INFO metastore.MetaStoreDirectSql: Using direct SQL, underlying DB is MYSQL

2025-06-17 20:46:00,406 INFO metastore.ObjectStore: Initialized ObjectStore

2025-06-17 20:46:00,446 INFO metastore.HiveMetaStore: 1: get_tables_by_type: db=@hive#db_hive1 pat=.*,type=MATERIALIZED_VIEW

2025-06-17 20:46:00,447 INFO HiveMetaStore.audit: ugi=root ip=unknown-ip-addr cmd=get_tables_by_type: db=@hive#db_hive1 pat=.*,type=MATERIALIZED_VIEW

2025-06-17 20:46:00,470 INFO metastore.HiveMetaStore: 1: get_multi_table : db=db_hive1 tbls=

2025-06-17 20:46:00,471 INFO HiveMetaStore.audit: ugi=root ip=unknown-ip-addr cmd=get_multi_table : db=db_hive1 tbls=

2025-06-17 20:46:00,486 INFO metastore.HiveMetaStore: 1: get_tables_by_type: db=@hive#default pat=.*,type=MATERIALIZED_VIEW

2025-06-17 20:46:00,486 INFO HiveMetaStore.audit: ugi=root ip=unknown-ip-addr cmd=get_tables_by_type: db=@hive#default pat=.*,type=MATERIALIZED_VIEW

2025-06-17 20:46:00,495 INFO metastore.HiveMetaStore: 1: get_multi_table : db=default tbls=

2025-06-17 20:46:00,495 INFO HiveMetaStore.audit: ugi=root ip=unknown-ip-addr cmd=get_multi_table : db=default tbls=

2025-06-17 20:46:00,495 INFO metastore.HiveMetaStore: 1: get_tables_by_type: db=@hive#itcast_ods pat=.*,type=MATERIALIZED_VIEW

2025-06-17 20:46:00,496 INFO HiveMetaStore.audit: ugi=root ip=unknown-ip-addr cmd=get_tables_by_type: db=@hive#itcast_ods pat=.*,type=MATERIALIZED_VIEW

2025-06-17 20:46:00,503 INFO metastore.HiveMetaStore: 1: get_multi_table : db=itcast_ods tbls=

2025-06-17 20:46:00,503 INFO HiveMetaStore.audit: ugi=root ip=unknown-ip-addr cmd=get_multi_table : db=itcast_ods tbls=

2025-06-17 20:46:00,503 INFO metadata.HiveMaterializedViewsRegistry: Materialized views registry has been initialized

hive>
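
Most of the startup output above is INFO noise, with a handful of repeated WARN lines mixed in. As a rough aid for reading a capture like this, the sketch below counts lines per log level and lists each distinct WARN message once. It assumes the `timestamp LEVEL logger: message` layout seen in this log; the names `LOG_LINE`, `summarize`, and `sample` are hypothetical helpers and not part of Hive.

```python
import re
from collections import Counter

# Assumed layout, taken from the lines in this log:
# 2025-06-17 20:45:48,005 WARN metastore.ObjectStore: message...
LOG_LINE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}) "
    r"(?P<level>[A-Z]+) (?P<logger>[\w.]+): ?(?P<msg>.*)$"
)


def summarize(log_text):
    """Return (count per level, distinct WARN messages in first-seen order)."""
    levels = Counter()
    warnings = []
    for line in log_text.splitlines():
        m = LOG_LINE.match(line.strip())
        if m is None:
            continue  # blank lines, banner text, the hive> prompt
        levels[m["level"]] += 1
        if m["level"] == "WARN" and m["msg"] not in warnings:
            warnings.append(m["msg"])
    return levels, warnings


# A few lines copied from the log above, used only as sample input.
sample = """\
2025-06-17 20:45:48,005 WARN metastore.ObjectStore: datanucleus.autoStartMechanismMode is set to unsupported value null . Setting it to value: ignored
2025-06-17 20:45:50,827 INFO metastore.ObjectStore: Initialized ObjectStore
2025-06-17 20:45:51,223 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored
2025-06-17 20:45:51,224 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored
hive>
"""

levels, warnings = summarize(sample)
for level, count in sorted(levels.items()):
    print(level, count)
for msg in warnings:
    print("WARN:", msg)
```

To run it on a full capture, pass the saved terminal output as a string to `summarize` in place of `sample`.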

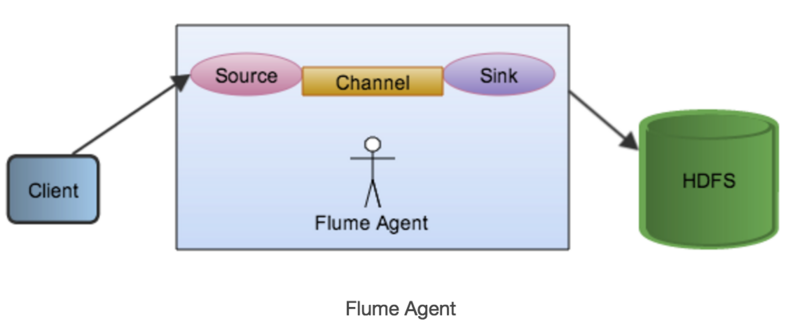

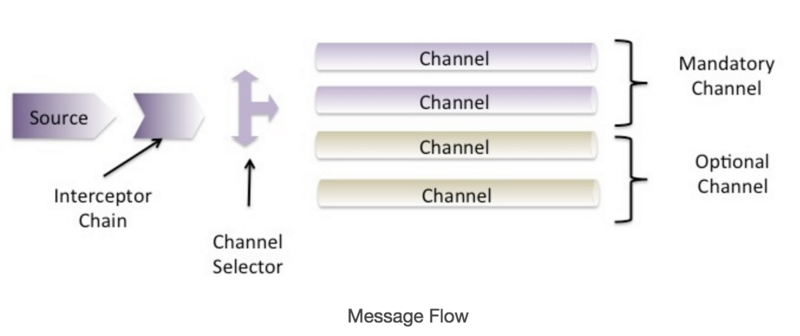

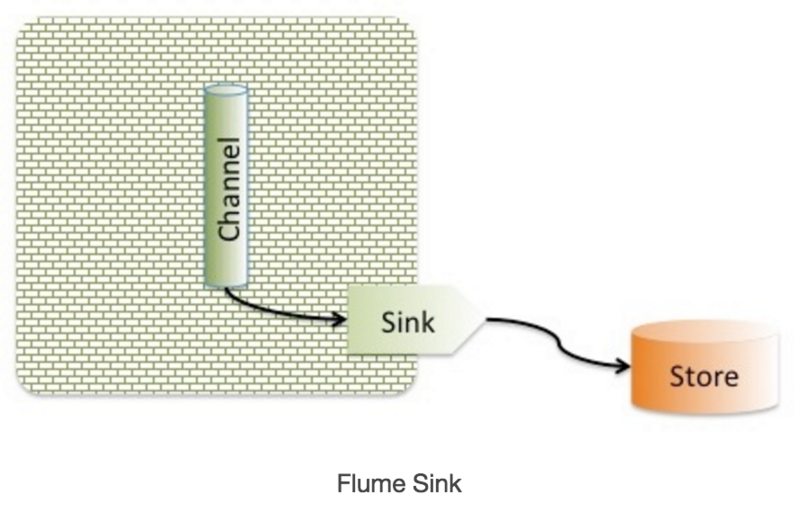

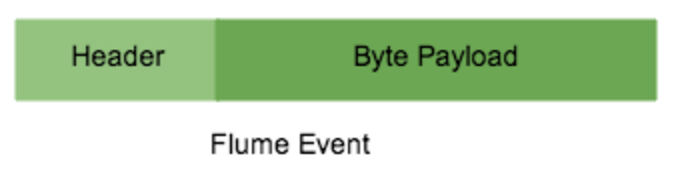

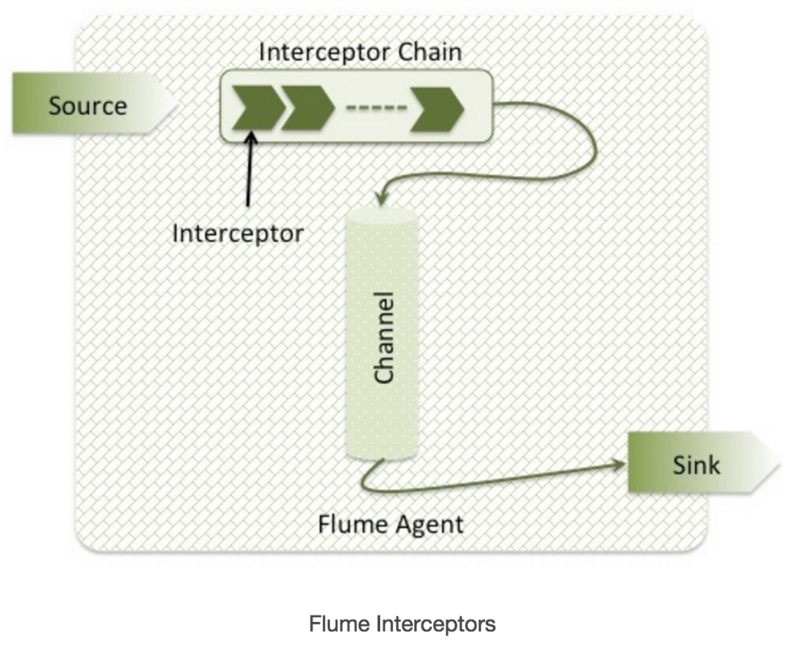

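Flume is a reliable, scalable data transport system for big data workloads that balances the flow of data between producers and consumers. It addresses common difficulties in getting data into storage, such as concurrent writes, system load, and network latency, and keeps the data flow stable. This article describes Flume's components, characteristics, and use cases in detail.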
