Corrected code addressing the issues above:

#!/usr/bin/env python3
# -*- coding: utf-8 -*-
"""
Humanoid robot simulation demo - fixes the OpenGL initialization problem
"""
import sys
import os
import time
import json
import numpy as np
import matplotlib.pyplot as plt
from typing import Dict, List, Tuple, Optional
import threading
import argparse
import mujoco
import pygame
from pygame.locals import *
import math
import cv2
from PIL import Image
import io
import traceback
import subprocess
import ctypes

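# Check for OpenGL support and try to work around failures
def fix_opengl_issues():
    """Try to work around OpenGL initialization problems."""
    print("🛠️ Trying to resolve OpenGL initialization problems...")
    # Try the available rendering backends in turn.
    # NOTE: mujoco reads MUJOCO_GL when it is first imported, so setting it
    # here (after the import above) does not switch the backend of this
    # process. The probe below only loads a model and runs mj_forward, which
    # does not touch OpenGL, so it cannot prove that a backend really works.
    backends = ['glfw', 'osmesa', 'egl']
    for backend in backends:
        try:
            os.environ['MUJOCO_GL'] = backend
            print(f"  Trying the {backend} rendering backend...")
            # Smoke-test model loading
            test_model = mujoco.MjModel.from_xml_string('<mujoco/>')
            test_data = mujoco.MjData(test_model)
            mujoco.mj_forward(test_model, test_data)
            print(f"✅ The {backend} backend succeeded!")
            return True
        except Exception as e:
            print(f"⚠️ The {backend} backend failed: {e}")
    # Fall back to loading an OpenGL library directly
    print("  Trying to load an OpenGL library directly...")
    opengl_libs = [
        'libGL.so.1',  # Linux
        '/usr/lib/x86_64-linux-gnu/libGL.so.1',  # Ubuntu
        'opengl32.dll',  # Windows
        '/System/Library/Frameworks/OpenGL.framework/OpenGL'  # macOS
    ]
    for lib in opengl_libs:
        try:
            ctypes.CDLL(lib)
            print(f"✅ Loaded {lib}")
            return True
        except Exception as e:
            print(f"⚠️ Failed to load {lib}: {e}")
    return False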

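# Import the project's own modules
try:
    from mujoco_simulation import HumanoidRobot
    from advanced_control import (
        IntelligentDecisionSystem, PathPlanner, EnergyOptimizer,
        AdaptiveController, EnvironmentState, RobotState, TaskType, TerrainType
    )
except ImportError:
    # Define stand-in classes so the demo still starts without those modules
    class HumanoidRobot:
        def __init__(self):
            self.model = None
            self.data = None
            self.position = np.array([0.0, 0.0, 1.0])
            self.velocity = np.zeros(3)
            self.orientation = np.array([1.0, 0.0, 0.0, 0.0])

    class IntelligentDecisionSystem:
        pass

    # HumanoidDemo also instantiates these three, so they need stand-ins too;
    # without them the fallback path fails with a NameError.
    class PathPlanner:
        pass

    class EnergyOptimizer:
        pass

    class AdaptiveController:
        pass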

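class VisualizationSystem:
    """Visualization system for 2D and 3D rendering."""

    def __init__(self, model: Optional[mujoco.MjModel] = None, width: int = 1200, height: int = 800):
        """Initialize the visualization system."""
        pygame.init()
        self.screen = pygame.display.set_mode((width, height))
        pygame.display.set_caption("Humanoid Robot Simulation Demo")
        self.clock = pygame.time.Clock()
        self.font = pygame.font.SysFont('SimHei', 20)
        self.title_font = pygame.font.SysFont('SimHei', 28, bold=True)
        self.terrain_colors = {
            'flat': (180, 200, 180),
            'slope': (160, 180, 200),
            'stairs': (200, 180, 160),
            'sand': (220, 200, 150),
            'grass': (150, 200, 150)
        }
        self.obstacle_colors = {
            'static': (200, 100, 100),
            'dynamic': (100, 150, 200),
            'moving': (150, 100, 200)
        }
        self.robot_img = self._create_robot_image()
        # Try to work around OpenGL problems
        self.opengl_fixed = fix_opengl_issues()
        # Initialize the MuJoCo rendering components
        self.camera = mujoco.MjvCamera()
        self.opt = mujoco.MjvOption()
        self.scene = None
        self.context = None
        self.gl_context = None
        if model is not None and self.opengl_fixed:
            try:
                # MjrContext needs a current OpenGL context, and the pygame
                # window above is not an OpenGL window. Create an offscreen
                # context sized to the 680x300 3D panel (assumes the installed
                # mujoco package exposes mujoco.GLContext).
                self.gl_context = mujoco.GLContext(680, 300)
                self.gl_context.make_current()
                # The offscreen framebuffer must be at least as large as the viewport
                model.vis.global_.offwidth = max(model.vis.global_.offwidth, 680)
                model.vis.global_.offheight = max(model.vis.global_.offheight, 300)
                # Create the rendering context; the font scale is passed as an int
                self.context = mujoco.MjrContext(model, mujoco.mjtFontScale.mjFONTSCALE_150.value)
                mujoco.mjr_setBuffer(mujoco.mjtFramebuffer.mjFB_OFFSCREEN.value, self.context)
                self.scene = mujoco.MjvScene(model=model, maxgeom=1000)
                print("✅ Rendering context initialized")
                # Set the camera pose
                self.camera.distance = 5.0
                self.camera.elevation = -20
                self.camera.azimuth = 90
            except Exception as e:
                print(f"⚠️ Failed to initialize the rendering context: {e}")
                self.scene = None
                self.context = None
        else:
            print("⚠️ No model or no usable OpenGL backend; 3D rendering disabled")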

    def _create_robot_image(self):
        """Create the robot sprite."""
        surface = pygame.Surface((50, 80), pygame.SRCALPHA)
        # Head
        pygame.draw.circle(surface, (100, 150, 255), (25, 15), 10)
        # Body
        pygame.draw.rect(surface, (100, 200, 100), (15, 25, 20, 30))
        # Legs
        pygame.draw.line(surface, (0, 0, 0), (20, 55), (15, 75), 3)
        pygame.draw.line(surface, (0, 0, 0), (30, 55), (35, 75), 3)
        # Arms
        pygame.draw.line(surface, (0, 0, 0), (15, 35), (5, 50), 3)
        pygame.draw.line(surface, (0, 0, 0), (35, 35), (45, 50), 3)
        return surface

    def render_2d(self, demo, current_time: float):
        """Render the 2D visualization screen."""
        self.screen.fill((240, 240, 245))
        # Get the current scenario state
        scenario_state = demo.get_current_scenario_state(current_time)
        # Title
        title = self.title_font.render("Humanoid Robot Simulation Demo", True, (30, 30, 100))
        self.screen.blit(title, (20, 15))
        # Status panel
        self._render_status_panel(demo, scenario_state, current_time)
        # Scenario visualization
        self._render_scenario_visualization(demo, scenario_state, current_time)
        # 3D render panel
        self._render_3d_view(demo)
        # Performance charts
        self._render_performance_charts(demo)
        # Control instructions
        self._render_instructions()
        pygame.display.flip()

    def _render_status_panel(self, demo, scenario_state: Dict, current_time: float):
        """Render the status panel."""
        # Panel background
        pygame.draw.rect(self.screen, (250, 250, 255), (20, 70, 450, 180), 0, 10)
        pygame.draw.rect(self.screen, (200, 200, 220), (20, 70, 450, 180), 2, 10)
        # Scenario
        scene_text = self.font.render(f"Scenario: {scenario_state['description']}", True, (30, 30, 30))
        self.screen.blit(scene_text, (40, 90))
        # Terrain
        terrain_text = self.font.render(f"Terrain: {scenario_state['terrain']}", True, (30, 30, 30))
        self.screen.blit(terrain_text, (40, 120))
        # Time
        time_text = self.font.render(f"Time: {current_time:.1f}s / {demo.demo_config['duration']:.1f}s", True,
                                     (30, 30, 30))
        self.screen.blit(time_text, (40, 150))
        # Energy consumption
        energy_text = self.font.render(
            f"Energy used: {demo.energy_consumption[-1] if demo.energy_consumption else 0:.2f} J", True, (30, 30, 30))
        self.screen.blit(energy_text, (40, 180))
        # Control mode
        mode_text = self.font.render(f"Control mode: {'AI control' if demo.demo_config['enable_ai'] else 'Manual control'}", True,
                                     (30, 30, 30))
        self.screen.blit(mode_text, (40, 210))
        # 3D rendering availability
        render_status = "available" if self.scene and self.context else "unavailable"
        render_text = self.font.render(f"3D rendering: {render_status}", True, (30, 30, 30))
        self.screen.blit(render_text, (250, 180))
        # Running indicator
        status_color = (100, 200, 100) if demo.is_running else (200, 100, 100)
        pygame.draw.circle(self.screen, status_color, (400, 100), 10)
        status_text = self.font.render("Running" if demo.is_running else "Stopped", True, (30, 30, 30))
        self.screen.blit(status_text, (415, 95))
        # Paused indicator
        if demo.paused:
            pause_text = self.font.render("Paused", True, (200, 100, 50))
            self.screen.blit(pause_text, (400, 130))

    def _render_scenario_visualization(self, demo, scenario_state: Dict, current_time: float):
        """Render the scenario visualization."""
        # Panel background
        pygame.draw.rect(self.screen, (250, 250, 255), (20, 270, 450, 300), 0, 10)
        pygame.draw.rect(self.screen, (200, 200, 220), (20, 270, 450, 300), 2, 10)
        # Terrain
        terrain_color = self.terrain_colors.get(scenario_state['terrain'], (180, 180, 180))
        pygame.draw.rect(self.screen, terrain_color, (50, 350, 350, 100))
        # Terrain features
        if scenario_state['terrain'] == 'slope':
            for i in range(10):
                pygame.draw.line(self.screen, (150, 150, 150),
                                 (50 + i * 35, 350),
                                 (50 + i * 35, 450 - i * 3), 2)
        elif scenario_state['terrain'] == 'stairs':
            for i in range(5):
                pygame.draw.rect(self.screen, (180, 160, 140),
                                 (50 + i * 70, 450 - i * 20, 70, 20 + i * 20))
        elif scenario_state['terrain'] == 'sand':
            # Random speckles, redrawn at new positions every frame
            for i in range(20):
                x = 50 + np.random.randint(0, 350)
                y = 350 + np.random.randint(0, 100)
                pygame.draw.circle(self.screen, (210, 190, 150), (x, y), 3)
        # Obstacles
        for obs in scenario_state['obstacles']:
            color = self.obstacle_colors.get(obs['type'], (150, 150, 150))
            x = 50 + (obs['position'][0] / 10) * 350
            y = 400 - (obs['position'][1] / 2) * 50
            radius = obs['radius'] * 50
            pygame.draw.circle(self.screen, color, (int(x), int(y)), int(radius))
        # Robot: its x position tracks the fraction of the demo elapsed
        robot_x = 50 + (min(current_time / demo.demo_config['duration'], 1.0) * 350)
        robot_y = 400
        self.screen.blit(self.robot_img, (robot_x - 25, robot_y - 40))
        # Wind arrows
        if np.linalg.norm(scenario_state['wind']) > 0.1:
            wind_dir = scenario_state['wind'] / np.linalg.norm(scenario_state['wind'])
            for i in range(5):
                offset = i * 20
                pygame.draw.line(self.screen, (100, 150, 255),
                                 (robot_x + 30 + offset, robot_y - 20),
                                 (robot_x + 50 + offset + wind_dir[0] * 10,
                                  robot_y - 20 + wind_dir[1] * 10), 2)
                pygame.draw.polygon(self.screen, (100, 150, 255), [
                    (robot_x + 50 + offset + wind_dir[0] * 10, robot_y - 20 + wind_dir[1] * 10),
                    (robot_x + 45 + offset + wind_dir[0] * 15, robot_y - 25 + wind_dir[1] * 15),
                    (robot_x + 45 + offset + wind_dir[0] * 15, robot_y - 15 + wind_dir[1] * 15)
                ])
        # Scenario title
        scene_title = self.font.render(f"Current scenario: {scenario_state['description']}", True, (30, 30, 100))
        self.screen.blit(scene_title, (40, 290))

    def _render_3d_view(self, demo):
        """Render the 3D view."""
        # Panel background
        pygame.draw.rect(self.screen, (250, 250, 255), (500, 70, 680, 300), 0, 10)
        pygame.draw.rect(self.screen, (200, 200, 220), (500, 70, 680, 300), 2, 10)
        # Title
        title = self.font.render("Robot 3D View", True, (30, 30, 100))
        self.screen.blit(title, (510, 80))
        # Check that the rendering components are available
        if self.scene is None or self.context is None or demo.robot.model is None:
            error_text = self.font.render("3D rendering unavailable: could not initialize OpenGL", True, (255, 0, 0))
            self.screen.blit(error_text, (550, 150))
            return
        try:
            # Update the 3D scene; the category mask is passed as an int
            mujoco.mjv_updateScene(
                demo.robot.model, demo.robot.data, self.opt, None, self.camera,
                mujoco.mjtCatBit.mjCAT_ALL.value, self.scene)
            # Render into an in-memory buffer
            width, height = 680, 300
            buffer = np.zeros((height, width, 3), dtype=np.uint8)
            viewport = mujoco.MjrRect(0, 0, width, height)
            mujoco.mjr_render(viewport, self.scene, self.context)
            # Read back the rendered image
            mujoco.mjr_readPixels(buffer, None, viewport, self.context)
            # Convert to a pygame surface (OpenGL rows run bottom-up, so flip)
            img = Image.fromarray(buffer, 'RGB')
            img = img.transpose(Image.FLIP_TOP_BOTTOM)
            img_bytes = img.tobytes()
            py_image = pygame.image.fromstring(img_bytes, (width, height), 'RGB')
            # Draw to the screen
            self.screen.blit(py_image, (500, 70))
        except Exception as e:
            # Show the error in the panel
            error_text = self.font.render(f"3D rendering error: {str(e)}", True, (255, 0, 0))
            self.screen.blit(error_text, (510, 150))

    def _render_performance_charts(self, demo):
        """Render the performance charts."""
        # Panel background
        pygame.draw.rect(self.screen, (250, 250, 255), (500, 390, 680, 180), 0, 10)
        pygame.draw.rect(self.screen, (200, 200, 220), (500, 390, 680, 180), 2, 10)
        # Title
        title = self.font.render("Performance Metrics", True, (30, 30, 100))
        self.screen.blit(title, (510, 400))
        # Energy consumption chart
        if len(demo.energy_consumption) > 1:
            points = []
            max_energy = max(demo.energy_consumption) if max(demo.energy_consumption) > 0 else 1
            for i, val in enumerate(demo.energy_consumption):
                x = 520 + (i / len(demo.energy_consumption)) * 300
                y = 550 - (val / max_energy) * 100
                points.append((x, y))
            if len(points) > 1:
                pygame.draw.lines(self.screen, (100, 150, 255), False, points, 2)
        # Stability chart
        if len(demo.performance_metrics) > 1:
            points = []
            max_stability = max([m['stability'] for m in demo.performance_metrics]) or 1
            for i, metric in enumerate(demo.performance_metrics):
                x = 850 + (i / len(demo.performance_metrics)) * 300
                y = 550 - (metric['stability'] / max_stability) * 100
                points.append((x, y))
            if len(points) > 1:
                pygame.draw.lines(self.screen, (255, 150, 100), False, points, 2)
        # Chart labels
        energy_label = self.font.render("Energy consumption", True, (100, 150, 255))
        self.screen.blit(energy_label, (520, 560))
        stability_label = self.font.render("Stability", True, (255, 150, 100))
        self.screen.blit(stability_label, (850, 560))

    def _render_instructions(self):
        """Render the control instructions."""
        # Panel background
        pygame.draw.rect(self.screen, (250, 250, 255), (20, 580, 1160, 100), 0, 10)
        pygame.draw.rect(self.screen, (200, 200, 220), (20, 580, 1160, 100), 2, 10)
        # Key bindings
        instructions = [
            "ESC: quit",
            "Space: pause/resume",
            "R: reset demo",
            "↑↓←→: move the robot",
            "C: switch camera view"
        ]
        for i, text in enumerate(instructions):
            inst_text = self.font.render(text, True, (80, 80, 180))
            self.screen.blit(inst_text, (40 + i * 220, 600))

    def handle_events(self):
        """Handle pygame events.

        Returns False to quit, an action name for a recognized key,
        or True when there is nothing to act on.
        """
        for event in pygame.event.get():
            if event.type == QUIT:
                return False
            elif event.type == KEYDOWN:
                if event.key == K_ESCAPE:
                    return False
                elif event.key == K_SPACE:
                    return "pause"
                elif event.key == K_r:
                    return "reset"
                elif event.key == K_UP:
                    return "move_forward"
                elif event.key == K_DOWN:
                    return "move_backward"
                elif event.key == K_LEFT:
                    return "move_left"
                elif event.key == K_RIGHT:
                    return "move_right"
                elif event.key == K_c:
                    return "change_camera"
        return True

class HumanoidDemo:
    """Humanoid robot simulation demo."""

    def __init__(self):
        """Initialize the demo system."""
        print("🤖 Humanoid Robot Simulation Demo")
        print("=" * 60)
        # Create the humanoid robot
        self.robot = self._create_robot()
        # Create the advanced control modules
        self.decision_system = IntelligentDecisionSystem()
        self.path_planner = PathPlanner()
        self.energy_optimizer = EnergyOptimizer()
        self.adaptive_controller = AdaptiveController()
        # Create the visualization system and pass it the robot model
        self.visualization = VisualizationSystem(
            self.robot.model if hasattr(self.robot, 'model') else None
        )
        # Demo configuration
        self.demo_config = {
            'duration': 30.0,
            'enable_ai': True,
            'enable_optimization': True,
            'enable_adaptation': True,
            'record_data': True,
            'save_video': False
        }
        # Demo data
        self.demo_data = {
            'timestamps': [],
            'robot_states': [],
            'environment_states': [],
            'decisions': [],
            'energy_consumption': [0.0],
            'performance_metrics': [{'stability': 1.0, 'efficiency': 1.0}]
        }
        # Run state
        self.is_running = False
        self.current_time = 0.0
        self.paused = False
        self.camera_mode = 0  # 0: default, 1: follow, 2: first person
        print("✅ Demo system initialized")

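    def _create_robot(self):
        """Create the robot instance, handling possible errors."""
        try:
            return HumanoidRobot()
        except Exception as e:
            print(f"⚠️ Error while creating the robot: {e}")
            print("⚠️ Falling back to a stand-in robot")
            # This retries the same class. It only yields the stand-in when the
            # module import above failed; if the real class raised, it will raise again.
            return HumanoidRobot()

    @property
    def energy_consumption(self):
        return self.demo_data['energy_consumption']

    @property
    def performance_metrics(self):
        return self.demo_data['performance_metrics']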

    def setup_demo_scenario(self, scenario_type: str = "comprehensive"):
        """Set up the demo scenario."""
        scenarios = {
            "comprehensive": self._setup_comprehensive_scenario,
            "walking": self._setup_walking_scenario,
            "obstacle": self._setup_obstacle_scenario,
            "terrain": self._setup_terrain_scenario,
            "interference": self._setup_interference_scenario
        }
        if scenario_type in scenarios:
            print(f"🎬 Setting up the {scenario_type} demo scenario...")
            scenarios[scenario_type]()
        else:
            print(f"⚠️ Unknown scenario type: {scenario_type}; using the comprehensive scenario")
            self._setup_comprehensive_scenario()

    def _setup_comprehensive_scenario(self):
        """Set up the comprehensive demo scenario."""
        # Each entry: [start time (s), end time (s), task, description]
        self.scenario_timeline = [
            [0, 5, "walking", "Flat-ground walking"],
            [5, 10, "obstacle", "Dynamic obstacle avoidance"],
            [10, 15, "terrain", "Slope walking"],
            [15, 20, "terrain", "Stair climbing"],
            [20, 25, "interference", "Wind disturbance"],
            [25, 30, "walking", "Resume walking"]
        ]
        self.environment_config = {
            'obstacles': [
                {'position': [2, 0, 0.5], 'radius': 0.3, 'type': 'static'},
                {'position': [4, 1, 0.3], 'radius': 0.3, 'type': 'dynamic'},
                {'position': [6, -1, 0.4], 'radius': 0.2, 'type': 'moving'}
            ],
            'terrain_sequence': ['flat', 'slope', 'stairs', 'sand', 'flat'],
            'wind_patterns': [
                {'time': [20, 25], 'force': [50, 0, 0], 'type': 'gust'},
                {'time': [25, 30], 'force': [20, 10, 0], 'type': 'steady'}
            ],
            'light_patterns': [
                {'time': [22, 24], 'intensity': 0.2, 'type': 'dim'},
                {'time': [24, 26], 'intensity': 0.9, 'type': 'bright'}
            ]
        }

    def _setup_walking_scenario(self):
        """Set up the walking demo scenario."""
        self.scenario_timeline = [
            [0, 10, "walking", "Basic walking"],
            [10, 20, "walking", "Fast walking"],
            [20, 30, "walking", "Slow walking"]
        ]
        self.environment_config = {
            'obstacles': [],
            'terrain_sequence': ['flat'] * 3,
            'wind_patterns': [],
            'light_patterns': []
        }

    def _setup_obstacle_scenario(self):
        """Set up the obstacle-avoidance demo scenario."""
        self.scenario_timeline = [
            [0, 10, "obstacle", "Static obstacles"],
            [10, 20, "obstacle", "Dynamic obstacles"],
            [20, 30, "obstacle", "Complex obstacles"]
        ]
        self.environment_config = {
            'obstacles': [
                {'position': [1, 0, 0.5], 'radius': 0.3, 'type': 'static'},
                {'position': [3, 0, 0.3], 'radius': 0.2, 'type': 'dynamic'},
                {'position': [5, 0, 0.4], 'radius': 0.25, 'type': 'moving'}
            ],
            'terrain_sequence': ['flat'] * 3,
            'wind_patterns': [],
            'light_patterns': []
        }

    def _setup_terrain_scenario(self):
        """Set up the terrain demo scenario."""
        self.scenario_timeline = [
            [0, 6, "terrain", "Flat ground"],
            [6, 12, "terrain", "Slope"],
            [12, 18, "terrain", "Stairs"],
            [18, 24, "terrain", "Sand"],
            [24, 30, "terrain", "Flat ground"]
        ]
        self.environment_config = {
            'obstacles': [],
            'terrain_sequence': ['flat', 'slope', 'stairs', 'sand', 'flat'],
            'wind_patterns': [],
            'light_patterns': []
        }

    def _setup_interference_scenario(self):
        """Set up the disturbance demo scenario."""
        self.scenario_timeline = [
            [0, 10, "walking", "Normal walking"],
            [10, 20, "interference", "Wind disturbance"],
            [20, 30, "interference", "Lighting disturbance"]
        ]
        self.environment_config = {
            'obstacles': [],
            'terrain_sequence': ['flat'] * 3,
            'wind_patterns': [
                {'time': [10, 20], 'force': [80, 0, 0], 'type': 'strong_wind'}
            ],
            'light_patterns': [
                {'time': [20, 30], 'intensity': 0.1, 'type': 'low_light'}
            ]
        }

    def get_current_scenario_state(self, current_time: float) -> Dict:
        """Return the scenario state at the given time."""
        current_task = "idle"
        task_description = "Idle"
        # Find the active task
        for start_time, end_time, task, description in self.scenario_timeline:
            if start_time <= current_time < end_time:
                current_task = task
                task_description = description
                break
        # Find the active terrain. The terrain advances every 6 seconds,
        # independently of the task timeline above.
        terrain_index = min(int(current_time / 6), len(self.environment_config['terrain_sequence']) - 1)
        current_terrain = self.environment_config['terrain_sequence'][terrain_index]
        # Current wind force
        current_wind = np.zeros(3)
        for wind_pattern in self.environment_config['wind_patterns']:
            if wind_pattern['time'][0] <= current_time < wind_pattern['time'][1]:
                current_wind = np.array(wind_pattern['force'])
                break
        # Current light intensity
        current_light = 1.0
        for light_pattern in self.environment_config['light_patterns']:
            if light_pattern['time'][0] <= current_time < light_pattern['time'][1]:
                current_light = light_pattern['intensity']
                break
        return {
            'task': current_task,
            'description': task_description,
            'terrain': current_terrain,
            'wind': current_wind,
            'light': current_light,
            'obstacles': self.environment_config['obstacles'].copy(),
            'time': current_time
        }

    def update_robot_state(self):
        """Update the robot state."""
        # Placeholder for the robot control logic;
        # the real robot control modules should be called here.
        pass

    def change_camera_mode(self):
        """Cycle through the camera views."""
        self.camera_mode = (self.camera_mode + 1) % 3
        if self.visualization.camera:
            if self.camera_mode == 0:  # Default view
                self.visualization.camera.distance = 5.0
                self.visualization.camera.elevation = -20
                self.visualization.camera.azimuth = 90
            elif self.camera_mode == 1:  # Follow view
                self.visualization.camera.distance = 3.0
                self.visualization.camera.elevation = -10
                self.visualization.camera.azimuth = 45
            elif self.camera_mode == 2:  # First-person view
                self.visualization.camera.distance = 1.5
                self.visualization.camera.elevation = 0
                self.visualization.camera.azimuth = 0

    def record_data(self, current_time):
        """Record demo data."""
        self.demo_data['timestamps'].append(current_time)
        # Simulated energy use, scaled by the current terrain
        terrain = self.get_current_scenario_state(current_time)['terrain']
        energy_factor = {'slope': 1.5, 'stairs': 1.8, 'sand': 2.0}.get(terrain, 1.0)
        new_energy = self.energy_consumption[-1] + 0.1 * energy_factor
        self.energy_consumption.append(new_energy)
        # Simulated performance metrics
        stability = 1.0 - np.random.uniform(0, 0.1)
        efficiency = 1.0 - np.random.uniform(0, 0.05)
        self.performance_metrics.append({
            'stability': max(0.1, stability),
            'efficiency': max(0.1, efficiency)
        })

    def run_demo(self):
        """Run the demo."""
        print(f"🚀 Starting the demo, duration: {self.demo_config['duration']} s")
        print("=" * 60)
        self.is_running = True
        start_time = time.time()
        try:
            while self.current_time < self.demo_config['duration']:
                # Handle events
                event_result = self.visualization.handle_events()
                if event_result is False:
                    break
                elif event_result == "pause":
                    self.paused = not self.paused
                elif event_result == "reset":
                    start_time = time.time()
                    self.current_time = 0.0
                    # Reset every data series, keeping the same keys as __init__
                    self.demo_data = {
                        'timestamps': [],
                        'robot_states': [],
                        'environment_states': [],
                        'decisions': [],
                        'energy_consumption': [0.0],
                        'performance_metrics': [{'stability': 1.0, 'efficiency': 1.0}]
                    }
                elif event_result == "change_camera":
                    self.change_camera_mode()
                if self.paused:
                    # Shift the start time so paused time does not count as demo time
                    pause_began = time.time()
                    time.sleep(0.1)
                    start_time += time.time() - pause_began
                    continue
                # Update the current time
                self.current_time = time.time() - start_time
                # Update the robot state
                self.update_robot_state()
                # Record data
                if self.demo_config['record_data']:
                    self.record_data(self.current_time)
                # Render the visualization
                self.visualization.render_2d(self, self.current_time)
                # Cap the frame rate
                self.visualization.clock.tick(30)
            self.is_running = False
            print("\n✅ Demo finished!")
        except Exception as e:
            self.is_running = False
            print(f"\n⛔ Demo failed: {e}")
            traceback.print_exc()
        finally:
            pygame.quit()

def main():
    """Entry point."""
    parser = argparse.ArgumentParser(description='Humanoid robot simulation demo')
    parser.add_argument('--scenario', type=str, default='comprehensive',
                        choices=['comprehensive', 'walking', 'obstacle', 'terrain', 'interference'],
                        help='Demo scenario type')
    parser.add_argument('--duration', type=float, default=30.0,
                        help='Demo duration in seconds')
    args = parser.parse_args()
    # Create the demo instance
    demo = HumanoidDemo()
    demo.demo_config['duration'] = args.duration
    # Set up the scenario
    demo.setup_demo_scenario(args.scenario)
    # Run the demo
    demo.run_demo()

if __name__ == "__main__":
    main()
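
A note on backend selection: `mujoco` reads `MUJOCO_GL` when the package is first imported, so a backend can only be tested reliably in a fresh interpreter. The sketch below is one possible way to do that, not part of the listing above. It assumes the installed `mujoco` package exposes `mujoco.GLContext` with `make_current()` and `free()`; `probe_backend` and `pick_backend` are hypothetical helper names.

```python
import os
import subprocess
import sys

# Script run in the child process. Creating a GLContext forces the backend
# named by MUJOCO_GL to load, which a plain model load does not do.
# Assumption: mujoco.GLContext(width, height) is available in the installed version.
_PROBE_SCRIPT = (
    "import mujoco\n"
    "ctx = mujoco.GLContext(64, 64)\n"
    "ctx.make_current()\n"
    "ctx.free()\n"
)


def probe_backend(backend, timeout=20.0):
    """Return True if a fresh interpreter can create a GL context with `backend`."""
    env = dict(os.environ, MUJOCO_GL=backend)
    try:
        result = subprocess.run(
            [sys.executable, "-c", _PROBE_SCRIPT],
            env=env, capture_output=True, timeout=timeout,
        )
    except (OSError, subprocess.TimeoutExpired):
        return False
    # A crash, an import error, or a rejected backend all give a non-zero code.
    return result.returncode == 0


def pick_backend(candidates=("glfw", "egl", "osmesa"), probe=probe_backend):
    """Return the first candidate accepted by `probe`, or None if all fail."""
    for backend in candidates:
        if probe(backend):
            return backend
    return None
```

To use it, call `pick_backend()` at the very top of the script, before `import mujoco`, and set `os.environ["MUJOCO_GL"]` to the result if it is not `None`. The `probe` parameter is injectable so the selection logic can be exercised without a GPU.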

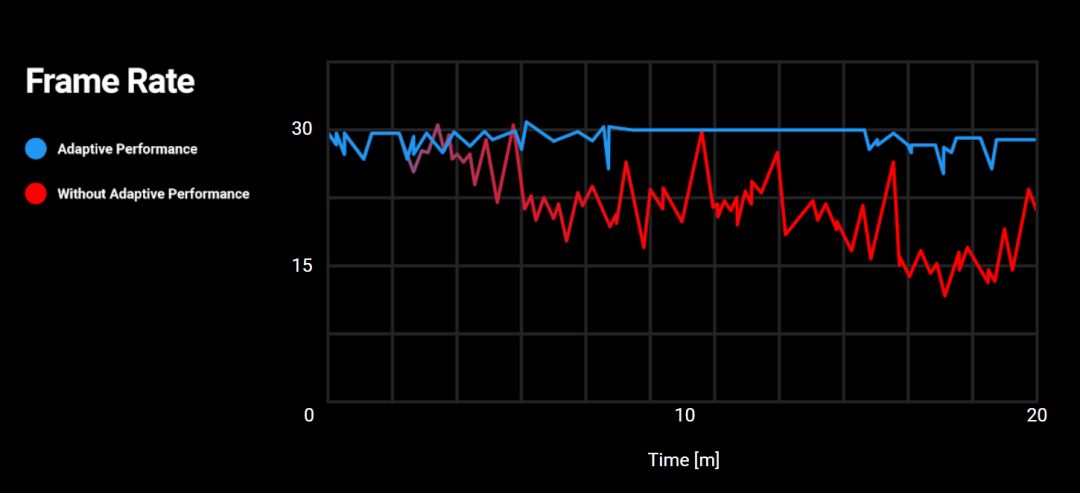
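
A note on the scenario timeline: `get_current_scenario_state` takes the task from `scenario_timeline` but derives the terrain from a fixed 6-second step. In the comprehensive scenario this means that at 12 s the task is still slope walking while the terrain has already switched to `'stairs'`. One possible alternative, sketched below, stores the terrain on each segment and looks both up together. `Segment` and `find_segment` are hypothetical names, the descriptions are English renderings of the original labels, and the terrain assigned to each segment is an assumption about the intended behaviour.

```python
from typing import NamedTuple, Optional, Sequence


class Segment(NamedTuple):
    start: float       # inclusive start time in seconds
    end: float         # exclusive end time in seconds
    task: str
    description: str
    terrain: str       # assumed terrain for this segment


# Comprehensive scenario with an explicit terrain per segment (assumed values).
COMPREHENSIVE = [
    Segment(0, 5, "walking", "Flat-ground walking", "flat"),
    Segment(5, 10, "obstacle", "Dynamic obstacle avoidance", "flat"),
    Segment(10, 15, "terrain", "Slope walking", "slope"),
    Segment(15, 20, "terrain", "Stair climbing", "stairs"),
    Segment(20, 25, "interference", "Wind disturbance", "flat"),
    Segment(25, 30, "walking", "Resume walking", "flat"),
]


def find_segment(timeline: Sequence[Segment], t: float) -> Optional[Segment]:
    """Return the segment whose half-open interval [start, end) contains t."""
    for seg in timeline:
        if seg.start <= t < seg.end:
            return seg
    return None
```

With this layout the task, its label, and its terrain cannot drift apart, and a time past the last segment returns `None`, which the caller can map to the idle state.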

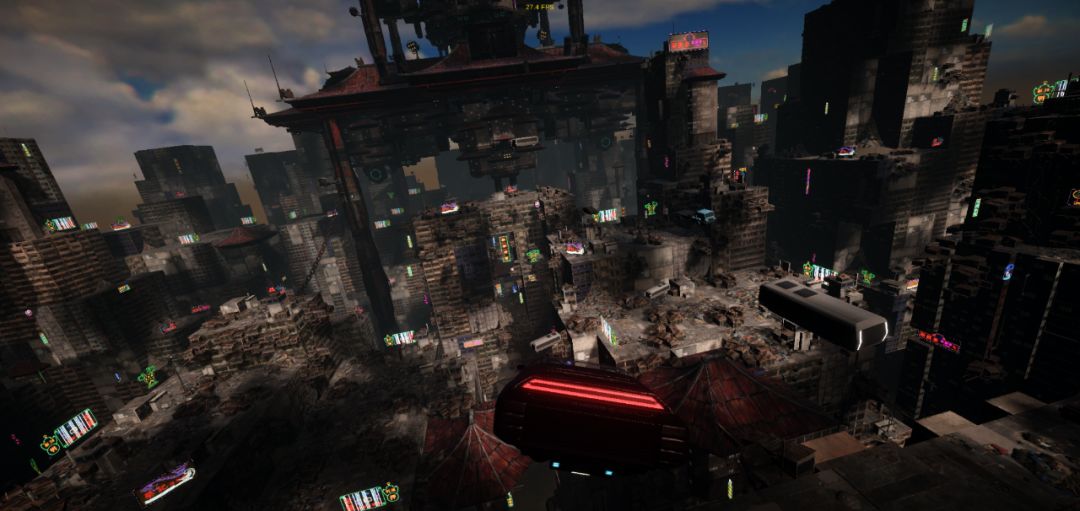
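
A note on pausing: when elapsed time is computed as `time.time() - start_time`, pause handling has to exclude the paused intervals, otherwise the demo keeps running out its duration while paused. One way to isolate that bookkeeping is a small clock object. The sketch below is an illustration only; `PausableClock` is a hypothetical helper, and its time source is injectable so the behaviour can be checked without sleeping.

```python
import time


class PausableClock:
    """Elapsed-time clock that does not advance while paused."""

    def __init__(self, now=time.monotonic):
        self._now = now              # time source, replaceable in tests
        self._accumulated = 0.0      # running time banked before the last resume
        self._resumed_at = now()     # when the clock last started running
        self._paused = False

    @property
    def paused(self):
        return self._paused

    def elapsed(self):
        """Seconds of running (non-paused) time since creation or reset."""
        if self._paused:
            return self._accumulated
        return self._accumulated + (self._now() - self._resumed_at)

    def toggle_pause(self):
        """Pause if running, resume if paused."""
        if self._paused:
            self._resumed_at = self._now()
        else:
            self._accumulated = self.elapsed()
        self._paused = not self._paused

    def reset(self):
        """Restart from zero in the running state."""
        self._accumulated = 0.0
        self._resumed_at = self._now()
        self._paused = False
```

In a loop like `run_demo`, the "pause" action would call `toggle_pause()`, the "reset" action would call `reset()`, and the current time would come from `elapsed()`.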
