Ring buffer | audio_track_cblk_t | PCM ring buffer | How data is read and written

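Ring buffer: initially R = 0 and W = 0, and buf has length LEN. R and W only ever increase. They are reduced modulo LEN only when indexing buf, which is what lets W - R tell a full buffer from an empty one.

Write one item: w = W % LEN; buf[w] = data; W++. When LEN is a power of 2 this is equivalent to w = W & (LEN - 1).

Read one item: r = R % LEN; data = buf[r]; R++. When LEN is a power of 2 this is equivalent to r = R & (LEN - 1).

Full: W - R == LEN

Empty: W == R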
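Below is a minimal single-threaded C++ sketch of the rules above. It assumes LEN is a power of 2 and uses 32-bit unsigned counters. The class name RingBuffer is illustrative and not from AOSP. So is the optional start value, which exists only so the counters can begin near 2^32 and exercise wraparound.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Illustrative ring buffer with free-running read/write counters R and W.
class RingBuffer {
public:
    // start lets both counters begin anywhere (e.g. just below 2^32) to test wraparound.
    explicit RingBuffer(uint32_t len, uint32_t start = 0)
        : buf_(len), len_(len), R_(start), W_(start) {
        assert(len != 0 && (len & (len - 1)) == 0);  // LEN must be a power of 2
    }

    // Unsigned subtraction gives the fill level even after W overflows past R.
    bool full() const { return W_ - R_ == len_; }
    bool empty() const { return W_ == R_; }

    bool write(int data) {
        if (full()) return false;
        buf_[W_ & (len_ - 1)] = data;  // same as W % LEN for a power-of-2 LEN
        ++W_;
        return true;
    }

    bool read(int* data) {
        if (empty()) return false;
        *data = buf_[R_ & (len_ - 1)];  // same as R % LEN for a power-of-2 LEN
        ++R_;
        return true;
    }

private:
    std::vector<int> buf_;
    uint32_t len_;
    uint32_t R_;
    uint32_t W_;
};
```

Because R and W are never reduced modulo LEN themselves, W - R always equals the number of stored items, including after the counters overflow.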

Continued from the previous article:

https://blog.youkuaiyun.com/we1less/article/details/156458655?spm=1001.2014.3001.5502

libaudioclient

av/media/libaudioclient/AudioTrack.cpp createTrack_l

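Next, mProxy / mStaticProxy are created. They correspond to the server-side Proxy objects in audioflinger.

The cblk comes back in the response of createTrack. buffers is then derived from it (STREAM) or taken from mSharedBuffer (STATIC).

STATIC: StaticAudioTrackClientProxy

STREAM: AudioTrackClientProxy

Both are constructed from cblk, buffers, mFrameCount and mFrameSize.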

    status_t AudioTrack::createTrack_l()
    {
        status_t status;
        const sp<IAudioFlinger>& audioFlinger = AudioSystem::get_audio_flinger();
        // ...
        // Parse the cblk out of the returned response
        status = audioFlinger->createTrack(aidlInput.value(), response);
        // ...
        output.audioTrack->getCblk(&sfr);
        // ...
        audio_track_cblk_t* cblk = static_cast<audio_track_cblk_t*>(iMemPointer);
        mCblk = cblk;

        void* buffers;
        if (mSharedBuffer == 0) {
            // STREAM mode: the audio data starts right after the control block (cblk + 1)
            buffers = cblk + 1;
        } else {
            // STATIC mode: the audio data is the shared buffer passed in by the app
            buffers = mSharedBuffer->unsecurePointer();
        }
        // ...
        if (mSharedBuffer == 0) {
            mStaticProxy.clear();
            // stream
            mProxy = new AudioTrackClientProxy(cblk, buffers, mFrameCount, mFrameSize);
        } else {
            // static
            mStaticProxy = new StaticAudioTrackClientProxy(cblk, buffers, mFrameCount, mFrameSize);
            mProxy = mStaticProxy;
        }
        // ...
        return logIfErrorAndReturnStatus(status, "");
    }

audioflinger

av/services/audioflinger/AudioFlinger.cpp createTrack

av/services/audioflinger/Threads.cpp createTrack_l

av/services/audioflinger/Tracks.cpp IAfTrack::create

This constructs a Track.

Next, mAudioTrackServerProxy is created. It corresponds to the client-side Proxy in libaudioclient.

mCblk and mBuffer were already set up according to the mode when TrackBase was constructed.

STATIC: StaticAudioTrackServerProxy

STREAM: AudioTrackServerProxy
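Both sides rely on the same STREAM-mode layout: buffers = cblk + 1 on the client and mBuffer on the server. The sketch below pictures it. A plain calloc stands in for the shared memory, and a hypothetical two-field FakeCblk stands in for audio_track_cblk_t. The real control block is much larger, and the real allocation is mapped by both processes.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <new>

// Hypothetical stand-in for audio_track_cblk_t: only the streaming indices.
struct FakeCblk {
    volatile int32_t mFront;
    volatile int32_t mRear;
};

struct StreamLayout {
    void* base;        // start of the single allocation
    FakeCblk* cblk;    // control block at the start
    void* buffers;     // PCM data immediately after it ("cblk + 1")
};

// One allocation: control block first, then frameCount * frameSize bytes of PCM.
StreamLayout allocStreamLayout(size_t frameCount, size_t frameSize) {
    const size_t total = sizeof(FakeCblk) + frameCount * frameSize;
    void* base = std::calloc(1, total);
    if (base == nullptr) throw std::bad_alloc();
    FakeCblk* cblk = new (base) FakeCblk{0, 0};  // construct the control block in place
    return StreamLayout{base, cblk, cblk + 1};
}
```

Since both ends agree on this layout, passing only the control block's shared memory is enough for the client to find the data.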

sp<IAfTrack> IAfTrack::create(

IAfPlaybackThread* thread,

...

bool isBitPerfect,

float volume,

bool muted) {

return sp<Track>::make(thread, ..., sharedBuffer, ...);

}

Track::Track(... , const sp<IMemory>& sharedBuffer, ...)

: TrackBase(thread, client, attr, sampleRate, format, channelMask, frameCount,

(sharedBuffer != 0) ? sharedBuffer->unsecurePointer() : buffer,

(sharedBuffer != 0) ? sharedBuffer->size() : bufferSize,

... ,

ALLOC_CBLK, /*alloc*/

...),

mFillingStatus(FS_INVALID),

// mRetryCount initialized later when needed

mSharedBuffer(sharedBuffer),

{

if (sharedBuffer == 0) {

mAudioTrackServerProxy = new AudioTrackServerProxy(mCblk, mBuffer, frameCount,

mFrameSize, !isExternalTrack(), sampleRate);

} else {

mAudioTrackServerProxy = new StaticAudioTrackServerProxy(mCblk, mBuffer, frameCount,

mFrameSize, sampleRate);

}

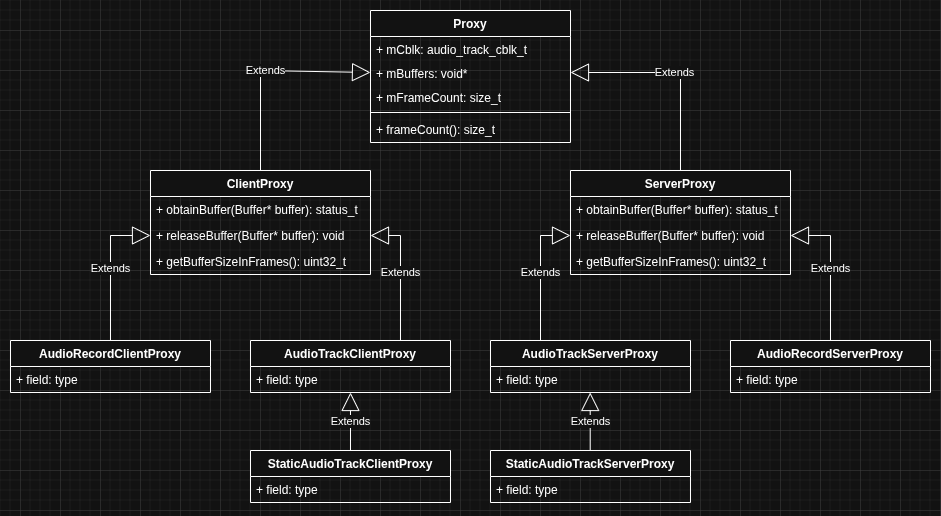

}先看下这几个类的关系

The app writes data by calling streamTrack.write(buffer, 0, len).

base/media/java/android/media/AudioTrack.java

native_write_byte

    public int write(@NonNull byte[] audioData, int offsetInBytes, int sizeInBytes,
            @WriteMode int writeMode) {
        // ...
        final int ret = native_write_byte(audioData, offsetInBytes, sizeInBytes, mAudioFormat,
                writeMode == WRITE_BLOCKING);
        // ...
        return ret;
    }

base/core/jni/android_media_AudioTrack.cpp android_media_AudioTrack_writeArray

In STATIC mode the data is copied with a plain memcpy.

In STREAM mode it goes through track->write.

    template <typename T>
    static jint android_media_AudioTrack_writeArray(JNIEnv *env, jobject thiz,
                                                    T javaAudioData,
                                                    jint offsetInSamples, jint sizeInSamples,
                                                    jint javaAudioFormat,
                                                    jboolean isWriteBlocking) {
        //ALOGV("android_media_AudioTrack_writeArray(offset=%d, sizeInSamples=%d) called",
        //        offsetInSamples, sizeInSamples);
        sp<AudioTrack> lpTrack = getAudioTrack(env, thiz);
        // ... (cAudioData is obtained from javaAudioData here)
        jint samplesWritten = writeToTrack(lpTrack, javaAudioFormat, cAudioData,
                offsetInSamples, sizeInSamples, isWriteBlocking == JNI_TRUE /* blocking */);
        // ...
        return samplesWritten;
    }

    template <typename T>
    static jint writeToTrack(const sp<AudioTrack>& track, jint audioFormat, const T *data,
                             jint offsetInSamples, jint sizeInSamples, bool blocking) {
        ssize_t written = 0;
        size_t sizeInBytes = sizeInSamples * sizeof(T);
        if (track->sharedBuffer() == 0) {
            // STREAM mode: go through AudioTrack::write
            written = track->write(data + offsetInSamples, sizeInBytes, blocking);
            if (written == (ssize_t) WOULD_BLOCK) {
                written = 0;
            }
        } else {
            // STATIC mode: copy straight into the shared buffer with memcpy
            if ((size_t)sizeInBytes > track->sharedBuffer()->size()) {
                sizeInBytes = track->sharedBuffer()->size();
            }
            memcpy(track->sharedBuffer()->unsecurePointer(), data + offsetInSamples, sizeInBytes);
            written = sizeInBytes;
        }
        if (written >= 0) {
            return written / sizeof(T);
        }
        return interpretWriteSizeError(written);
    }
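The STATIC branch above can be modelled on its own. In this sketch, a std::vector<uint8_t> stands in for the IMemory returned by sharedBuffer(), and writeStaticSketch is a hypothetical name. It keeps the two details worth noticing:

- The byte count is clamped to the shared buffer's size.
- The return value is converted back from bytes to samples.

As in the original, every call copies to the start of the shared buffer.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical model of writeToTrack's STATIC branch.
template <typename T>
long writeStaticSketch(std::vector<uint8_t>& sharedBuffer, const T* data,
                       int offsetInSamples, int sizeInSamples) {
    size_t sizeInBytes = static_cast<size_t>(sizeInSamples) * sizeof(T);  // samples -> bytes
    if (sizeInBytes > sharedBuffer.size()) {
        sizeInBytes = sharedBuffer.size();  // anything beyond the capacity is dropped
    }
    // Always copies to offset 0 of the shared buffer.
    std::memcpy(sharedBuffer.data(), data + offsetInSamples, sizeInBytes);
    return static_cast<long>(sizeInBytes / sizeof(T));  // bytes -> samples for the Java caller
}
```

For example, writing six 16-bit samples into an 8-byte shared buffer reports 4 samples written.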

libaudioclient

av/media/libaudioclient/AudioTrack.cpp write

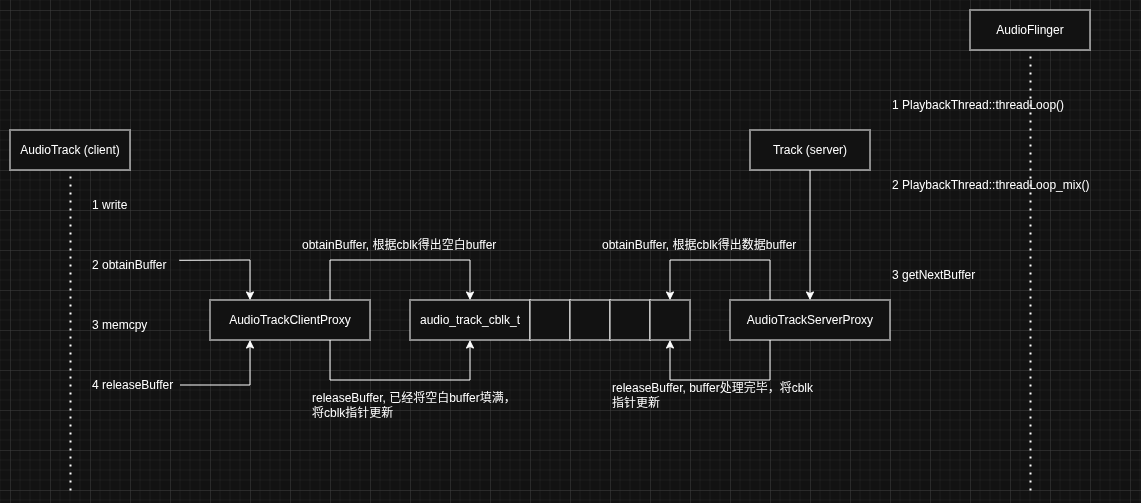

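AudioTrack::write boils down to three steps: obtainBuffer, memcpy, releaseBuffer. The relevant members:

    sp<StaticAudioTrackClientProxy> mStaticProxy;
    sp<AudioTrackClientProxy> mProxy;
    // assigned in AudioTrack::obtainBuffer:
    mProxyObtainBufferRef = mProxy;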

    ssize_t AudioTrack::write(const void* buffer, size_t userSize, bool blocking)
    {
        // ... (the real function loops over userSize)
        // Obtain a writable region of the ring buffer
        status_t err = obtainBuffer(&audioBuffer,
                blocking ? &ClientProxy::kForever : &ClientProxy::kNonBlocking);
        // ...
        size_t toWrite = audioBuffer.size();
        // Copy the user data into the shared buffer
        memcpy(audioBuffer.raw, buffer, toWrite);
        // ...
        releaseBuffer(&audioBuffer);
        // ...
        return written;
    }

    status_t AudioTrack::obtainBuffer(Buffer* audioBuffer, const struct timespec *requested,
            struct timespec *elapsed, size_t *nonContig)
    {
        Proxy::Buffer buffer;
        sp<AudioTrackClientProxy> proxy;
        // ...
        mProxyObtainBufferRef = mProxy;
        proxy = mProxy;
        // ...
        status = proxy->obtainBuffer(&buffer, requested, elapsed);
        // ...
        audioBuffer->frameCount = buffer.mFrameCount;
        audioBuffer->mSize = buffer.mFrameCount * mFrameSize;
        audioBuffer->raw = buffer.mRaw;
        // ...
        return status;
    }
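AudioTrack::obtainBuffer converts the proxy's frame count into bytes with mFrameSize. For linear PCM, one frame is one sample per channel. The conversions therefore look like this (helper names are illustrative):

```cpp
#include <cassert>
#include <cstddef>

// Linear PCM: one frame = one sample for every channel.
constexpr size_t frameSizeBytes(size_t channelCount, size_t bytesPerSample) {
    return channelCount * bytesPerSample;
}

// Same conversion as audioBuffer->mSize = buffer.mFrameCount * mFrameSize.
constexpr size_t framesToBytes(size_t frameCount, size_t frameSize) {
    return frameCount * frameSize;
}

// Partial trailing frames are not counted.
constexpr size_t bytesToFrames(size_t bytes, size_t frameSize) {
    return bytes / frameSize;
}
```

For example, a stereo 16-bit stream has a 4-byte frame, so 960 frames are 3840 bytes.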

av/media/libaudioclient/AudioTrackShared.cpp ClientProxy::obtainBuffer

The producer (the client, for playback) reads mFront to find out how much free space it can write into.

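    __attribute__((no_sanitize("integer")))
    status_t ClientProxy::obtainBuffer(Buffer* buffer, const struct timespec *requested,
            struct timespec *elapsed)
    {
        // ...
        for (;;) {
            // ...
            int32_t front;
            int32_t rear;
            // mIsOut gives the direction of the audio: playback (true) or recording (false)
            if (mIsOut) {
                // Atomic acquire load of mFront, which the consumer (server) updates
                front = android_atomic_acquire_load(&cblk->u.mStreaming.mFront);
                // mRear is only written by this side (the producer), so a plain read suffices
                rear = cblk->u.mStreaming.mRear;
            } else {
                rear = android_atomic_acquire_load(&cblk->u.mStreaming.mRear);
                front = cblk->u.mStreaming.mFront;
            }
            // ... (elided) compute the filled/free frame counts, reduce rear/front to an
            // index into the buffer, and find the contiguous region part1
            buffer->mFrameCount = part1;
            buffer->mRaw = part1 > 0 ?
                    &((char *) mBuffers)[(mIsOut ? rear : front) * mFrameSize] : NULL;
            // ...
        }
        // ...
        return status;
    }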
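The elided middle of ClientProxy::obtainBuffer decides how many frames the caller gets. The following is a simplified reconstruction for the playback case only. It assumes the buffer size is a power of 2 and that the filled count never exceeds it. It ignores timeouts, flush and any user-adjustable size limit, and contiguousWritable is a hypothetical name. Only the run up to the physical end of the buffer is returned, because buffer->mRaw must point at one contiguous region.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>

struct Obtained {
    size_t index;   // frame index where the producer may start writing
    size_t frames;  // contiguous frames available ("part1")
};

// Hypothetical producer-side computation for playback (mIsOut == true).
Obtained contiguousWritable(int32_t front, int32_t rear, size_t frameCountP2,
                            size_t requested) {
    // Free-running counters: subtract in uint32_t so wraparound is well defined.
    const uint32_t filled = static_cast<uint32_t>(rear) - static_cast<uint32_t>(front);
    const size_t avail = frameCountP2 - filled;  // free frames in total
    const size_t index = static_cast<uint32_t>(rear) & (frameCountP2 - 1);
    const size_t toEnd = frameCountP2 - index;   // frames until the buffer wraps
    return Obtained{index, std::min({toEnd, avail, requested})};
}
```

With front = 2, rear = 6 and an 8-frame buffer, 4 frames are free. Only 2 of them (indices 6 and 7) are contiguous; the other 2 come on the next call, after wrapping to index 0.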

    // See av/include/private/media/AudioTrackShared.h
    struct audio_track_cblk_t
    {
        // ...
    public:
        // ...
        union {
            AudioTrackSharedStreaming   mStreaming;
            AudioTrackSharedStatic      mStatic;
            int                         mAlign[8];
        } u;
        // ...
    };

    struct AudioTrackSharedStreaming {
        // similar to NBAIO MonoPipe
        // in continuously incrementing frame units, take modulo buffer size, which must be a power of 2
        volatile int32_t mFront;    // read by consumer (output: server, input: client)
        volatile int32_t mRear;     // written by producer (output: client, input: server)
        volatile int32_t mFlush;    // incremented by client to indicate a request to flush;
                                    // server notices and discards all data between mFront and mRear
        volatile int32_t mStop;     // set by client to indicate a stop frame position; server
                                    // will not read beyond this position until start is called.
        volatile uint32_t mUnderrunFrames;  // server increments for each unavailable but desired frame
        volatile uint32_t mUnderrunCount;   // server increments for each underrun occurrence
    };
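Because mFront and mRear are continuously incrementing int32_t counters, they eventually run past INT32_MAX. Doing the arithmetic in uint32_t keeps it well defined. The AOSP functions are marked `__attribute__((no_sanitize("integer")))`, presumably so the integer-overflow sanitizer does not flag this intentional wraparound. A small sketch follows (function names are illustrative):

```cpp
#include <cassert>
#include <cstdint>

// Fill level = rear - front, computed modulo 2^32.
inline uint32_t framesFilled(int32_t front, int32_t rear) {
    return static_cast<uint32_t>(rear) - static_cast<uint32_t>(front);
}

// Advance a counter by wrapping in unsigned arithmetic rather than overflowing int32_t.
// (The conversion back to int32_t is modular on mainstream compilers and guaranteed in C++20.)
inline int32_t advance(int32_t counter, uint32_t steps) {
    return static_cast<int32_t>(static_cast<uint32_t>(counter) + steps);
}
```

After the wrap, rear is numerically smaller than front (it is negative), yet rear - front still yields the correct fill level.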

av/media/libaudioclient/AudioTrack.cpp AudioTrack::releaseBuffer

mProxyObtainBufferRef is assigned from mProxy (an sp<AudioTrackClientProxy>) in AudioTrack::obtainBuffer. The release therefore goes to the same proxy that handed out the buffer.

    void AudioTrack::releaseBuffer(const Buffer* audioBuffer)
    {
        // ... (stepCount, the number of frames to release, is derived from audioBuffer)
        Proxy::Buffer buffer;
        buffer.mFrameCount = stepCount;
        buffer.mRaw = audioBuffer->raw;
        sp<IMemory> tempMemory;
        sp<AudioTrackClientProxy> tempProxy;
        // ...
        mReleased += stepCount;
        mInUnderrun = false;
        mProxyObtainBufferRef->releaseBuffer(&buffer);
        // ...
        restartIfDisabled();
    }

av/media/libaudioclient/AudioTrackShared.cpp ClientProxy::releaseBuffer

For playback the client is the producer and advances mRear. For recording it is the consumer and advances mFront.

    __attribute__((no_sanitize("integer")))
    void ClientProxy::releaseBuffer(Buffer* buffer)
    {
        // ... (stepCount = number of frames being released)
        mUnreleased -= stepCount;
        audio_track_cblk_t* cblk = mCblk;
        if (mIsOut) {
            // Playback: publish the new write position with release semantics
            int32_t rear = cblk->u.mStreaming.mRear;
            android_atomic_release_store(stepCount + rear, &cblk->u.mStreaming.mRear);
        } else {
            // Recording: the client is the consumer and advances mFront
            int32_t front = cblk->u.mStreaming.mFront;
            android_atomic_release_store(stepCount + front, &cblk->u.mStreaming.mFront);
        }
    }

ServerProxy

av/media/libaudioclient/AudioTrackShared.cpp ServerProxy::obtainBuffer

    __attribute__((no_sanitize("integer")))
    status_t ServerProxy::obtainBuffer(Buffer* buffer, bool ackFlush)
    {
        // ...
        if (mIsOut) {
            flushBufferIfNeeded(); // might modify mFront
            // Consumer side of playback: acquire-load mRear (via getRear), plain read of mFront
            rear = getRear();
            front = cblk->u.mStreaming.mFront;
        } else {
            // Producer side of recording: acquire-load mFront, plain read of mRear
            front = android_atomic_acquire_load(&cblk->u.mStreaming.mFront);
            rear = cblk->u.mStreaming.mRear;
        }
        // ... (success path elided)
        return NO_INIT;   // failure exit
    }

    __attribute__((no_sanitize("integer")))
    int32_t AudioTrackServerProxy::getRear() const
    {
        const int32_t stop = android_atomic_acquire_load(&mCblk->u.mStreaming.mStop);
        const int32_t rear = android_atomic_acquire_load(&mCblk->u.mStreaming.mRear);
        // ... (elided: if a stop position is set, rear is clamped to it)
        return rear;
    }

av/media/libaudioclient/AudioTrackShared.cpp ServerProxy::releaseBuffer

    __attribute__((no_sanitize("integer")))
    void ServerProxy::releaseBuffer(Buffer* buffer)
    {
        // ... (stepCount = number of frames being released)
        audio_track_cblk_t* cblk = mCblk;
        if (mIsOut) {
            // Playback: the server is the consumer and advances mFront
            int32_t front = cblk->u.mStreaming.mFront;
            android_atomic_release_store(stepCount + front, &cblk->u.mStreaming.mFront);
        } else {
            // Recording: the server is the producer and advances mRear
            int32_t rear = cblk->u.mStreaming.mRear;
            android_atomic_release_store(stepCount + rear, &cblk->u.mStreaming.mRear);
        }
    }
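The four functions above fit together as follows:

- The producer copies into the region after rear, then release-stores the new mRear.
- The consumer acquire-loads mRear, reads the data, then release-stores the new mFront.

Below is an in-process sketch of that protocol for mono 16-bit playback. Some things are swapped in or left out:

- std::atomic with memory_order_acquire/release replaces android_atomic_acquire_load/android_atomic_release_store.
- The class Pipe is hypothetical.
- The blocking, flush, stop and underrun handling of the real proxies is omitted.

```cpp
#include <algorithm>
#include <atomic>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical SPSC pipe mimicking the cblk front/rear protocol (mono int16 frames).
class Pipe {
public:
    explicit Pipe(uint32_t frameCountP2) : data_(frameCountP2), size_(frameCountP2) {}

    // Client/producer: obtain the contiguous free region, copy, then publish rear.
    size_t write(const int16_t* src, size_t frames) {
        const uint32_t front = front_.load(std::memory_order_acquire);  // updated by consumer
        const uint32_t rear = rear_.load(std::memory_order_relaxed);    // only we write it
        const uint32_t index = rear & (size_ - 1);
        const size_t n = std::min<size_t>({frames, size_ - (rear - front), size_ - index});
        std::memcpy(&data_[index], src, n * sizeof(int16_t));
        rear_.store(rear + static_cast<uint32_t>(n), std::memory_order_release);
        return n;
    }

    // Server/consumer: obtain the contiguous filled region, copy out, then publish front.
    size_t read(int16_t* dst, size_t frames) {
        const uint32_t rear = rear_.load(std::memory_order_acquire);    // updated by producer
        const uint32_t front = front_.load(std::memory_order_relaxed);  // only we write it
        const uint32_t index = front & (size_ - 1);
        const size_t n = std::min<size_t>({frames, rear - front, size_ - index});
        std::memcpy(dst, &data_[index], n * sizeof(int16_t));
        front_.store(front + static_cast<uint32_t>(n), std::memory_order_release);
        return n;
    }

private:
    std::vector<int16_t> data_;
    const uint32_t size_;
    std::atomic<uint32_t> front_{0};
    std::atomic<uint32_t> rear_{0};
};
```

In AudioTrack the two halves run in different processes, on memory both have mapped. The acquire/release pairing guarantees that the consumer sees the PCM bytes before it sees the updated index.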

Addendum

base/core/jni/android_media_AudioTrack.cpp android_media_AudioTrack_setup

In MODE_STATIC the shared memory is allocated on the app side.

allocSharedMem allocates a region named "AudioTrack Heap Base". Its size is decided by the app (buffSizeInBytes).

    static jint android_media_AudioTrack_setup(...) {
        // ...
        switch (memoryMode) {
        case MODE_STREAM:
            // ... (no shared buffer is allocated here)
            break;
        case MODE_STATIC:
        {
            // AudioTrack is using shared memory
            const auto iMem = allocSharedMem(buffSizeInBytes);
            // ...
            break;
        }
        default:
            ALOGE("Unknown mode %d", memoryMode);
            goto native_init_failure;
        }
        // ...
    native_init_failure:
        // ...
        return (jint) AUDIOTRACK_ERROR_SETUP_NATIVEINITFAILED;
    }

    sp<IMemory> allocSharedMem(int sizeInBytes) {
        const auto heap = sp<MemoryHeapBase>::make(sizeInBytes, 0, "AudioTrack Heap Base");
        if (heap->getBase() == MAP_FAILED || heap->getBase() == nullptr) {
            return nullptr;
        }
        return sp<MemoryBase>::make(heap, 0, sizeInBytes);
    }

To check this, first fix the buffer size on the app side, then create the AudioTrack and run the app:

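    val bufferSize = 1024 * 1024 * 10
    Log.d("bufferSize", "bufferSize = $bufferSize")
    // ... AudioTrack.Builder() setup elided
        .setTransferMode(AudioTrack.MODE_STATIC)
        .setBufferSizeInBytes(bufferSize)

Then inspect /proc/<pid>/maps of both processes with the commands below:

- In the app, 0x7a46c4a000 - 0x7a4624a000 = 0xa00000 = 10485760, which matches bufferSize.
- In audioserver, 0x7158b01000 - 0x7158101000 gives the same value.

Both mappings also show the same ashmem inode (88738). The same memory region is mapped into both processes.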

    NX563J:/ # ps -A | grep example.
    u0_a187 9135 1024 14819432 169204 SyS_epoll_wait 0 S com.example.myapplication
    NX563J:/ # cd /proc/9135/
    NX563J:/proc/9135 # cat maps | grep Audio
    7a4624a000-7a46c4a000 rw-s 00000000 00:05 88738 /dev/ashmem/AudioTrack Heap Base (deleted)
    NX563J:/proc/9135 # logcat | grep bufferSize
    12-28 13:20:43.979 9135 9135 D bufferSize: bufferSize = 10485760
    # 0x7a46c4a000 - 0x7a4624a000 = 10485760, equal to bufferSize
    1|NX563J:/proc/9135 # ps -A | grep audioserver
    audioserver 3975 1 53432 18240 binder_ioctl_write_read 0 S android.hardware.audio.service
    audioserver 3976 1 11625808 23700 binder_ioctl_write_read 0 S audioserver
    NX563J:/proc/9135 # cd /proc/3976
    NX563J:/proc/3976 # cat maps | grep Audio
    7158101000-7158b01000 rw-s 00000000 00:05 88738 /dev/ashmem/AudioTrack Heap Base (deleted)
    # 0x7158b01000 - 0x7158101000 = 10485760, equal to bufferSize
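The address arithmetic above can be checked with a small helper that parses the start-end range at the beginning of a /proc/<pid>/maps line. mappingSize is a hypothetical name, and only the first field is parsed:

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Returns end - start for a maps line like "7a4624a000-7a46c4a000 rw-s ...",
// or 0 if the line does not begin with a "start-end" hex range.
uint64_t mappingSize(const std::string& line) {
    const size_t dash = line.find('-');
    const size_t space = line.find(' ');
    if (dash == std::string::npos || space == std::string::npos || dash > space) return 0;
    const uint64_t start = std::stoull(line.substr(0, dash), nullptr, 16);
    const uint64_t end = std::stoull(line.substr(dash + 1, space - dash - 1), nullptr, 16);
    return end > start ? end - start : 0;
}
```

Feeding it the two "AudioTrack Heap Base" lines above yields 10485760 for both.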
