Reference: https://blog.youkuaiyun.com/qq_36242312/article/details/105888669

void rst::rasterizer::rasterize_triangle(const Triangle& t, const std::array<Eigen::Vector3f, 3>& view_pos)

{

// TODO: From your HW3, get the triangle rasterization code.

// TODO: Inside your rasterization loop:

// * v[i].w() is the vertex view space depth value z.

// * Z is interpolated view space depth for the current pixel

// * zp is depth between zNear and zFar, used for z-buffer

// float Z = 1.0 / (alpha / v[0].w() + beta / v[1].w() + gamma / v[2].w());

// float zp = alpha * v[0].z() / v[0].w() + beta * v[1].z() / v[1].w() + gamma * v[2].z() / v[2].w();

// zp *= Z;

// TODO: Interpolate the attributes:

// auto interpolated_color

// auto interpolated_normal

// auto interpolated_texcoords

// auto interpolated_shadingcoords

// Use: fragment_shader_payload payload( interpolated_color, interpolated_normal.normalized(), interpolated_texcoords, texture ? &*texture : nullptr);

// Use: payload.view_pos = interpolated_shadingcoords;

// Use: Instead of passing the triangle's color directly to the frame buffer, pass the color to the shaders first to get the final color;

// Use: auto pixel_color = fragment_shader(payload);

auto v = t.toVector4();

// bounding box

float min_x = std::min(v[0][0], std::min(v[1][0], v[2][0]));

float max_x = std::max(v[0][0], std::max(v[1][0], v[2][0]));

float min_y = std::min(v[0][1], std::min(v[1][1], v[2][1]));

float max_y = std::max(v[0][1], std::max(v[1][1], v[2][1]));

int x_min = std::floor(min_x);

int x_max = std::ceil(max_x);

int y_min = std::floor(min_y);

int y_max = std::ceil(max_y);

for (int i = x_min; i <= x_max; i++)

{

for (int j = y_min; j <= y_max; j++)

{

if (insideTriangle(i + 0.5, j + 0.5, t.v))

{

//Depth interpolated

auto[alpha, beta, gamma] = computeBarycentric2D(i + 0.5, j + 0.5, t.v);

float Z = 1.0 / (alpha / v[0].w() + beta / v[1].w() + gamma / v[2].w());

float zp = alpha * v[0].z() / v[0].w() + beta * v[1].z() / v[1].w() + gamma * v[2].z() / v[2].w();

zp *= Z;

if (zp < depth_buf[get_index(i, j)])

{

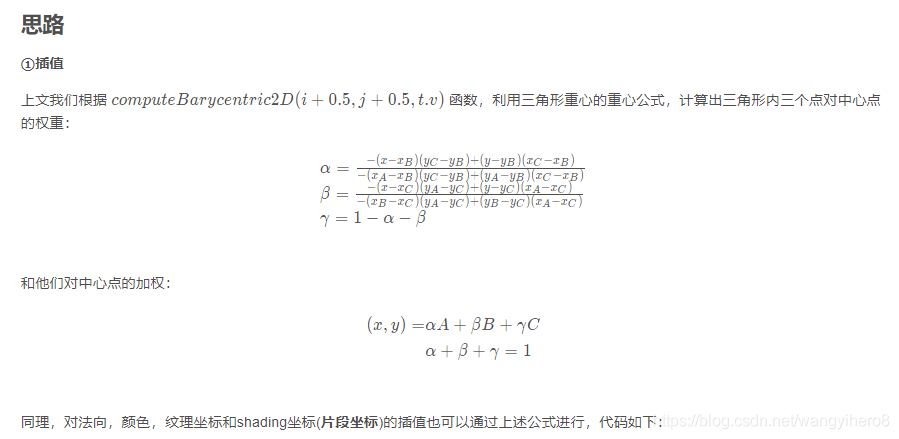

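/* hero: once the barycentric weights of the sample point are known, every vertex attribute of the triangle can be interpolated */

// color: interpolate the vertex colors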

auto interpolated_color = interpolate(alpha, beta, gamma, t.color[0], t.color[1], t.color[2], 1);

// normal

auto interpolated_normal = interpolate(alpha, beta, gamma, t.normal[0], t.normal[1], t.normal[2], 1).normalized();

// texture

auto interpolated_texcoords = interpolate(alpha, beta, gamma, t.tex_coords[0], t.tex_coords[1], t.tex_coords[2], 1);

// hero: shadingcoords, interpolate the view-space positions of the vertices

auto interpolated_shadingcoords = interpolate(alpha, beta, gamma, view_pos[0], view_pos[1], view_pos[2], 1);

// struct that carries the interpolated results (they are stored in payload)

fragment_shader_payload payload(interpolated_color, interpolated_normal, interpolated_texcoords, texture ? &*texture : nullptr);

payload.view_pos = interpolated_shadingcoords;

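// hero: compute the final color at this sample from the interpolated position, normal, and vertex color (the lights are defined inside the shader)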

auto pixel_color = fragment_shader(payload);

// update the depth buffer

depth_buf[get_index(i, j)] = zp;

// hero: write the final color to the pixel at this index

set_pixel(Eigen::Vector2i(i, j), pixel_color);

}

}

}

}

}
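The two interpolation steps in the loop above can be sketched without Eigen. This is only an illustration under assumptions: `Vec3`, `interpolate3`, and `view_depth` are hypothetical names, and `interpolate3` mirrors what the framework's `interpolate(alpha, beta, gamma, a, b, c, weight)` call appears to do, which the listing itself does not show.

```cpp
#include <array>
#include <cassert>

// Hypothetical stand-in for Eigen::Vector3f so the sketch has no dependencies.
using Vec3 = std::array<float, 3>;

// Blend three per-vertex attributes with barycentric weights.
// `weight` plays the role of the trailing argument in the interpolate() calls above.
Vec3 interpolate3(float alpha, float beta, float gamma,
                  const Vec3& a, const Vec3& b, const Vec3& c, float weight)
{
    assert(weight != 0.0f);
    Vec3 out{};
    for (int k = 0; k < 3; ++k)
        out[k] = (alpha * a[k] + beta * b[k] + gamma * c[k]) / weight;
    return out;
}

// View-space depth at the sample: the reciprocal of the interpolated 1/w,
// as in the expression for Z in the listing.
float view_depth(float alpha, float beta, float gamma,
                 float w0, float w1, float w2)
{
    assert(w0 != 0.0f && w1 != 0.0f && w2 != 0.0f);
    return 1.0f / (alpha / w0 + beta / w1 + gamma / w2);
}
```

With weights (1, 0, 0) the result is exactly the first vertex's attribute, which is a quick sanity check for any barycentric blend.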

② Projection matrix

The projection matrix implemented in the previous post had a small problem, so it is slightly modified here:

Eigen::Matrix4f get_projection_matrix(float eye_fov, float aspect_ratio, float zNear, float zFar)

{

Eigen::Matrix4f projection = Eigen::Matrix4f::Identity();

Eigen::Matrix4f M_persp2ortho(4, 4);

Eigen::Matrix4f M_ortho_scale(4, 4);

Eigen::Matrix4f M_ortho_trans(4, 4);

float angle = eye_fov * MY_PI / 180.0; // full field of view in radians; the half angle is angle / 2

float height = zNear * tan(angle) * 2;

float width = height * aspect_ratio;

auto t = -zNear * tan(angle / 2);

auto r = t * aspect_ratio;

auto l = -r;

auto b = -t;

M_persp2ortho << zNear, 0, 0, 0,

0, zNear, 0, 0,

0, 0, zNear + zFar, -zNear * zFar,

0, 0, 1, 0;

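// Previously I used the width and height lengths here; r - l and t - b should actually be twice those values

// To avoid the hassle, I switched to the coordinate form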

M_ortho_scale << 2 / (r - l), 0, 0, 0,

0, 2 / (t - b), 0, 0,

0, 0, 2 / (zNear - zFar), 0,

0, 0, 0, 1;

M_ortho_trans << 1, 0, 0, -(r + l) / 2,

0, 1, 0, -(t + b) / 2,

0, 0, 1, -(zNear + zFar) / 2,

0, 0, 0, 1;

Eigen::Matrix4f M_ortho = M_ortho_scale * M_ortho_trans;

projection = M_ortho * M_persp2ortho * projection;

return projection;

}
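The frustum bounds used by the scale and translate matrices can be checked in isolation. The sketch below is an assumption-laden illustration: `FrustumBounds` and `frustum_bounds` are hypothetical names, and the negative sign on `t` is copied from the listing rather than derived here.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper type holding the four near-plane bounds.
struct FrustumBounds { float l, r, b, t; };

// eye_fov_deg is the full vertical field of view in degrees.
FrustumBounds frustum_bounds(float eye_fov_deg, float aspect_ratio, float z_near)
{
    assert(aspect_ratio > 0.0f);
    const float pi = 3.14159265358979f;
    float half_angle = eye_fov_deg * pi / 180.0f / 2.0f;
    float t = -z_near * std::tan(half_angle); // sign convention copied from the listing
    float r = t * aspect_ratio;
    return FrustumBounds{-r, r, -t, t};
}
```

Because l = -r and b = -t, the extents r - l and t - b are 2r and 2t, and the translation terms (r + l) / 2 and (t + b) / 2 vanish.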

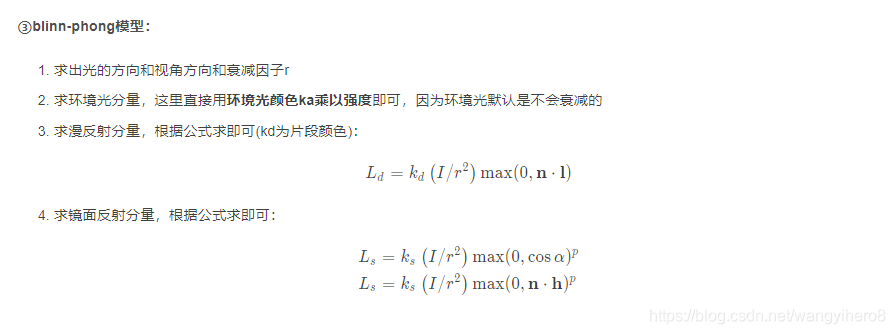

// process a single pixel (fragment)

Eigen::Vector3f phong_fragment_shader(const fragment_shader_payload& payload)

{

// hero: initial ambient coefficient

Eigen::Vector3f ka = Eigen::Vector3f(0.005, 0.005, 0.005);

// hero: initial diffuse coefficient, taken from the vertex color

Eigen::Vector3f kd = payload.color;

// hero: initial specular coefficient

Eigen::Vector3f ks = Eigen::Vector3f(0.7937, 0.7937, 0.7937);

auto l1 = light{{20, 20, 20}, {500, 500, 500}}; // light position and intensity

auto l2 = light{{-20, 20, 0}, {500, 500, 500}};

std::vector<light> lights = {l1, l2};

Eigen::Vector3f amb_light_intensity{10, 10, 10};

Eigen::Vector3f eye_pos{0, 0, 10};

float p = 150;

Eigen::Vector3f color = payload.color;

Eigen::Vector3f point = payload.view_pos; // fragment position

Eigen::Vector3f normal = payload.normal;

Eigen::Vector3f result_color = {0, 0, 0};

for (auto& light : lights)

{

// light direction

Eigen::Vector3f light_dir = light.position - point;

// view direction

Eigen::Vector3f view_dir = eye_pos - point;

// hero: attenuation; r holds the squared distance, which is what the 1 / r^2 falloff needs

float r = light_dir.dot(light_dir);

// hero: ambient; cwiseProduct multiplies two vectors (or matrices) of the same size element by element

Eigen::Vector3f La = ka.cwiseProduct(amb_light_intensity);

// hero: diffuse; use normalized() to get unit vectors!

Eigen::Vector3f Ld = kd.cwiseProduct(light.intensity / r);

Ld *= std::max(0.0f, normal.normalized().dot(light_dir.normalized()));

// specular

Eigen::Vector3f h = (light_dir.normalized() + view_dir.normalized()).normalized(); // half vector of the two unit directions

Eigen::Vector3f Ls = ks.cwiseProduct(light.intensity / r);

Ls *= std::pow(std::max(0.0f, normal.normalized().dot(h)), p);

// accumulate the contributions additively

result_color += (La + Ld + Ls);

}

return result_color * 255.f;

}
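To make the per-light arithmetic easier to check by hand, here is a dependency-free sketch of the diffuse and specular factors for one light. The names `Vec3`, `LightTerms`, and `blinn_phong_terms` are hypothetical, the light intensity is reduced to a single scalar for brevity, and the half vector is built from unit directions.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

// Hypothetical stand-in for Eigen::Vector3f.
using Vec3 = std::array<float, 3>;

static float dot(const Vec3& a, const Vec3& b)
{
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

static Vec3 sub(const Vec3& a, const Vec3& b)
{
    return Vec3{a[0] - b[0], a[1] - b[1], a[2] - b[2]};
}

static Vec3 unit(const Vec3& a)
{
    float len = std::sqrt(dot(a, a));
    assert(len > 0.0f);
    return Vec3{a[0] / len, a[1] / len, a[2] / len};
}

struct LightTerms { float diffuse; float specular; };

// Scalar diffuse and specular factors for one light, before multiplying by kd and ks.
LightTerms blinn_phong_terms(const Vec3& point, const Vec3& normal,
                             const Vec3& light_pos, const Vec3& eye_pos,
                             float intensity, float p)
{
    Vec3 to_light = sub(light_pos, point);
    float r2 = dot(to_light, to_light);      // squared distance to the light
    Vec3 n = unit(normal);
    Vec3 l = unit(to_light);
    Vec3 v = unit(sub(eye_pos, point));
    Vec3 sum{l[0] + v[0], l[1] + v[1], l[2] + v[2]};
    Vec3 h = unit(sum);                      // half vector between l and v
    float falloff = intensity / r2;
    float diffuse = falloff * std::max(0.0f, dot(n, l));
    float specular = falloff * std::pow(std::max(0.0f, dot(n, h)), p);
    return LightTerms{diffuse, specular};
}
```

A light directly above the surface at distance 2 with intensity 4 gives a falloff of 1, so both factors come out as 1 when the eye is on the same axis.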

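Result:

④ texture:

To implement the texture shader, pass the color sampled at the texture coordinates to kd. Note that my texture coordinates can go negative, so I clamp u and v to the range [0, 1].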

// texture shader

Eigen::Vector3f texture_fragment_shader(const fragment_shader_payload& payload)

{

Eigen::Vector3f return_color = { 0, 0, 0 };

if (payload.texture)

{

// hero: fetch the color at the texture coordinates; payload was built at sampling time and holds the sample point's interpolated data

return_color = payload.texture->getColor(payload.tex_coords.x(), payload.tex_coords.y());

}

Eigen::Vector3f texture_color;

texture_color << return_color.x(), return_color.y(), return_color.z();

Eigen::Vector3f ka = Eigen::Vector3f(0.005, 0.005, 0.005);

// hero: kd is replaced by the texture color here

Eigen::Vector3f kd = texture_color / 255.f; // normalize the color to [0, 1]

Eigen::Vector3f ks = Eigen::Vector3f(0.7937, 0.7937, 0.7937);

auto l1 = light{ {20, 20, 20}, {500, 500, 500} };

auto l2 = light{ {-20, 20, 0}, {500, 500, 500} };

std::vector<light> lights = { l1, l2 };

Eigen::Vector3f amb_light_intensity{ 10, 10, 10 };

Eigen::Vector3f eye_pos{ 0, 0, 10 };

float p = 150;

// hero: color is likewise taken from the texture

Eigen::Vector3f color = texture_color;

Eigen::Vector3f point = payload.view_pos;

Eigen::Vector3f normal = payload.normal;

Eigen::Vector3f result_color = { 0, 0, 0 };

// ambient light can be treated as a constant

Eigen::Vector3f ambient = ka * amb_light_intensity[0];

for (auto& light : lights)

{

// TODO: For each light source in the code, calculate what the *ambient*, *diffuse*, and *specular*

// components are. Then, accumulate that result on the *result_color* object.

Eigen::Vector3f light_dir = light.position - point;

Eigen::Vector3f view_dir = eye_pos - point;

float r = light_dir.dot(light_dir);

// ambient

Eigen::Vector3f La = ka.cwiseProduct(amb_light_intensity);

// diffuse

Eigen::Vector3f Ld = kd.cwiseProduct(light.intensity / r);

Ld *= std::max(0.0f, normal.normalized().dot(light_dir.normalized()));

// specular

Eigen::Vector3f h = (light_dir.normalized() + view_dir.normalized()).normalized(); // half vector of the two unit directions

Eigen::Vector3f Ls = ks.cwiseProduct(light.intensity / r);

Ls *= std::pow(std::max(0.0f, normal.normalized().dot(h)), p);

result_color += (La + Ld + Ls);

}

return result_color * 255.f;

}

// clamp coordinates

Eigen::Vector3f getColor(float u, float v)

{

// clamp u and v to [0, 1]

if (u < 0) u = 0;

if (u > 1) u = 1;

if (v < 0) v = 0;

if (v > 1) v = 1;

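auto u_img = std::min(static_cast<int>(u * width), width - 1); // u == 1 would otherwise index one past the last column

auto v_img = std::min(static_cast<int>((1 - v) * height), height - 1); // same for v == 0 and the last row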

auto color = image_data.at<cv::Vec3b>(v_img, u_img);

return Eigen::Vector3f(color[0], color[1], color[2]);

}
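The mapping from (u, v) to a pixel index is easy to get wrong at the edges, so here is a small standalone sketch of it. `uv_to_pixel` is a hypothetical helper, not part of the framework's Texture class; it assumes integer image dimensions and the flipped v axis described above.

```cpp
#include <algorithm>
#include <cassert>
#include <utility>

// Map (u, v) in [0, 1]^2 to a (column, row) pixel index.
// v is flipped because image rows grow downward while v grows upward.
std::pair<int, int> uv_to_pixel(float u, float v, int width, int height)
{
    assert(width > 0 && height > 0);
    u = std::max(0.0f, std::min(1.0f, u));
    v = std::max(0.0f, std::min(1.0f, v));
    int col = std::min(static_cast<int>(u * width), width - 1);
    int row = std::min(static_cast<int>((1.0f - v) * height), height - 1);
    return std::make_pair(col, row);
}
```

Clamping the index to width - 1 and height - 1 matters because u = 1 or v = 0 would otherwise land exactly one past the last column or row.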

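Result:

⑤ bump:

Two things to watch when implementing bump: when fetching the texture color, the offset added to u must be written 1.0f / w rather than 1 / w; and the final color must come from the normalized normal vector.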

Eigen::Vector3f bump_fragment_shader(const fragment_shader_payload& payload)

{

Eigen::Vector3f ka = Eigen::Vector3f(0.005, 0.005, 0.005);

Eigen::Vector3f kd = payload.color;

Eigen::Vector3f ks = Eigen::Vector3f(0.7937, 0.7937, 0.7937);

auto l1 = light{{20, 20, 20}, {500, 500, 500}};

auto l2 = light{{-20, 20, 0}, {500, 500, 500}};

std::vector<light> lights = {l1, l2};

Eigen::Vector3f amb_light_intensity{10, 10, 10};

Eigen::Vector3f eye_pos{0, 0, 10};

float p = 150;

Eigen::Vector3f color = payload.color;

Eigen::Vector3f point = payload.view_pos;

Eigen::Vector3f normal = payload.normal;

float kh = 0.2, kn = 0.1;

// TODO: Implement bump mapping here

// Let n = normal = (x, y, z)

// Vector t = (x*y/sqrt(x*x+z*z),sqrt(x*x+z*z),z*y/sqrt(x*x+z*z))

// Vector b = n cross product t

// Matrix TBN = [t b n]

// dU = kh * kn * (h(u+1/w,v)-h(u,v))

// dV = kh * kn * (h(u,v+1/h)-h(u,v))

// Vector ln = (-dU, -dV, 1)

// Normal n = normalize(TBN * ln)

float x = normal.x();

float y = normal.y();

float z = normal.z();

Eigen::Vector3f t{ x * y / std::sqrt(x * x + z * z), std::sqrt(x * x + z * z), z*y / std::sqrt(x * x + z * z) };

Eigen::Vector3f b = normal.cross(t);

Eigen::Matrix3f TBN;

TBN << t.x(), b.x(), normal.x(),

t.y(), b.y(), normal.y(),

t.z(), b.z(), normal.z();

float u = payload.tex_coords.x();

float v = payload.tex_coords.y();

float w = payload.texture->width;

float h = payload.texture->height;

float dU = kh * kn * (payload.texture->getColor(u + 1.0f / w , v).norm() - payload.texture->getColor(u, v).norm());

float dV = kh * kn * (payload.texture->getColor(u, v + 1.0f / h).norm() - payload.texture->getColor(u, v).norm());

Eigen::Vector3f ln{ -dU,-dV,1.0f };

normal = TBN * ln;

// normalize

Eigen::Vector3f result_color = normal.normalized();

return result_color * 255.f;

}
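The normal perturbation can be tried on its own with the height differences passed in directly. This is a minimal sketch under assumptions: `Vec3` and `perturb_normal` are hypothetical names, the tangent formula is copied from the assignment comments in the listing, and dU and dV stand in for the two texture lookups.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Hypothetical stand-in for Eigen::Vector3f.
using Vec3 = std::array<float, 3>;

static Vec3 cross(const Vec3& a, const Vec3& b)
{
    return Vec3{a[1] * b[2] - a[2] * b[1],
                a[2] * b[0] - a[0] * b[2],
                a[0] * b[1] - a[1] * b[0]};
}

// Returns normalize(TBN * (-dU, -dV, 1)) with TBN = [t b n] as columns.
Vec3 perturb_normal(const Vec3& n, float dU, float dV)
{
    float x = n[0], y = n[1], z = n[2];
    float s = std::sqrt(x * x + z * z);
    assert(s > 0.0f); // the tangent formula is undefined when n is parallel to the y axis
    Vec3 t{x * y / s, s, z * y / s};
    Vec3 b = cross(n, t);
    Vec3 out{};
    for (int k = 0; k < 3; ++k)
        out[k] = -dU * t[k] - dV * b[k] + n[k];
    float len = std::sqrt(out[0] * out[0] + out[1] * out[1] + out[2] * out[2]);
    assert(len > 0.0f);
    for (int k = 0; k < 3; ++k)
        out[k] /= len;
    return out;
}
```

With dU = dV = 0 the function returns the input normal unchanged, which is a handy check that the TBN columns are in the right order.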

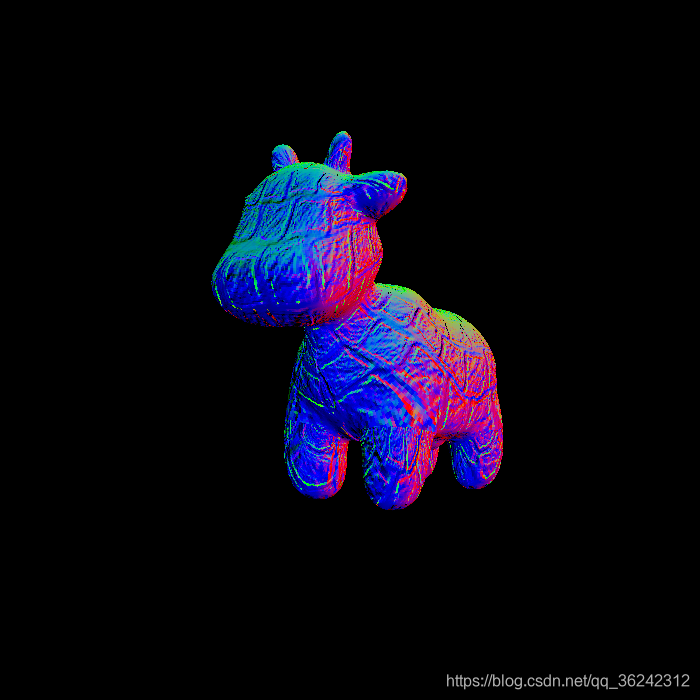

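Result:

⑥ displacement:

bump only visualizes the perturbed normal, derived from the height gradient, as the model's color; displacement builds on that by also moving the shading point and applying lighting.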

Eigen::Vector3f displacement_fragment_shader(const fragment_shader_payload& payload)

{

Eigen::Vector3f ka = Eigen::Vector3f(0.005, 0.005, 0.005);

Eigen::Vector3f kd = payload.color;

Eigen::Vector3f ks = Eigen::Vector3f(0.7937, 0.7937, 0.7937);

auto l1 = light{{20, 20, 20}, {500, 500, 500}};

auto l2 = light{{-20, 20, 0}, {500, 500, 500}};

std::vector<light> lights = {l1, l2};

Eigen::Vector3f amb_light_intensity{10, 10, 10};

Eigen::Vector3f eye_pos{0, 0, 10};

float p = 150;

Eigen::Vector3f color = payload.color;

Eigen::Vector3f point = payload.view_pos;

Eigen::Vector3f normal = payload.normal;

float kh = 0.2, kn = 0.1;

// TODO: Implement displacement mapping here

// Let n = normal = (x, y, z)

// Vector t = (x*y/sqrt(x*x+z*z),sqrt(x*x+z*z),z*y/sqrt(x*x+z*z))

// Vector b = n cross product t

// Matrix TBN = [t b n]

// dU = kh * kn * (h(u+1/w,v)-h(u,v))

// dV = kh * kn * (h(u,v+1/h)-h(u,v))

// Vector ln = (-dU, -dV, 1)

// Position p = p + kn * n * h(u,v)

// Normal n = normalize(TBN * ln)

float x = normal.x();

float y = normal.y();

float z = normal.z();

Eigen::Vector3f t{ x*y / std::sqrt(x*x + z * z), std::sqrt(x*x + z * z), z*y / std::sqrt(x*x + z * z) };

Eigen::Vector3f b = normal.cross(t);

Eigen::Matrix3f TBN;

TBN << t.x(), b.x(), normal.x(),

t.y(), b.y(), normal.y(),

t.z(), b.z(), normal.z();

float u = payload.tex_coords.x();

float v = payload.tex_coords.y();

float w = payload.texture->width;

float h = payload.texture->height;

float dU = kh * kn * (payload.texture->getColor(u + 1.0f / w, v).norm() - payload.texture->getColor(u , v).norm());

float dV = kh * kn * (payload.texture->getColor(u, v + 1.0f / h).norm() - payload.texture->getColor(u , v).norm());

Eigen::Vector3f ln{ -dU,-dV,1.0f };

// payload.texture->getColor(u, v).norm() can be read as the height h(u, v) taken from the displacement map

point += (kn * normal * payload.texture->getColor(u , v).norm());

normal = TBN * ln;

normal = normal.normalized();

Eigen::Vector3f result_color = {0, 0, 0};

for (auto& light : lights)

{

// TODO: For each light source in the code, calculate what the *ambient*, *diffuse*, and *specular*

// components are. Then, accumulate that result on the *result_color* object.

// light_dir and view_dir are computed from the displaced point, which is what produces the displacement effect

Eigen::Vector3f light_dir = light.position - point;

Eigen::Vector3f view_dir = eye_pos - point;

float r = light_dir.dot(light_dir);

// ambient

Eigen::Vector3f La = ka.cwiseProduct(amb_light_intensity);

// diffuse

Eigen::Vector3f Ld = kd.cwiseProduct(light.intensity / r);

Ld *= std::max(0.0f, normal.dot(light_dir.normalized()));

// specular

Eigen::Vector3f h = (light_dir.normalized() + view_dir.normalized()).normalized(); // half vector of the two unit directions

Eigen::Vector3f Ls = ks.cwiseProduct(light.intensity / r);

Ls *= std::pow(std::max(0.0f, normal.dot(h)), p);

result_color += (La + Ld + Ls);

}

return result_color * 255.f;

}
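The only step that separates this shader from bump is the position update p = p + kn * n * h(u, v). A tiny sketch of that step, with hypothetical names `Vec3` and `displace` and the height passed in as a plain float instead of a texture lookup:

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Hypothetical stand-in for Eigen::Vector3f.
using Vec3 = std::array<float, 3>;

// Move the shading point along the (original) normal by kn * height.
Vec3 displace(const Vec3& point, const Vec3& normal, float kn, float height)
{
    assert(std::isfinite(height));
    Vec3 out{};
    for (int k = 0; k < 3; ++k)
        out[k] = point[k] + kn * normal[k] * height;
    return out;
}
```

Note that the listing displaces along the normal before it is replaced by TBN * ln, so the order of those two statements matters.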

Result:

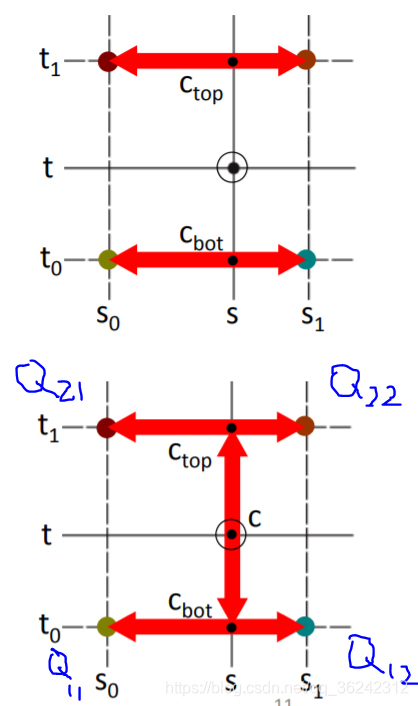

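⑦ Bilinear interpolation of the texture:

Given the current coordinates (u_img, v_img), first fetch the four neighboring texels and interpolate bilinearly between them. As in the figure below, s runs along the u direction and t along the v direction, so we take u_min, u_max, v_min, and v_max to identify the four neighbors, interpolate twice along u, then once along v, which gives the bilinear result:

Note also that the OpenCV image y axis and the uv v axis run in opposite directions: the image y coordinate increases downward while the corresponding v coordinate decreases. So when locating the neighbors of the center point, v_min actually lies above the center in the image and v_max lies below it.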

Eigen::Vector3f getColorBilinear(float u, float v)

{

if (u < 0) u = 0;

if (u > 1) u = 1;

if (v < 0) v = 0;

if (v > 1) v = 1;

auto u_img = std::min(u * width, (float)width - 1.0f); // keep the sample inside the image

auto v_img = std::min((1 - v) * height, (float)height - 1.0f);

float u_min = std::floor(u_img);

float u_max = std::min((float)width - 1.0f, u_min + 1.0f);

float v_min = std::floor(v_img);

float v_max = std::min((float)height - 1.0f, v_min + 1.0f);

auto Q11 = image_data.at<cv::Vec3b>(v_max, u_min);

auto Q12 = image_data.at<cv::Vec3b>(v_max, u_max);

auto Q21 = image_data.at<cv::Vec3b>(v_min, u_min);

auto Q22 = image_data.at<cv::Vec3b>(v_min, u_max);

// neighbors are one texel apart, so the weights need no division (it was 0 / 0 at integer coordinates)

float rs = u_img - u_min;

float rt = 1.0f - (v_img - v_min);

auto cBot = (1 - rs) * Q11 + rs * Q12;

auto cTop = (1 - rs) * Q21 + rs * Q22;

auto P = (1 - rt) * cBot + rt * cTop;

return Eigen::Vector3f(P[0], P[1], P[2]);

}
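The same scheme can be tested without OpenCV on a single-channel image stored in a flat array. The sketch below is an illustration under assumptions: `sample_bilinear` is a hypothetical name, the image is row-major, and x and y are already pixel coordinates (so the v flip has been applied by the caller).

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Bilinear sample of a row-major, single-channel image at pixel coordinates (x, y).
float sample_bilinear(const std::vector<float>& img, int width, int height,
                      float x, float y)
{
    assert(width > 0 && height > 0);
    assert(img.size() == static_cast<std::size_t>(width) * height);
    x = std::max(0.0f, std::min(x, static_cast<float>(width - 1)));
    y = std::max(0.0f, std::min(y, static_cast<float>(height - 1)));
    int x0 = static_cast<int>(std::floor(x));
    int y0 = static_cast<int>(std::floor(y));
    int x1 = std::min(x0 + 1, width - 1);   // clamp the right neighbor at the border
    int y1 = std::min(y0 + 1, height - 1);  // clamp the lower neighbor at the border
    float s = x - x0;                        // weight along x
    float t = y - y0;                        // weight along y
    float top = (1.0f - s) * img[y0 * width + x0] + s * img[y0 * width + x1];
    float bot = (1.0f - s) * img[y1 * width + x0] + s * img[y1 * width + x1];
    return (1.0f - t) * top + t * bot;
}
```

Sampling the exact center of a 2×2 image returns the average of its four texels, and sampling at an integer coordinate returns that texel unchanged.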

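Below is a comparison using the original texture downsampled to 300×300:

Downsampled texture:

Downsampled texture with bilinear interpolation: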

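This post walks through key steps of the rendering pipeline, including triangle rasterization, depth buffering, texture mapping, and bump and displacement mapping, with an implementation of each.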
