CRD-Backed

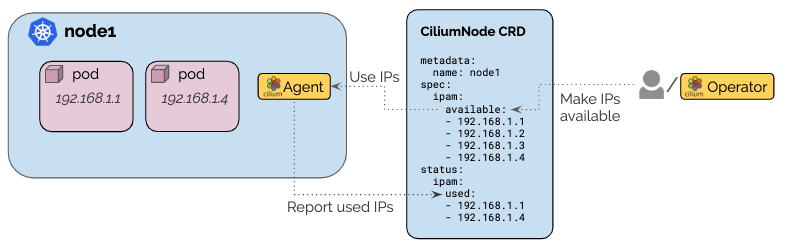

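Cilium's CRD-backed IPAM mode provides an interface for extending IP address management through Kubernetes custom resource definitions (CRDs). It allows IPAM to be configured per node and delegated to an external operator.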

Architecture

When this mode is enabled, the Cilium agent watches the ciliumnodes.cilium.io custom resource whose name matches the node the agent is running on.

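Whenever the custom resource is updated, the pool of IP addresses available to the node is taken from the spec.ipam.pool field. If an allocated IP is removed from the pool, the pod keeps using it and it is not reclaimed immediately, but once released it cannot be allocated again.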

When an IP address is allocated from the pool, it is recorded in the status.ipam.used field.

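Node status updates run at most once every 15 seconds. If many pods are scheduled at the same time, the status section may therefore lag behind the actual allocations.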
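The pool/used bookkeeping described above can be modelled with two maps. The following is a minimal in-memory sketch, not Cilium's allocator: the type nodeIPAM and its allocate/release methods are hypothetical, and IPs are picked in sorted string order only to keep the example deterministic. It shows the key rule: an IP removed from the pool stays allocated to its owner, but is never handed out again after release.

```go
package main

import (
	"errors"
	"fmt"
	"sort"
)

// nodeIPAM is a hypothetical in-memory model of one CiliumNode's IPAM data.
// pool mirrors spec.ipam.pool (IPs offered to the node);
// used mirrors status.ipam.used (IP -> owner).
type nodeIPAM struct {
	pool map[string]struct{}
	used map[string]string
}

// allocate hands out the first pool IP (in sorted string order) that is
// not already in use and records it in used with the given owner.
func (n *nodeIPAM) allocate(owner string) (string, error) {
	ips := make([]string, 0, len(n.pool))
	for ip := range n.pool {
		ips = append(ips, ip)
	}
	sort.Strings(ips) // deterministic order for the example only
	for _, ip := range ips {
		if _, taken := n.used[ip]; !taken {
			n.used[ip] = owner
			return ip, nil
		}
	}
	return "", errors.New("no IP available in pool")
}

// release frees an IP. Because allocate only considers IPs still listed in
// the pool, an IP removed from the pool while in use is not reused afterwards.
func (n *nodeIPAM) release(ip string) {
	delete(n.used, ip)
}

func main() {
	n := &nodeIPAM{
		pool: map[string]struct{}{"192.168.1.1": {}, "192.168.1.2": {}},
		used: map[string]string{},
	}
	a, _ := n.allocate("router") // 192.168.1.1
	delete(n.pool, a)            // removed from the pool, still used by "router"
	fmt.Println(n.used[a])       // router
	n.release(a)
	b, _ := n.allocate("pod-a") // 192.168.1.2; the removed IP is not handed out
	_, err := n.allocate("pod-b")
	fmt.Println(b, err)
}
```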

Configuration

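The CRD-backed IPAM mode is enabled by setting ipam: crd in the cilium-config ConfigMap or by passing the --ipam=crd option to the agent. Once the mode is enabled, the agent waits until the CiliumNode custom resource matching the Kubernetes node name becomes available with at least one IP address listed as available. If connectivity health checking is enabled, at least two IP addresses must be available.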

While waiting, the agent logs the following message:

```
Waiting for initial IP to become available in '<node-name>' custom resource
```
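The startup wait can be pictured as a polling loop. This is an illustration only: waitForInitialIPs and its fetchPool callback are hypothetical stand-ins for the agent watching its CiliumNode resource, and the extra IP required with health checking is assumed to go to the health endpoint, matching the router/health allocations in the status output later in this article.

```go
package main

import (
	"fmt"
	"log"
	"time"
)

// waitForInitialIPs polls fetchPool until the pool lists enough IPs and
// returns the number of polls it took. fetchPool is a hypothetical stand-in
// for reading spec.ipam.pool of the CiliumNode named nodeName.
func waitForInitialIPs(nodeName string, fetchPool func() map[string]struct{}, healthChecking bool, interval time.Duration) int {
	need := 1
	if healthChecking {
		need = 2 // assumed: one extra IP for the health endpoint
	}
	for polls := 1; ; polls++ {
		if len(fetchPool()) >= need {
			return polls
		}
		// Message text copied from the log line above; the real agent
		// uses its own structured logger.
		log.Printf("Waiting for initial IP to become available in '%s' custom resource", nodeName)
		time.Sleep(interval)
	}
}

func main() {
	snapshots := []map[string]struct{}{
		{},
		{"192.168.1.1": {}},
		{"192.168.1.1": {}, "192.168.1.2": {}},
	}
	i := 0
	fetch := func() map[string]struct{} {
		s := snapshots[i]
		if i < len(snapshots)-1 {
			i++
		}
		return s
	}
	// With health checking enabled, two IPs are needed: succeeds on poll 3.
	fmt.Println(waitForInitialIPs("k8s1", fetch, true, 10*time.Millisecond))
}
```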

Enable CRD IPAM mode

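- Install Cilium in Kubernetes.

- Run Cilium with the --ipam=crd option, or set ipam: crd in the Cilium ConfigMap.

- Restart Cilium. Cilium automatically registers the CRD if it is not already available, and the agent logs:

```
msg="Waiting for initial IP to become available in 'k8s1' custom resource" subsys=ipam
```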

- Verify that the CRD has been registered:

```
$ kubectl get crds
NAME                    CREATED AT
[...]
ciliumnodes.cilium.io   2019-06-08T12:26:41Z
```

Create a CiliumNode CR

- Import the following custom resource so that the Cilium agent has IPs available:

```yaml
apiVersion: "cilium.io/v2"
kind: CiliumNode
metadata:
  name: "k8s1"
spec:
  ipam:
    pool:
      192.168.1.1: {}
      192.168.1.2: {}
      192.168.1.3: {}
      192.168.1.4: {}
```

- Verify that the Cilium agent started correctly:

```
$ cilium status --all-addresses
KVStore:    Ok   etcd: 1/1 connected, has-quorum=true: https://192.168.60.11:2379 - 3.3.12 (Leader)
[...]
IPAM:       IPv4: 2/4 allocated,
Allocated addresses:
  192.168.1.1 (router)
  192.168.1.3 (health)
```

- Verify the status.ipam.used section:

```yaml
$ kubectl get cn k8s1 -o yaml
apiVersion: cilium.io/v2
kind: CiliumNode
metadata:
  name: k8s1
  [...]
spec:
  ipam:
    pool:
      192.168.1.1: {}
      192.168.1.2: {}
      192.168.1.3: {}
      192.168.1.4: {}
status:
  ipam:
    used:
      192.168.1.1:
        owner: router
      192.168.1.3:
        owner: health
```
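The same check can be scripted against the output of kubectl get cn k8s1 -o json. This is a minimal sketch assuming only the spec.ipam.pool and status.ipam.used fields matter; summarize is a hypothetical helper, and its "used/pool" figure only approximates the IPAM line printed by cilium status.

```go
package main

import (
	"encoding/json"
	"fmt"
	"sort"
)

type allocationIP struct {
	Owner string `json:"owner,omitempty"`
}

// ciliumNodeIPAM keeps only the spec.ipam.pool and status.ipam.used fields.
type ciliumNodeIPAM struct {
	Spec struct {
		IPAM struct {
			Pool map[string]allocationIP `json:"pool"`
		} `json:"ipam"`
	} `json:"spec"`
	Status struct {
		IPAM struct {
			Used map[string]allocationIP `json:"used"`
		} `json:"ipam"`
	} `json:"status"`
}

// summarize is a hypothetical helper returning "IPv4: used/pool allocated"
// and one "IP (owner)" line per used address, sorted.
func summarize(raw []byte) (string, []string, error) {
	var cn ciliumNodeIPAM
	if err := json.Unmarshal(raw, &cn); err != nil {
		return "", nil, err
	}
	var lines []string
	for ip, a := range cn.Status.IPAM.Used {
		lines = append(lines, fmt.Sprintf("%s (%s)", ip, a.Owner))
	}
	sort.Strings(lines)
	summary := fmt.Sprintf("IPv4: %d/%d allocated", len(cn.Status.IPAM.Used), len(cn.Spec.IPAM.Pool))
	return summary, lines, nil
}

func main() {
	// Trimmed JSON equivalent of the k8s1 object shown above.
	raw := []byte(`{
  "spec": {"ipam": {"pool": {"192.168.1.1": {}, "192.168.1.2": {}, "192.168.1.3": {}, "192.168.1.4": {}}}},
  "status": {"ipam": {"used": {"192.168.1.1": {"owner": "router"}, "192.168.1.3": {"owner": "health"}}}}
}`)
	summary, owners, err := summarize(raw)
	if err != nil {
		panic(err)
	}
	fmt.Println(summary) // IPv4: 2/4 allocated
	for _, o := range owners {
		fmt.Println(o)
	}
}
```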

Currently, the pool only accepts individual IP addresses; CIDRs are not supported.

Permissions

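The custom resource requires the following additional permissions. They are granted automatically when Cilium is deployed with the standard Cilium artifacts: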

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: cilium
rules:
- apiGroups:
  - cilium.io
  resources:
  - ciliumnodes
  - ciliumnodes/status
  verbs:
  - '*'
```

CRD Definition

The CiliumNode custom resource is modeled after standard Kubernetes resources and is split into a spec and a status section:

```go
type CiliumNode struct {
	// [...]

	// Spec is the specification of the node
	Spec NodeSpec `json:"spec"`

	// Status is the status of the node
	Status NodeStatus `json:"status"`
}
```

IPAM Spec

The spec section embeds an IPAM-specific field that defines the list of all IPs available to the node for allocation:

```go
// AllocationMap is a map of allocated IPs indexed by IP
type AllocationMap map[string]AllocationIP

// NodeSpec is the configuration specific to a node
type NodeSpec struct {
	// [...]

	// IPAM is the address management specification. This section can be
	// populated by a user or it can be automatically populated by an IPAM
	// operator
	//
	// +optional
	IPAM IPAMSpec `json:"ipam,omitempty"`
}

// IPAMSpec is the IPAM specification of the node
type IPAMSpec struct {
	// Pool is the list of IPs available to the node for allocation. When
	// an IP is used, the IP will remain on this list but will be added to
	// Status.IPAM.InUse
	//
	// +optional
	Pool AllocationMap `json:"pool,omitempty"`
}

// AllocationIP is an IP available for allocation or already allocated
type AllocationIP struct {
	// Owner is the owner of the IP, this field is set if the IP has been
	// allocated. It will be set to the pod name or another identifier
	// representing the usage of the IP
	//
	// The owner field is left blank for an entry in Spec.IPAM.Pool
	// and filled out as the IP is used and also added to
	// Status.IPAM.InUse.
	//
	// +optional
	Owner string `json:"owner,omitempty"`

	// Resource is set for both available and allocated IPs, it represents
	// what resource the IP is associated with, e.g. in combination with
	// AWS ENI, this will refer to the ID of the ENI
	//
	// +optional
	Resource string `json:"resource,omitempty"`
}
```

IPAM Status

The status section contains an IPAM-specific field. The IPAM status reports all addresses in use on the node:

```go
// NodeStatus is the status of a node
type NodeStatus struct {
	// [...]

	// IPAM is the IPAM status of the node
	//
	// +optional
	IPAM IPAMStatus `json:"ipam,omitempty"`
}

// IPAMStatus is the IPAM status of a node
type IPAMStatus struct {
	// InUse lists all IPs out of Spec.IPAM.Pool which have been
	// allocated and are in use.
	//
	// +optional
	InUse AllocationMap `json:"used,omitempty"`
}
```
