Assignment: implement a two-layer neural network, including the forward pass (FP) and backpropagation (BP), and test it on CIFAR-10.

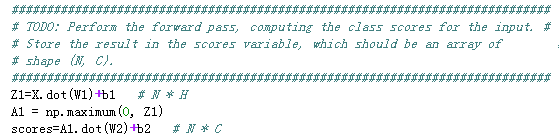

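Compute the scores. ReLU is the activation of the hidden layer, not the whole score function: the network first computes $h = \max(0, XW_1 + b_1)$ and then the class scores $s = hW_2 + b_2$: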

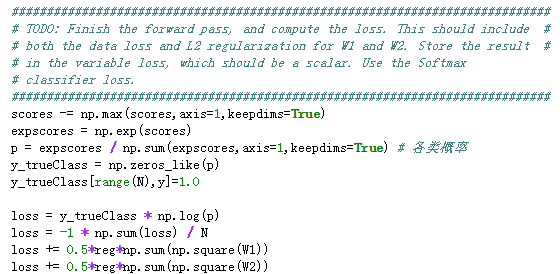
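A minimal NumPy sketch of this forward pass, assuming each row of `X` is one flattened example (shape `(N, D)`). The function name `forward_scores` and the toy sizes are illustrative, not taken from the assignment code.

```python
import numpy as np

def forward_scores(X, W1, b1, W2, b2):
    """Forward pass of a two-layer net: affine -> ReLU -> affine.

    X:  (N, D) inputs, one row per example
    W1: (D, H), b1: (H,)  first-layer weights and biases
    W2: (H, C), b2: (C,)  second-layer weights and biases
    Returns class scores (N, C) and hidden activations (N, H).
    """
    hidden = np.maximum(0, X @ W1 + b1)   # ReLU applied elementwise
    scores = hidden @ W2 + b2             # no nonlinearity on the output layer
    return scores, hidden

# Toy usage with random data (sizes chosen arbitrarily)
rng = np.random.default_rng(0)
N, D, H, C = 5, 4, 10, 3
X = rng.standard_normal((N, D))
W1, b1 = 0.1 * rng.standard_normal((D, H)), np.zeros(H)
W2, b2 = 0.1 * rng.standard_normal((H, C)), np.zeros(C)
scores, hidden = forward_scores(X, W1, b1, W2, b2)
print(scores.shape)  # (5, 3)
```

The hidden activations are returned as well because the backward pass needs them.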

Compute the loss, using the softmax (cross-entropy) loss plus L2 regularization:

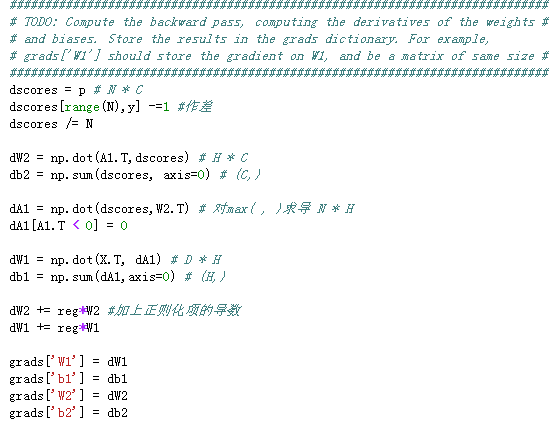
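A sketch of this loss in NumPy. It assumes the regularization term is `reg * (sum(W1**2) + sum(W2**2))`; some versions of the formula use `0.5 * reg` instead, so check this against the formula above. The name `softmax_loss_l2` is hypothetical.

```python
import numpy as np

def softmax_loss_l2(scores, y, W1, W2, reg):
    """Average softmax cross-entropy loss plus L2 regularization.

    scores: (N, C) class scores; y: (N,) integer labels in [0, C).
    Assumed regularization convention: reg * (sum(W1**2) + sum(W2**2)).
    """
    N = scores.shape[0]
    shifted = scores - scores.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    data_loss = -log_probs[np.arange(N), y].mean()
    reg_loss = reg * (np.sum(W1 * W1) + np.sum(W2 * W2))
    return data_loss + reg_loss

# Sanity check: with all-zero scores over 10 classes, every class has probability 1/10,
# so the data loss is log(10)
scores = np.zeros((4, 10))
y = np.array([0, 3, 7, 9])
W1, W2 = np.zeros((3, 5)), np.zeros((5, 10))
print(round(softmax_loss_l2(scores, y, W1, W2, reg=0.0), 4))  # 2.3026
```

The same check is useful on the real network: with small random initial weights, the loss on CIFAR-10 should start near log(10) ≈ 2.3.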

Compute the gradients with backpropagation:

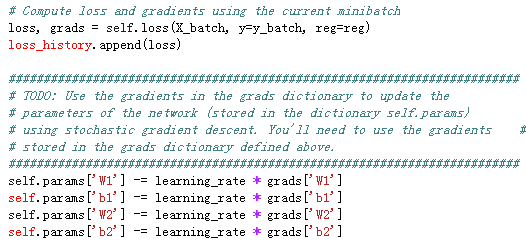
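A self-contained sketch of the forward and backward passes together, plus a centered-difference gradient check. It assumes the same `reg * sum(W**2)` convention as above (hence the `2 * reg * W` terms); `loss_and_grads`, `numerical_grad`, and the `params` dictionary layout are illustrative names.

```python
import numpy as np

def loss_and_grads(X, y, params, reg):
    """Loss and gradients for affine -> ReLU -> affine -> softmax.

    params: dict with 'W1' (D,H), 'b1' (H,), 'W2' (H,C), 'b2' (C,).
    Assumed regularization: reg * (sum(W1**2) + sum(W2**2)).
    """
    W1, b1, W2, b2 = params['W1'], params['b1'], params['W2'], params['b2']
    N = X.shape[0]

    # Forward pass
    z1 = X @ W1 + b1
    h = np.maximum(0, z1)
    scores = h @ W2 + b2
    shifted = scores - scores.max(axis=1, keepdims=True)
    probs = np.exp(shifted)
    probs /= probs.sum(axis=1, keepdims=True)
    loss = -np.log(probs[np.arange(N), y]).mean()
    loss += reg * (np.sum(W1 * W1) + np.sum(W2 * W2))

    # Backward pass: d(loss)/d(scores) for softmax cross-entropy is (probs - one_hot) / N
    dscores = probs.copy()
    dscores[np.arange(N), y] -= 1
    dscores /= N

    grads = {}
    grads['W2'] = h.T @ dscores + 2 * reg * W2
    grads['b2'] = dscores.sum(axis=0)
    dh = dscores @ W2.T
    dz1 = dh * (z1 > 0)  # ReLU passes gradient only where its input was positive
    grads['W1'] = X.T @ dz1 + 2 * reg * W1
    grads['b1'] = dz1.sum(axis=0)
    return loss, grads


def numerical_grad(f, x, step=1e-5):
    """Centered-difference gradient of scalar f() w.r.t. array x (perturbed in place, then restored)."""
    grad = np.zeros_like(x)
    it = np.nditer(x, flags=['multi_index'])
    while not it.finished:
        idx = it.multi_index
        old = x[idx]
        x[idx] = old + step
        f_plus = f()
        x[idx] = old - step
        f_minus = f()
        x[idx] = old
        grad[idx] = (f_plus - f_minus) / (2 * step)
        it.iternext()
    return grad

# Compare analytic and numerical gradients on a tiny random problem
rng = np.random.default_rng(1)
X = rng.standard_normal((5, 4))
y = np.array([0, 1, 2, 2, 1])
params = {
    'W1': 0.1 * rng.standard_normal((4, 10)), 'b1': np.zeros(10),
    'W2': 0.1 * rng.standard_normal((10, 3)), 'b2': np.zeros(3),
}
reg = 0.05
loss, grads = loss_and_grads(X, y, params, reg)
errors = {}
for name in params:
    num = numerical_grad(lambda: loss_and_grads(X, y, params, reg)[0], params[name])
    denom = np.maximum(1e-8, np.abs(num) + np.abs(grads[name]))
    errors[name] = np.max(np.abs(num - grads[name]) / denom)
print(errors)
```

If the backward pass is correct, every relative error should be very small; a large value points to the specific parameter whose gradient is wrong.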

Train the model with stochastic gradient descent (SGD):

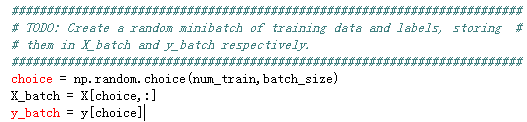

First, sample a minibatch:
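One way to sample a minibatch with NumPy, assuming the training data is held as arrays `X` (one example per row) and `y`. The helper `sample_minibatch` is hypothetical; it draws indices with `Generator.choice`.

```python
import numpy as np

def sample_minibatch(X, y, batch_size, rng):
    """Pick batch_size random rows of X together with their labels.

    Sampling with replacement (replace=True) is cheap and works fine for SGD;
    replace=False avoids duplicates within one batch.
    """
    idx = rng.choice(X.shape[0], size=batch_size, replace=True)
    return X[idx], y[idx]

rng = np.random.default_rng(0)
X = np.arange(20, dtype=float).reshape(10, 2)  # 10 toy examples, 2 features
y = np.arange(10)                              # label i for row i
X_batch, y_batch = sample_minibatch(X, y, batch_size=4, rng=rng)
```

Indexing `X` and `y` with the same `idx` keeps each example paired with its own label.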

Then update the parameters:
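A sketch of the plain SGD update, assuming parameters and gradients are stored in dictionaries with matching keys (an illustrative layout). The values in the example are made up.

```python
import numpy as np

def sgd_step(params, grads, learning_rate):
    """Vanilla SGD update: step each parameter against its gradient, in place."""
    for name, grad in grads.items():
        params[name] -= learning_rate * grad

# Toy example with made-up parameter and gradient values
params = {'W': np.array([1.0, -2.0]), 'b': np.array([0.5])}
grads = {'W': np.array([0.5, -1.0]), 'b': np.array([1.0])}
sgd_step(params, grads, learning_rate=0.1)
# params['W'] is now approximately [0.95, -1.9], params['b'] approximately [0.4]
```

The in-place `-=` matters: any other code holding a reference to the same arrays sees the updated weights.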

The final accuracy reached only about 29%.
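Accuracy here is the fraction of examples whose highest-scoring class matches the label. With 10 classes, random guessing gives about 10%, so 29% is better than chance but still low. A minimal sketch of the prediction and accuracy computation, using hypothetical helper names:

```python
import numpy as np

def predict(X, W1, b1, W2, b2):
    """Predicted class = index of the largest score from the two-layer net."""
    hidden = np.maximum(0, X @ W1 + b1)
    scores = hidden @ W2 + b2
    return np.argmax(scores, axis=1)

def accuracy(y_pred, y_true):
    """Fraction of predictions equal to the true labels."""
    return np.mean(y_pred == y_true)

# Hand-built toy network: identity weights, so the scores equal the inputs
X = np.array([[1.0, 0.0], [0.0, 1.0], [2.0, 0.0]])
W1, b1 = np.eye(2), np.zeros(2)
W2, b2 = np.eye(2), np.zeros(2)
y_pred = predict(X, W1, b1, W2, b2)
acc = accuracy(y_pred, np.array([0, 1, 1]))
```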

That is quite low, so the model needs debugging. Two useful diagnostics are to plot the loss function and the accuracies on the training and validation sets during optimization, and to visualize the weights learned in the first layer of the network.
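A sketch of the second diagnostic. It assumes each column of `W1` holds one hidden unit's weights over a CIFAR-10 image flattened from shape `(32, 32, 3)`; `weights_to_grid` is a hypothetical helper that tiles these weights into one image, which can then be displayed with matplotlib's `plt.imshow`.

```python
import numpy as np

def weights_to_grid(W1, img_shape=(32, 32, 3), padding=1):
    """Arrange each hidden unit's incoming weights as a tile in a square grid.

    W1: (D, H) with D = 32*32*3 for CIFAR-10, assuming each input row was
    flattened from a (32, 32, 3) image. Each tile is min-max scaled to
    [0, 255] independently. Returns a uint8 image array.
    """
    H = W1.shape[1]
    h, w, c = img_shape
    tiles = W1.T.reshape(H, h, w, c)          # one (32, 32, 3) tile per hidden unit
    grid_size = int(np.ceil(np.sqrt(H)))
    grid_h = grid_size * h + (grid_size - 1) * padding
    grid_w = grid_size * w + (grid_size - 1) * padding
    grid = np.zeros((grid_h, grid_w, c))
    for k in range(H):
        row, col = divmod(k, grid_size)
        tile = tiles[k]
        lo, hi = tile.min(), tile.max()
        scaled = 255.0 * (tile - lo) / (hi - lo + 1e-8)
        y0, x0 = row * (h + padding), col * (w + padding)
        grid[y0:y0 + h, x0:x0 + w] = scaled
    return grid.astype(np.uint8)

# Example with random (untrained) weights for 50 hidden units
rng = np.random.default_rng(0)
W1 = rng.standard_normal((32 * 32 * 3, 50))
grid = weights_to_grid(W1)
```

For a poorly trained network, the tiles tend to look like noise; after good training, they usually show smoother color and edge patterns.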

Several things are worth tuning:

1. hidden layer size
2. learning rate
3. number of training epochs
4. regularization strength
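A sketch of a grid search over these four hyperparameters that keeps the configuration with the best validation score. `train_and_eval` is a hypothetical callable you would supply (for example, one that trains the two-layer net and returns its validation accuracy). The toy objective below only stands in for real training so the search logic can be run.

```python
import itertools

def grid_search(train_and_eval, hidden_sizes, learning_rates, epoch_options, regs):
    """Try every combination and keep the one with the best validation score.

    train_and_eval(hidden_size, learning_rate, num_epochs, reg) -> score
    is a hypothetical callable supplied by the caller.
    """
    best_score, best_cfg = float('-inf'), None
    results = {}
    for cfg in itertools.product(hidden_sizes, learning_rates, epoch_options, regs):
        score = train_and_eval(*cfg)
        results[cfg] = score
        if score > best_score:
            best_score, best_cfg = score, cfg
    return best_cfg, best_score, results

def toy_objective(hidden_size, learning_rate, num_epochs, reg):
    # Stand-in for real training: peaks at hidden_size=100, lr=1e-3, 10 epochs, reg=0.25
    return (-abs(hidden_size - 100) - abs(learning_rate - 1e-3) * 1e3
            - abs(num_epochs - 10) - abs(reg - 0.25))

best_cfg, best_score, results = grid_search(
    toy_objective, [50, 100, 200], [1e-4, 1e-3, 1e-2], [5, 10], [0.1, 0.25, 0.5])
print(best_cfg)  # (100, 0.001, 10, 0.25)
```

Each real configuration requires a full training run, so a coarse grid (or random search) over the learning rate and regularization strength is usually tried first, then refined around the best values.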

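This post describes how to implement a simple two-layer neural network, including forward propagation (FP) and backpropagation (BP). Using the ReLU activation and the softmax loss, the network is trained and tested on the CIFAR-10 dataset. It also discusses how to improve the model by tuning parameters such as the hidden layer size and the learning rate.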
