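Environment: JDK 1.8 + Scala 2.11.8 + Spark 2.2.1 + Hadoop 2.7.1

Spark 2.2.1 pseudo-distributed installation: JDK, Hadoop, and Scala must be installed first.

Download the Spark package, upload it to the server, and extract it.

Configure spark/conf/spark-env.sh

Remember to make a copy of the original template first: cp spark-env.sh.template spark-env.sh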

vim spark-env.sh

export JAVA_HOME=/usr/local/jdk

export SCALA_HOME=/usr/local/scala

export SPARK_WORKER_MEMORY=1G  # size this to your machine; it can also be left out of the config file

export HADOOP_HOME=/usr/local/hadoop

export HADOOP_CONF_DIR=/usr/local/hadoop/etc/hadoop

export SPARK_MASTER_IP=localhost  # deprecated in Spark 2.x in favor of SPARK_MASTER_HOST
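Before starting Spark, it can help to confirm that the directories named in spark-env.sh actually exist. The following is a minimal sketch, not part of the original setup: the function name `check_spark_env` is hypothetical, and it only checks the four directory variables from the configuration above.

```shell
#!/bin/sh
# Sketch: report whether the directory variables set in a spark-env.sh
# file point at existing directories. check_spark_env is a hypothetical
# helper name, not something shipped with Spark.
check_spark_env() {
    env_file="$1"
    (
        # Source the file in a subshell so the caller's environment is untouched.
        . "$env_file" || exit 2
        status=0
        for name in JAVA_HOME SCALA_HOME HADOOP_HOME HADOOP_CONF_DIR; do
            eval "value=\${$name:-}"
            if [ -d "$value" ]; then
                echo "ok      $name=$value"
            else
                echo "missing $name=$value"
                status=1
            fi
        done
        exit "$status"
    )
}
```

A possible call, assuming you are in spark/conf, would be `check_spark_env ./spark-env.sh`; the function returns a non-zero status if any directory is missing.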

When that is done, cd ../sbin

Start the Spark processes: ./start-all.sh

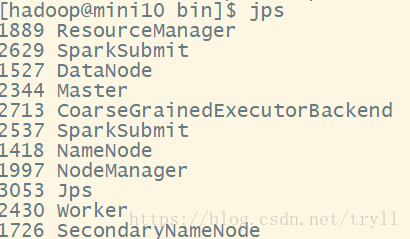

Check with jps

Two new processes appear: Master and Worker.
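The jps check can also be scripted. This is a small sketch that assumes jps prints one `<pid> <name>` pair per line; the function name `has_spark_daemons` is made up for illustration.

```shell
#!/bin/sh
# Sketch: succeed only if both Master and Worker appear in jps-style
# output read from standard input. has_spark_daemons is a hypothetical name.
has_spark_daemons() {
    awk '
        $2 == "Master" { m = 1 }
        $2 == "Worker" { w = 1 }
        END { exit !(m && w) }
    '
}
```

It could be used as `jps | has_spark_daemons && echo "Spark standalone daemons are up"`.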


Next, let's try out spark-sql.

First, start spark-sql:

cd ../bin and run ./spark-sql --master spark://172.16.0.37:7077 --executor-memory 1g

Here is the startup log:
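Besides the interactive prompt, spark-sql accepts the Hive-CLI-style `-e` option to run a single statement and exit. The wrapper below is only a sketch: `run_sql` and the `SPARK_SQL` override variable are hypothetical names, and the master URL is the one used in this post.

```shell
#!/bin/sh
# Sketch: run one SQL statement through spark-sql without entering the
# interactive prompt. SPARK_SQL is a hypothetical override for the path
# to the launcher; by default it assumes the current directory is spark/bin.
SPARK_SQL="${SPARK_SQL:-./spark-sql}"

run_sql() {
    master="$1"
    query="$2"
    "$SPARK_SQL" --master "$master" --executor-memory 1g -e "$query"
}
```

For example, `run_sql spark://172.16.0.37:7077 'SHOW DATABASES;'` would submit the statement to the standalone master started earlier.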

18/08/16 08:23:24 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

18/08/16 08:23:24 INFO metastore.HiveMetaStore: 0: Opening raw store with implemenation class:org.apache.hadoop.hive.metastore.ObjectStore

18/08/16 08:23:24 INFO metastore.ObjectStore: ObjectStore, initialize called

18/08/16 08:23:24 INFO DataNucleus.Persistence: Property hive.metastore.integral.jdo.pushdown unknown - will be ignored

18/08/16 08:23:24 INFO DataNucleus.Persistence: Property datanucleus.cache.level2 unknown - will be ignored

18/08/16 08:23:26 INFO metastore.ObjectStore: Setting MetaStore object pin classes with hive.metastore.cache.pinobjtypes="Table,StorageDescriptor,SerDeInfo,Partition,Database,Type,FieldSchema,Order"

18/08/16 08:23:27 INFO DataNucleus.Datastore: The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.

18/08/16 08:23:27 INFO DataNucleus.Datastore: The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.

18/08/16 08:23:28 INFO DataNucleus.Datastore: The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.

18/08/16 08:23:28 INFO DataNucleus.Datastore: The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.

18/08/16 08:23:29 INFO metastore.MetaStoreDirectSql: Using direct SQL, underlying DB is DERBY

18/08/16 08:23:29 INFO metastore.ObjectStore: Initialized ObjectStore

18/08/16 08:23:29 WARN metastore.ObjectStore: Version information not found in metastore. hive.metastore.schema.verification is not enabled so recording the schema version 1.2.0

18/08/16 08:23:29 WARN metastore.ObjectStore: Failed to get database default, returning NoSuchObjectException

18/08/16 08:23:30 INFO metastore.HiveMetaStore: Added admin role in metastore

18/08/16 08:23:30 INFO metastore.HiveMetaStore: Added public role in metastore

18/08/16 08:23:30 INFO metastore.HiveMetaStore: No user is added in admin role, since config is empty

18/08/16 08:23:30 INFO metastore.HiveMetaStore: 0: get_all_databases

18/08/16 08:23:30 INFO HiveMetaStore.audit: ugi=hadoop ip=unknown-ip-addr cmd=get_all_databases

18/08/16 08:23:30 INFO metastore.HiveMetaStore: 0: get_functions: db=default pat=*

18/08/16 08:23:30 INFO HiveMetaStore.audit: ugi=hadoop ip=unknown-ip-addr cmd=get_functions: db=default pat=*

18/08/16 08:23:30 INFO DataNucleus.Datastore: The class "org.apache.hadoop.hive.metastore.model.MResourceUri" is tagged as "embedded-only" so does not have its own datastore table.

18/08/16 08:23:30 INFO session.SessionState: Created local directory: /tmp/3455c021-8c84-49a1-ad3b-037d5c706f68_resources

18/08/16 08:23:30 INFO session.SessionState: Created HDFS directory: /tmp/hive/hadoop/3455c021-8c84-49a1-ad3b-037d5c706f68

18/08/16 08:23:30 INFO session.SessionState: Created local directory: /tmp/hadoop/3455c021-8c84-49a1-ad3b-037d5c706f68

18/08/16 08:23:30 INFO session.SessionState: Created HDFS directory: /tmp/hive/hadoop/3455c021-8c84-49a1-ad3b-037d5c706f68/_tmp_space.db

18/08/16 08:23:30 INFO spark.SparkContext: Running Spark version 2.2.1

18/08/16 08:23:30 INFO spark.SparkContext: Submitted application: SparkSQL::172.16.0.37

18/08/16 08:23:30 INFO spark.SecurityManager: Changing view acls to: hadoop

18/08/16 08:23:30 INFO spark.SecurityManager: Changing modify acls to: hadoop

18/08/16 08:23:30 INFO spark.SecurityManager: Changing view acls groups to:

18/08/16 08:23:30 INFO spark.SecurityManager: Changing modify acls groups to:

18/08/16 08:23:30 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); groups with view permissions: Set(); users with modify permissions: Set(hadoop); groups with modify permissions: Set()

18/08/16 08:23:31 INFO util.Utils: Successfully started service 'sparkDriver' on port 33900.

18/08/16 08:23:31 INFO spark.SparkEnv: Registering MapOutputTracker

18/08/16 08:23:31 INFO spark.SparkEnv: Registering BlockManagerMaster

18/08/16 08:23:31 INFO storage.BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

18/08/16 08:23:31 INFO storage.BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

18/08/16 08:23:31 INFO storage.DiskBlockManager: Created local directory at /tmp/blockmgr-730e28b9-c02c-4d5c-8b65-d8a490c234cf

18/08/16 08:23:31 INFO memory.MemoryStore: MemoryStore started with capacity 366.3 MB

18/08/16 08:23:31 INFO spark.SparkEnv: Registering OutputCommitCoordinator

18/08/16 08:23:31 INFO util.log: Logging initialized @8995ms

18/08/16 08:23:31 INFO server.Server: jetty-9.3.z-SNAPSHOT

18/08/16 08:23:31 INFO server.Server: Started @9087ms

18/08/16 08:23:31 WARN util.Utils: Service 'SparkUI' could not bind on port 4040. Attempting port 4041.

18/08/16 08:23:31 INFO server.AbstractConnector: Started ServerConnector@6533927b{HTTP/1.1,[http/1.1]}{0.0.0.0:4041}

18/08/16 08:23:31 INFO util.Utils: Successfully started service 'SparkUI' on port 4041.

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5e5a8718{/jobs,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@38f617f4{/jobs/json,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3dea226b{/jobs/job,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7fc7152e{/jobs/job/json,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6704df84{/stages,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@53bb71e5{/stages/json,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@15994b0b{/stages/stage,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@13aed42b{/stages/stage/json,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@78065fcd{/stages/pool,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@51ed2f68{/stages/pool/json,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@19b9f903{/storage,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@28cb86b2{/storage/json,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@748904e8{/storage/rdd,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3b3056a6{/storage/rdd/json,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@51d8f2f2{/environment,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@72fb989b{/environment/json,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@597a7afa{/executors,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7cdb7fc{/executors/json,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@586843bc{/executors/threadDump,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7d8b66d9{/executors/threadDump/json,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4ff66917{/static,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@40c76f5a{/,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5546e754{/api,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@43e3a390{/jobs/job/kill,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@50dc49e1{/stages/stage/kill,null,AVAILABLE,@Spark}

18/08/16 08:23:31 INFO ui.SparkUI: Bound SparkUI to 0.0.0.0, and started at http://172.16.0.37:4041

18/08/16 08:23:31 INFO client.StandaloneAppClient$ClientEndpoint: Connecting to master spark://172.16.0.37:7077...

18/08/16 08:23:31 INFO client.TransportClientFactory: Successfully created connection to /172.16.0.37:7077 after 27 ms (0 ms spent in bootstraps)

18/08/16 08:23:32 INFO cluster.StandaloneSchedulerBackend: Connected to Spark cluster with app ID app-20180816082331-0000

18/08/16 08:23:32 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 44012.

18/08/16 08:23:32 INFO netty.NettyBlockTransferService: Server created on 172.16.0.37:44012

18/08/16 08:23:32 INFO storage.BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

18/08/16 08:23:32 INFO storage.BlockManagerMaster: Registering BlockManager BlockManagerId(driver, 172.16.0.37, 44012, None)

18/08/16 08:23:32 INFO storage.BlockManagerMasterEndpoint: Registering block manager 172.16.0.37:44012 with 366.3 MB RAM, BlockManagerId(driver, 172.16.0.37, 44012, None)

18/08/16 08:23:32 INFO storage.BlockManagerMaster: Registered BlockManager BlockManagerId(driver, 172.16.0.37, 44012, None)

18/08/16 08:23:32 INFO storage.BlockManager: Initialized BlockManager: BlockManagerId(driver, 172.16.0.37, 44012, None)

18/08/16 08:23:32 INFO client.StandaloneAppClient$ClientEndpoint: Executor added: app-20180816082331-0000/0 on worker-20180816081203-172.16.0.37-37180 (172.16.0.37:37180) with 3 cores

18/08/16 08:23:32 INFO cluster.StandaloneSchedulerBackend: Granted executor ID app-20180816082331-0000/0 on hostPort 172.16.0.37:37180 with 3 cores, 700.0 MB RAM

18/08/16 08:23:32 INFO client.StandaloneAppClient$ClientEndpoint: Executor updated: app-20180816082331-0000/0 is now RUNNING

18/08/16 08:23:32 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@45e11627{/metrics/json,null,AVAILABLE,@Spark}

18/08/16 08:23:32 INFO cluster.StandaloneSchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.0

18/08/16 08:23:32 INFO internal.SharedState: Setting hive.metastore.warehouse.dir ('null') to the value of spark.sql.warehouse.dir ('file:/home/hadoop/apps/spark-2.2.1-bin-hadoop2.7/bin/spark-warehouse').

18/08/16 08:23:32 INFO internal.SharedState: Warehouse path is 'file:/home/hadoop/apps/spark-2.2.1-bin-hadoop2.7/bin/spark-warehouse'.

18/08/16 08:23:32 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5d27b4c1{/SQL,null,AVAILABLE,@Spark}

18/08/16 08:23:32 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4b508371{/SQL/json,null,AVAILABLE,@Spark}

18/08/16 08:23:32 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@270f28cf{/SQL/execution,null,AVAILABLE,@Spark}

18/08/16 08:23:32 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@467af68c{/SQL/execution/json,null,AVAILABLE,@Spark}

18/08/16 08:23:32 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@12c76d6e{/static/sql,null,AVAILABLE,@Spark}

18/08/16 08:23:32 INFO hive.HiveUtils: Initializing HiveMetastoreConnection version 1.2.1 using Spark classes.

18/08/16 08:23:33 INFO metastore.HiveMetaStore: 0: Opening raw store with implemenation class:org.apache.hadoop.hive.metastore.ObjectStore

18/08/16 08:23:33 INFO metastore.ObjectStore: ObjectStore, initialize called

18/08/16 08:23:33 INFO DataNucleus.Persistence: Property hive.metastore.integral.jdo.pushdown unknown - will be ignored

18/08/16 08:23:33 INFO DataNucleus.Persistence: Property datanucleus.cache.level2 unknown - will be ignored

18/08/16 08:23:35 INFO cluster.CoarseGrainedSchedulerBackend$DriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (172.16.0.37:49208) with ID 0

18/08/16 08:23:35 INFO storage.BlockManagerMasterEndpoint: Registering block manager 172.16.0.37:42821 with 193.5 MB RAM, BlockManagerId(0, 172.16.0.37, 42821, None)

18/08/16 08:23:36 INFO metastore.ObjectStore: Setting MetaStore object pin classes with hive.metastore.cache.pinobjtypes="Table,StorageDescriptor,SerDeInfo,Partition,Database,Type,FieldSchema,Order"

18/08/16 08:23:37 INFO DataNucleus.Datastore: The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.

18/08/16 08:23:37 INFO DataNucleus.Datastore: The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.

18/08/16 08:23:38 INFO DataNucleus.Datastore: The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.

18/08/16 08:23:38 INFO DataNucleus.Datastore: The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.

18/08/16 08:23:38 INFO metastore.MetaStoreDirectSql: Using direct SQL, underlying DB is DERBY

18/08/16 08:23:38 INFO metastore.ObjectStore: Initialized ObjectStore

18/08/16 08:23:39 WARN metastore.ObjectStore: Version information not found in metastore. hive.metastore.schema.verification is not enabled so recording the schema version 1.2.0

18/08/16 08:23:39 WARN metastore.ObjectStore: Failed to get database default, returning NoSuchObjectException

18/08/16 08:23:39 INFO metastore.HiveMetaStore: Added admin role in metastore

18/08/16 08:23:39 INFO metastore.HiveMetaStore: Added public role in metastore

18/08/16 08:23:39 INFO metastore.HiveMetaStore: No user is added in admin role, since config is empty

18/08/16 08:23:39 INFO metastore.HiveMetaStore: 0: get_all_databases

18/08/16 08:23:39 INFO HiveMetaStore.audit: ugi=hadoop ip=unknown-ip-addr cmd=get_all_databases

18/08/16 08:23:39 INFO metastore.HiveMetaStore: 0: get_functions: db=default pat=*

18/08/16 08:23:39 INFO HiveMetaStore.audit: ugi=hadoop ip=unknown-ip-addr cmd=get_functions: db=default pat=*

18/08/16 08:23:39 INFO DataNucleus.Datastore: The class "org.apache.hadoop.hive.metastore.model.MResourceUri" is tagged as "embedded-only" so does not have its own datastore table.

18/08/16 08:23:39 INFO session.SessionState: Created local directory: /tmp/2cc14b21-0441-4d07-963e-2350d3148e9f_resources

18/08/16 08:23:39 INFO session.SessionState: Created HDFS directory: /tmp/hive/hadoop/2cc14b21-0441-4d07-963e-2350d3148e9f

18/08/16 08:23:39 INFO session.SessionState: Created local directory: /tmp/hadoop/2cc14b21-0441-4d07-963e-2350d3148e9f

18/08/16 08:23:39 INFO session.SessionState: Created HDFS directory: /tmp/hive/hadoop/2cc14b21-0441-4d07-963e-2350d3148e9f/_tmp_space.db

18/08/16 08:23:39 INFO client.HiveClientImpl: Warehouse location for Hive client (version 1.2.1) is file:/home/hadoop/apps/spark-2.2.1-bin-hadoop2.7/bin/spark-warehouse

18/08/16 08:23:39 INFO metastore.HiveMetaStore: 0: get_database: default

18/08/16 08:23:39 INFO HiveMetaStore.audit: ugi=hadoop ip=unknown-ip-addr cmd=get_database: default

18/08/16 08:23:40 INFO session.SessionState: Created local directory: /tmp/c96dfcc8-92ea-4fac-837b-a2de2344a4f3_resources

18/08/16 08:23:40 INFO session.SessionState: Created HDFS directory: /tmp/hive/hadoop/c96dfcc8-92ea-4fac-837b-a2de2344a4f3

18/08/16 08:23:40 INFO session.SessionState: Created local directory: /tmp/hadoop/c96dfcc8-92ea-4fac-837b-a2de2344a4f3

18/08/16 08:23:40 INFO session.SessionState: Created HDFS directory: /tmp/hive/hadoop/c96dfcc8-92ea-4fac-837b-a2de2344a4f3/_tmp_space.db

18/08/16 08:23:40 INFO client.HiveClientImpl: Warehouse location for Hive client (version 1.2.1) is file:/home/hadoop/apps/spark-2.2.1-bin-hadoop2.7/bin/spark-warehouse

18/08/16 08:23:40 INFO metastore.HiveMetaStore: 0: get_database: global_temp

18/08/16 08:23:40 INFO HiveMetaStore.audit: ugi=hadoop ip=unknown-ip-addr cmd=get_database: global_temp

18/08/16 08:23:40 WARN metastore.ObjectStore: Failed to get database global_temp, returning NoSuchObjectException

18/08/16 08:23:40 INFO session.SessionState: Created local directory: /tmp/6646afbf-c828-4682-8e77-e40b8181272b_resources

18/08/16 08:23:40 INFO session.SessionState: Created HDFS directory: /tmp/hive/hadoop/6646afbf-c828-4682-8e77-e40b8181272b

18/08/16 08:23:40 INFO session.SessionState: Created local directory: /tmp/hadoop/6646afbf-c828-4682-8e77-e40b8181272b

18/08/16 08:23:40 INFO session.SessionState: Created HDFS directory: /tmp/hive/hadoop/6646afbf-c828-4682-8e77-e40b8181272b/_tmp_space.db

18/08/16 08:23:40 INFO client.HiveClientImpl: Warehouse location for Hive client (version 1.2.1) is file:/home/hadoop/apps/spark-2.2.1-bin-hadoop2.7/bin/spark-warehouse

18/08/16 08:23:40 INFO state.StateStoreCoordinatorRef: Registered StateStoreCoordinator endpoint

spark-sql>

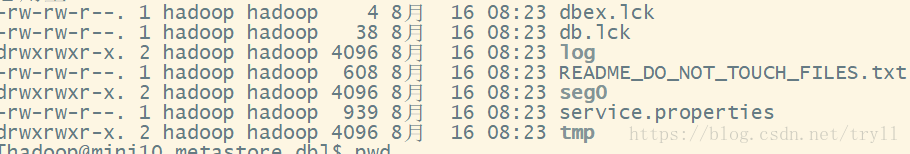
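The startup log is long, and the WARN lines are the ones worth a closer look. As a rough sketch, assuming the log uses the `date time LEVEL logger: message` layout shown above and has been saved to a file, the warnings can be filtered like this (`warn_lines` is a hypothetical helper name):

```shell
#!/bin/sh
# Sketch: print only the WARN entries from a log in the layout
# "yy/MM/dd HH:mm:ss LEVEL logger: message". Reads the named files,
# or standard input when no file is given.
warn_lines() {
    awk '$3 == "WARN"' "$@"
}
```

For instance, `warn_lines startup.log` (the file name is an assumption) would list entries such as the NativeCodeLoader and metastore warnings.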

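A note: I have not installed Hive in this environment, which is why the startup log above contains WARN lines about Hive metastore metadata not being found. Spark 2.2.x still runs without Hive. It falls back to a local embedded Derby metastore (the log reports "underlying DB is DERBY") and, because spark-sql was started from spark/bin, it uses spark/bin/spark-warehouse under the local Spark installation directory as the warehouse location. The Hive metadata itself is kept in a local metastore_db directory created alongside it.

The metastore_db directory holds the Derby database files.

In addition, temporary files are created on HDFS under /tmp/hive/hadoop/.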

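This post walks through installing Spark 2.2.1 in a pseudo-distributed environment, including configuring the environment variables and starting the Spark processes. It also shows how to start spark-sql, goes over the startup log, and demonstrates that Spark can run without a Hive installation.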
