/*

* Parallel SGD for Matrix Factorization - Template Code

* Implement Data Parallelism, Distributed Data Parallelism, Model Parallelism,

* and Lock-Free Hybrid Parallelism inspired by the Eight Queens problem

*/

#include <stdio.h>

#include <stdlib.h>

#include <math.h>

#include <sys/time.h>

// ======================= Data Structures =======================

typedef struct {

int user_id;

int item_id;

float rating;

} Rating;

Rating* ratings;

int num_ratings;

float global_mean;

// Model Parameters

float** P; // User latent factors [num_users][K]

float** Q; // Item latent factors [num_items][K]

float* b1; // User biases

float* b2; // Item biases

int K = 20; // Latent dimension

// ======================= Data Loading =======================

void load_data(const char* path) {

// TODO: Implement MovieLens data loading

/* Expected format:

userId,movieId,rating,timestamp

Convert to 0-based indexes */

}

// ======================= Core Algorithm =======================

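// Dot product of two length-n vectors
float vector_dot(float* a, float* b, int n) {
    float sum = 0;
    for (int i = 0; i < n; i++) sum += a[i] * b[i];
    return sum;
}

// Predicted rating: global mean + user bias + item bias + P[user] . Q[item]
// (defined after vector_dot so the call below sees its declaration)
float predict_rating(int user, int item) {
    return global_mean + b1[user] + b2[item] +
           vector_dot(P[user], Q[item], K);
}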

// ======================= SGD Update =======================

void sgd_update(int user, int item, float rating, float lr, float lambda) {

float err = rating - predict_rating(user, item);

// Update biases

b1[user] += lr * (err - lambda * b1[user]);

b2[item] += lr * (err - lambda * b2[item]);

// Update latent factors

for(int k=0; k<K; k++) {

float p = P[user][k];

float q = Q[item][k];

P[user][k] += lr * (err * q - lambda * p);

Q[item][k] += lr * (err * p - lambda * q);

}

}
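Written out, the update in `sgd_update` is one stochastic gradient step on the regularized squared error of a single rating. The symbols below are notation introduced here for illustration: $\mu$ for `global_mean`, $b_u$ and $b_i$ for `b1[user]` and `b2[item]`, $p_u$ and $q_i$ for `P[user]` and `Q[item]`, and $\eta$ for `lr`.

$$
\begin{aligned}
\hat r_{ui} &= \mu + b_u + b_i + p_u^\top q_i, \qquad e_{ui} = r_{ui} - \hat r_{ui} \\
L_{ui} &= \tfrac{1}{2} e_{ui}^2 + \tfrac{\lambda}{2}\left(b_u^2 + b_i^2 + \lVert p_u \rVert^2 + \lVert q_i \rVert^2\right) \\
b_u &\leftarrow b_u + \eta\,(e_{ui} - \lambda b_u), \qquad b_i \leftarrow b_i + \eta\,(e_{ui} - \lambda b_i) \\
p_u &\leftarrow p_u + \eta\,(e_{ui}\, q_i - \lambda p_u), \qquad q_i \leftarrow q_i + \eta\,(e_{ui}\, p_u - \lambda q_i)
\end{aligned}
$$

The error $e_{ui}$ is computed once, before any parameter changes, and the update of $q_i$ uses the old value of $p_u$. This is why the loop copies `P[user][k]` and `Q[item][k]` into `p` and `q` before writing to either.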

// ======================= Parallelization Tasks =======================

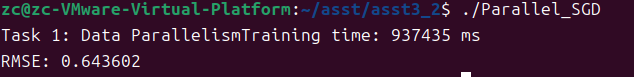

// ---------------------- Task 1: Data Parallelism ----------------------

void train_data_parallel(int epochs, float lr, float lambda) {

// TODO: Implement data parallelism

/* 1. Split data into mini-batches

2. Distribute to CPU threads

3. Synchronize gradients

4. Aggregate updates */

}
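Steps 1 and 2 of Task 1 come down to cutting the rating array into one contiguous range per thread. The sketch below shows one way to do that. `split_ranges` is a hypothetical helper, not part of the template; it spreads the remainder over the first ranges so that sizes differ by at most one, and it treats a thread count of 0 as 1.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical helper (not in the original template): split n items into
// `parts` contiguous half-open ranges [begin, end) whose sizes differ by
// at most one.
std::vector<std::pair<std::size_t, std::size_t>> split_ranges(std::size_t n,
                                                              std::size_t parts) {
    if (parts == 0) parts = 1;  // e.g. when the hardware thread count is unknown
    std::vector<std::pair<std::size_t, std::size_t>> ranges;
    ranges.reserve(parts);
    const std::size_t base = n / parts;   // minimum size of every range
    const std::size_t extra = n % parts;  // the first `extra` ranges get one more
    std::size_t begin = 0;
    for (std::size_t i = 0; i < parts; ++i) {
        const std::size_t len = base + (i < extra ? 1 : 0);
        ranges.emplace_back(begin, begin + len);
        begin += len;
    }
    return ranges;
}
```

Each worker thread would then loop over `ratings[begin]` to `ratings[end - 1]` for its own range. How the per-thread updates are synchronized or aggregated (steps 3 and 4) is a separate design decision that this helper does not address.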

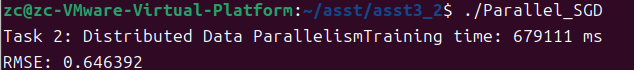

// ------------------- Task 2: Distributed Data Parallelism -------------------

void train_distributed_parallel(int epochs, float lr, float lambda) {

// TODO: Implement distributed training

/* 1. Initialize multi-process communication (MPI)

2. Implement All-Reduce for gradients

3. Handle cross-machine coordination */

}

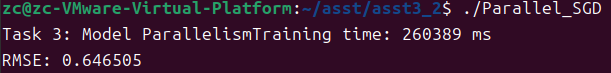

// ---------------------- Task 3: Model Parallelism ----------------------

void train_model_parallel(int epochs, float lr, float lambda) {

// TODO: Implement model parallelism

/* 1. Split P and Q matrices across threads

2. Implement pipeline for forward/backward passes

3. Manage inter-thread communication */

}

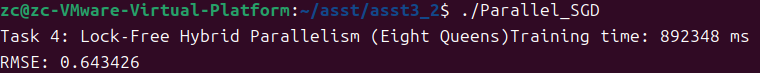

// ---------- Task 4: Lock-Free Hybrid Parallelism (Eight Queens) ----------

void train_hybrid_parallel(int epochs, float lr, float lambda) {

// TODO: Implement lock-free strategy

/* 1. Design block-diagonal partitioning

2. Implement conflict-free updates

3. Optimize communication patterns */

}
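The template does not spell out what the "Eight Queens" partitioning looks like, so the following is only one possible reading. Divide the users and the items into T blocks each, which cuts the rating matrix into a T x T grid. In each sub-round, give every worker a cell such that no two workers share a user block or an item block, much as non-attacking pieces on a chessboard never share a row or column. Workers then never write to the same row of `P` or `Q`, so no locks are needed. All names in the sketch (`Block`, `block_of`, `schedule_for_round`, `is_conflict_free`) are hypothetical.

```cpp
#include <cassert>
#include <set>
#include <vector>

// One (user-block, item-block) cell of the T x T grid over the rating matrix.
struct Block {
    int user_block;
    int item_block;
};

// Map an index in [0, count) to one of num_blocks contiguous blocks.
int block_of(int index, int count, int num_blocks) {
    return static_cast<int>(static_cast<long long>(index) * num_blocks / count);
}

// In sub-round `round`, worker t is assigned cell (t, (t + round) % num_blocks).
// After num_blocks sub-rounds every cell of the grid has been visited once.
std::vector<Block> schedule_for_round(int num_blocks, int round) {
    std::vector<Block> cells;
    cells.reserve(num_blocks);
    for (int t = 0; t < num_blocks; ++t) {
        cells.push_back({t, (t + round) % num_blocks});
    }
    return cells;
}

// True when no two cells share a user block or an item block.
bool is_conflict_free(const std::vector<Block>& cells) {
    std::set<int> users, items;
    for (const Block& c : cells) {
        if (!users.insert(c.user_block).second) return false;
        if (!items.insert(c.item_block).second) return false;
    }
    return true;
}
```

To use this, the ratings would first be bucketed by `(block_of(user, num_users, T), block_of(item, num_items, T))`. The scheme avoids locks but not synchronization: all workers must finish a sub-round (for example by joining the threads) before the next one starts.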

// ======================= Main Program =======================

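// Forward declarations: these utilities are defined after main()
void initialize_parameters();

float calculate_rmse();

int main() {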

// Load the ratings, then initialize the model parameters

load_data("ratings.csv");

initialize_parameters();

// Time the selected training strategy

struct timeval start, end;

gettimeofday(&start, NULL);

// Choose parallel strategy

// train_data_parallel(100, 0.005, 0.02);

// train_distributed_parallel(100, 0.005, 0.02);

// train_model_parallel(100, 0.005, 0.02);

// train_hybrid_parallel(100, 0.005, 0.02);

gettimeofday(&end, NULL);

printf("Training time: %ld ms\n",

((end.tv_sec - start.tv_sec)*1000000 +

end.tv_usec - start.tv_usec)/1000);

return 0;

}

// ======================= Utility Functions =======================

void initialize_parameters() {

// TODO: Initialize P, Q matrices with small random values

// TODO: Calculate global mean μ from ratings

// TODO: Initialize b1, b2 with zeros

}

float calculate_rmse() {

// TODO: Implement RMSE calculation

return 0.0;

}

sudo apt-get update

sudo apt-get install libopenmpi-dev openmpi-bin

mpic++ -fopenmp Parallel_SGD.cpp -o Parallel_SGD

/*

* Parallel SGD for Matrix Factorization - Template Code

* Implement Data Parallelism, Distributed Data Parallelism, Model Parallelism,

* and Lock-Free Hybrid Parallelism inspired by the Eight Queens problem

*/

#include <stdio.h>

#include <stdlib.h>

#include <math.h>

#include <sys/time.h>

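#include <cstring> // For memset

#include <iostream> // For std::cerr

#include <string> // For std::string, std::stoi, std::stof

#include <fstream>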

#include <sstream>

#include <vector>

#include <unordered_map>

#include <random>

#include <thread>

#include <mutex>

#include <mpi.h>

#include <algorithm> // For std::shuffle

// ======================= Data Structures =======================

typedef struct {

int user_id;

int item_id;

float rating;

} Rating;

Rating* ratings;

int num_ratings;

float global_mean;

// Model Parameters

float** P; // User latent factors [num_users][K]

float** Q; // Item latent factors [num_items][K]

float* b1; // User biases

float* b2; // Item biases

int K = 20; // Latent dimension

std::unordered_map<int, int> user_map, item_map;

int num_users, num_items;

// ======================= Core Algorithm =======================

float vector_dot(float* a, float* b, int n) {

float sum = 0;

for(int i=0; i<n; i++) sum += a[i] * b[i];

return sum;

}

float predict_rating(int user, int item) {

return global_mean + b1[user] + b2[item] +

vector_dot(P[user], Q[item], K);

}

// ======================= SGD Update =======================

void sgd_update(int user, int item, float rating, float lr, float lambda) {

float err = rating - predict_rating(user, item);

// Update biases

b1[user] += lr * (err - lambda * b1[user]);

b2[item] += lr * (err - lambda * b2[item]);

// Update latent factors

for(int k=0; k<K; k++) {

float p = P[user][k];

float q = Q[item][k];

P[user][k] += lr * (err * q - lambda * p);

Q[item][k] += lr * (err * p - lambda * q);

}

}

// ======================= Data Loading =======================

void load_data(const char* path) {

std::ifstream file(path);

if (!file.is_open()) {

std::cerr << "Failed to open file: " << path << std::endl;

exit(1);

}

user_map.clear();

item_map.clear();

num_users = 0;

num_items = 0;

std::string line;

std::vector<Rating> temp_ratings;

// Skip header

std::getline(file, line);

while (std::getline(file, line)) {

std::stringstream ss(line);

std::string token;

std::vector<std::string> tokens;

while (std::getline(ss, token, ',')) {

tokens.push_back(token);

}

if (tokens.size() < 3) continue;

int user_id = std::stoi(tokens[0]);

int item_id = std::stoi(tokens[1]);

float rating = std::stof(tokens[2]);

// Map user and item IDs to 0-based indices

if (user_map.find(user_id) == user_map.end()) {

user_map[user_id] = num_users++;

}

if (item_map.find(item_id) == item_map.end()) {

item_map[item_id] = num_items++;

}

temp_ratings.push_back({

user_map[user_id],

item_map[item_id],

rating

});

}

file.close();

num_ratings = temp_ratings.size();

ratings = new Rating[num_ratings];

for (int i = 0; i < num_ratings; i++) {

ratings[i] = temp_ratings[i];

}

}

// ======================= Parallelization Tasks =======================

// ---------------------- Task 1: Data Parallelism ----------------------

void train_data_parallel(int epochs, float lr, float lambda) {

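// No lock is taken: workers update the shared P, Q, b1 and b2 directly.

// Two threads that process ratings for the same user or item race on those

// entries; this version accepts that in exchange for speed.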

auto sgd_worker = [&](int start, int end) {

for (int i = start; i < end; i++) {

Rating& r = ratings[i];

sgd_update(r.user_id, r.item_id, r.rating, lr, lambda);

}

};

// hardware_concurrency() may return 0 when the count is unknown; use at least 1

int num_threads = std::max(1u, std::thread::hardware_concurrency());

std::vector<std::thread> threads;

struct timeval start, end;

gettimeofday(&start, NULL);

for (int epoch = 0; epoch < epochs; epoch++) {

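// Shuffle data. The engine is static and seeded once, so every epoch gets a

// fresh permutation instead of replaying a default-seeded engine.

static std::mt19937 rng(std::random_device{}());

std::shuffle(ratings, ratings + num_ratings, rng);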

// Split data into chunks

int chunk_size = num_ratings / num_threads;

for (int i = 0; i < num_threads; i++) {

int start = i * chunk_size;

int end = (i == num_threads - 1) ? num_ratings : (i + 1) * chunk_size;

threads.emplace_back(sgd_worker, start, end);

}

// Join threads

for (auto& t : threads) {

t.join();

}

threads.clear();

}

gettimeofday(&end, NULL);

printf("Training time: %ld ms\n",

((end.tv_sec - start.tv_sec)*1000000 + end.tv_usec - start.tv_usec)/1000);

}

// ------------------- Task 2: Distributed Data Parallelism -------------------

void train_distributed_parallel(int epochs, float lr, float lambda) {

MPI_Init(NULL, NULL);

int rank, size;

MPI_Comm_rank(MPI_COMM_WORLD, &rank);

MPI_Comm_size(MPI_COMM_WORLD, &size);

struct timeval start, end;

if (rank == 0) gettimeofday(&start, NULL);

int chunk_size = num_ratings / size;

int start_idx = rank * chunk_size;

int end_idx = (rank == size - 1) ? num_ratings : (rank + 1) * chunk_size;

for (int epoch = 0; epoch < epochs; epoch++) {

// Local SGD update

for (int i = start_idx; i < end_idx; i++) {

Rating& r = ratings[i];

sgd_update(r.user_id, r.item_id, r.rating, lr, lambda);

}

// Average the biases across ranks: sum with All-reduce, then divide by the number of ranks

MPI_Allreduce(MPI_IN_PLACE, b1, num_users, MPI_FLOAT, MPI_SUM, MPI_COMM_WORLD);

MPI_Allreduce(MPI_IN_PLACE, b2, num_items, MPI_FLOAT, MPI_SUM, MPI_COMM_WORLD);

for (int u = 0; u < num_users; u++) {

b1[u] /= size;

}

for (int i = 0; i < num_items; i++) {

b2[i] /= size;

}

// Average the latent factors the same way, one row per call because each

// row of P and Q is allocated separately

for (int u = 0; u < num_users; u++) {

MPI_Allreduce(MPI_IN_PLACE, P[u], K, MPI_FLOAT, MPI_SUM, MPI_COMM_WORLD);

for (int k = 0; k < K; k++) {

P[u][k] /= size;

}

}

for (int i = 0; i < num_items; i++) {

MPI_Allreduce(MPI_IN_PLACE, Q[i], K, MPI_FLOAT, MPI_SUM, MPI_COMM_WORLD);

for (int k = 0; k < K; k++) {

Q[i][k] /= size;

}

}

}

if (rank == 0) {

gettimeofday(&end, NULL);

printf("Training time: %ld ms\n",

((end.tv_sec - start.tv_sec)*1000000 + end.tv_usec - start.tv_usec)/1000);

}

MPI_Finalize();

}

// ---------------------- Task 3: Model Parallelism ----------------------

void train_model_parallel(int epochs, float lr, float lambda) {

// hardware_concurrency() may return 0 when the count is unknown; use at least 1

int num_threads = std::max(1u, std::thread::hardware_concurrency());

std::vector<std::thread> threads;

struct timeval start, end;

gettimeofday(&start, NULL);

for (int epoch = 0; epoch < epochs; epoch++) {

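// Copy the ratings into separate arrays and split them into per-thread chunks.

// Note: P and Q are still shared by all threads, so this is data-parallel in

// practice; the model itself is not partitioned.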

int chunk_size = num_ratings / num_threads;

int* users = new int[num_ratings];

int* items = new int[num_ratings];

float* ratings_val = new float[num_ratings];

for (int i = 0; i < num_ratings; i++) {

users[i] = ratings[i].user_id;

items[i] = ratings[i].item_id;

ratings_val[i] = ratings[i].rating;

}

auto model_worker = [&](int start, int end) {

for (int j = start; j < end; j++) {

int u = users[j];

int itm = items[j];

float r = ratings_val[j];

sgd_update(u, itm, r, lr, lambda);

}

};

for (int i = 0; i < num_threads; i++) {

int start = i * chunk_size;

int end = (i == num_threads - 1) ? num_ratings : (i + 1) * chunk_size;

threads.emplace_back(model_worker, start, end);

}

// Join threads

for (auto& t : threads) {

t.join();

}

threads.clear();

delete[] users;

delete[] items;

delete[] ratings_val;

}

gettimeofday(&end, NULL);

printf("Training time: %ld ms\n",

((end.tv_sec - start.tv_sec)*1000000 + end.tv_usec - start.tv_usec)/1000);

}

// ---------- Task 4: Lock-Free Hybrid Parallelism (Eight Queens) ----------

void train_hybrid_parallel(int epochs, float lr, float lambda) {

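// No lock is taken: threads update the shared parameters directly. Chunks are

// split by rating index, not by user/item block, so two threads can still

// touch the same row of P or Q.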

auto hybrid_worker = [&](int start, int end) {

for (int i = start; i < end; i++) {

Rating& r = ratings[i];

sgd_update(r.user_id, r.item_id, r.rating, lr, lambda);

}

};

// hardware_concurrency() may return 0 when the count is unknown; use at least 1

int num_threads = std::max(1u, std::thread::hardware_concurrency());

std::vector<std::thread> threads;

struct timeval start, end;

gettimeofday(&start, NULL);

for (int epoch = 0; epoch < epochs; epoch++) {

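// Shuffle data. The engine is static and seeded once, so every epoch gets a

// fresh permutation instead of replaying a default-seeded engine.

static std::mt19937 rng(std::random_device{}());

std::shuffle(ratings, ratings + num_ratings, rng);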

// Split data into chunks

int chunk_size = num_ratings / num_threads;

for (int i = 0; i < num_threads; i++) {

int start = i * chunk_size;

int end = (i == num_threads - 1) ? num_ratings : (i + 1) * chunk_size;

threads.emplace_back(hybrid_worker, start, end);

}

// Join threads

for (auto& t : threads) {

t.join();

}

threads.clear();

}

gettimeofday(&end, NULL);

printf("Training time: %ld ms\n",

((end.tv_sec - start.tv_sec)*1000000 + end.tv_usec - start.tv_usec)/1000);

}

// ================ Utility Functions and Main Program ================

void initialize_parameters() {

// Calculate global mean

global_mean = 0.0;

for (int i = 0; i < num_ratings; i++) {

global_mean += ratings[i].rating;

}

global_mean /= num_ratings;

// Initialize user and item biases

b1 = new float[num_users];

b2 = new float[num_items];

memset(b1, 0, sizeof(float) * num_users);

memset(b2, 0, sizeof(float) * num_items);

// Initialize latent factors with small random values

std::random_device rd;

std::mt19937 gen(rd());

std::uniform_real_distribution<float> dist(-0.1, 0.1);

P = new float*[num_users];

Q = new float*[num_items];

for (int u = 0; u < num_users; u++) {

P[u] = new float[K];

for (int k = 0; k < K; k++) {

P[u][k] = dist(gen);

}

}

for (int i = 0; i < num_items; i++) {

Q[i] = new float[K];

for (int k = 0; k < K; k++) {

Q[i][k] = dist(gen);

}

}

}

float calculate_rmse() {

float sum_sq_error = 0.0;

for (int i = 0; i < num_ratings; i++) {

float pred = predict_rating(ratings[i].user_id, ratings[i].item_id);

float err = ratings[i].rating - pred;

sum_sq_error += err * err;

}

return sqrt(sum_sq_error / num_ratings);

}

int main() {

load_data("ratings.csv");

initialize_parameters();

struct timeval start, end;

gettimeofday(&start, NULL);

// Choose parallel strategy

train_data_parallel(100, 0.005, 0.02);

// train_distributed_parallel(100, 0.005, 0.02);

// train_model_parallel(100, 0.005, 0.02);

// train_hybrid_parallel(100, 0.005, 0.02);

gettimeofday(&end, NULL);

printf("Training time: %ld ms\n",

((end.tv_sec - start.tv_sec)*1000000 + end.tv_usec - start.tv_usec)/1000);

printf("RMSE: %f\n", calculate_rmse());

// Clean up

delete[] ratings;

delete[] b1;

delete[] b2;

for (int u = 0; u < num_users; u++) delete[] P[u];

delete[] P;

for (int i = 0; i < num_items; i++) delete[] Q[i];

delete[] Q;

return 0;

}
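In `train_distributed_parallel`, `P` and `Q` are reduced one row at a time because each row comes from its own `new float[K]`. A possible alternative, sketched below under that assumption, is to keep all rows in one contiguous buffer and hand out row pointers into it, so the existing `P[u][k]` indexing still works. `FactorMatrix` is a hypothetical type and is not part of the code above.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical container: a rows x cols float matrix stored in one contiguous
// buffer, with a table of row pointers for float**-style access.
struct FactorMatrix {
    int rows;
    int cols;
    std::vector<float> data;      // rows * cols values, row-major, zero-initialized
    std::vector<float*> row_ptr;  // row_ptr[r] points at data[r * cols]

    FactorMatrix(int r, int c)
        : rows(r), cols(c),
          data(static_cast<std::size_t>(r) * c, 0.0f),
          row_ptr(r) {
        for (int i = 0; i < r; ++i) {
            row_ptr[i] = data.data() + static_cast<std::size_t>(i) * c;
        }
    }

    // Copying would leave row_ptr aimed at the source object's buffer.
    FactorMatrix(const FactorMatrix&) = delete;
    FactorMatrix& operator=(const FactorMatrix&) = delete;

    // Drop-in view for code that expects float** (such as P and Q above).
    float** as_rows() { return row_ptr.data(); }
};
```

With this layout, the per-row loop could be replaced by a single call over the whole buffer, for example `MPI_Allreduce(MPI_IN_PLACE, m.data.data(), m.rows * m.cols, MPI_FLOAT, MPI_SUM, MPI_COMM_WORLD)`, followed by one pass that divides by the number of ranks. Whether this is faster in practice depends on the MPI implementation and the matrix sizes, so it is worth measuring.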
