Environment:

Hadoop version: Apache Hadoop 2.7.1

Spark version: Apache Spark 1.4.1

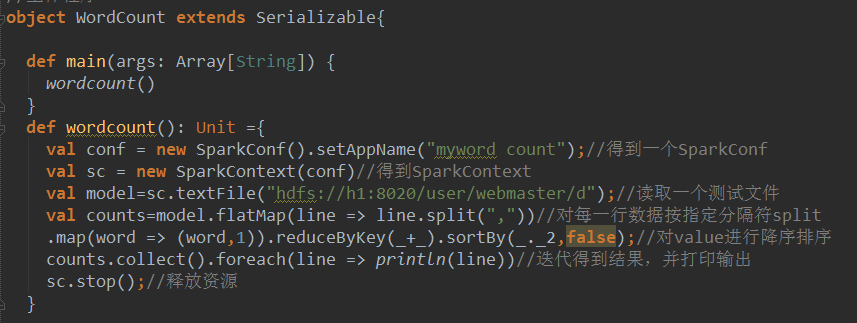

Core code:
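As an illustration of what a job of this kind might look like, here is a minimal sketch and not the author's exact listing. The package and object name follow the `--class com.spark.helloword.WordCount` argument used in the submit commands. The input path `hdfs:///tmp/wordcount/input.txt` is a hypothetical placeholder, and splitting on commas is an assumption based on the test data.

```scala
package com.spark.helloword

import org.apache.spark.{SparkConf, SparkContext}

object WordCount {
  def main(args: Array[String]): Unit = {
    // Hypothetical default path; pass the real input location as the first argument.
    val inputPath = if (args.nonEmpty) args(0) else "hdfs:///tmp/wordcount/input.txt"

    // The master URL is deliberately not set here, so the --master option
    // given to spark-submit decides where the job runs.
    val conf = new SparkConf().setAppName("WordCount")
    val sc = new SparkContext(conf)

    try {
      val counts = sc.textFile(inputPath)
        .flatMap(_.split(","))            // assumed: words are comma-separated
        .map(_.trim)
        .filter(_.nonEmpty)
        .map(word => (word, 1))
        .reduceByKey(_ + _)               // sum the 1s per word
        .sortBy(_._2, ascending = false)  // most frequent words first

      // Bring the (small) result to the driver and print one (word,count) tuple per line.
      counts.collect().foreach(println)
    } finally {
      sc.stop()
    }
  }
}
```

Leaving the master out of the code keeps the same jar usable for all three submission modes. In yarn-cluster mode the driver runs inside YARN, so the printed tuples end up in the driver's container log rather than in the submitting terminal.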

Test data:

a,b,a

c,d,f

a,b,h,p,z

a,f,o

Package the project with sbt from the command line: sbt clean package
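For the packaging step to produce a jar named `spark-hello_2.11-1.0.jar`, the build definition would need roughly the settings below. This is a sketch inferred from the jar name: the project name and version follow from it, while the exact Scala patch version (`2.11.7`) and the `provided` scope are assumptions.

```scala
// build.sbt (sketch; values inferred from the jar name spark-hello_2.11-1.0.jar)
name := "spark-hello"

version := "1.0"

// Assumed patch version; only the 2.11 binary version is implied by the jar name.
scalaVersion := "2.11.7"

// "provided" keeps Spark out of the packaged jar, since the cluster supplies it at runtime.
libraryDependencies += "org.apache.spark" %% "spark-core" % "1.4.1" % "provided"
```

With these settings, `sbt clean package` writes the jar to `target/scala-2.11/`. The Spark installation on the cluster should be built for the same Scala binary version as the jar.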

Upload the jar to the Hadoop or Spark cluster. How is it submitted?

There are three submission modes:

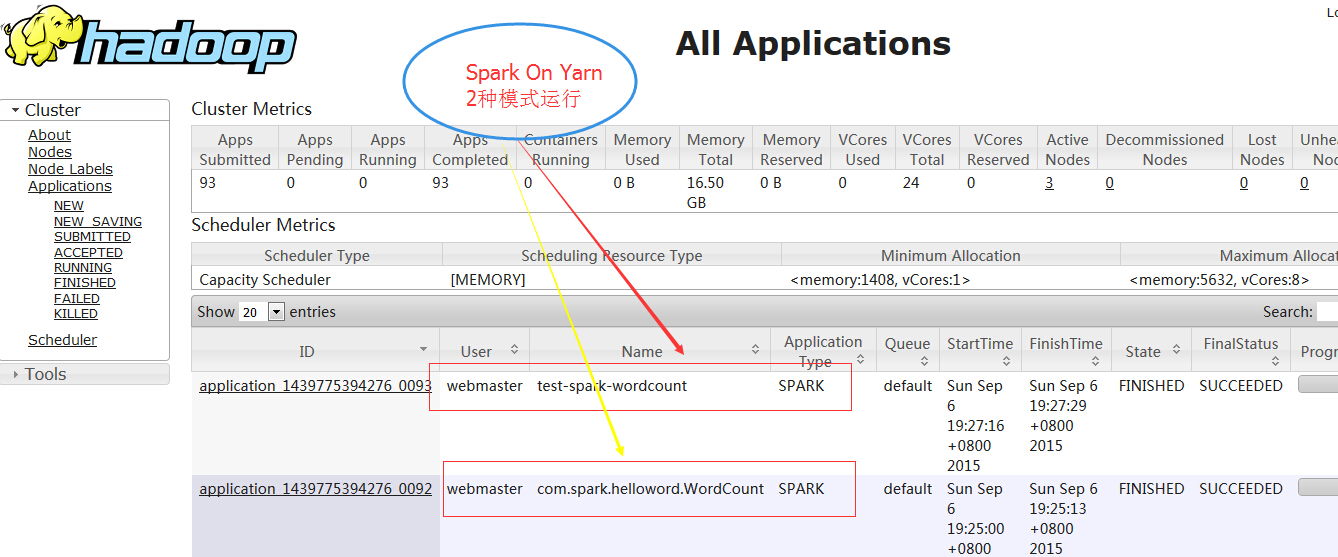

(1) yarn-client mode: HDFS and YARN must be running; the Spark standalone cluster does not need to be started.

bin/spark-submit --class com.spark.helloword.WordCount --master yarn-client ./spark-hello_2.11-1.0.jar

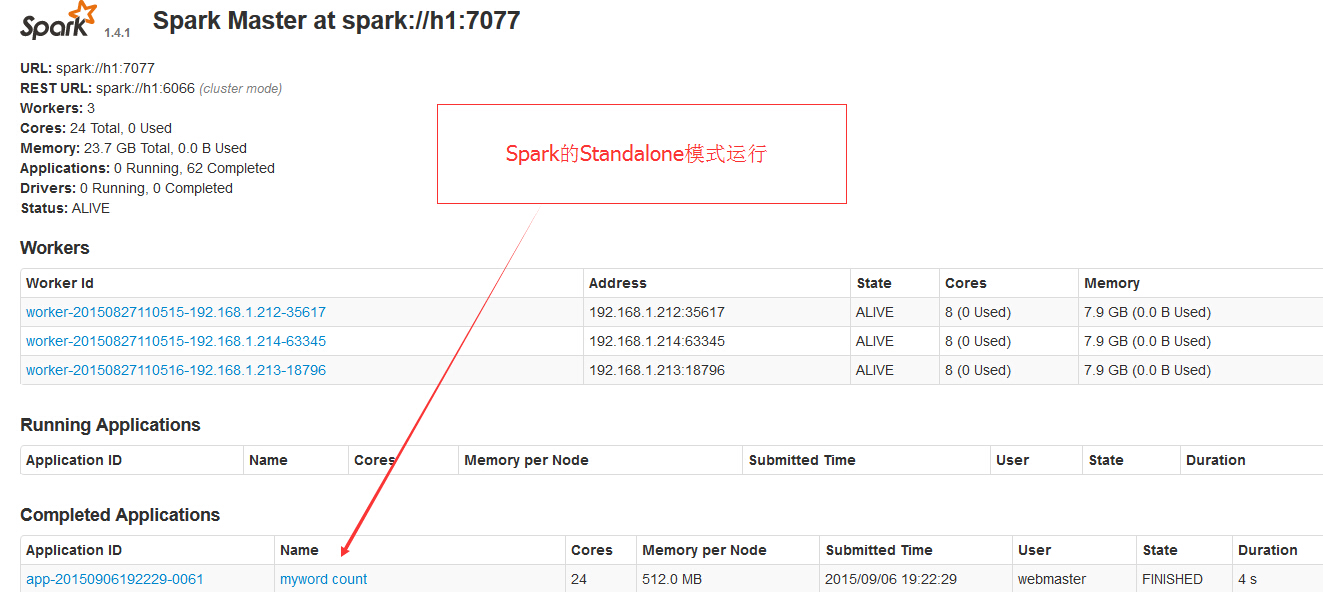

(2) Standalone mode: start the Spark standalone cluster; on the Hadoop side, only the HDFS distributed storage system needs to be running.

bin/spark-submit --class com.spark.helloword.WordCount --master spark://h1:7077 ./spark-hello_2.11-1.0.jar

(3) yarn-cluster mode: HDFS and YARN must be running; the Spark standalone cluster does not need to be started.

The --name option sets the job name.

bin/spark-submit --class com.spark.helloword.WordCount --master yarn-cluster --name test-spark-wordcount ./spark-hello_2.11-1.0.jar

Execution result:

(a,4)

(b,2)

(f,2)

(d,1)

(z,1)

(p,1)

(h,1)

(o,1)

(c,1)
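The counts listed above can be cross-checked without a cluster by running the same split-and-count logic on plain Scala collections. This is a minimal sketch; the object name `WordCountCheck` and the helper `countWords` are hypothetical, and only the four test lines come from the article.

```scala
object WordCountCheck {
  // The four test lines from the article, one string per input line.
  val lines: Seq[String] = Seq("a,b,a", "c,d,f", "a,b,h,p,z", "a,f,o")

  // Split each line on commas, then count how often each word occurs.
  def countWords(input: Seq[String]): Map[String, Int] =
    input
      .flatMap(_.split(","))
      .groupBy(identity)
      .map { case (word, occurrences) => (word, occurrences.size) }

  def main(args: Array[String]): Unit =
    countWords(lines).toSeq
      .sortBy { case (word, count) => (-count, word) } // by count descending, then alphabetically
      .foreach(println)
}
```

The words with equal counts may appear in a different order than in the cluster output, because this sketch breaks ties alphabetically while a distributed sort on the count alone makes no promise about the order of ties.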

Screenshots of the run modes:
